- PX4 Firmware (https://github.com/PX4/Firmware/)

- MAVROS (https://github.com/mavlink/mavros)

- sitl_gazebo (https://github.com/PX4/sitl_gazebo/)

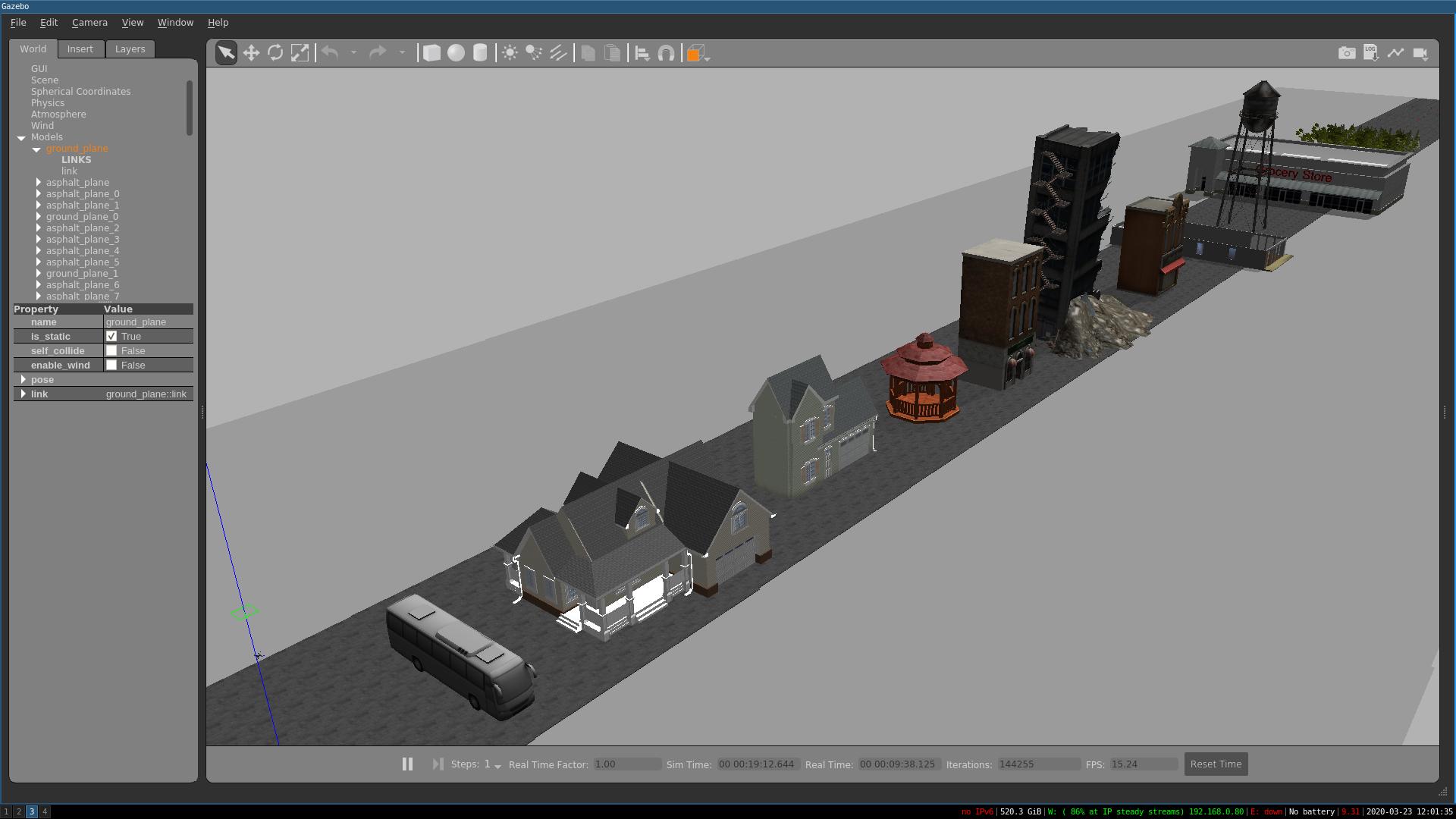

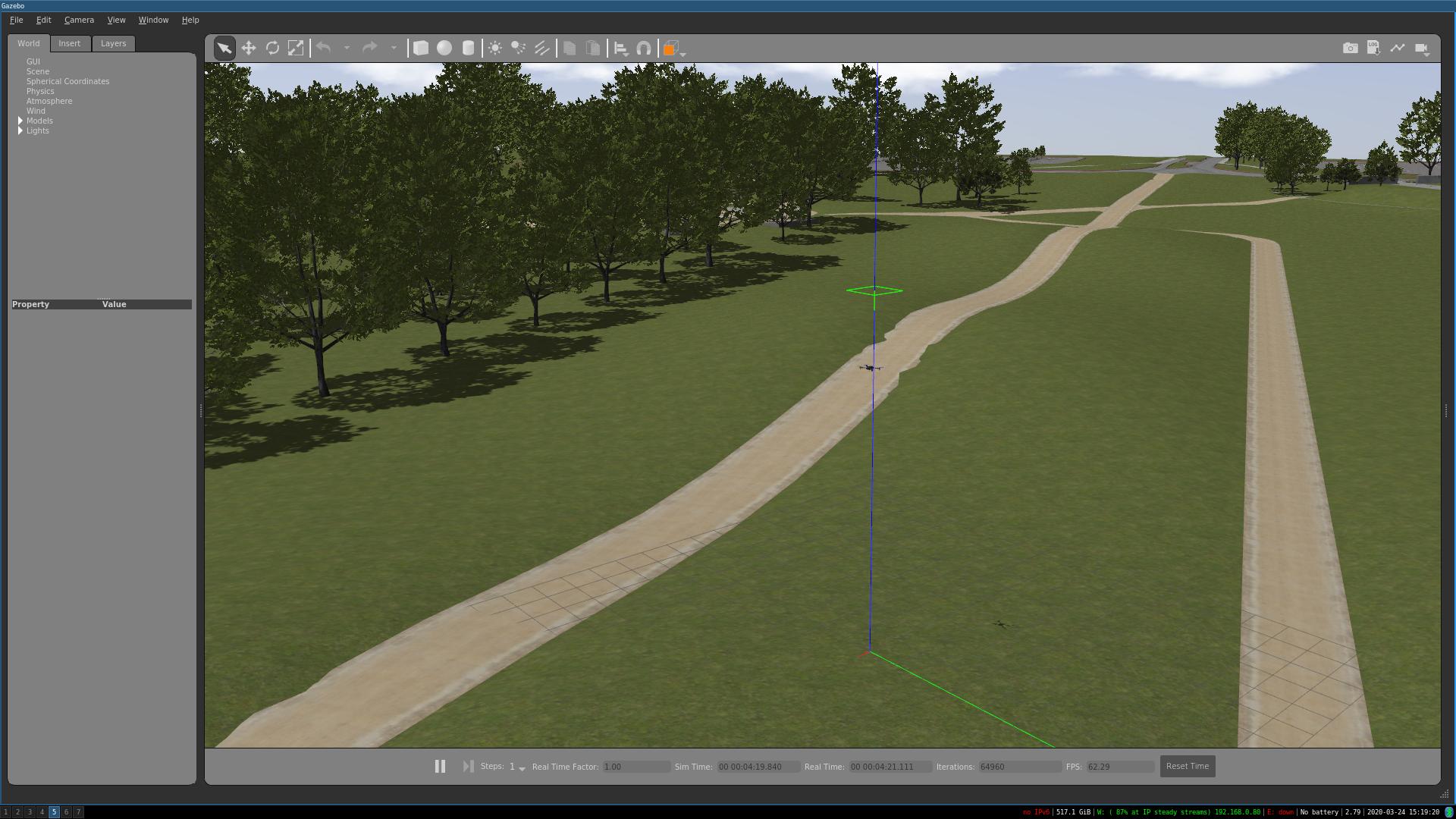

Add the baylands model to the Gazebo model path. (The baylands model comes from the latest OSRF Gazebo commit.)
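Gazebo (classic) searches the directories listed in the `GAZEBO_MODEL_PATH` environment variable, separated by `:` on Linux. The following is a minimal sketch of building that value; the helper name `prepend_model_dir` and the directory `~/gazebo_models` are hypothetical and not taken from this repository, so point it at wherever the baylands folder was actually copied.

```python
import os


def prepend_model_dir(model_dir, current_path=""):
    """Return a GAZEBO_MODEL_PATH value with model_dir placed first.

    model_dir is the directory that CONTAINS the baylands model folder.
    """
    model_dir = os.path.abspath(os.path.expanduser(model_dir))
    # Drop empty entries and any earlier occurrence of the same directory.
    parts = [p for p in current_path.split(os.pathsep) if p and p != model_dir]
    return os.pathsep.join([model_dir] + parts)


if __name__ == "__main__":
    # "~/gazebo_models" is an assumed location used only for illustration.
    new_path = prepend_model_dir(
        "~/gazebo_models", os.environ.get("GAZEBO_MODEL_PATH", "")
    )
    # Print a line that can be pasted into the shell before launching Gazebo.
    print("export GAZEBO_MODEL_PATH=" + new_path)
```

The variable has to be set in the same shell that later launches the simulation, otherwise Gazebo will not see the model.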

`python data_gather.py` hovers the UAV at the start location at a height of 5 m.

`python tele_operation.py` lets us move the vehicle along the terrain.

`rosrun trn_imitation_learning read_bag` writes images to the folder specified in the code. Update the folder location and rebuild with `catkin build` before running it.

The following keys are mapped to these effects:

1. Left - start moving the drone forward at 1 m/s

2. Up - increase z velocity by 0.5 m/s

3. Down - decrease z velocity by 0.5 m/s

4. Shift - go back to the initial start location

5. Esc - quit recording keys (EXIT)
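The key mapping above can be sketched as a small state update. This is an illustration only and not the code in `tele_operation.py`: the key names (`"left"`, `"up"`, ...), the `TeleopState` class, and the choice to zero both velocities on Shift are assumptions.

```python
from dataclasses import dataclass

FORWARD_SPEED = 1.0  # m/s, forward speed set by the Left key
Z_STEP = 0.5         # m/s, z-velocity increment for Up and Down


@dataclass
class TeleopState:
    forward: float = 0.0       # commanded forward velocity in m/s
    vz: float = 0.0            # commanded vertical velocity in m/s
    return_home: bool = False  # True once Shift requests a return to start
    running: bool = True       # False once Esc is pressed


def apply_key(state, key):
    """Update the commanded state for one key press; unknown keys are ignored."""
    if key == "left":
        state.forward = FORWARD_SPEED
        state.return_home = False
    elif key == "up":
        state.vz += Z_STEP
    elif key == "down":
        state.vz -= Z_STEP
    elif key == "shift":
        # Assumption: returning to the start also clears the velocity commands.
        state.forward = 0.0
        state.vz = 0.0
        state.return_home = True
    elif key == "esc":
        state.running = False
    return state
```

Keeping the mapping in a pure function like this makes it possible to check the key logic without a running simulator; the resulting velocities would still have to be sent to the vehicle by the actual script.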

Use `src/write_tf_record.ipynb` and `src/imitation_learning.ipynb` to learn the UP, DOWN and STAY actions for the drone from the depth images saved by `rosrun trn_imitation_learning read_bag`.
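How the notebooks derive the three labels is not described here. As a hedged sketch, one plausible scheme labels each depth image by the change in commanded z velocity between consecutive samples and encodes the result as a one-hot vector for a classifier. The function names and the tolerance are hypothetical.

```python
ACTIONS = ("UP", "DOWN", "STAY")


def action_from_vz_change(prev_vz, vz, tol=1e-6):
    """Label a sample by how the commanded z velocity changed (assumed scheme)."""
    if vz - prev_vz > tol:
        return "UP"
    if prev_vz - vz > tol:
        return "DOWN"
    return "STAY"


def one_hot(action):
    """Encode an action name as a one-hot list in the order given by ACTIONS."""
    if action not in ACTIONS:
        raise ValueError("unknown action: %r" % (action,))
    return [1.0 if a == action else 0.0 for a in ACTIONS]
```

For example, `one_hot(action_from_vz_change(0.0, 0.5))` marks the sample as UP.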

- Run `roslaunch trn_imitation_learning mavros_posix_sitl.launch` to launch the gazebo world.

- Run `rosrun trn_imitation_learning data_gather` to publish `mono8` depth images.

- Run `python nn_controller.py` and `python imitation_learning.py` to start the controller code and key mapping to enable nn control.

- Press to switch to learned controller.
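In ROS, `mono8` is the 8-bit single-channel image encoding, so metric depth has to be scaled into the range 0 to 255 before it is published in that format. The following NumPy sketch shows one way to do that. It is not the conversion used by `data_gather`: the 10 m clipping range and the choice to treat missing returns as far away are assumptions.

```python
import numpy as np


def depth_to_mono8(depth_m, max_range_m=10.0):
    """Scale a depth image in metres to uint8, clipping at max_range_m."""
    depth = np.asarray(depth_m, dtype=np.float32)
    # Assumption: NaN or +inf (no return) is treated as the far limit.
    depth = np.nan_to_num(
        depth, nan=max_range_m, posinf=max_range_m, neginf=0.0
    )
    scaled = np.clip(depth / max_range_m, 0.0, 1.0) * 255.0
    return np.round(scaled).astype(np.uint8)
```

Whatever range is chosen, the same scaling should be applied when recording training images and when feeding the learned controller, since the network only sees the 8-bit values.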