- This is a working implementation of a vectorized fully-connected neural network in NumPy

- The backpropagation algorithm is implemented in a fully vectorized fashion over each minibatch

- This enables us to take advantage of powerful built-in NumPy APIs (and avoid clumsy nested loops!), consequently improving training speed
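The sketch below illustrates why vectorization helps. It is not taken from this repository. It compares a triple-loop computation of a dense layer's weight gradient with the equivalent single matrix product. It assumes that minibatch rows are examples, so a layer computes `Z = X @ W + b`. The function names are hypothetical.

```python
import numpy as np

def weight_grad_loop(X, dZ):
    """Accumulate dL/dW one scalar at a time (the nested-loop version)."""
    n_in, n_out = X.shape[1], dZ.shape[1]
    dW = np.zeros((n_in, n_out))
    for i in range(X.shape[0]):      # examples in the minibatch
        for j in range(n_in):        # input units
            for k in range(n_out):   # output units
                dW[j, k] += X[i, j] * dZ[i, k]
    return dW

def weight_grad_vectorized(X, dZ):
    """Same gradient as one matrix product, summed over the whole minibatch."""
    return X.T @ dZ

rng = np.random.default_rng(0)
X = rng.standard_normal((32, 5))   # 32 examples, 5 input features
dZ = rng.standard_normal((32, 3))  # upstream gradient w.r.t. the layer's 3 pre-activations
print(np.allclose(weight_grad_loop(X, dZ), weight_grad_vectorized(X, dZ)))
```

The vectorized form delegates the summation over examples to a single BLAS-backed matrix multiply instead of running in the Python interpreter.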

- The backpropagation code lives in the method `take_gradient_step_on_minibatch` of class `NeuralNetwork` (see `src/neural_network.py`)

- Refer to the in-code documentation and comments for a description of how the code works
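For orientation, the following hypothetical sketch shows one way a vectorized `take_gradient_step_on_minibatch` could be written. It is not the code in `src/neural_network.py`. Several details are assumptions made for illustration:

- the class name `TinyNeuralNetwork` and its constructor
- sigmoid hidden layers with a softmax/cross-entropy output
- the method's `(X, Y, learning_rate)` signature

```python
import numpy as np

class TinyNeuralNetwork:
    """Hypothetical fully-connected net; NOT the repository's NeuralNetwork class."""

    def __init__(self, layer_sizes, seed=0):
        rng = np.random.default_rng(seed)
        # One weight matrix and one bias row-vector per layer; rows of X are examples.
        self.weights = [rng.standard_normal((m, n)) * np.sqrt(1.0 / m)
                        for m, n in zip(layer_sizes[:-1], layer_sizes[1:])]
        self.biases = [np.zeros((1, n)) for n in layer_sizes[1:]]

    @staticmethod
    def _sigmoid(z):
        return 1.0 / (1.0 + np.exp(-z))

    @staticmethod
    def _softmax(z):
        z = z - z.max(axis=1, keepdims=True)  # shift each row for numerical stability
        e = np.exp(z)
        return e / e.sum(axis=1, keepdims=True)

    def forward(self, X):
        """Return the activations of every layer, starting with the input itself."""
        activations = [X]
        for i, (W, b) in enumerate(zip(self.weights, self.biases)):
            z = activations[-1] @ W + b
            is_last = i == len(self.weights) - 1
            activations.append(self._softmax(z) if is_last else self._sigmoid(z))
        return activations

    def loss(self, X, Y):
        """Mean cross-entropy; Y is one-hot with shape (batch, classes)."""
        P = self.forward(X)[-1]
        return -np.mean(np.sum(Y * np.log(P + 1e-12), axis=1))

    def take_gradient_step_on_minibatch(self, X, Y, learning_rate):
        """One SGD step; backprop is vectorized over the whole minibatch."""
        m = X.shape[0]
        acts = self.forward(X)
        # For softmax + mean cross-entropy, dL/dz at the output layer is (P - Y) / m.
        delta = (acts[-1] - Y) / m
        for i in reversed(range(len(self.weights))):
            grad_W = acts[i].T @ delta                 # (n_in, n_out), summed over batch
            grad_b = delta.sum(axis=0, keepdims=True)  # (1, n_out)
            if i > 0:
                # Propagate through the sigmoid derivative a * (1 - a),
                # using this layer's weights *before* they are updated.
                a = acts[i]
                delta = (delta @ self.weights[i].T) * a * (1.0 - a)
            self.weights[i] -= learning_rate * grad_W
            self.biases[i] -= learning_rate * grad_b

# Usage on random stand-in data: repeated steps on one minibatch should lower its loss.
rng = np.random.default_rng(1)
X = rng.standard_normal((64, 4))
Y = np.eye(3)[rng.integers(0, 3, size=64)]
net = TinyNeuralNetwork([4, 8, 3])
before = net.loss(X, Y)
for _ in range(300):
    net.take_gradient_step_on_minibatch(X, Y, learning_rate=0.2)
after = net.loss(X, Y)
print(before, after)
```

For softmax followed by cross-entropy, the output delta simplifies to `(P - Y) / m`, so the sketch never forms the softmax Jacobian explicitly. Each layer's weight gradient is then a single matrix product over the minibatch.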

- Directory structure

  - `src/` contains the implementation of neural networks
    - `src/neural_network.py` contains the actual implementation of the `NeuralNetwork` class (including the vectorized backpropagation code)
    - `src/activations.py` and `src/losses.py` contain implementations of activation functions and losses, respectively
    - `src/utils.py` contains code to display a confusion matrix

  - `main.py` contains driver code that trains an example neural network configuration using the `NeuralNetwork` class
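The sketch below gives a rough idea of what activation and loss modules of this kind usually contain. Each function is paired with its derivative, and the derivatives are checked by central finite differences. The names (`ACTIVATIONS`, `mse_grad`, and so on) are hypothetical and need not match `src/activations.py` or `src/losses.py`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def relu(z):
    return np.maximum(z, 0.0)

def relu_prime(z):
    # Subgradient convention: derivative taken as 0 at z == 0.
    return (z > 0).astype(z.dtype)

def tanh_prime(z):
    return 1.0 - np.tanh(z) ** 2

# Hypothetical registry: name -> (activation, derivative w.r.t. its input z).
ACTIVATIONS = {
    "sigmoid": (sigmoid, sigmoid_prime),
    "relu": (relu, relu_prime),
    "tanh": (np.tanh, tanh_prime),
}

def mse(pred, target):
    """Halved squared error, summed over outputs and averaged over the minibatch."""
    return 0.5 * np.sum((pred - target) ** 2) / pred.shape[0]

def mse_grad(pred, target):
    """Gradient of mse w.r.t. pred (same shape as pred)."""
    return (pred - target) / pred.shape[0]

def cross_entropy(probs, one_hot, eps=1e-12):
    """Mean categorical cross-entropy; each row of probs sums to 1."""
    return -np.sum(one_hot * np.log(probs + eps)) / probs.shape[0]

def cross_entropy_grad(probs, one_hot, eps=1e-12):
    """Gradient of cross_entropy w.r.t. probs."""
    return -one_hot / (probs + eps) / probs.shape[0]

# Central-difference check of each derivative (points chosen to avoid relu's kink at 0).
z = np.linspace(-3.0, 3.0, 7) + 0.05
h = 1e-6
for name, (f, f_prime) in ACTIVATIONS.items():
    numeric = (f(z + h) - f(z - h)) / (2 * h)
    assert np.allclose(numeric, f_prime(z), atol=1e-6), name
```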

- To download the MNIST data, install `python-mnist` via the `git clone` method (run its script to download the data, and make sure the `python-mnist` directory exists inside the root directory of this project)
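After the raw data is downloaded, it usually has to be converted into arrays before training. This sketch makes a few assumptions:

- python-mnist's `MNIST(...).load_training()` returns a sequence of 784-pixel images and a sequence of integer labels.
- The data directory in the commented-out lines is a guess.
- `prepare_mnist` is a hypothetical helper.

Stand-in data keeps the example runnable without the real files.

```python
import numpy as np

def prepare_mnist(images, labels, num_classes=10):
    """Convert raw MNIST pixel lists and integer labels into NumPy arrays.

    Returns X with shape (n, 784) scaled to [0, 1] and one-hot Y with shape (n, num_classes).
    """
    X = np.asarray(images, dtype=np.float64) / 255.0
    y = np.asarray(labels, dtype=np.int64)
    Y = np.zeros((y.size, num_classes))
    Y[np.arange(y.size), y] = 1.0  # set the label column of each row to 1
    return X, Y

# With python-mnist available, loading might look roughly like this
# (the directory is an ASSUMPTION about where the download script puts the files):
# from mnist import MNIST
# images, labels = MNIST("python-mnist/data").load_training()

# Stand-in data so the sketch runs without the real files:
fake_images = [[0] * 784, [255] * 784]
fake_labels = [3, 7]
X, Y = prepare_mnist(fake_images, fake_labels)
print(X.shape, Y.shape)
```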

- Implement class `NeuralNetwork`

- Implement common activation and loss functions

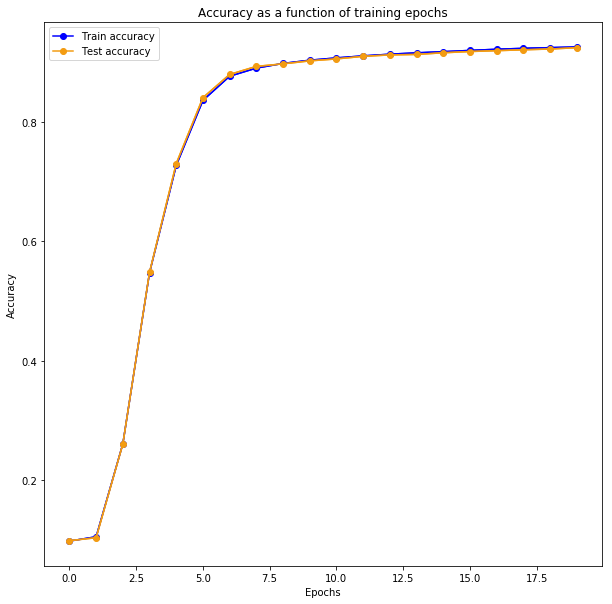

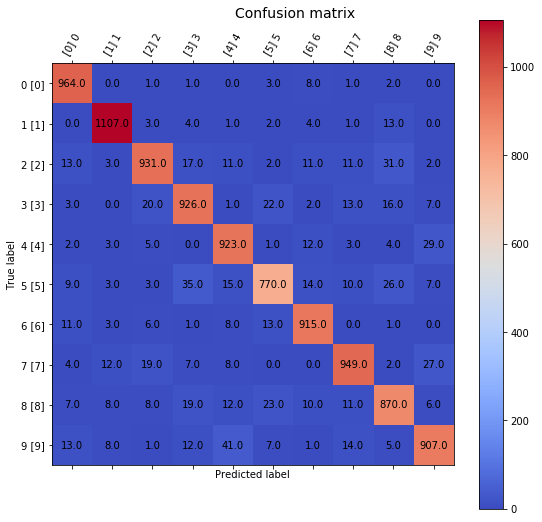

- Test the implementation on MNIST data
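When evaluating on MNIST, predictions are commonly summarized in a confusion matrix, like the one `src/utils.py` displays. The following is a hypothetical NumPy-only sketch that builds and prints such a matrix. The repository's own utility may render it differently, for example as a plot.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index the true class, columns the predicted class."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)  # unbuffered add counts repeated (true, pred) pairs
    return cm

def print_confusion_matrix(cm):
    print("true\\pred " + " ".join(f"{j:>4d}" for j in range(cm.shape[1])))
    for i, row in enumerate(cm):
        print(f"{i:>9d} " + " ".join(f"{int(v):>4d}" for v in row))

# In practice y_pred would come from np.argmax over the network's output probabilities.
y_true = np.array([0, 1, 2, 2, 1, 0])
y_pred = np.array([0, 2, 2, 2, 1, 0])
cm = confusion_matrix(y_true, y_pred, 3)
accuracy = np.trace(cm) / cm.sum()  # correct predictions lie on the diagonal
print_confusion_matrix(cm)
print(f"accuracy = {accuracy:.3f}")
```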