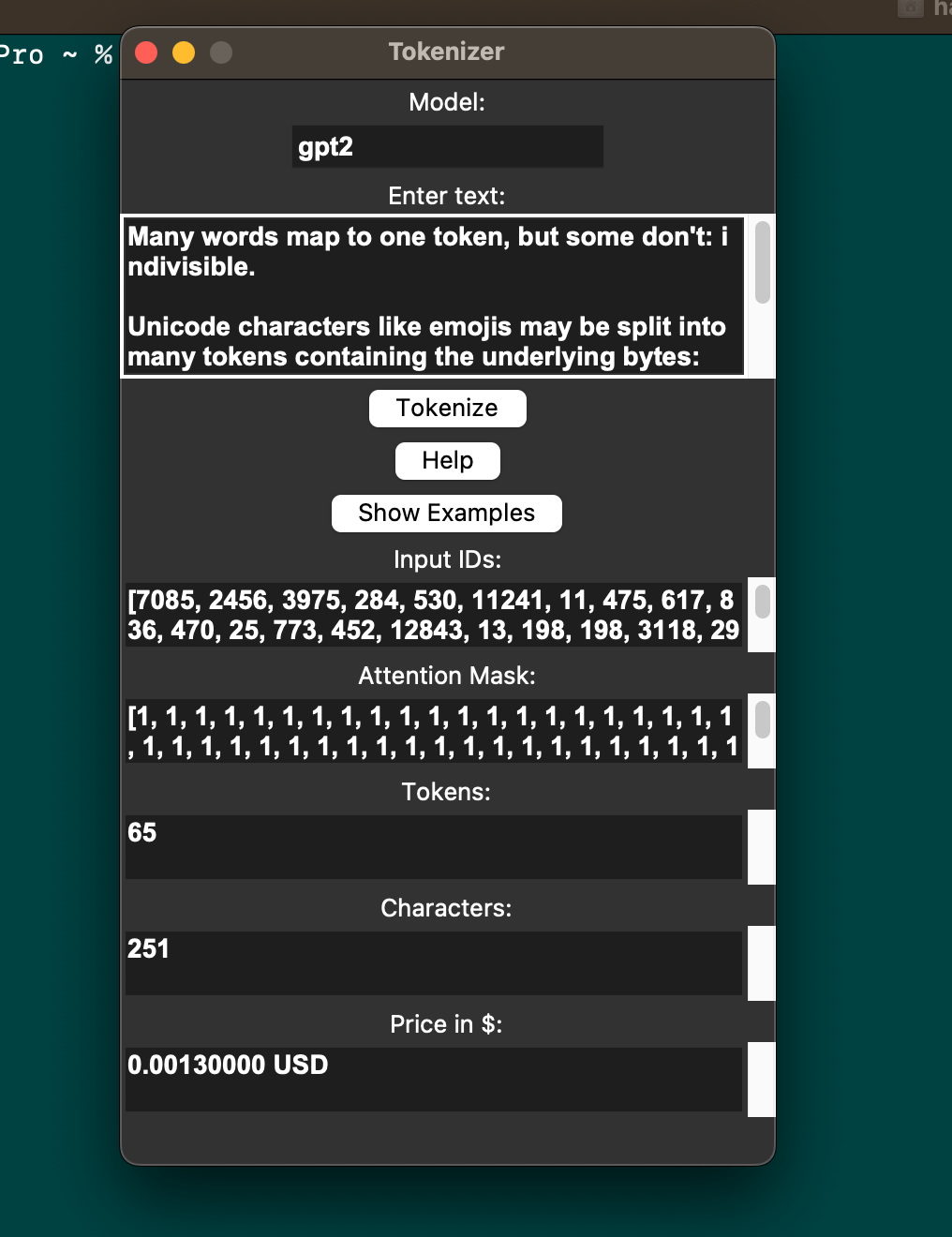

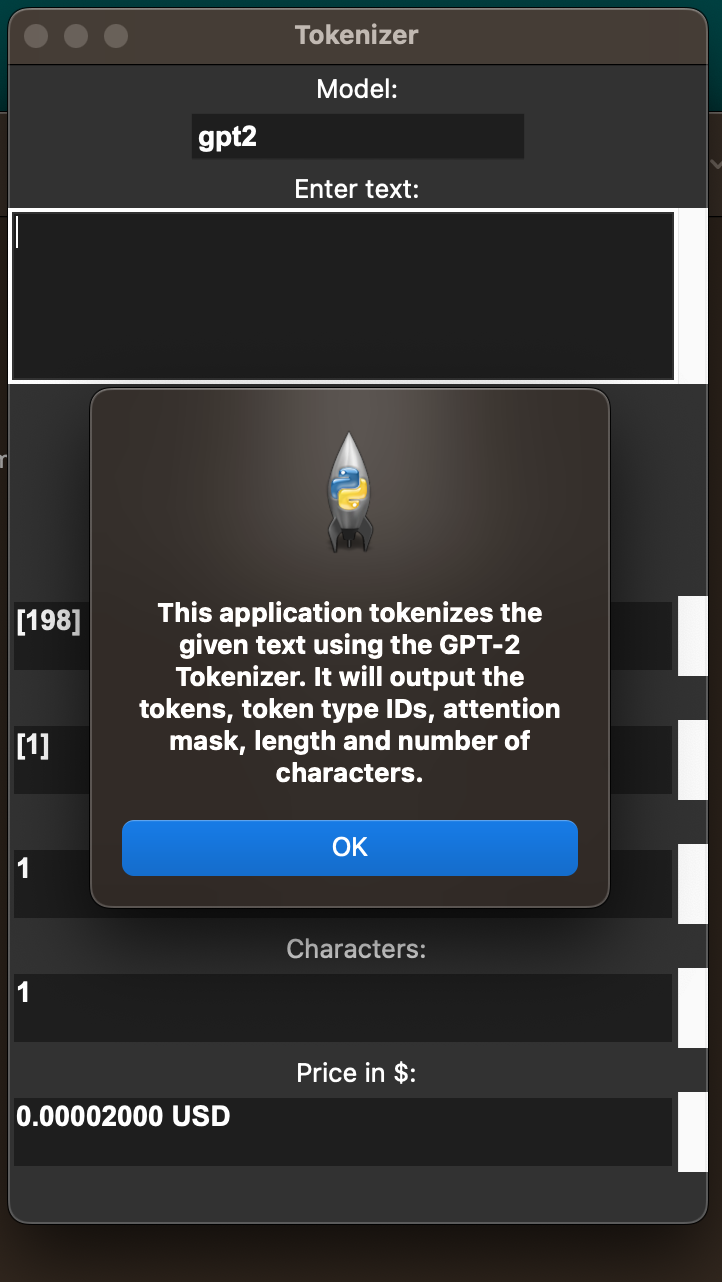

This is a token information application built on the GPT-2 tokenizer. It takes an input string and displays its tokens, token type IDs, and attention mask. It also estimates a price for the input based on the number of tokens generated.

Python 3.6 or higher

- tkinter

- transformers

- wonderwords

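- tkinter is part of the Python standard library and is not installed with pip. If it is missing on Linux, install it through the system package manager (for example, `python3-tk` on Debian and Ubuntu).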

- pip install transformers

- pip install wonderwords
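
After installing, a quick check like the one below can confirm that the three modules are importable. This is a minimal sketch and not part of the application; `REQUIRED_MODULES` and `missing_dependencies` are hypothetical names chosen for illustration.

```python
import importlib.util

# Modules named in the requirements list (assumed to match their import names).
REQUIRED_MODULES = ["tkinter", "transformers", "wonderwords"]


def missing_dependencies(modules=REQUIRED_MODULES):
    """Return the names in `modules` that cannot be located for import."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_dependencies()
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are available.")
```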

- Tokenize input text using the GPT-2 Tokenizer.

- Display the tokens, token type IDs, attention mask, token count, and number of characters.

- Display the estimated price based on the number of tokens generated.

- Provide a help message box to assist users.

- Generate random examples to be tokenized.
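
The tokenization features above can be sketched roughly as follows. This is an illustration under stated assumptions, not the application's actual code: `load_gpt2_tokenizer` and `token_info` are hypothetical helpers, and the `"gpt2"` checkpoint name is assumed. `GPT2TokenizerFast` does not return token type IDs by default, so the sketch requests them explicitly.

```python
def load_gpt2_tokenizer():
    """Load the pretrained GPT-2 tokenizer (downloads its files on first use)."""
    # Imported lazily so the rest of this sketch works without transformers.
    from transformers import GPT2TokenizerFast

    return GPT2TokenizerFast.from_pretrained("gpt2")  # assumed checkpoint name


def token_info(text, tokenizer):
    """Collect the fields the application displays for `text`."""
    # GPT-2 omits token type IDs unless they are requested.
    encoding = tokenizer(text, return_token_type_ids=True)
    ids = list(encoding["input_ids"])
    return {
        "tokens": tokenizer.convert_ids_to_tokens(ids),
        "input_ids": ids,
        "token_type_ids": list(encoding["token_type_ids"]),
        "attention_mask": list(encoding["attention_mask"]),
        "token_count": len(ids),
        "char_count": len(text),
    }


# Example usage (needs transformers and a one-time download of the GPT-2 files):
#     info = token_info("Hello world", load_gpt2_tokenizer())
#     for field, value in info.items():
#         print(f"{field}: {value}")
```

Passing the tokenizer in as an argument keeps the function easy to exercise with a stand-in object when the pretrained files are not available.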

- tkinter: The tkinter library provides an easy way to create graphical interfaces. It is well-documented and widely used in the Python community.

- transformers: The transformers library provides pre-trained models for NLP tasks. These models have been trained on large amounts of data and have achieved state-of-the-art performance on many NLP benchmarks.

- GPT2TokenizerFast: The GPT-2 tokenizer is a fast and efficient tokenizer for the GPT-2 transformer model. It provides the tokenized representation of the input text, which is used in the program.

- wonderwords: The wonderwords library provides a simple way to generate random sentences. This is useful for generating an example input for the tokenization.
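
As an illustration of the wonderwords item, a random example sentence might be produced as in the sketch below. `random_example` is a hypothetical helper, not a function from the application; it relies on the `RandomSentence.sentence()` method from wonderwords.

```python
def random_example(generator=None):
    """Return one random sentence to use as tokenizer input.

    `generator` is any object with a `sentence()` method. When omitted,
    a wonderwords RandomSentence is created.
    """
    if generator is None:
        # Imported lazily so a custom generator works without wonderwords.
        from wonderwords import RandomSentence

        generator = RandomSentence()
    return generator.sentence()


# Example usage (needs wonderwords installed):
#     print(random_example())
```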

The price calculation uses the Davinci model's per-token rate by default. The rates for the other models (Curie, Babbage, Ada) are also listed in the code for reference.
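
A price estimate of this kind might look like the sketch below. The rates are illustrative figures in USD per 1,000 tokens and are an assumption, not values taken from this project; check the application's code for the numbers it actually uses. `PRICE_PER_1K_TOKENS` and `token_price` are hypothetical names.

```python
# Illustrative rates in USD per 1,000 tokens (assumed, not from this project).
PRICE_PER_1K_TOKENS = {
    "davinci": 0.02,
    "curie": 0.002,
    "babbage": 0.0005,
    "ada": 0.0004,
}


def token_price(token_count, model="davinci"):
    """Estimate the price in USD of `token_count` tokens for `model`."""
    if token_count < 0:
        raise ValueError("token_count must be non-negative")
    return token_count / 1000 * PRICE_PER_1K_TOKENS[model]
```

With these assumed rates, 1,500 tokens at the default Davinci rate come to about $0.03.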

For the official web-based tokenizer, visit the Chat-GPT3 Tokenizer website.

Written by Haseeb Mir and released under the Apache License.