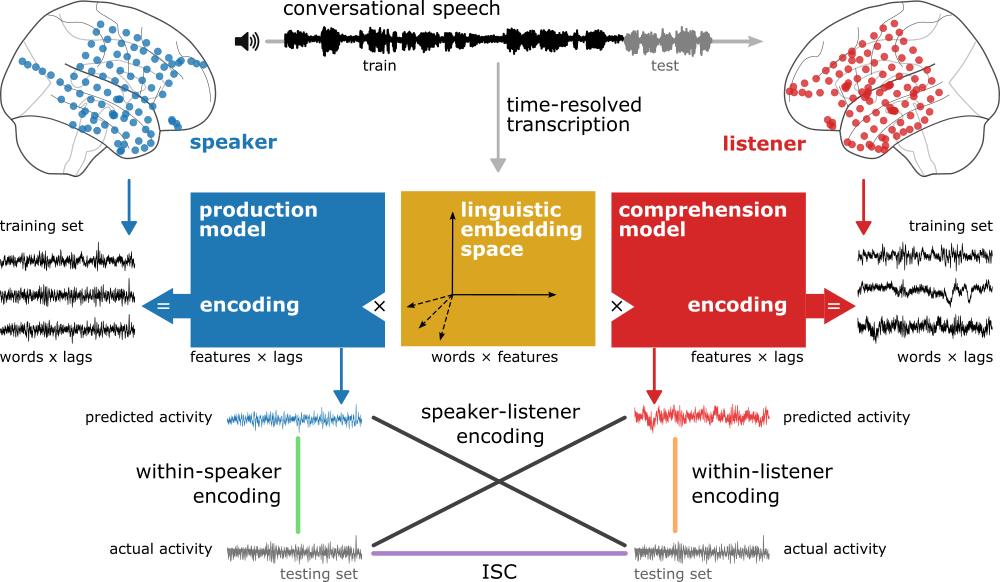

Effective communication hinges on a mutual understanding of word meaning in different contexts. We hypothesize that the embedding space learned by large language models can serve as an explicit model of the shared, context-rich meaning space humans use to communicate their thoughts. We recorded brain activity using electrocorticography during spontaneous, face-to-face conversations in five pairs of epilepsy patients. We demonstrate that the linguistic embedding space can capture the linguistic content of word-by-word neural alignment between speaker and listener. Linguistic content emerged in the speaker’s brain before word articulation and the same linguistic content rapidly reemerged in the listener’s brain after word articulation. These findings establish a computational framework to study how human brains transmit their thoughts to one another in real-world contexts.

A list and description of the main scripts used to preprocess the data, run the analyses, and visualize the results.

- `audioalign.py` checks alignment between signal and electrodes.
- `preprocess.py` runs the preprocessing pipeline (despike, re-reference, filter, etc.).
- `plotbrain.py` generates brain plots per electrode.
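The following is a minimal sketch of one possible despike, re-reference and filter chain, the steps `preprocess.py` lists. It operates on an `(n_electrodes, n_samples)` array. None of the specific choices come from the script; they are assumptions:

- clipping at 5 MAD-based standard deviations;
- a common-average reference;
- a 70-200 Hz (high-gamma) Butterworth band-pass.

All function names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def despike(x: np.ndarray, k: float = 5.0) -> np.ndarray:
    """Clip samples more than k robust SDs from each electrode's median (k is an assumption)."""
    med = np.median(x, axis=1, keepdims=True)
    mad = np.median(np.abs(x - med), axis=1, keepdims=True)
    robust_sd = 1.4826 * mad  # scales the MAD to a standard deviation for Gaussian data
    return np.clip(x, med - k * robust_sd, med + k * robust_sd)


def common_average_reference(x: np.ndarray) -> np.ndarray:
    """Subtract the mean over electrodes at every time point."""
    return x - x.mean(axis=0, keepdims=True)


def bandpass(x: np.ndarray, fs: float, low: float, high: float, order: int = 4) -> np.ndarray:
    """Zero-phase Butterworth band-pass along the time axis; requires high < fs / 2."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x, axis=1)


def preprocess(x: np.ndarray, fs: float, low: float = 70.0, high: float = 200.0) -> np.ndarray:
    """Hypothetical pipeline: despike, then re-reference, then filter (high-gamma band assumed)."""
    return bandpass(common_average_reference(despike(x)), fs, low, high)


# Example: 4 electrodes, 4 s of synthetic data at 512 Hz.
rng = np.random.default_rng(0)
raw = rng.standard_normal((4, 2048))
clean = preprocess(raw, fs=512.0)
```

`sosfiltfilt` runs the filter forward and backward, so the output has no phase delay. That matters when neural activity is later compared against word onsets.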

- `triggeraverage.py` runs an event-related potential (ERP) analysis per electrode.
- `audioxcorr.py` computes the cross-correlation between each electrode and the audio.
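The trigger-averaging idea behind `triggeraverage.py` can be sketched like this: cut a fixed window around each event (for example, word onsets in seconds) and average across events. Several details are assumptions for illustration only: the window bounds, the rule of skipping events whose window runs past the recording, and the name `trigger_average`.

```python
import numpy as np


def trigger_average(signal, onsets, fs, tmin=-0.5, tmax=1.0):
    """Average (n_electrodes, n_samples) `signal` in windows around `onsets` (seconds).

    Returns the window time axis, the per-electrode average and the number of events used.
    """
    start = int(round(tmin * fs))
    stop = int(round(tmax * fs))
    epochs = []
    for onset in onsets:
        center = int(round(onset * fs))
        a, b = center + start, center + stop
        if a < 0 or b > signal.shape[1]:
            continue  # skip events whose window does not fit inside the recording
        epochs.append(signal[:, a:b])
    if not epochs:
        raise ValueError("no event window fits inside the recording")
    times = np.arange(start, stop) / fs  # seconds relative to the event
    return times, np.mean(epochs, axis=0), len(epochs)
```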

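Here is a minimal sketch of a lagged cross-correlation between one electrode and the audio, the quantity `audioxcorr.py` computes per electrode. It assumes both signals share one sampling rate and are already aligned, for example an audio envelope resampled to the neural rate. `lagged_xcorr` is a hypothetical name, and a positive lag means the electrode follows the audio.

```python
import numpy as np


def lagged_xcorr(electrode, audio, max_lag):
    """Pearson r between electrode[t + lag] and audio[t] for lag in [-max_lag, max_lag] samples."""
    n = len(audio)
    lags = np.arange(-max_lag, max_lag + 1)
    r = np.empty(len(lags))
    for i, lag in enumerate(lags):
        if lag >= 0:
            x, y = electrode[lag:], audio[:n - lag]
        else:
            x, y = electrode[:lag], audio[-lag:]
        r[i] = np.corrcoef(x, y)[0, 1]
    return lags, r
```

To cover every electrode, call it once per row of the electrode array.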
- `embeddings.py` generates the embeddings.
- `encoding.py` fits linear models for all electrodes and lags.
- After running the phase-shuffled permutations, choose electrodes in `electrode-info.ipynb`.
- `figures.ipynb` generates all figures. It requires the null distributions for ISE generated by `sigtest.py`.
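`encoding.py` fits linear models for every electrode and lag. Below is a minimal sketch of that kind of encoding analysis, under several assumptions:

- the model is ridge regression (the script may use a different linear model);
- cross-validation folds are contiguous;
- neural activity has already been extracted at each lag relative to each word's onset.

Performance is the correlation between predicted and held-out activity. All names are hypothetical.

```python
import numpy as np


def ridge_fit(X, Y, alpha):
    """Closed-form ridge solution W = (X^T X + alpha I)^-1 X^T Y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)


def encoding_by_lag(embeddings, neural, alpha=1.0, n_folds=5):
    """Cross-validated encoding performance per lag and electrode.

    embeddings: (n_words, n_dims) word embeddings.
    neural:     (n_words, n_lags, n_electrodes) activity at each lag relative to word onset.
    Returns:    (n_lags, n_electrodes) Pearson r between predicted and held-out activity.
    """
    n_words, n_lags, _ = neural.shape
    folds = np.array_split(np.arange(n_words), n_folds)  # contiguous folds (assumption)
    preds = np.zeros_like(neural, dtype=float)
    for test in folds:
        train = np.setdiff1d(np.arange(n_words), test)
        mu_x = embeddings[train].mean(axis=0)  # center with training statistics only
        X_train, X_test = embeddings[train] - mu_x, embeddings[test] - mu_x
        for lag in range(n_lags):
            Y_train = neural[train, lag]
            mu_y = Y_train.mean(axis=0)
            W = ridge_fit(X_train, Y_train - mu_y, alpha)
            preds[test, lag] = X_test @ W + mu_y
    # Pearson correlation over words, separately for every lag and electrode
    p = preds - preds.mean(axis=0)
    y = neural - neural.mean(axis=0)
    return (p * y).sum(axis=0) / np.sqrt((p ** 2).sum(axis=0) * (y ** 2).sum(axis=0))
```

Contiguous folds, rather than shuffled ones, reduce leakage between neighbouring words, whose embeddings and neural responses tend to be correlated.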

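Phase randomization illustrates how phase-shuffled permutations work for significance testing. The procedure is to Fourier-transform a signal, replace its phases with random ones, and invert the transform. This keeps the amplitude spectrum, and hence the autocorrelation, but breaks the signal's timing relationship to anything else.

The sketch below builds a null distribution for a Pearson correlation. Several parts of it are assumptions: the statistic, the one-sided p-value with a +1 correction, and the function names. It is not necessarily how `sigtest.py` builds its null distributions for ISE.

```python
import numpy as np


def phase_randomize(x, rng):
    """Surrogate of real signal `x` with the same amplitude spectrum but random phases."""
    n = len(x)
    spectrum = np.fft.rfft(x)
    phases = rng.uniform(0.0, 2.0 * np.pi, len(spectrum))
    surrogate = np.abs(spectrum) * np.exp(1j * phases)
    surrogate[0] = spectrum[0]  # keep the DC bin, i.e. the mean
    if n % 2 == 0:
        surrogate[-1] = spectrum[-1]  # the Nyquist bin of a real signal must stay real
    return np.fft.irfft(surrogate, n=n)


def permutation_pvalue(x, y, n_perm=1000, seed=0):
    """Observed Pearson r, its phase-randomized null distribution, and a one-sided p-value."""
    rng = np.random.default_rng(seed)
    observed = np.corrcoef(x, y)[0, 1]
    null = np.array([np.corrcoef(phase_randomize(x, rng), y)[0, 1] for _ in range(n_perm)])
    p = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return observed, null, p
```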
See `environment.yml` for details.