Jonghee Back, Binh-Son Hua, Toshiya Hachisuka, Bochang Moon

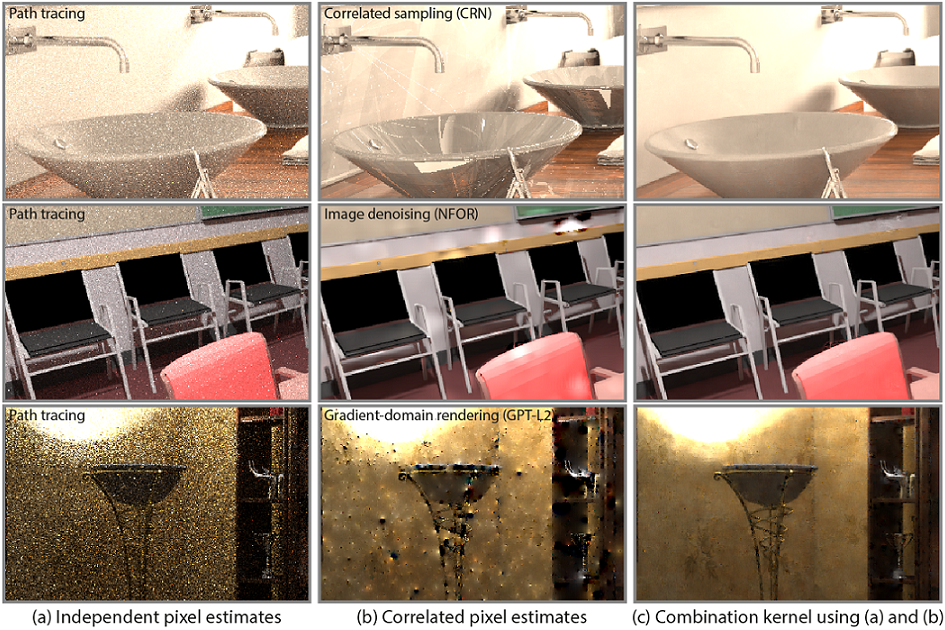

This code implements the method presented in the paper "Deep Combiner for Independent and Correlated Pixel Estimates". For more details, please refer to the project page.

The code was implemented on Ubuntu 16.04 with TensorFlow 1.13.2, Python 3.5 and CUDA 10.0, and tested on Ubuntu 16.04, 18.04 and 20.04. We tested it on Nvidia graphics cards with compute capability 6.1 and 7.5 (e.g., Nvidia GeForce GTX 1080, GTX 1080 Ti, RTX 2080 Ti and Quadro RTX 8000). A graphics card with 10 GB or more of memory is recommended for training and testing the provided code with the default settings.

If you have any problems, questions or comments, feel free to contact us: Jonghee Back (jongheeback@gm.gist.ac.kr)

Please install docker and nvidia-docker. Detailed installation instructions can be found here:

In order to build a docker image using the provided Dockerfile, please run this command:

docker build -t combiner .

After the docker image is built, the main code can be run in a new container as follows:

nvidia-docker run --rm -v ${PWD}/data:/data -v ${PWD}/codes:/codes -it combiner

The steps above can also be run with run.sh.
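To make the volume mounts in the container command explicit, here is a minimal Python sketch that builds the same argument list from a project directory. The function name `docker_run_command` and its parameters are hypothetical and are not part of the released code; the sketch only assembles the command and does not run it.

```python
import os


def docker_run_command(project_dir, image="combiner"):
    """Build the nvidia-docker argument list shown above (hypothetical helper).

    The data/ and codes/ folders under project_dir are mounted at /data and
    /codes inside the container, mirroring the ${PWD} expansion in the shell.
    """
    root = os.path.abspath(project_dir)
    return [
        "nvidia-docker", "run", "--rm",
        "-v", "{}:/data".format(os.path.join(root, "data")),
        "-v", "{}:/codes".format(os.path.join(root, "codes")),
        "-it", image,
    ]


if __name__ == "__main__":
    # Print the command for the current directory instead of executing it.
    print(" ".join(docker_run_command(os.getcwd())))
```

The resulting list could be passed to `subprocess.run` from the repository root, which is equivalent to typing the command by hand.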

The provided code for training or testing a network can be used as follows:

- Set mode_setting among three options (MODE_DATA_GENERATION, MODE_TRAIN, MODE_TEST) in config.py.
  - MODE_DATA_GENERATION: training dataset generation from exr images
  - MODE_TRAIN: training a network using generated dataset
  - MODE_TEST: testing a trained network
- Choose type_combiner among two options (TYPE_SINGLE_BUFFER, TYPE_MULTI_BUFFER). Note that the provided pre-trained weights must be used differently depending on the type due to different number of parameters.
  - TYPE_SINGLE_BUFFER: single-buffered combination kernel
  - TYPE_MULTI_BUFFER: multi-buffered combination kernel (i.e., four buffers)
- Check other configuration settings in config.py.
- Run tester.py
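As an illustration of the two switches listed above, here is a minimal sketch of how such settings might look. Only the names `mode_setting`, `type_combiner` and the `MODE_*` / `TYPE_*` options come from this README; the string values and the `validate_config` helper are assumptions, and the actual config.py may be organized differently.

```python
# Hypothetical values: the real config.py may use other constants.
MODE_DATA_GENERATION = "data_generation"  # build training dataset from exr images
MODE_TRAIN = "train"                      # train a network on the generated dataset
MODE_TEST = "test"                        # test a trained network

TYPE_SINGLE_BUFFER = "single_buffer"      # single-buffered combination kernel
TYPE_MULTI_BUFFER = "multi_buffer"        # multi-buffered kernel (four buffers)

# Example selection: test the multi-buffered combiner.
mode_setting = MODE_TEST
type_combiner = TYPE_MULTI_BUFFER


def validate_config(mode, combiner):
    """Reject values outside the documented options (illustrative helper)."""
    modes = (MODE_DATA_GENERATION, MODE_TRAIN, MODE_TEST)
    combiners = (TYPE_SINGLE_BUFFER, TYPE_MULTI_BUFFER)
    if mode not in modes:
        raise ValueError("unknown mode_setting: {!r}".format(mode))
    if combiner not in combiners:
        raise ValueError("unknown type_combiner: {!r}".format(combiner))
    return mode, combiner


validate_config(mode_setting, type_combiner)
```

Because the pre-trained weights differ between the two combiner types, the chosen `type_combiner` has to match the downloaded checkpoint.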

We provide two types of pre-trained weights: one for the single-buffered combiner and one for the multi-buffered combiner. After you unzip the files, please move the __train_ckpt__ folder into the data/ directory. The pre-trained weights can be downloaded using the links below:

The sample data consists of four types of correlated pixel estimates with corresponding independent pixel estimates: Gradient-domain rendering with L1 and L2 reconstruction (GPT-L1 and GPT-L2), Nonlinearly Weighted First-order Regression (NFOR) and Kernel-Predicting Convolutional Networks (KPCN) denoisers.

After you download the attached sample data, please move the __test_scenes__ folder into the data/ directory. Other settings for testing the sample data can be changed in config.py. The sample data is available below:
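Before running a test, it can help to confirm that the downloaded folders ended up in the expected place. The following is a small sketch of such a check; the helper name `missing_data_folders` is hypothetical and not part of the released code, and it only assumes the folder names given in this README.

```python
import os


def missing_data_folders(data_dir, required=("__train_ckpt__", "__test_scenes__")):
    """Return the required folder names that are not present under data_dir."""
    return [
        name for name in required
        if not os.path.isdir(os.path.join(data_dir, name))
    ]


if __name__ == "__main__":
    # Assumes the script is started from the repository root.
    missing = missing_data_folders("data")
    if missing:
        print("Missing under data/: " + ", ".join(missing))
    else:
        print("Pre-trained weights and sample data folders found.")
```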

All source code is released under a BSD License. Please refer to our license file for more details.

If you use our code or paper, please cite the following:

@article{Back20,
  author = {Back, Jonghee and Hua, Binh-Son and Hachisuka, Toshiya and Moon, Bochang},
  title = {Deep Combiner for Independent and Correlated Pixel Estimates},
  year = {2020},
  issue_date = {December 2020},
  volume = {39},
  number = {6},
  journal = {ACM Trans. Graph.},
  month = nov,
  articleno = {242},
  numpages = {12}
}

The code for various exr I/O operations (exr.py in code/ directory) is credited to the Kernel-Predicting Convolutional Networks (KPCN) denoiser project.

Initial version