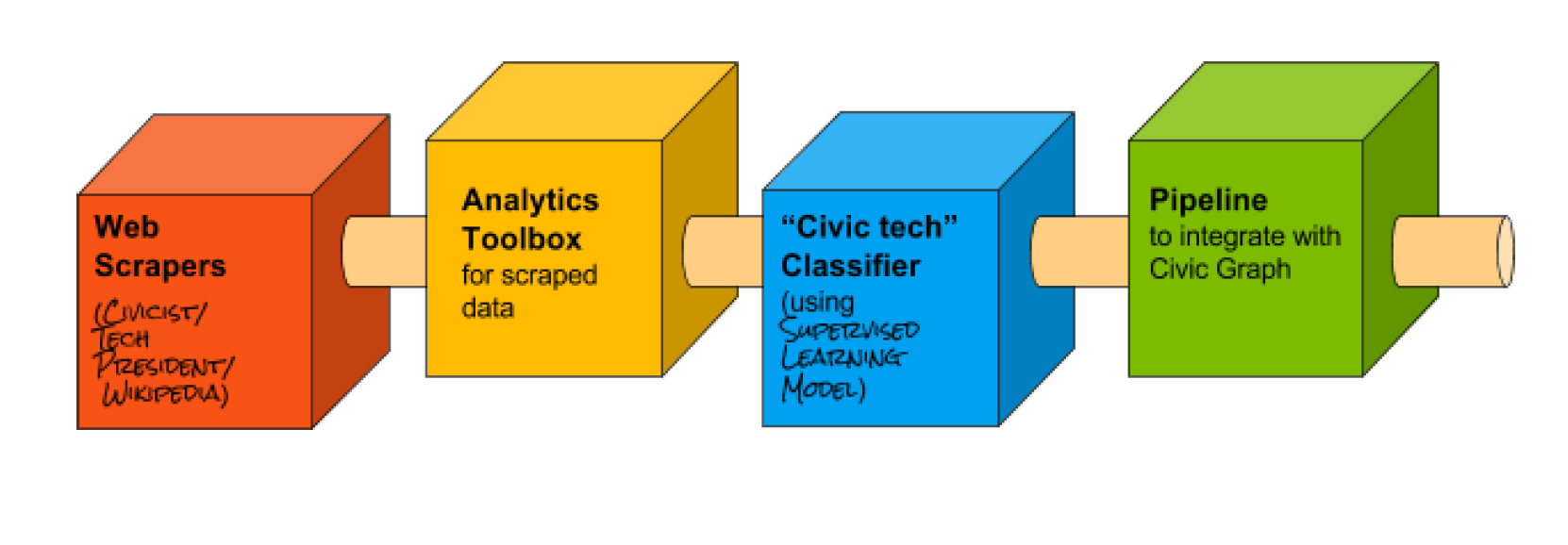

In an effort to make Civic Graph a little smarter, I developed four building blocks that help improve the quality (accuracy and completeness) of the stored data and automate parts of the data collection process. They are:

- Web Scrapers

- Analytics Toolbox

- Classifier

- [Pipeline](/References/Pipeline Diagram.pdf) to integrate the scrapers, classifier, and analysis with the existing Civic Graph
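The sketch below illustrates what a scraper in this setup might do. It uses BeautifulSoup, one of the libraries listed in this document, to pull organization records out of a directory page. The HTML markup, the `org` and `type` class names, and the `scrape_orgs` function are all hypothetical, because this document does not describe the pages the real scrapers targeted. Missing fields come back as `None`, which is the kind of completeness gap the data-quality work is meant to surface.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML for an organization directory page. The markup and
# class names are assumptions for illustration, not a real scraped site.
SAMPLE_HTML = """
<ul class="orgs">
  <li class="org"><a href="https://example.org/a">Code for Example</a>
      <span class="type">Non-Profit</span></li>
  <li class="org"><a href="https://example.com/b">Example Labs</a>
      <span class="type">For-Profit</span></li>
  <li class="org"><a href="/c">City Office of Data</a></li>
</ul>
"""


def scrape_orgs(html):
    """Return one dict per <li class="org"> with name, url, and type.

    Any field that is absent from the markup is recorded as None, so
    incomplete records can be flagged downstream.
    """
    soup = BeautifulSoup(html, "html.parser")
    records = []
    for item in soup.find_all("li", class_="org"):
        link = item.find("a")
        type_tag = item.find("span", class_="type")
        records.append({
            "name": link.get_text(strip=True) if link else None,
            "url": link.get("href") if link else None,
            "type": type_tag.get_text(strip=True) if type_tag else None,
        })
    return records


if __name__ == "__main__":
    for record in scrape_orgs(SAMPLE_HTML):
        print(record)
```

A production scraper would fetch pages over the network and resolve relative links such as `/c` against the page URL. This sketch parses an inline string so that it runs offline.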

I created a Process Map to explain how everything I built fits together. View it [here](/References/Mad Libs Visual .pdf).

I also created a Handoff Document for a future fellow that outlines how each script works, which external libraries it uses, and how the next fellow can fully integrate my work with the existing Civic Graph. View the document [here](/References/Handoff for Future Fellow.pdf).

I've compiled a list of tools and resources that I used throughout the project. They cover a range of topics, including:

- Web Scraping

- Data Analysis with Python

- Text Mining

- Natural Language Processing

- Machine Learning

Key Python libraries I used:

- BeautifulSoup: Python library for parsing HTML and XML.

- spaCy: Free, open-source Python library for fast and accurate Natural Language Processing (NLP).

- textacy: Python library built on top of spaCy for higher-level NLP tasks.

- nltk: Platform for writing Python programs that work with human language data. Provides over 50 corpora and lexical resources, along with text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning, plus wrappers for industrial-strength NLP libraries. Its tools are easy to use and accurate but very slow on large datasets.

- scikit-learn: Machine learning library for Python built on NumPy, SciPy, and matplotlib.
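The following sketch shows how scikit-learn could back a classifier like the one in the building-blocks list. It converts short organization descriptions into TF-IDF features and fits a logistic regression model on them. The training sentences, the entity-type labels (`Non-Profit`, `For-Profit`, `Government`), and `build_classifier` are hypothetical. This is not the actual Civic Graph classifier, and this document does not specify that classifier's features or model.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Hypothetical labeled examples: organization descriptions tagged with an
# assumed entity type. Real training data would come from Civic Graph records.
TRAIN_TEXTS = [
    "nonprofit coalition of volunteers advocating for open data",
    "volunteer group running civic hackathons for community nonprofits",
    "startup company selling software to city governments",
    "venture-backed company building a commercial transit app",
    "city department responsible for public records and open data policy",
    "state agency administering public benefits programs",
]
TRAIN_LABELS = [
    "Non-Profit", "Non-Profit",
    "For-Profit", "For-Profit",
    "Government", "Government",
]


def build_classifier():
    """Fit a TF-IDF + logistic regression pipeline on the toy data above."""
    model = make_pipeline(TfidfVectorizer(), LogisticRegression(max_iter=1000))
    model.fit(TRAIN_TEXTS, TRAIN_LABELS)
    return model


if __name__ == "__main__":
    clf = build_classifier()
    new_texts = ["company selling analytics software", "city agency for housing"]
    # Print the predicted label next to each new description.
    for text, label in zip(new_texts, clf.predict(new_texts)):
        print(f"{label}: {text}")
```

With only six training examples, the predictions are not meaningful. A real model would be trained on labeled records and evaluated on a held-out split, for example one produced by `sklearn.model_selection.train_test_split`.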

Created by Hannah Cutler during my fellowship at