This is the course homepage for CPSC 330: Applied Machine Learning at the University of British Columbia. You are looking at the current version (May-June 2023). Some of the previous offerings are as follows:

- 2020w1 by Mike Gelbart

- 2022s by Mehrdad Oveisi

- 2022w1 by Varada Kolhatkar

- 2022w2 by Giulia Toti, Mathias Lecuyer, Amir Abdi

Mehrdad Oveisi

- moveisi@cs.ubc.ca

- LinkedIn.com/in/oveisi

- Google Scholar

- Office hours:

  - When: Up to one hour after each class, which means potentially four hours per week. I will leave once there are no more questions.

  - Where: In the same classroom, right after each class.

  - Who: Students from both sections are welcome to attend all office hours.

| Section | Days | Lecture | Office Hour | Location |
|---|---|---|---|---|
| 911 | Tue, Thu | 13:30 - 17:00 | 17:00 - 18:00 | FSC 1005 |
| 912 | Wed, Fri | 13:30 - 17:00 | 17:00 - 18:00 | DMP 110 |

Jeffrey Ho

Please email Jeffrey Ho at the above email address for all administrative concerns such as CFA accommodations, extensions or exemptions due to sickness or extenuating circumstances.

© 2021 Varada Kolhatkar, Mike Gelbart, and Mehrdad Oveisi

Software licensed under the MIT License, non-software content licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0) License. See the license file for more information.

- Syllabus / administrative info

- Calendar

- Course GitHub page

- Course Jupyter book

- Course videos YouTube channel

- Canvas

- Gradescope

- Piazza (this is where all announcements will be made)

- Setting up coding environment

- Other course documents

- iClicker Cloud (coming soon)

| IMPORTANT NOTE |
|---|
| As a general rule, summer terms are very compact. According to the university calendar, there are 63 teaching days in a winter term but only 28 in a summer term. That means 2.25 (63 ÷ 28) times more content to learn per week, with homework due dates arriving 2.25 times as fast. In other words, you are expected to learn and deliver the same amount of work as in a winter term, but 2.25 times faster. For this reason, time management is of utmost importance for succeeding in the course. |

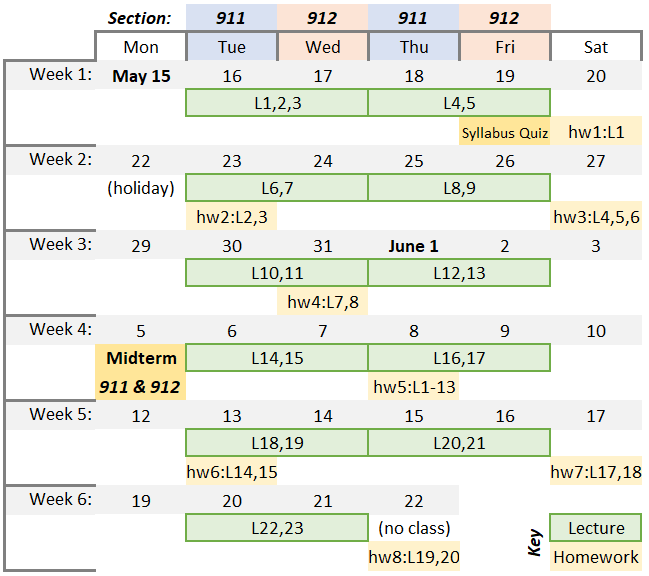

The following chart is a compact version of the tentative course schedule.

The following sections provide a more detailed course schedule.

| Assessment | Due date | Where to find? | Where to submit? |
|---|---|---|---|
| Syllabus quiz | May 19, 13:00 | Canvas | Canvas |
| hw1 | May 20, 13:00 | GitHub repo | Gradescope |
| hw2 | May 23, 13:00 | GitHub repo | Gradescope |
| hw3 | May 27, 13:00 | GitHub repo | Gradescope |
| hw4 | May 31, 13:00 | GitHub repo | Gradescope |
| Midterm | June 05, 13:30 to 15:00 | Location: Section 911: LIFE 2201; Section 912: BIOL 1000 | Canvas |
| hw5 | June 08, 13:00 | GitHub repo | Gradescope |
| hw6 | June 13, 13:00 | GitHub repo | Gradescope |
| hw7 | June 17, 13:00 | GitHub repo | Gradescope |
| hw8 | June 22, 13:00 | GitHub repo | Gradescope |
| Final exam | TBA | Location: TBA | Canvas |

Lectures:

- The lectures will be in-person (see Class Schedule above for more details).

- All lecture files are subject to change without notice up until they are covered in class.

- You are expected to watch the "Pre-watch" videos before each lecture.

- You are expected to attend the lectures.

- You will find the lecture notes in the lectures folder of this repository. Lectures will be posted as they become available.

| # | Date | Topic | Assigned videos | vs. CPSC 340 |
|---|---|---|---|---|
| 1 | May 16,17 | Course intro | 📹 | n/a |
| Part I: ML fundamentals and preprocessing | | | | |
| 2 | May 16,17 | Decision trees | 📹 | less depth |
| 3 | May 16,17 | ML fundamentals | 📹 | similar |
| 4 | May 18,19 | | 📹 | less depth |
| 5 | May 18,19 | Preprocessing, sklearn pipelines | 📹 | more depth |
| 6 | May 23,24 | More preprocessing, sklearn ColumnTransformer, text features | 📹 | more depth |
| 7 | May 23,24 | Linear models | 📹 | less depth |
| 8 | May 25,26 | Hyperparameter optimization, overfitting the validation set | 📹 | different |
| 9 | May 25,26 | Evaluation metrics for classification | 📹 | more depth |
| 10 | May 30,31 | Regression metrics | 📹 | more depth on metrics, less depth on regression |
| 11 | May 30,31 | Ensembles | 📹 | similar |
| 12 | Jun 1,2 | Feature importances, model interpretation | 📹 | feature importances is new, feature engineering is new |
| 13 | Jun 1,2 | Feature engineering and feature selection | None | less depth |
| | Jun 5 | Midterm | | |
| Part II: Unsupervised learning, transfer learning, different learning settings | | | | |
| 14 | Jun 6,7 | Clustering | 📹 | less depth |
| 15 | Jun 6,7 | More clustering | | less depth |
| 16 | Jun 8,9 | Simple recommender systems | None | less depth |
| 17 | Jun 8,9 | Text data, embeddings, topic modeling | 📹 | new |
| 18 | Jun 13,14 | Neural networks and computer vision | | less depth |
| 19 | Jun 13,14 | Time series data | (Optional) Humour: The Problem with Time & Timezones | new |
| 20 | Jun 15,16 | Survival analysis | 📹 (Optional but highly recommended) Calling Bullshit 4.1: Right Censoring | new |
| Part III: Communication, ethics, deployment | | | | |
| 21 | Jun 15,16 | Ethics | 📹 (Optional but highly recommended) | new |
| 22 | Jun 20,21 | Communication | 📹 (Optional but highly recommended) | new |
| 23 | Jun 20,21 | Model deployment and Conclusions | | new |
| 24 | | (optional reading) Stochastic Gradient Descent | | |
| 25 | | (optional reading) Combining Multiple Tables | | |