MobileNet-YOLO Caffe

MobileNet-YOLO

A Caffe implementation of the MobileNet-YOLO detection network (based on YOLOv2), with weights pretrained on VOC0712 (mAP = 0.718).

| Network | mAP | Resolution | Download | NetScope |
|---|---|---|---|---|
| MobileNet-YOLO-Lite | 0.675 | 416 | deploy | graph |
| MobileNet-YOLOv3-Lite | 0.726 | 416 | deploy | graph |
| MobileNet-YOLOv3-Lite | 0.708 | 320 | deploy | graph |

Note: when trained from the ImageNet-pretrained model, MobileNet-YOLOv3-Lite reached an mAP of 0.68.

Windows Version

Performance

Comparison with YOLOv2 (I could not find a YOLOv3 score on VOC2007).

| Network | mAP | Weight size | Inference time (GTX 1080) |
|---|---|---|---|
| MobileNet-YOLOv3-Lite | 0.708 | 20.3 MB | 8 ms (320x320) |
| MobileNet-YOLOv3-Lite | 0.726 | 20.3 MB | 14 ms (416x416) |
| Tiny-YOLO | 0.57 | 60.5 MB | N/A |
| YOLOv2 | 0.76 | 193 MB | N/A |

Note: the yolo_detection_output_layer has not been optimized yet, and the BatchNorm and Scale layers could still be merged into the preceding convolution layers.
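Merging works because, at inference time, BatchNorm followed by Scale is a per-channel affine map, which can be absorbed into the convolution: W' = W * gamma / sqrt(var + eps) and b' = (b - mean) * gamma / sqrt(var + eps) + beta. The sketch below applies that algebra to plain arrays. `ConvParams` and `FoldBatchNormScale` are hypothetical names, not Caffe APIs. As far as we know, Caffe's BatchNorm layer stores running sums together with a moving-average factor in its third blob, so the `mean` and `var` passed here are assumed to be already divided by that factor. If the convolution has no bias term, pass zeros.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical container for one convolution layer's parameters.
// Weights are flattened as [out_channels][in_channels/group * kh * kw],
// matching the per-output-channel order of a Caffe conv weight blob.
struct ConvParams {
    std::vector<float> weights;
    std::vector<float> bias;  // one entry per output channel (zeros if none)
    std::size_t out_channels;
};

// Folds y = gamma * (conv(x) - mean) / sqrt(var + eps) + beta into the conv.
// Assumption: mean/var are the final per-channel statistics, i.e. Caffe's
// stored running sums already divided by the moving-average factor.
ConvParams FoldBatchNormScale(const ConvParams& conv,
                              const std::vector<float>& mean,
                              const std::vector<float>& var,
                              const std::vector<float>& gamma,
                              const std::vector<float>& beta,
                              float eps = 1e-5f) {
    const std::size_t c = conv.out_channels;
    assert(mean.size() == c && var.size() == c);
    assert(gamma.size() == c && beta.size() == c);
    assert(conv.bias.size() == c && conv.weights.size() % c == 0);

    ConvParams fused = conv;
    const std::size_t per_channel = conv.weights.size() / c;
    for (std::size_t o = 0; o < c; ++o) {
        // Per-channel multiplier shared by every weight of output channel o.
        const float factor = gamma[o] / std::sqrt(var[o] + eps);
        for (std::size_t i = 0; i < per_channel; ++i) {
            fused.weights[o * per_channel + i] *= factor;
        }
        fused.bias[o] = (conv.bias[o] - mean[o]) * factor + beta[o];
    }
    return fused;
}
```

After folding, the BatchNorm and Scale layers would be dropped from the deploy prototxt and the convolution given a bias term that holds `fused.bias`.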

Other models

Networks without depthwise convolutions are available in Yolo-Model-Zoo.

| Network | mAP | Resolution | MACC | Params |
|---|---|---|---|---|
| PVA-YOLOv3 | 0.703 | 416 | 2.55G | 4.72M |
| Pelee-YOLOv3 | 0.703 | 416 | 4.25G | 3.85M |
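The MACC column counts multiply-accumulate operations. To illustrate why depthwise networks are cheaper, the sketch below applies the usual per-layer counting formulas, with stride and padding folded into the output size h x w and bias ignored. The function names are hypothetical; this is not the tool used to produce the numbers in the tables.

```cpp
#include <cstdint>

// MACCs of a standard k x k convolution producing an h x w output,
// with c_in input channels and c_out output channels.
constexpr std::uint64_t StandardConvMacc(std::uint64_t h, std::uint64_t w,
                                         std::uint64_t c_in, std::uint64_t c_out,
                                         std::uint64_t k) {
    return h * w * c_in * c_out * k * k;
}

// MACCs of a depthwise-separable replacement: a k x k depthwise conv
// (one filter per input channel) plus a 1 x 1 pointwise conv c_in -> c_out.
constexpr std::uint64_t DepthwiseSeparableMacc(std::uint64_t h, std::uint64_t w,
                                               std::uint64_t c_in, std::uint64_t c_out,
                                               std::uint64_t k) {
    return h * w * c_in * k * k      // depthwise part
         + h * w * c_in * c_out;     // pointwise part
}
```

For a 13x13 output with 512 input channels, 1024 output channels and a 3x3 kernel, this gives 797,442,048 MACCs for the standard convolution versus 89,383,424 for the depthwise-separable pair, roughly 8.9x fewer.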

CMake Build

> git clone https://github.com/eric612/MobileNet-YOLO.git

> cd MobileNet-YOLO/

> mkdir build

> cd build

> cmake ..

> make -j4

Training

Download lmdb

Unzip into $caffe_root/

Check that the paths `$caffe_root/examples/VOC0712/VOC0712_trainval_lmdb` and `$caffe_root/examples/VOC0712/VOC0712_test_lmdb` exist.
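The check above can be scripted. The sketch below uses C++17 `std::filesystem` and only tests that the two directories named above exist; it does not validate the LMDB contents. `MissingLmdbDirs` is a hypothetical helper, not part of this repository.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// Returns the expected VOC0712 LMDB directories (relative to caffe_root)
// that are not present. Only directory existence is checked.
std::vector<std::string> MissingLmdbDirs(const fs::path& caffe_root) {
    assert(!caffe_root.empty() && "caffe_root must be set");
    const std::vector<std::string> expected = {
        "examples/VOC0712/VOC0712_trainval_lmdb",
        "examples/VOC0712/VOC0712_test_lmdb",
    };
    std::vector<std::string> missing;
    for (const std::string& rel : expected) {
        // is_directory returns false (without throwing) for a missing path.
        if (!fs::is_directory(caffe_root / rel)) {
            missing.push_back(rel);
        }
    }
    return missing;
}
```

Calling it with the value of `$caffe_root` before running `train_yolo.sh` should return an empty vector.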

Download the pretrained weights and save them in `$caffe_root/model/convert`.

> cd $caffe_root/

> sh train_yolo.sh

Training Darknet YOLOv2

> cd $caffe_root/

> sh train_darknet.sh

Demo

> cd $caffe_root/

> sh demo_yolo_lite.sh

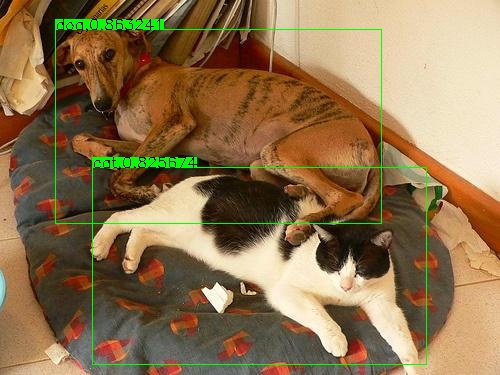

If the model loads successfully, an image window like the one above will appear.

Vehicle Detection

Class names

const char* CLASSES2[6] = { "__background__", "bicycle", "car", "motorbike", "person", "cones" };
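Because `CLASSES2` starts with a `__background__` entry, a predicted label k presumably indexes the array directly. The sketch below wraps that lookup with a bounds check. `ClassName` is a hypothetical helper, not part of the demo code, and the mapping assumes the detection output reports 0 for background, as the array layout suggests.

```cpp
#include <cstddef>

// Class list from the vehicle-detection demo; index 0 is background.
constexpr const char* CLASSES2[6] = {"__background__", "bicycle", "car",
                                     "motorbike", "person", "cones"};

// Returns the class name for a predicted label, or nullptr when the label
// falls outside the table (e.g. a model trained with more classes).
constexpr const char* ClassName(int label) {
    return (label >= 0 &&
            static_cast<std::size_t>(label) < sizeof(CLASSES2) / sizeof(CLASSES2[0]))
               ? CLASSES2[label]
               : nullptr;
}
```

Returning `nullptr` instead of indexing blindly keeps a mismatch between the model's class count and this table from reading past the end of the array.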

Maintenance

I would appreciate help with:

- Migrating to the Movidius Neural Compute Stick

- Upgrading MobileNet to v2, or model tuning

Caffe

Caffe is a deep learning framework made with expression, speed, and modularity in mind. It is developed by Berkeley AI Research (BAIR)/The Berkeley Vision and Learning Center (BVLC) and community contributors.

Check out the project site for all the details like

- DIY Deep Learning for Vision with Caffe

- Tutorial Documentation

- BAIR reference models and the community model zoo

- Installation instructions

and step-by-step examples.

Custom distributions

- Intel Caffe (Optimized for CPU and support for multi-node), in particular Xeon processors (HSW, BDW, SKX, Xeon Phi).

- OpenCL Caffe e.g. for AMD or Intel devices.

- Windows Caffe

Community

Please join the caffe-users group or gitter chat to ask questions and talk about methods and models. Framework development discussions and thorough bug reports are collected on Issues.

Happy brewing!

License and Citation

Caffe is released under the BSD 2-Clause license. The BAIR/BVLC reference models are released for unrestricted use.

Please cite Caffe in your publications if it helps your research:

@article{jia2014caffe,
Author = {Jia, Yangqing and Shelhamer, Evan and Donahue, Jeff and Karayev, Sergey and Long, Jonathan and Girshick, Ross and Guadarrama, Sergio and Darrell, Trevor},
Journal = {arXiv preprint arXiv:1408.5093},
Title = {Caffe: Convolutional Architecture for Fast Feature Embedding},
Year = {2014}
}