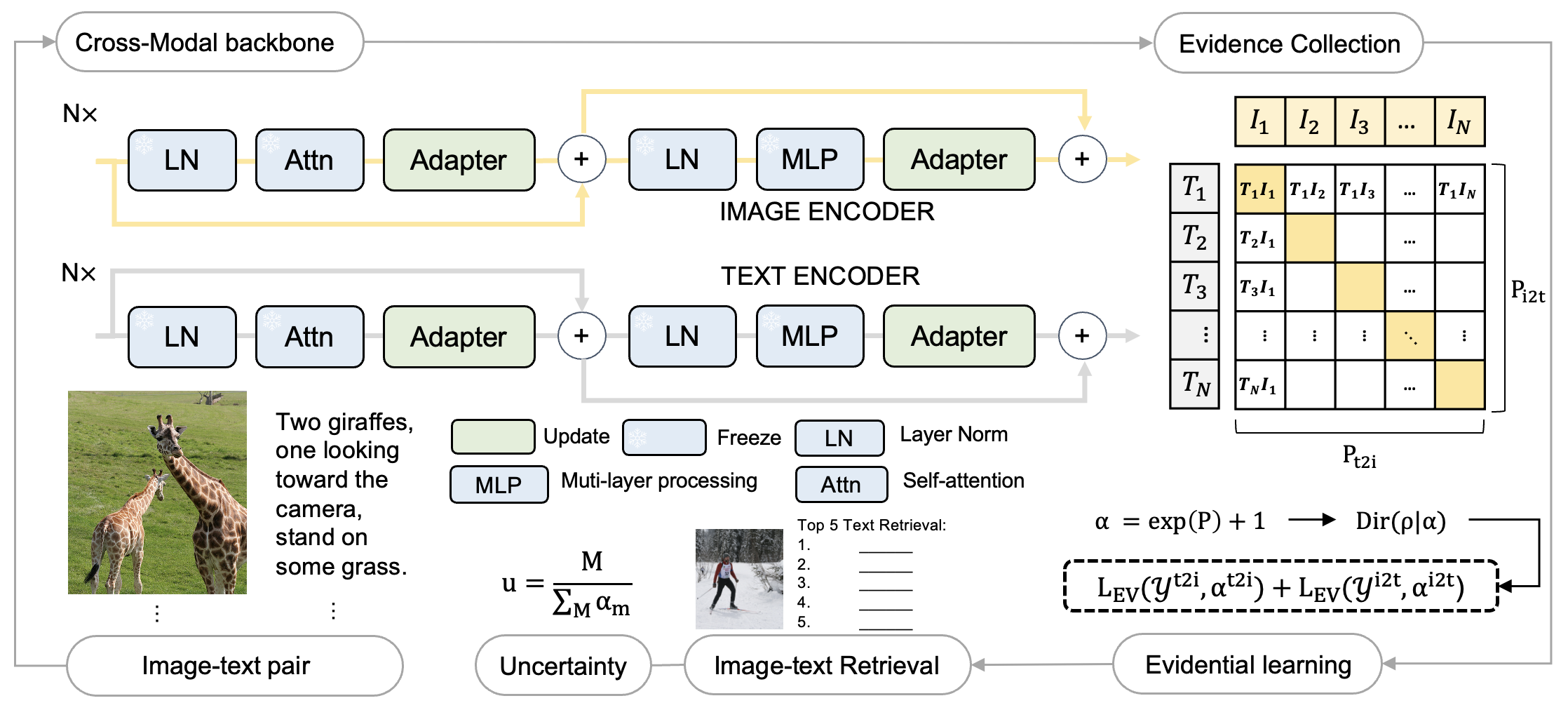

Evidential Language-Image Posterior (ELIP) leverages evidential uncertainties to robustly align web images with semantic knowledge across various out-of-distribution (OOD) cases. ELIP can be integrated into general image-text contrastive learning frameworks as an efficient fine-tuning approach that does not require additional data.
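To give a rough sense of what "evidential uncertainty" means in a contrastive setting, the sketch below applies the standard subjective-logic recipe from evidential deep learning to one row of image-to-text similarity logits:

- Non-negative evidence `e_k` is derived from each logit.
- The Dirichlet parameters are `alpha_k = e_k + 1`.
- The beliefs are `b_k = e_k / S`.
- The uncertainty mass is `u = K / S`, where `S` is the Dirichlet strength and `K` is the number of candidate captions.

The helper name `evidential_uncertainty`, the exponential evidence mapping, and the clamp value are illustrative assumptions. ELIP's actual formulation may differ, so refer to the paper for the exact losses.

```python
import math
from typing import List, Tuple


def evidential_uncertainty(logits: List[float], clamp: float = 10.0) -> Tuple[List[float], float]:
    """Hypothetical helper: turn one row of image-to-text similarity logits into
    Dirichlet belief masses and a single uncertainty mass (subjective logic).

    Assumption: evidence = exp(min(logit, clamp)); ELIP may use another mapping.
    """
    if not logits:
        raise ValueError("need at least one candidate caption")
    # Non-negative evidence per caption; clamping keeps exp() from overflowing.
    evidence = [math.exp(min(x, clamp)) for x in logits]
    # Dirichlet concentration parameters alpha_k = e_k + 1.
    alpha = [e + 1.0 for e in evidence]
    # Dirichlet strength S = sum_k alpha_k.
    strength = sum(alpha)
    # Belief mass b_k = e_k / S for each candidate caption.
    belief = [e / strength for e in evidence]
    # Uncertainty mass u = K / S; the beliefs and u sum to 1.
    uncertainty = len(logits) / strength
    return belief, uncertainty


if __name__ == "__main__":
    # A confident match (one large logit) versus an ambiguous row (all equal).
    _, u_confident = evidential_uncertainty([5.0, 0.0, 0.0])
    _, u_ambiguous = evidential_uncertainty([0.0, 0.0, 0.0])
    print(f"confident: {u_confident:.3f}, ambiguous: {u_ambiguous:.3f}")
```

Running the script prints `confident: 0.020, ambiguous: 0.500`. When one caption clearly stands out, little uncertainty mass remains; when all captions score equally, half of the mass stays uncertain.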

First, install PyTorch 1.7.1 (or later) and torchvision, then install the remaining dependencies listed in `requirements.txt`. On a CUDA GPU machine, the following will do the trick:

```bash
$ conda install --yes -c pytorch pytorch=1.7.1 torchvision cudatoolkit=11.0
$ pip install -r requirements.txt
```

Replace `cudatoolkit=11.0` above with the appropriate CUDA version on your machine, or with `cpuonly` when installing on a machine without a GPU.

Download the raw images of COCO2014 and Flickr from their official websites. We train the model with the annotation file from the Karpathy split of COCO.
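The Karpathy split of COCO is commonly distributed as a single `dataset_coco.json` file. Each entry in its `images` list carries:

- a `filepath` (e.g. `train2014`),
- a `filename`,
- a `split` field (`train`, `restval`, `val`, or `test`),
- a list of `sentences` with `raw` captions.

This repo may instead expect pre-converted per-split annotation files, so check the annotation paths in the configs. Assuming the layout above, a minimal sketch for collecting (image path, caption) training pairs could look like the one below. The `load_karpathy_pairs` name and the choice to train on `train` plus `restval` are illustrative assumptions, not taken from this repo.

```python
import json
import os
from typing import List, Sequence, Tuple


def load_karpathy_pairs(
    ann_file: str,
    image_root: str,
    splits: Sequence[str] = ("train", "restval"),
) -> List[Tuple[str, str]]:
    """Hypothetical loader: return (image_path, caption) pairs for the given
    Karpathy splits, assuming the dataset_coco.json layout."""
    with open(ann_file, "r", encoding="utf-8") as f:
        data = json.load(f)

    pairs: List[Tuple[str, str]] = []
    for img in data["images"]:
        if img["split"] not in splits:
            continue  # skip images outside the requested splits
        # COCO entries store the subfolder (e.g. "train2014") in "filepath";
        # other Karpathy files may omit it, so fall back to an empty component.
        path = os.path.join(image_root, img.get("filepath", ""), img["filename"])
        for sent in img["sentences"]:
            pairs.append((path, sent["raw"].strip()))  # one pair per caption
    return pairs
```

Each image typically has about five captions, so every image contributes several pairs.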

To generate our OOD images, we follow this work (link).
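The perturbation pipeline of the referenced work is not reproduced here. Purely as a generic illustration of an image-level distribution shift, the sketch below adds seeded Gaussian noise at one of five severity levels to 8-bit pixel values and clips the result. The helper name `gaussian_noise`, the flat pixel list, and the `NOISE_SCALES` values are all illustrative assumptions, not the settings used to build ELIP's OOD images.

```python
import random
from typing import List

# Illustrative noise scales (fraction of the [0, 1] intensity range), one per
# severity level; assumed values, not the ones used for ELIP's OOD images.
NOISE_SCALES = [0.08, 0.12, 0.18, 0.26, 0.38]


def gaussian_noise(pixels: List[float], severity: int = 1, seed: int = 0) -> List[float]:
    """Hypothetical helper: add Gaussian noise to 8-bit pixel values (0-255)
    and clip the result back to the valid range."""
    if not 1 <= severity <= len(NOISE_SCALES):
        raise ValueError(f"severity must be in 1..{len(NOISE_SCALES)}")
    rng = random.Random(seed)  # seeded so the perturbed set is reproducible
    sigma = NOISE_SCALES[severity - 1]
    out = []
    for p in pixels:
        x = p / 255.0 + rng.gauss(0.0, sigma)  # perturb in [0, 1] space
        out.append(min(max(x, 0.0), 1.0) * 255.0)  # clip, then rescale to 0-255
    return out
```

A fixed seed keeps the perturbed images identical across runs, so every model is evaluated on the same OOD samples.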

To evaluate the fine-tuned ELIP:

- Modify the config files located in `configs/`:
  - `*_root`: the root paths of the images and annotations.
  - `dataset`: `coco` or `flickr`.
  - `pretrained`: the fine-tuned weights of ELIP.

- Run the following script, with `configs/retrieval_*_eval.yaml` pointing to the evaluation config you modified:

  ```bash
  python -m torch.distributed.run --nproc_per_node=1 \
    train_retrieval.py \
    --config configs/retrieval_*_eval.yaml \
    --output_dir /path/to/output \
    --evaluate
  ```

To fine-tune ELIP with multiple GPUs, run:

```bash
python -m torch.distributed.run --nproc_per_node=4 \
  train_retrieval.py \
  --config configs/retrieval_coco_finetune-noEV.yaml \
  --output_dir /path/to/output \
  --seed 255
```

If you find this code useful for your research, please consider citing:

```bibtex
@inproceedings{ELIP,
  author = {Sun, Guohao and Bai, Yue and Yang, Xueying and Fang, Yi and Fu, Yun and Tao, Zhiqiang},
  title = {Aligning Out-of-Distribution Web Images and Caption Semantics via Evidential Learning},
  year = {2024},
  booktitle = {Proceedings of the ACM on Web Conference 2024},
}
```

The implementation of ELIP relies on sources from CLIP. We thank the original authors for open-sourcing their code.