In broad real-world scenarios, proactively asking a question requires more understanding and background knowledge than answering.

SQ-LLaVA: Self-Questioning for Vision-Language Assistant [paper]

Guohao Sun, Can Qin, Jiamian Wang, Zeyuan Chen, Ran Xu, Zhiqiang Tao

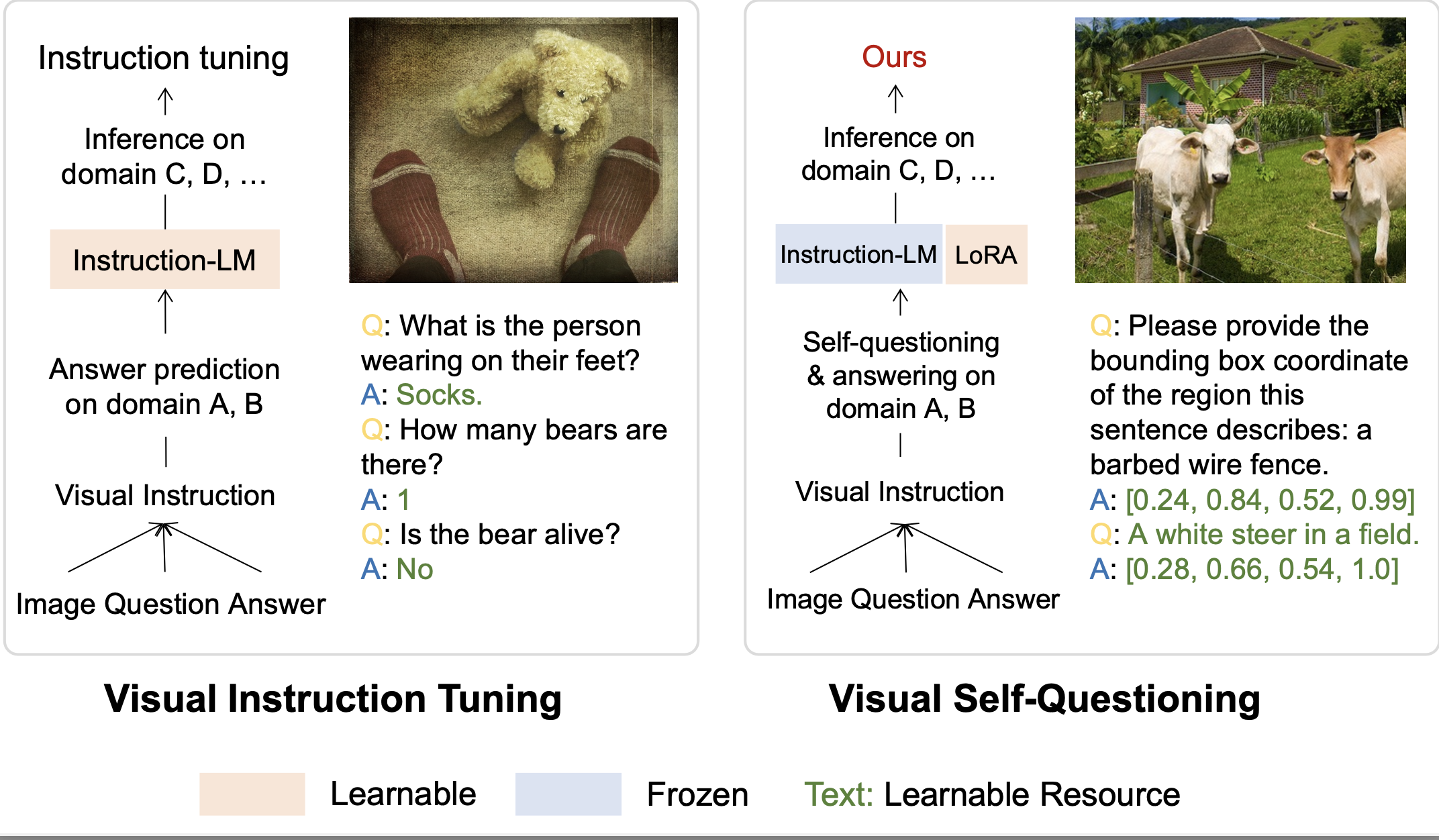

A high-level comparison between visual instruction tuning and visual self-questioning (ours) for a vision-language assistant.

- 2024.07.01 🌟 Our paper has been accepted by ECCV 2024.
- 2024.03.10 🌟 Our paper and code were released!

- Install Package

conda create -n llava python=3.10 -y

conda activate llava

pip install --upgrade pip # enable PEP 660 support

cd SQ-LLaVA

pip install -e .

- Install additional packages for training cases

pip install -e ".[train]"

pip install flash-attn --no-build-isolation

To test visual self-questioning, please run run_sq.sh with the following settings.

- --version v1_sq: use the self-questioning template.
- --n_shot 3: the number of generated questions.

CUDA_VISIBLE_DEVICES=0 python visual_questioning.py \
    --model_path path/to/sqllava-v1.7-7b-lora-gpt4v-cluster-sq-vloraPTonly \
    --model_base Lin-Chen/ShareGPT4V-7B_Pretrained_vit-large336-l12_vicuna-7b-v1.5 \
    --conv-mode="v1_sq" \
    --lora_pretrain path/to/sqllava-v1.7-7b-lora-gpt4v-cluster-sq-vloraPTonly \
    --n_shot 3

| Data file name | Size |
|---|---|
| sharegpt4v_instruct_gpt4-vision_cap100k.json | 134 MB |
| share-captioner_coco_lcs_sam_1246k_1107.json | 1.5 GB |
| sharegpt4v_mix665k_cap23k_coco-ap9k_lcs3k_sam9k_div2k.json | 1.2 GB |
| LLaVA | 400 MB |

For your convenience, please follow download_data.sh for data preparation.

- LAION-CC-SBU-558K: images.zip

- COCO: train2017

- WebData: images. Only for academic usage.

- SAM: images. We only use 000000~000050.tar for now.

- GQA: images

- OCR-VQA: download script. We save all files as .jpg

- TextVQA: trainvalimages

- VisualGenome: part1, part2

Then, organize the data as follows in ./mixTraindata:

Visual-self-qa
├── ...
├── mixTraindata
│   ├── llava
│   │   ├── llava_pretrain
│   │   │   ├── images
│   ├── coco
│   │   ├── train2017
│   ├── sam
│   │   ├── images
│   ├── gqa
│   │   ├── images
│   ├── ocr_vqa
│   │   ├── images
│   ├── textvqa
│   │   ├── train_images
│   ├── vg
│   │   ├── VG_100K
│   │   ├── VG_100K_2
│   ├── share_textvqa
│   │   ├── images
│   ├── web-celebrity
│   │   ├── images
│   ├── web-landmark
│   │   ├── images
│   ├── wikiart
│   │   ├── images
│   ├── share-captioner_coco_lcs_sam_1246k_1107.json
│   ├── sharegpt4v_instruct_gpt4-vision_cap100k.json
│   ├── sharegpt4v_mix665k_cap23k_coco-ap9k_lcs3k_sam9k_div2k.json
│   ├── blip_laion_cc_sbu_558k.json
│   ├── llava_v1_5_mix665k.json
├── ...
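Before launching training, it can help to confirm that the layout above is in place. The following is a minimal sketch, not part of the repository: the helper name `missing_entries` is hypothetical, and the directory and file names are copied from the tree above. It only checks that each entry exists, not that the downloads are complete.

```python
from pathlib import Path

# Sub-directories expected under ./mixTraindata (taken from the tree above).
EXPECTED_DIRS = [
    "llava/llava_pretrain/images",
    "coco/train2017",
    "sam/images",
    "gqa/images",
    "ocr_vqa/images",
    "textvqa/train_images",
    "vg/VG_100K",
    "vg/VG_100K_2",
    "share_textvqa/images",
    "web-celebrity/images",
    "web-landmark/images",
    "wikiart/images",
]

# Annotation files expected directly under ./mixTraindata.
EXPECTED_FILES = [
    "share-captioner_coco_lcs_sam_1246k_1107.json",
    "sharegpt4v_instruct_gpt4-vision_cap100k.json",
    "sharegpt4v_mix665k_cap23k_coco-ap9k_lcs3k_sam9k_div2k.json",
    "blip_laion_cc_sbu_558k.json",
    "llava_v1_5_mix665k.json",
]


def missing_entries(root):
    """Return the expected directories and files that are absent under root."""
    root = Path(root)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing


if __name__ == "__main__":
    # Hypothetical usage: run from the repository root.
    for entry in missing_entries("./mixTraindata"):
        print("missing:", entry)
```

An empty result means every directory and annotation file named in the tree was found.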

Training consists of two stages: (1) feature alignment stage; (2) visual self-questioning instruction tuning stage, teaching the model to ask questions and follow multimodal instructions.

To train on fewer GPUs, you can reduce the per_device_train_batch_size and increase the gradient_accumulation_steps accordingly. Always keep the global batch size the same: per_device_train_batch_size x gradient_accumulation_steps x num_gpus.
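As a worked example of that rule, the sketch below solves for the gradient accumulation steps needed to hit a target global batch size. The function name is hypothetical, and the per-device batch sizes and GPU counts in the usage lines are illustrative assumptions; only the global batch sizes (256 for pretraining, 128 for finetuning) come from this README.

```python
def gradient_accumulation_steps(global_batch_size, per_device_train_batch_size, num_gpus):
    """Solve global = per_device x accumulation x num_gpus for the accumulation steps."""
    samples_per_step = per_device_train_batch_size * num_gpus
    if global_batch_size % samples_per_step != 0:
        raise ValueError(
            f"global batch size {global_batch_size} is not a multiple of "
            f"per_device_train_batch_size x num_gpus = {samples_per_step}"
        )
    return global_batch_size // samples_per_step


# Illustrative settings only: 4 GPUs with 8 samples each for finetuning,
# and 2 GPUs with 32 samples each for pretraining.
finetune_steps = gradient_accumulation_steps(128, 8, 4)
pretrain_steps = gradient_accumulation_steps(256, 32, 2)
print(finetune_steps, pretrain_steps)
```

If the division is not exact, the global batch size cannot be preserved with those settings, so the sketch raises an error rather than rounding.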

The hyperparameters used in both pretraining and finetuning are provided below.

- Pretraining

| Model | Global Batch Size | Learning rate | Epochs | Max length | Weight decay |
|---|---|---|---|---|---|
| SQ-LLaVA | 256 | 1e-3 | 1 | 2048 | 0 |

- Finetuning

| Model | Global Batch Size | Learning rate | Epochs | Max length | Weight decay |
|---|---|---|---|---|---|
| SQ-LLaVA | 128 | 2e-4 | 1 | 2048 | 0 |

Training script with DeepSpeed ZeRO-2: pretrain.sh.

- --mm_projector_type cluster: the prototype extractor & a two-layer MLP vision-language connector.
- --vision_tower openai/clip-vit-large-patch14-336: CLIP ViT-L/14 336px.

Instruction tuning:

Training script with DeepSpeed ZeRO-3 and LoRA: finetune_lora_clu_sq.sh.

- --mm_projector_type cluster: the prototype extractor & a two-layer MLP vision-language connector.
- --mm_projector_type mlp2x_gelu: a two-layer MLP vision-language connector.
- --vision_tower openai/clip-vit-large-patch14-336: CLIP ViT-L/14 336px.
- --image_aspect_ratio pad: this pads the non-square images to square, instead of cropping them; it slightly reduces hallucination.
- --group_by_modality_length True: this should only be used when your instruction tuning dataset contains both language (e.g. ShareGPT) and multimodal (e.g. LLaVA-Instruct). It makes the training sampler only sample a single modality (either image or language) during training, which we observe to speed up training by ~25%, and does not affect the final outcome.
- --version v1_sq: training for visual self-questioning.
- --vit_lora_enable: optimize vision encoder using vit lora.
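To illustrate what padding a non-square image to a square means, here is a minimal pure-Python sketch on a nested list of pixel values. It is not the repository's implementation: the function name `pad_to_square`, the centered placement, and the caller-supplied fill value are all assumptions made for the example.

```python
def pad_to_square(pixels, fill):
    """Pad a 2D grid (list of equal-length rows) to a square canvas, keeping all pixels."""
    height = len(pixels)
    width = len(pixels[0])
    side = max(height, width)
    # Offsets that center the original image on the square canvas (assumed placement).
    top = (side - height) // 2
    left = (side - width) // 2
    canvas = [[fill] * side for _ in range(side)]
    for r, row in enumerate(pixels):
        canvas[top + r][left:left + width] = row
    return canvas


# A 1 x 4 "image" becomes 4 x 4; nothing is cropped away.
for row in pad_to_square([[1, 2, 3, 4]], fill=0):
    print(row)
```

Cropping the same 1 x 4 input to a square would discard three of its four pixels, which is the information loss that padding avoids.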

Prepare data: please download the raw images of the datasets (COCO, Flickr, nocaps, conceptual) for image captioning tasks.

- Evaluate models on image captioning. See captioning.sh for evaluation on the 4 datasets.

- Evaluate models on a diverse set of 12 benchmarks. To ensure reproducibility, we evaluate the models with greedy decoding. We do not use beam search, so that the inference process stays consistent with the real-time outputs of the chat demo.

See Evaluation.md.

If you find this code to be useful for your research, please consider citing.

@inproceedings{sun2024sq,
  title={SQ-LLaVA: Self-Questioning for Large Vision-Language Assistant},
  author={Sun, Guohao and Qin, Can and Wang, Jiamian and Chen, Zeyuan and Xu, Ran and Tao, Zhiqiang},
  year = {2024},
  booktitle = {ECCV},
}

- LLaVA: the codebase we built upon.