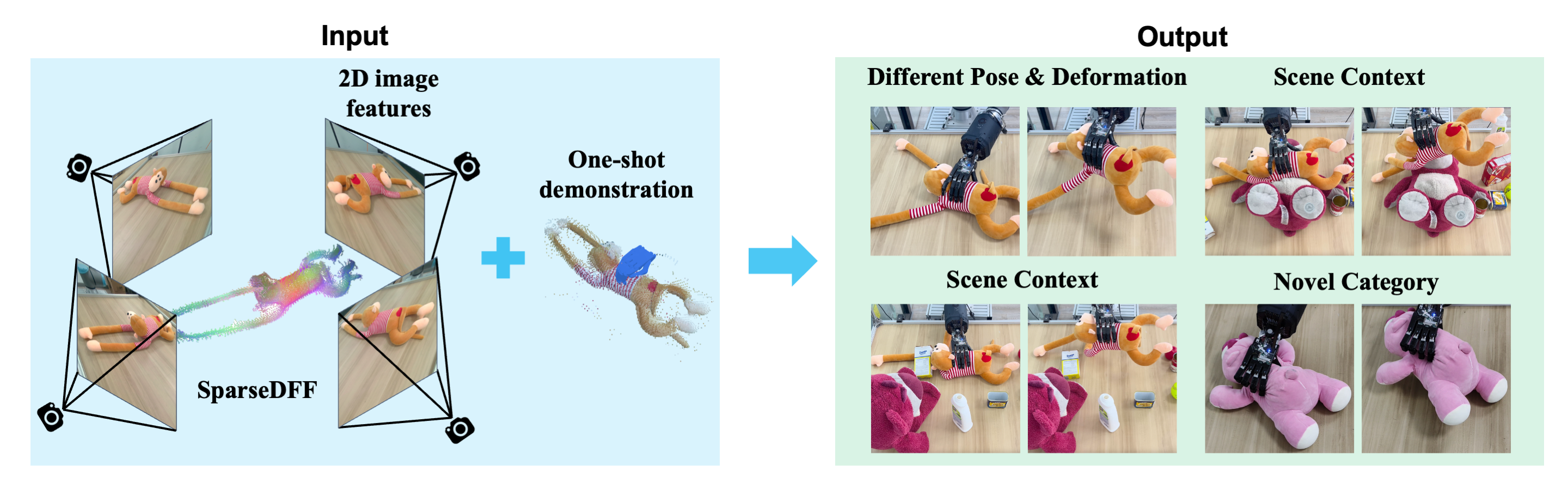

We introduce a novel method for acquiring view-consistent 3D DFFs from sparse RGBD observations, enabling one-shot learning of dexterous manipulations that are transferable to novel scenes.

ICLR 2024 Accepted

- A brief Introduction to SparseDFF.

- Everything mentioned in the paper and a step-by-step guide to use it!

- A friendly and useful guide for Kinect installation and everything you need for data collection! Additional code for automatic image capturing during manipulation transfer at runtime.

- Additional code for end-effectors (EE) besides the Shadow Hand (coming soon!)

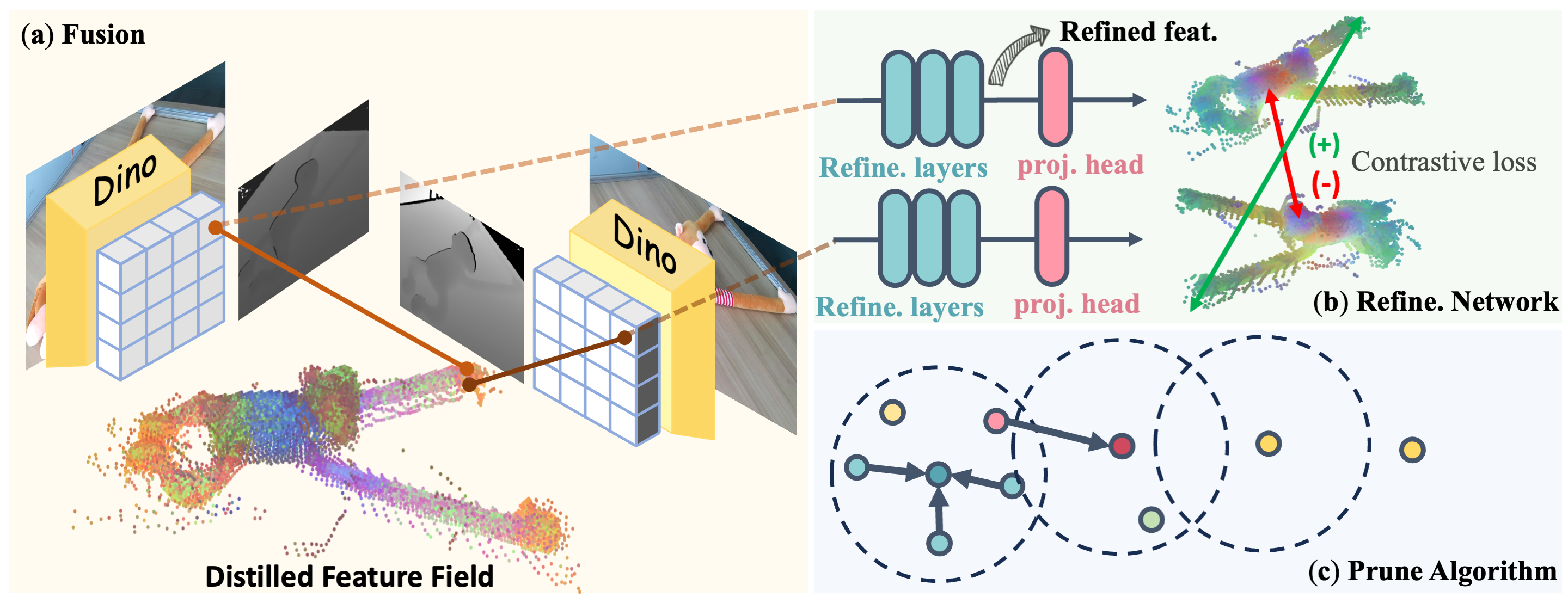

Then, a lightweight feature refinement network is optimized with a contrastive loss between pairwise views after the image features are back-projected onto the 3D point cloud.

Additionally, we implement a point-pruning mechanism to augment feature continuity within each local neighborhood.
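To make the pairwise contrastive objective more concrete, here is a minimal sketch using an InfoNCE-style loss in NumPy. This is an illustration under stated assumptions, not the loss implemented in this repository: the function name `info_nce_loss`, the temperature value, and the assumption that row `i` of both inputs describes the same 3D point seen from two cameras are all hypothetical. The point-pruning step is not covered here.

```python
import numpy as np


def info_nce_loss(feats_a, feats_b, temperature=0.07):
    """InfoNCE-style loss between two views of the same N points.

    feats_a[i] and feats_b[i] are assumed (hypothetically) to be the
    features of the same 3D point back-projected from two different views.
    """
    # L2-normalise so that dot products are cosine similarities.
    a = feats_a / np.linalg.norm(feats_a, axis=1, keepdims=True)
    b = feats_b / np.linalg.norm(feats_b, axis=1, keepdims=True)

    logits = (a @ b.T) / temperature              # (N, N) similarity matrix
    logits -= logits.max(axis=1, keepdims=True)   # numerical stability

    # Row-wise log-softmax; the diagonal holds the positive pairs.
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))


rng = np.random.default_rng(0)
view_a = rng.normal(size=(8, 16))

# Nearly identical features across views should give a low loss ...
aligned = info_nce_loss(view_a, view_a + 0.01 * rng.normal(size=(8, 16)))
# ... while mismatched correspondences should give a higher one.
shuffled = info_nce_loss(view_a, view_a[::-1].copy())
print(aligned, shuffled)
```

The loss pulls features of the same point together across views and pushes features of different points apart, which is the behaviour a view-consistent feature field needs.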

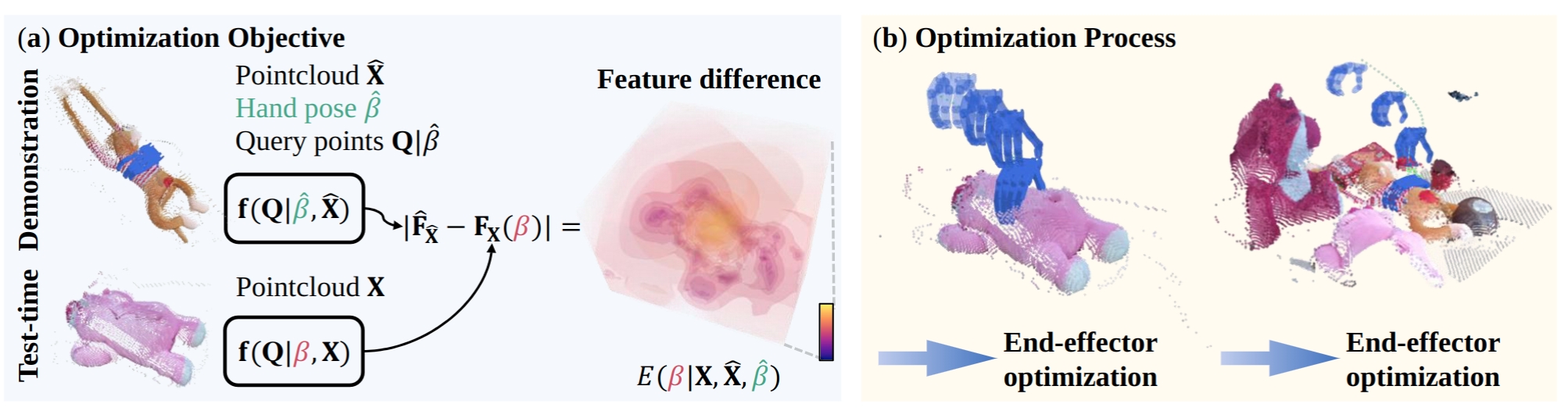

By establishing coherent feature fields on both the source and target scenes, we devise an energy function that facilitates the minimization of feature discrepancies w.r.t. the end-effector parameters between the demonstration and the target manipulation.

We provide sample data that lets you run manipulation transfer directly on our collected data, along with code for visualizing the experimental results. We also provide code for data collection using Kinect. For additional environment configuration and instructions, please refer to the Data Collection part.

We provide bash scripts for downloading the example data and the pretrained models.

git clone --recurse-submodules git@github.com:Halowangqx/SparseDFF.git

conda create -n sparsedff python=3.9

conda activate sparsedff

# Install submodules

cd ./SparseDFF/thirdparty_module/dinov2

pip install -r requirements.txt

cd ../pytorch_kinematics

pip install -e .

cd ../pytorch3d

pip install -e .

cd ../segment-anything

pip install -e .

# Install the remaining dependencies

pip install open3d opencv-python scikit-image trimesh lxml pyvirtualdisplay pyglet==1.5.15

# Download the pretrained models for SAM and DINOv2

bash pth_download.sh

# download the example data

bash download.sh

If you simply wish to run our model (including both training and inference) on pre-captured data, there's no need for the following installation steps. Go ahead and start playing with SparseDFF directly!

However, if you intend to collect your own data using Azure Kinect, please proceed with the following setup.

Friendly and Useful Installation guide for Kinect SDK

If you'd like to quickly experience the results of our model, you can directly use our default example data for testing.

- Configuration Management: All configurations are managed via the config.yaml file. You can find detailed usage instructions and comments for each parameter within this file.

- Running the Test: To test using the default example data, execute the following command:

python unified_optimize.py

- A Brief Visualization of the Result: After completing the test, if visualize is set to true in config.yaml, a clear trimesh visualization will be presented. Otherwise, the visualization result will be saved as an image.

If you wish to test with your own data, follow these steps!

You need to complete the configuration of the Kinect Camera before running the subsequent code. You can also collect data in your own way and then organize it into the same data structure.
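If you collect data by other means, the sketch below shows one way to write a single camera's arrays into the per-camera folder layout shown in the Structure of the Data listing below. It is a sketch under assumptions, not the repository's capture code: the helper names `backproject` and `save_camera_capture`, the 3x3 pinhole form of the intrinsic matrix, the flattened `(H*W, 3)` shape of the points, and the placeholder serial number are all hypothetical, so check them against the example data before relying on them. The `colors.png` preview is omitted.

```python
import tempfile
from pathlib import Path

import numpy as np


def backproject(depth, K):
    """Pinhole back-projection of an (H, W) depth image to (H*W, 3) points.

    K is assumed to be a 3x3 intrinsic matrix [[fx, 0, cx], [0, fy, cy], [0, 0, 1]].
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel coordinates
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.stack([x, y, depth], axis=-1).reshape(-1, 3)


def save_camera_capture(root, serial, depth, colors, K, distortion):
    """Write one camera's data to <root>/<serial>/ using the listed file names."""
    cam_dir = Path(root) / serial
    cam_dir.mkdir(parents=True, exist_ok=True)
    np.save(cam_dir / "depth.npy", depth)
    np.save(cam_dir / "colors.npy", colors)
    np.save(cam_dir / "intrinsic.npy", K)
    np.save(cam_dir / "points.npy", backproject(depth, K))
    np.save(cam_dir / "distortion.npy", distortion)
    return cam_dir


# Tiny synthetic example: a 2x3 depth image, 2 units away from the camera.
depth = np.full((2, 3), 2.0)
colors = np.zeros((2, 3, 3), dtype=np.uint8)
K = np.array([[2.0, 0.0, 1.0], [0.0, 2.0, 1.0], [0.0, 0.0, 1.0]])
pts = backproject(depth, K)

with tempfile.TemporaryDirectory() as tmp:
    cam_dir = save_camera_capture(
        Path(tmp) / "my_scene", "000000000000", depth, colors, K, np.zeros(5)
    )
    saved = sorted(p.name for p in cam_dir.iterdir())
print(saved)
```

Calling `save_camera_capture` once per camera under the same scene folder yields one sub-folder per serial number, mirroring the multi-camera layout.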

We utilize four Azure Kinects for data collection. The steps are as follows:

Capturing 3D Data: Use camera/capture_3d.py to collect the data required for the model:

cd camera

python capture_3d.py --save

Structure of the Data:

|____20231010_monkey_original # name

| |____000262413912 # the serial number of the camera

| | |____depth.npy # depth img

| | |____colors.npy # rgb img

| | |____intrinsic.npy # intrinsic of the camera

| | |____points.npy # 3D points calculated from the depth and colors

| | |____colors.png # rgb img (.png for quick view)

| | |____distortion.npy # Distortion

| | ... # Data of other cameras

To train the refinement model corresponding to your data, follow these steps:

- Data Preparation: Navigate to the refinement directory and execute the following commands:

cd refinement

python read.py --img_data_path 20231010_monkey_original

- Start Training: Run the following command to train the model:

python train_linear_probe.py --mode glayer --key 0

- Model Configuration: After training, you can find the corresponding checkpoint in the refinement directory. Then update model_path in config.yaml to the appropriate path.

Before proceeding with the testing, it's important to ensure that your newly collected reference data, the trained refinement network, and the test data are correctly specified in the config.yaml file. Once these elements are confirmed and properly set up in the configuration, you can directly execute:

python unified_optimize.py

We also provide a pipeline for automatic image capturing during manipulation transfer at runtime.

- Configure the Camera Environment: Set up the camera environment as described in the data collection section.

- Automatic Image Capture: After configuring the camera environment, change the value of data2 in the config.yaml file to null to enable automatic image capturing at runtime.
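The effect of setting data2 to null can be pictured with the small sketch below. It only illustrates the branching idea and is not the logic in unified_optimize.py: the function name `resolve_test_source` and the returned labels are hypothetical. The one fact it relies on is that a YAML `null` becomes Python `None` once the file is parsed.

```python
def resolve_test_source(cfg):
    """Decide where the test scene comes from, given a parsed config dict.

    Returns ("capture", None) when data2 is null, meaning images should be
    grabbed from the cameras at runtime, or ("disk", <name>) otherwise.
    """
    data2 = cfg.get("data2")
    if data2 is None:  # YAML `null` is parsed to Python None
        return ("capture", None)
    return ("disk", str(data2))


# Pre-captured test scene (the scene name here is a made-up example).
print(resolve_test_source({"data2": "my_test_scene"}))
# Automatic capture at runtime.
print(resolve_test_source({"data2": None}))
```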

With these steps, you can either quickly test our model with provided materials or delve deeper into experiments and model training with your own data. We look forward to seeing the innovative applications you create using our model!

We provide the vis_features.py script in order to perform further fine-grained visualization on the data in the ./data directory.

All visualizations are saved in the ./visulize directory.

Visualize the feature field using the similarity between

python vis_features.py --mode 3Dsim_volume --key 0

Visualize the optimization result:

python vis_features.py --mode result_vis --key=1 --pca --with_hand
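A common way to display high-dimensional per-point features is to project them onto their first three principal components and use those as RGB colors. Whether the --pca flag does exactly this is an assumption; the sketch below, with the hypothetical helper `pca_colors`, only shows the general technique in NumPy.

```python
import numpy as np


def pca_colors(features):
    """Map (N, D) features to (N, 3) RGB values in [0, 1] via PCA (needs D >= 3)."""
    centered = features - features.mean(axis=0, keepdims=True)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    projected = centered @ vt[:3].T
    # Rescale each of the three components independently to [0, 1].
    lo = projected.min(axis=0, keepdims=True)
    hi = projected.max(axis=0, keepdims=True)
    return (projected - lo) / np.maximum(hi - lo, 1e-12)


rng = np.random.default_rng(0)
point_features = rng.normal(size=(50, 16))  # stand-in for real point features
colors = pca_colors(point_features)
print(colors.shape)
```

Points with similar features end up with similar colors, which makes discontinuities in the feature field easy to spot.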

Visualize the feature similarity between all points in the reference point cloud, the test point cloud, and a point in the reference point cloud specified by --ref_idx.

python vis_features.py --mode 3D_similarity --ref_idx 496 --similarity l2
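The idea behind this mode can be sketched as follows: pick the feature of one reference point and score every other point by how close its feature is. This is a minimal NumPy illustration, not the code in vis_features.py. The helper name `similarity_to_reference`, the normalisation to [0, 1], and the `cosine` option are assumptions; only `l2` and `--ref_idx` appear in the command above.

```python
import numpy as np


def similarity_to_reference(ref_feats, query_feats, ref_idx, metric="l2"):
    """Score each query point against reference point ref_idx (1 = most similar)."""
    anchor = ref_feats[ref_idx]
    if metric == "l2":
        dist = np.linalg.norm(query_feats - anchor, axis=1)
        return 1.0 - dist / max(dist.max(), 1e-12)
    if metric == "cosine":  # hypothetical alternative, not taken from the README
        q = query_feats / np.linalg.norm(query_feats, axis=1, keepdims=True)
        a = anchor / np.linalg.norm(anchor)
        return 0.5 * (q @ a + 1.0)
    raise ValueError(f"unknown metric: {metric}")


rng = np.random.default_rng(0)
ref = rng.normal(size=(500, 8))    # stand-in for reference point features
test = rng.normal(size=(300, 8))   # stand-in for test point features

scores_ref = similarity_to_reference(ref, ref, ref_idx=496)
scores_test = similarity_to_reference(ref, test, ref_idx=496)
best_match = int(np.argmax(scores_test))  # most similar point in the test cloud
print(best_match)
```

Mapping the scores to a color map over both point clouds gives a picture of where the selected reference point finds its correspondences in the test scene.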