This demo and its accompanying tutorial show how to deploy an Apache Kafka® event streaming application that uses ksqlDB and Kafka Streams for stream processing. All components of Confluent Platform have security enabled end-to-end. Run the demo alongside the tutorial.

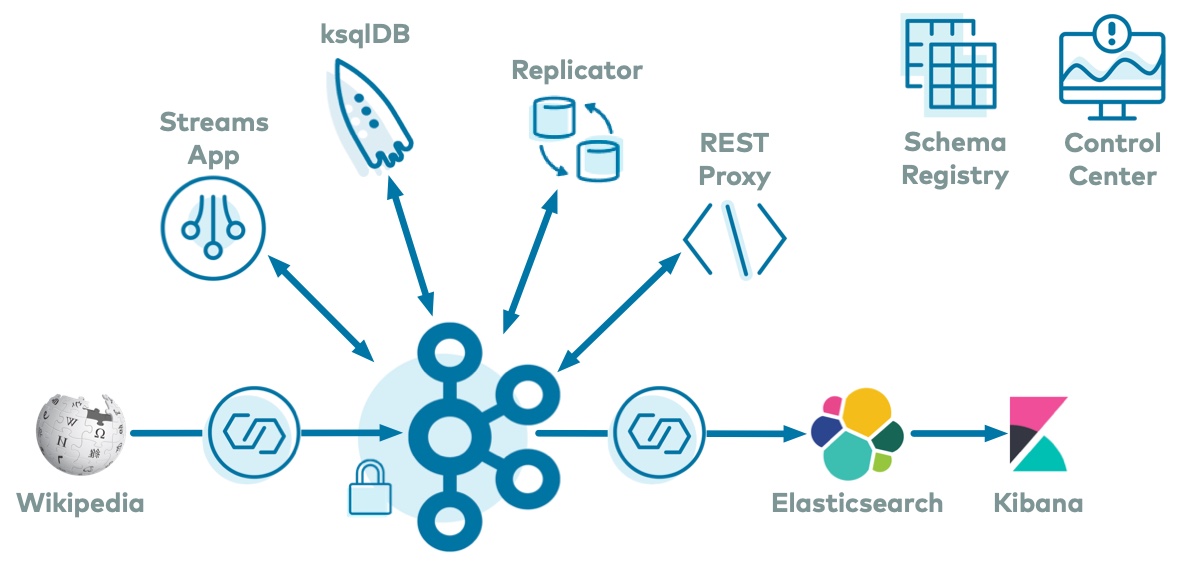

The use case is a Kafka event streaming application for real-time edits to real Wikipedia pages.

The Wikimedia Foundation runs IRC channels that publish edits to real wiki pages (e.g., #en.wikipedia, #en.wiktionary) in real time.

Using Kafka Connect, a Kafka source connector, kafka-connect-irc, streams raw messages from these IRC channels. A custom Kafka Connect transform, kafka-connect-transform-wikiedit, transforms these messages before they are written to a Kafka cluster.
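To make the transform step more concrete, here is a minimal Python sketch of the general idea: turning one raw IRC line into a structured record. It is not the code of kafka-connect-transform-wikiedit. The line layout matched by `EDIT_PATTERN`, the function name `parse_edit`, and the output field names are all assumptions made for illustration, and the result is a plain dict rather than an Avro record.

```python
import re
from typing import Optional

# Hypothetical, simplified layout of a raw edit line (an assumption, not the
# documented feed format):
#   [[Page title]] FLAGS URL * user * (+delta) comment
EDIT_PATTERN = re.compile(
    r"\[\[(?P<title>.+?)\]\]\s+"        # page title in double brackets
    r"(?P<flags>[A-Za-z!]*)\s*"         # optional flags, e.g. "M" for minor
    r"(?P<url>https?://\S+)\s+"         # link to the diff
    r"\*\s+(?P<user>.+?)\s+\*\s+"       # user name between asterisks
    r"\((?P<delta>[+-]\d+)\)\s*"        # signed change in bytes
    r"(?P<comment>.*)"                  # free-text edit summary
)


def parse_edit(channel: str, line: str) -> Optional[dict]:
    """Return a structured record for one raw line, or None if it does not match."""
    match = EDIT_PATTERN.match(line.strip())
    if match is None:
        return None
    record = match.groupdict()
    record["channel"] = channel                  # e.g. "#en.wikipedia"
    record["delta"] = int(record["delta"])       # "+42" becomes 42
    record["is_minor"] = "M" in record["flags"]  # illustrative flag handling
    return record


if __name__ == "__main__":
    sample = (
        "[[Apache Kafka]] M https://en.wikipedia.org/w/index.php?diff=1&oldid=0 "
        "* ExampleUser * (+42) fixed typo"
    )
    print(parse_edit("#en.wikipedia", sample))
```

In the demo itself this work happens inside Kafka Connect, so no standalone parser is needed. The sketch only shows the kind of raw-to-structured mapping such a transform performs.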

This demo uses ksqlDB and a Kafka Streams application for data processing.

Then a Kafka sink connector, kafka-connect-elasticsearch, streams the data out of Kafka, and the data is materialized in Elasticsearch for analysis with Kibana.

Confluent Replicator also copies messages from one topic to another topic in the same cluster.

All data uses Confluent Schema Registry and Avro.

Confluent Control Center manages and monitors the deployment.

You can find the documentation for running this demo and its accompanying tutorial at https://docs.confluent.io/current/tutorials/cp-demo/docs/index.html.

For additional examples that showcase streaming applications within an event streaming platform, please refer to the examples GitHub repository.