Deep Images Hub

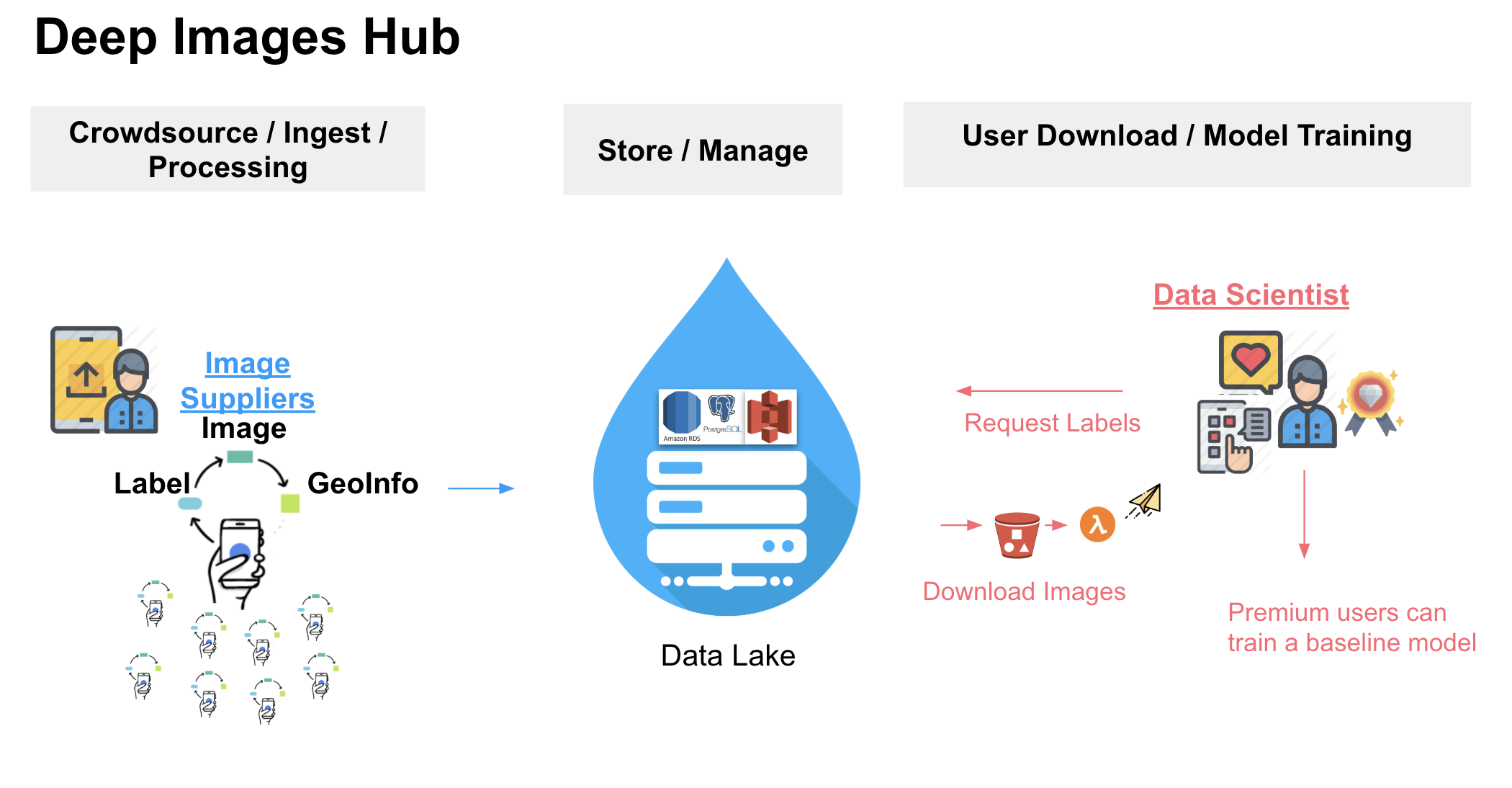

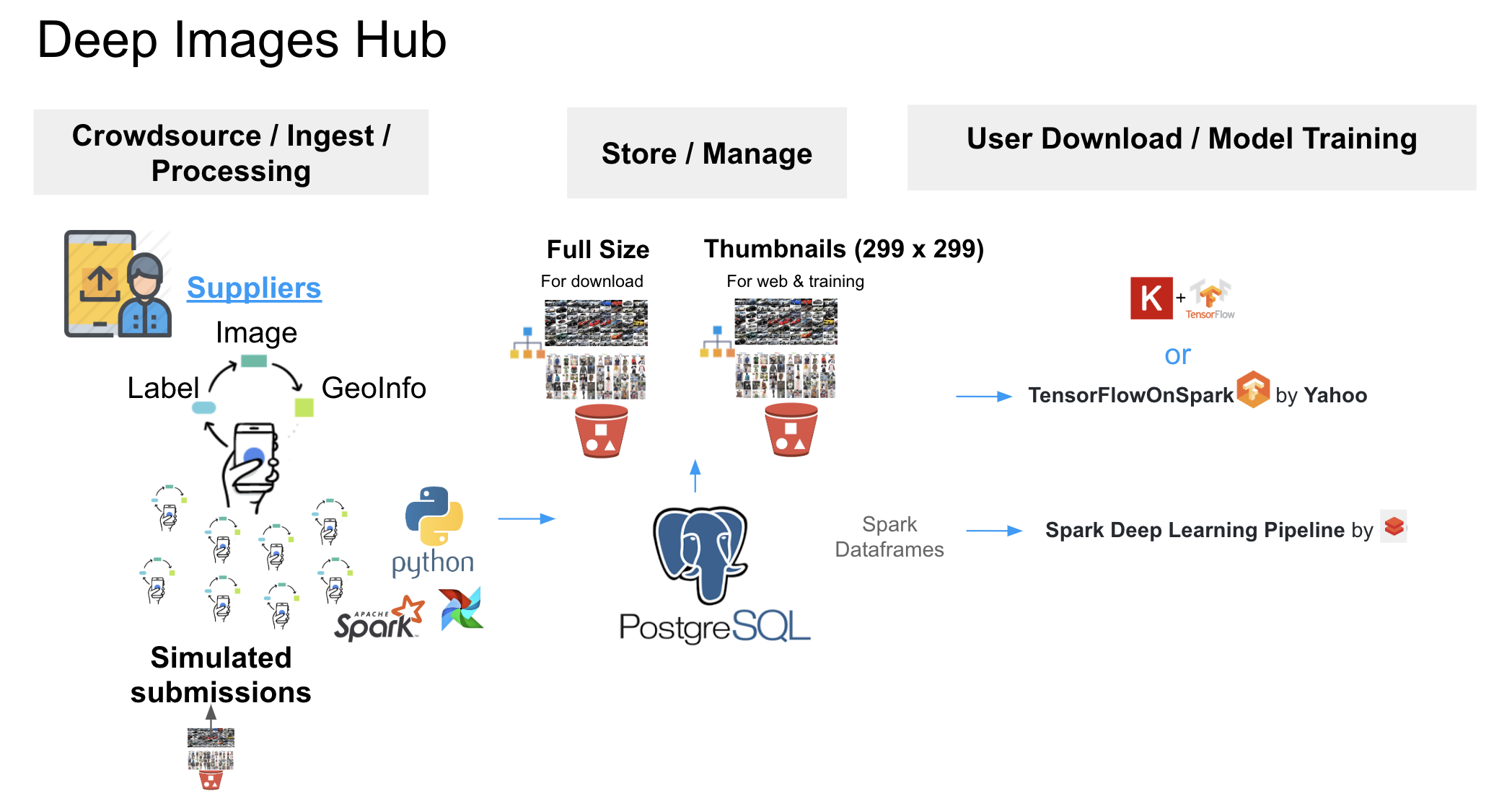

This project creates a platform that automatically verifies, preprocesses, and organizes crowdsourced labeled images into datasets that users can select and download. Additionally, the platform allows premium users to train a sample computer vision model on image subsets of their choice.

Motivation

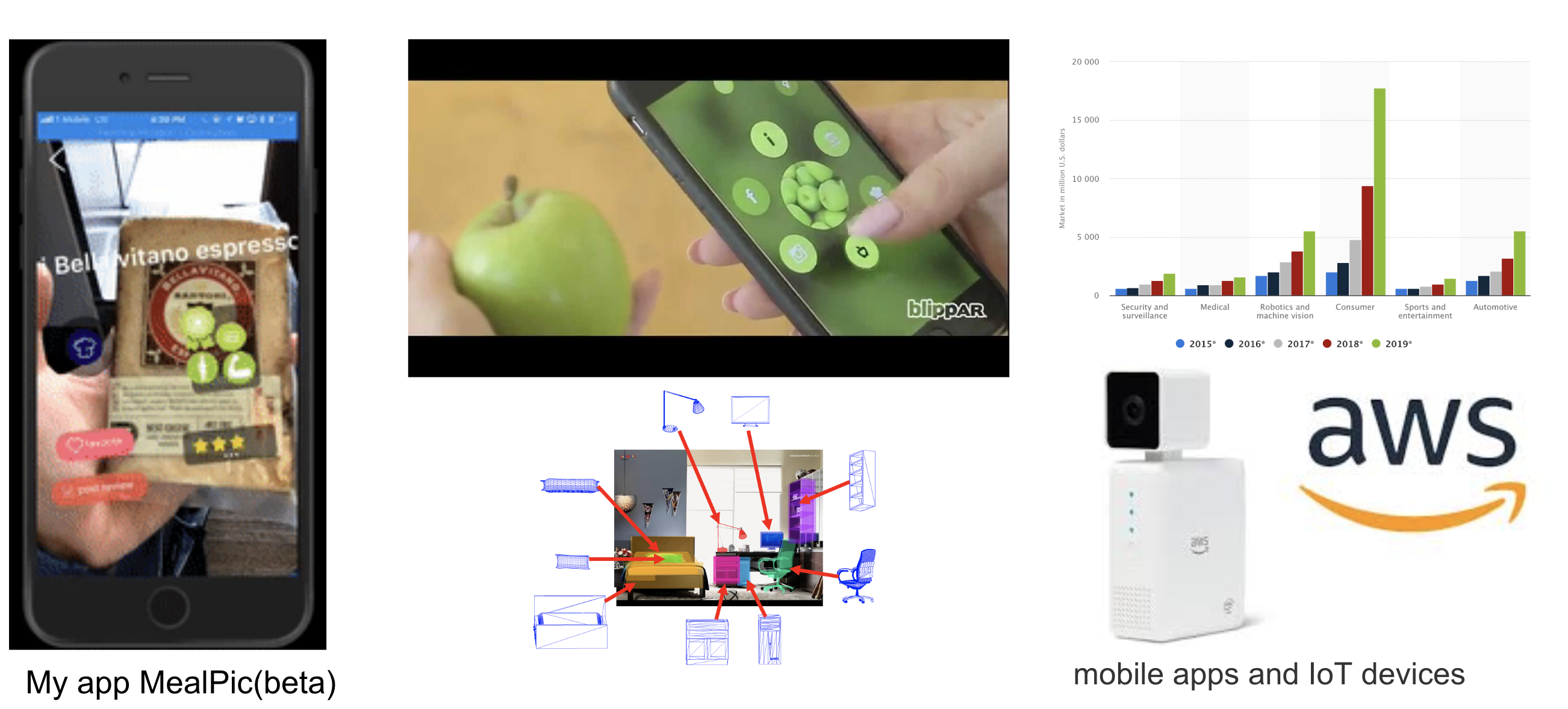

Thanks to recent achievements by computer vision researchers, the performance of computer vision is now approaching that of the human eye. At the same time, demand for computer vision is at an all-time high, especially for classifying and recognizing consumer products, since it can create new business use cases and opportunities for IoT, mobile app, and augmented reality companies. So why is computer vision still not a household name?

The major bottleneck is the lack of image datasets to train CV models. Training a good-quality computer vision model requires a lot of image data. For example, if we want to train a computer vision model to recognize the Lemon flavor of LaCroix Sparkling Water, a data scientist will need approximately 1000+ images of the sparkling water under all kinds of lighting and background environments. The problem is, where can we find that many images? Even the internet does not have that many images of the same product.

It is hard to imagine training a model to recognize all the different soft drinks on the market.

Without image datasets, models can't be trained and new businesses can't be created. As a data engineer with the heart of an entrepreneur, I spotted this problem and tried to come up with a solution: Deep Images Hub.

Deep Images Hub is a centralized image data hub that provides crowdsourced labeled images from image suppliers to data scientists. Data scientists can download images by their choice of labels.

Additionally, premium users can request training of a baseline model, which makes Deep Images Hub an automated platform for computer vision. We envision it becoming a computer vision platform in the spirit of Amazon's Mechanical Turk.

More details can be found in these more in-depth slides: Slides

Pain-points to be Solved

There are not enough diverse image datasets and computer vision models to meet the demands of different consumer product and service use cases.

There are not enough resources to collect a large volume of diverse images for a single object, especially for low-budget startups.

Real-world deep learning applications are complex big data pipelines, which require a lot of data processing (such as cleaning, transformation, augmentation, feature extraction, etc.) beyond model training and inference. Therefore, it is much simpler and more efficient (for development and workflow management) to seamlessly integrate deep learning functionality into existing big data workflows running on the same infrastructure, especially given the recent improvements that reduce deep learning training time from weeks to hours or even minutes.

Deep learning is increasingly adopted by the big data and data science community. Unfortunately, mainstream data engineers and data scientists are usually not deep learning experts. As the use of deep learning expands and scales to larger deployments, it will be much easier if these users can continue to use familiar software tools and programming models (e.g., Spark or even SQL) and existing big data cluster infrastructure to build their deep learning applications.

Data

- Open Images subset with Image-Level Labels (1.74 million images and 600 classes with hierarchies). Source

- The dataset is stored in an image source S3 bucket. An automated process copies the images from the source S3 bucket to Image Data Hub's bucket, with preprocessing, to simulate crowdsourced labeled image submissions.
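The copy step described above could look roughly like the sketch below. It is not the project's actual code: the function name `simulate_submission`, the `submissions/<label>/<submitter>/<file>` key layout, and the random submitter id are all hypothetical and only illustrate the idea. The `s3_client` argument is assumed to behave like a boto3 S3 client; only its `copy_object` call is used.

```python
import random


def simulate_submission(s3_client, source_bucket, source_key,
                        hub_bucket, label, rng=random):
    """Copy one labeled image from the source bucket into the hub bucket.

    `s3_client` is assumed to behave like a boto3 S3 client (only
    `copy_object` is used). The destination key layout and the random
    submitter id are hypothetical, for illustration only.
    """
    # Pretend the image came from a random crowdsourcing user.
    submitter_id = rng.randint(1, 1000)

    # Normalize the label so it is safe to use as a key prefix.
    label_prefix = label.strip().lower().replace(" ", "_")

    # Keep the original file name, drop the source folder structure.
    filename = source_key.rsplit("/", 1)[-1]
    dest_key = f"submissions/{label_prefix}/{submitter_id}/{filename}"

    # Server-side copy: the image bytes never pass through this process.
    s3_client.copy_object(
        Bucket=hub_bucket,
        Key=dest_key,
        CopySource={"Bucket": source_bucket, "Key": source_key},
    )
    return dest_key
```

With boto3 installed and AWS credentials configured, a real client created with `boto3.client("s3")` could be passed as `s3_client`. Taking the client as a parameter also makes the function easy to exercise with a stub, without touching S3.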

Pipeline

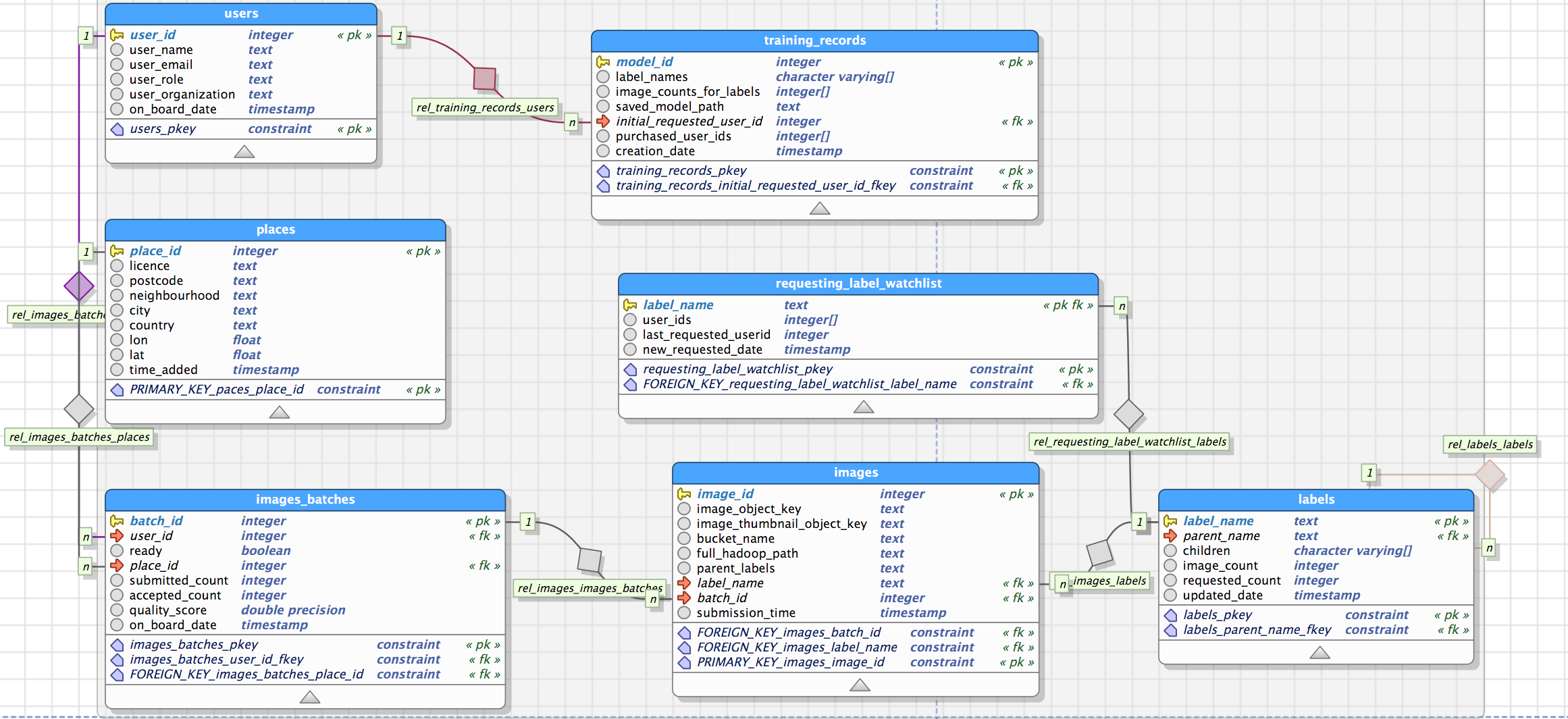

Data Modeling and Database Schema

Sample Business Use Cases Handled by the Current Data Model

Model

- The model is a pre-trained VGG16 (num_classes=1000).

Setup

Prerequisites

- Please register for an AWS account and set up your AWS CLI according to Amazon’s documentation

- Please install and configure Pegasus according to the GitHub instructions

- Please create a PostgreSQL DB Instance with AWS RDS by following this user guide

Execution

Please check the Deep Images Hub wiki for detailed documentation. Here is the table of contents for getting started:

- Data Preparation

- You can also reference the Jupyter Notebook for exploratory data analysis on the Open Images classes

- Design and Planning

- Simulating Crowdsourcing Images Workflow

- Business User Requests on Deep Image Hub Website Workflow

- Computer Vision Model Training Workflow

- Summary & Future Works

Challenges

- How to simulate crowdsourced labeled image submission?

- How can we preprocess the crowdsourced images in our centralized data hub?

- How to design a platform that hosts image datasets ready for users to download and train on, in a distributed fashion and on demand?

- How to train a deep learning model in a distributed manner with a centralized data source?

- How to work with deep learning in Spark while it is still in its infancy?

- How to build workflows that connect all the dots for the platform?

Extra Libraries used to simulate geolocation with given latitude and longitude

For geocoding and decoding: geopy
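As a rough illustration of simulating submitter locations from a given latitude and longitude, here is a minimal sketch. The helper names `jitter_coordinates` and `describe_location` and the 0.01 degree default offset are assumptions made for this example, not part of the project. `describe_location` accepts any geopy-style geocoder, that is, an object whose `reverse((lat, lon))` returns either `None` or a result with an `.address` attribute.

```python
import random


def jitter_coordinates(lat, lon, max_offset_deg=0.01, rng=random):
    """Return a point near (lat, lon) to mimic a distinct submitter location.

    The offset size is a hypothetical default chosen for illustration.
    """
    new_lat = lat + rng.uniform(-max_offset_deg, max_offset_deg)
    new_lon = lon + rng.uniform(-max_offset_deg, max_offset_deg)
    # Clamp latitude to [-90, 90] and wrap longitude into [-180, 180).
    new_lat = max(-90.0, min(90.0, new_lat))
    new_lon = (new_lon + 180.0) % 360.0 - 180.0
    return new_lat, new_lon


def describe_location(geocoder, lat, lon):
    """Reverse-geocode a coordinate pair with a geopy-style geocoder.

    Returns the address string, or None when the geocoder finds no match.
    """
    location = geocoder.reverse((lat, lon))
    return location.address if location is not None else None
```

With geopy installed, a geocoder such as `Nominatim(user_agent="deep-images-hub-demo")` from `geopy.geocoders` could be passed in (the user agent string here is made up). Reverse geocoding through a public service needs network access and is subject to that service's usage limits.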

Extra References

I came across these references and resources while working on this project. They shed light on how to solve some of the technical challenges.