by Hengshuang Zhao*, Li Jiang*, Chi-Wing Fu, and Jiaya Jia. Details are in the paper.

This repository is built for PointWeb, targeting point cloud scene understanding.

Requirements:

- Hardware: 4 GPUs (preferably with >= 11 GB of memory per GPU)

- Software: PyTorch >= 1.0.0, Python 3, CUDA >= 9.0, tensorboardX
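The requirements above can be checked before building anything. The following is a minimal sketch, not part of the repository: `parse_version`, `at_least`, `check_requirements` and `live_check` are hypothetical helper names, and reading "11G" as a per-GPU memory threshold in decimal gigabytes is an assumption.

```python
import re


def parse_version(text):
    """Turn '1.4.0+cu101' or '10.1' into a tuple of ints such as (1, 4, 0)."""
    numbers = []
    for piece in text.split("+")[0].split("."):
        match = re.match(r"\d+", piece)  # keep leading digits, drop suffixes like 'a0'
        if match is None:
            break
        numbers.append(int(match.group()))
    return tuple(numbers)


def at_least(version, minimum):
    """Compare versions after zero-padding, so '9.0' counts as equal to '9.0.0'."""
    a, b = parse_version(version), parse_version(minimum)
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


def check_requirements(torch_version, cuda_version, gpu_mem_gb,
                       min_gpus=4, preferred_mem_gb=11):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    if not at_least(torch_version, "1.0.0"):
        problems.append(f"PyTorch {torch_version} is older than 1.0.0")
    if cuda_version is None:
        problems.append("PyTorch was built without CUDA")
    elif not at_least(cuda_version, "9.0"):
        problems.append(f"CUDA {cuda_version} is older than 9.0")
    if len(gpu_mem_gb) < min_gpus:
        problems.append(f"{len(gpu_mem_gb)} GPU(s) visible, {min_gpus} suggested")
    small = [m for m in gpu_mem_gb if m < preferred_mem_gb]
    if small:
        problems.append(f"{len(small)} GPU(s) have less than {preferred_mem_gb} GB")
    return problems


def live_check():
    """Query the installed PyTorch, if any, and run check_requirements on it."""
    try:
        import torch
    except ImportError:
        return ["PyTorch is not installed"]
    # total_memory is in bytes; divide by 1e9 to get decimal gigabytes
    mem_gb = [torch.cuda.get_device_properties(i).total_memory / 1e9
              for i in range(torch.cuda.device_count())]
    return check_requirements(torch.__version__, torch.version.cuda, mem_gb)


if __name__ == "__main__":
    for message in live_check() or ["all checks passed"]:
        print(message)
```

Returning a list of messages instead of raising on the first failure lets one run report every missing requirement at once.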

Clone the repository and build the ops:

git clone https://github.com/hszhao/PointWeb.git
cd PointWeb
cd lib/pointops && python setup.py install && cd ../../
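A quick way to confirm the ops were built is to ask Python whether the compiled extension can be located. This sketch assumes the extension is installed as a top-level module named `pointops_cuda`; that name is an assumption, so check `lib/pointops/setup.py` for the actual one. `importlib.util.find_spec` only locates the module without importing it, which matters because importing a PyTorch CUDA extension normally requires `import torch` first.

```python
import importlib.util

# Assumption: the CUDA ops are installed under this top-level module name.
EXTENSION_NAME = "pointops_cuda"


def is_importable(module_name):
    """Return True if a top-level module can be located, without importing it."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    status = "found" if is_importable(EXTENSION_NAME) else "missing, rerun setup.py install"
    print(f"{EXTENSION_NAME}: {status}")
```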

Train:

Download the related datasets and symlink their paths as follows (alternatively, modify the relevant paths specified in the config folder):

mkdir -p dataset
ln -s /path_to_s3dis_dataset dataset/s3dis
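The two shell commands above can also be expressed in Python, which makes it easy to fail early when the source path is wrong or the link already points elsewhere. A minimal sketch using only the standard library; `link_dataset` is a hypothetical helper, and its default `dataset/s3dis` layout simply mirrors the commands.

```python
from pathlib import Path


def link_dataset(source, root="dataset", name="s3dis"):
    """Create root/name as a symlink to source (mkdir -p + ln -s), idempotently."""
    source = Path(source).resolve()
    if not source.is_dir():
        raise FileNotFoundError(f"dataset directory not found: {source}")
    root = Path(root)
    root.mkdir(parents=True, exist_ok=True)  # mkdir -p dataset
    link = root / name
    if link.is_symlink() or link.exists():
        if link.resolve() == source:
            return link  # already set up, nothing to do
        raise FileExistsError(f"{link} already points to {link.resolve()}")
    link.symlink_to(source, target_is_directory=True)  # ln -s <source> dataset/s3dis
    return link
```

For example, `link_dataset("/path_to_s3dis_dataset")` run from the repository root would produce the same `dataset/s3dis` link as the shell commands.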

Specify the GPUs to use in the config, then start training:

sh tool/train.sh s3dis pointweb
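How the GPU setting in the config takes effect is not spelled out here. One common pattern, assumed for this sketch rather than taken from the repository, is to turn a list of GPU ids into the `CUDA_VISIBLE_DEVICES` environment variable before any CUDA work starts. The config key `train_gpu` and both helper names are hypothetical.

```python
import os


def visible_devices(gpu_ids):
    """Format GPU ids as a CUDA_VISIBLE_DEVICES string, e.g. [0, 1] -> "0,1"."""
    ids = [int(i) for i in gpu_ids]
    if not ids:
        raise ValueError("at least one GPU id is required")
    if len(set(ids)) != len(ids):
        raise ValueError(f"duplicate GPU ids: {ids}")
    return ",".join(str(i) for i in ids)


def apply_gpu_config(config, key="train_gpu"):
    """Restrict this process to the configured GPUs (hypothetical config key).

    Must run before CUDA is initialised, otherwise the setting has no effect.
    """
    value = visible_devices(config[key])
    os.environ["CUDA_VISIBLE_DEVICES"] = value
    return value
```

Inside the process, the visible GPUs are renumbered from 0, so a config listing `[2, 3]` appears to PyTorch as devices 0 and 1.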

Test:

Download the trained segmentation models and put them under the folder specified in the config, or modify the specified paths.

For full testing (to reproduce the listed performance):

sh tool/test.sh s3dis pointweb

Visualization: tensorboardX is incorporated for better visualization; for example, to compare two experiments:

tensorboard --logdir=run1:$EXP1,run2:$EXP2 --port=6789
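The `run1:$EXP1,run2:$EXP2` argument gives each experiment directory a display name in TensorBoard. The sketch below builds that argument and shows the writing side, assuming a tensorboardX `SummaryWriter`, whose `add_scalar(tag, scalar_value, global_step)` method it calls. The tag naming scheme and both helper names are illustrative, not taken from the repository.

```python
def tensorboard_command(runs, port=6789):
    """Build `tensorboard --logdir=name1:path1,name2:path2 --port=N` as an argv list."""
    for name, path in runs.items():
        # ':' separates name from path and ',' separates runs, so they would break the spec
        if ":" in name or "," in name or "," in str(path):
            raise ValueError(f"cannot use {name!r} -> {path!r} in a logdir spec")
    spec = ",".join(f"{name}:{path}" for name, path in runs.items())
    return ["tensorboard", f"--logdir={spec}", f"--port={port}"]


def log_epoch(writer, metrics, epoch, split="val"):
    """Write one scalar per metric; `writer` is e.g. a tensorboardX SummaryWriter."""
    for name, value in metrics.items():
        writer.add_scalar(f"{name}_{split}", value, epoch)
```

Passing the returned list to `subprocess.run` would launch TensorBoard. Newer TensorBoard releases may warn about the `name:path` form under `--logdir` and expect it under `--logdir_spec` instead.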

Other:

Description: mIoU/mAcc/aAcc/voxAcc stand for mean IoU, mean of the per-class accuracies, overall accuracy over all points, and voxel label accuracy, respectively.

mIoU/mAcc/aAcc of PointWeb on the S3DIS dataset: 0.6055/0.6682/0.8658.

mIoU/mAcc/aAcc/voxAcc of PointWeb on the ScanNet dataset: 0.5063/0.6061/0.8529/0.8568.
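The metric definitions above can be sketched with a confusion matrix over per-point labels. This is an illustration under stated assumptions, not the repository's evaluation code: the ignore label 255 is a hypothetical default, classes with no ground-truth points are left out of mAcc, and classes absent from both prediction and ground truth are left out of mIoU. voxAcc is not sketched because it depends on voxelization settings this README does not describe.

```python
import numpy as np


def segmentation_metrics(pred, target, num_classes, ignore_index=255):
    """Return (mIoU, mAcc, aAcc) for per-point class labels.

    Assumes labels are integers in [0, num_classes); points whose target equals
    `ignore_index` are skipped.
    """
    pred = np.asarray(pred, dtype=np.int64).ravel()
    target = np.asarray(target, dtype=np.int64).ravel()
    valid = target != ignore_index
    pred, target = pred[valid], target[valid]

    # confusion[t, p] = number of points with ground truth t predicted as p
    confusion = np.bincount(target * num_classes + pred,
                            minlength=num_classes ** 2).reshape(num_classes, num_classes)
    intersection = np.diag(confusion).astype(np.float64)  # correctly labelled points per class
    target_count = confusion.sum(axis=1)                  # ground-truth points per class
    union = target_count + confusion.sum(axis=0) - intersection

    present = union > 0
    miou = np.mean(intersection[present] / union[present])
    has_gt = target_count > 0
    macc = np.mean(intersection[has_gt] / target_count[has_gt])
    aacc = intersection.sum() / target_count.sum()  # accuracy over all valid points
    return float(miou), float(macc), float(aacc)
```

Because the counts are pooled before the ratios are taken, passing the concatenated labels of every test scene weights each point equally rather than each scene.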

If you find the code or trained models useful, please consider citing:

@inproceedings{zhao2019pointweb,

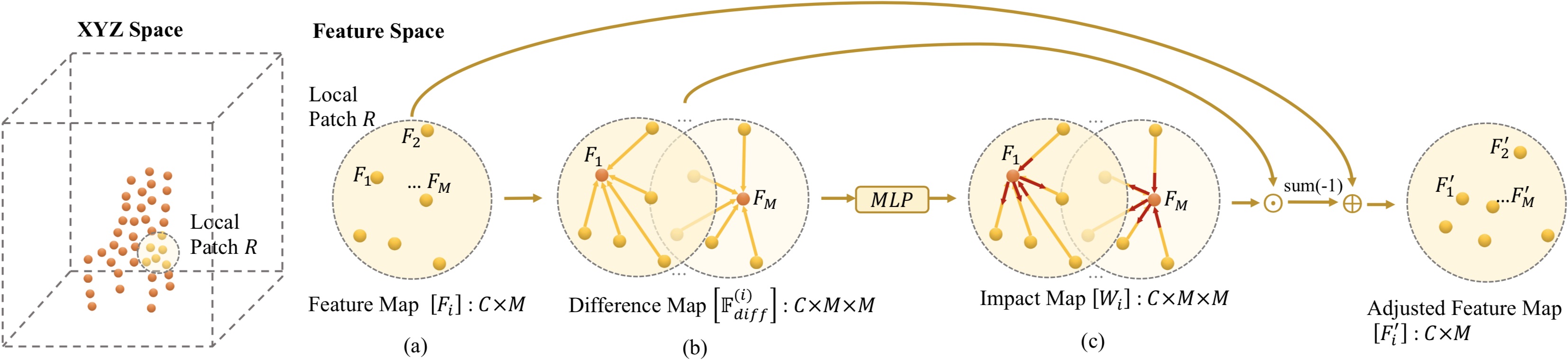

title={{PointWeb}: Enhancing Local Neighborhood Features for Point Cloud Processing},

author={Zhao, Hengshuang and Jiang, Li and Fu, Chi-Wing and Jia, Jiaya},

booktitle={CVPR},

year={2019}

}