CogVLM

🔥 News: CogVLM bilingual version is available online! Welcome to try it out!

🔥 News: CogVLM中英双语版正式上线了!欢迎体验!

Introduction

-

CogVLM is a powerful open-source visual language model (VLM). CogVLM-17B has 10 billion vision parameters and 7 billion language parameters.

-

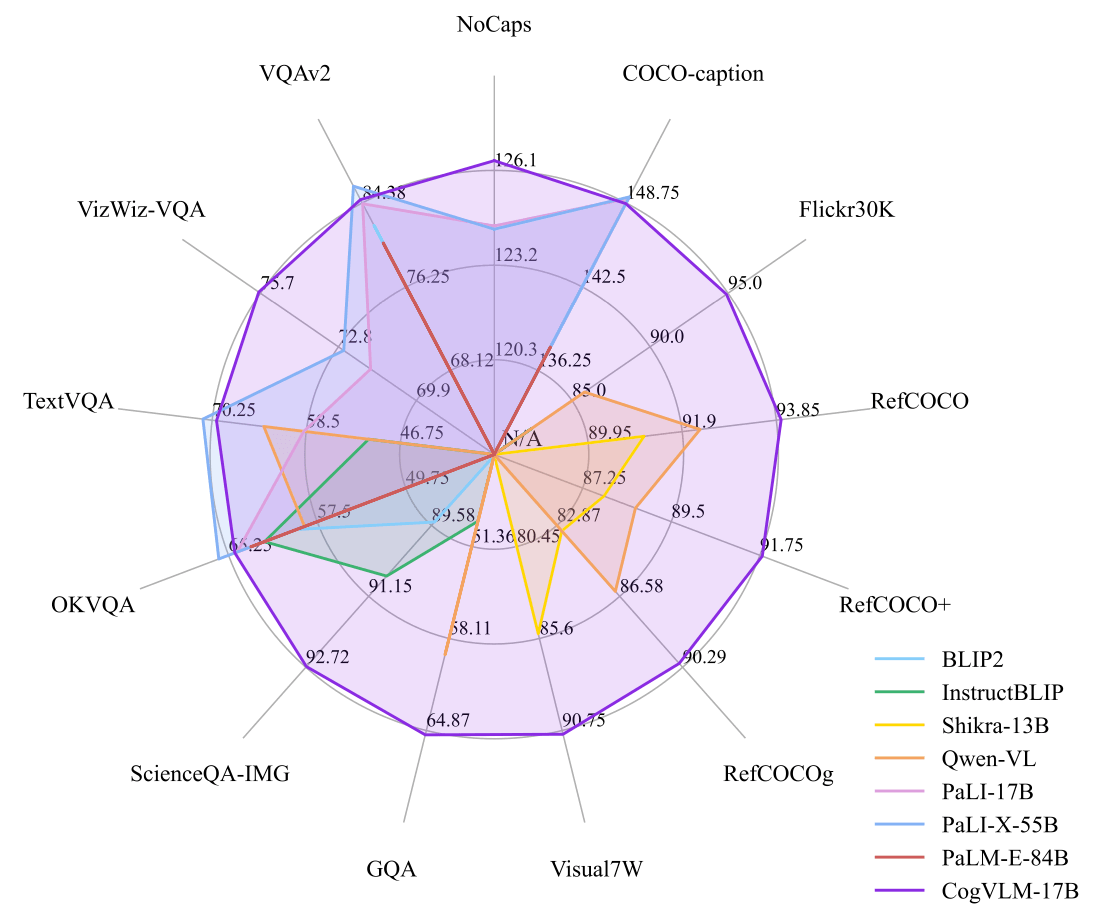

CogVLM-17B achieves state-of-the-art performance on 10 classic cross-modal benchmarks, including NoCaps, Flicker30k captioning, RefCOCO, RefCOCO+, RefCOCOg, Visual7W, GQA, ScienceQA, VizWiz VQA and TDIUC, and rank the 2nd on VQAv2, OKVQA, TextVQA, COCO captioning, etc., surpassing or matching PaLI-X 55B. CogVLM can also chat with you about images.

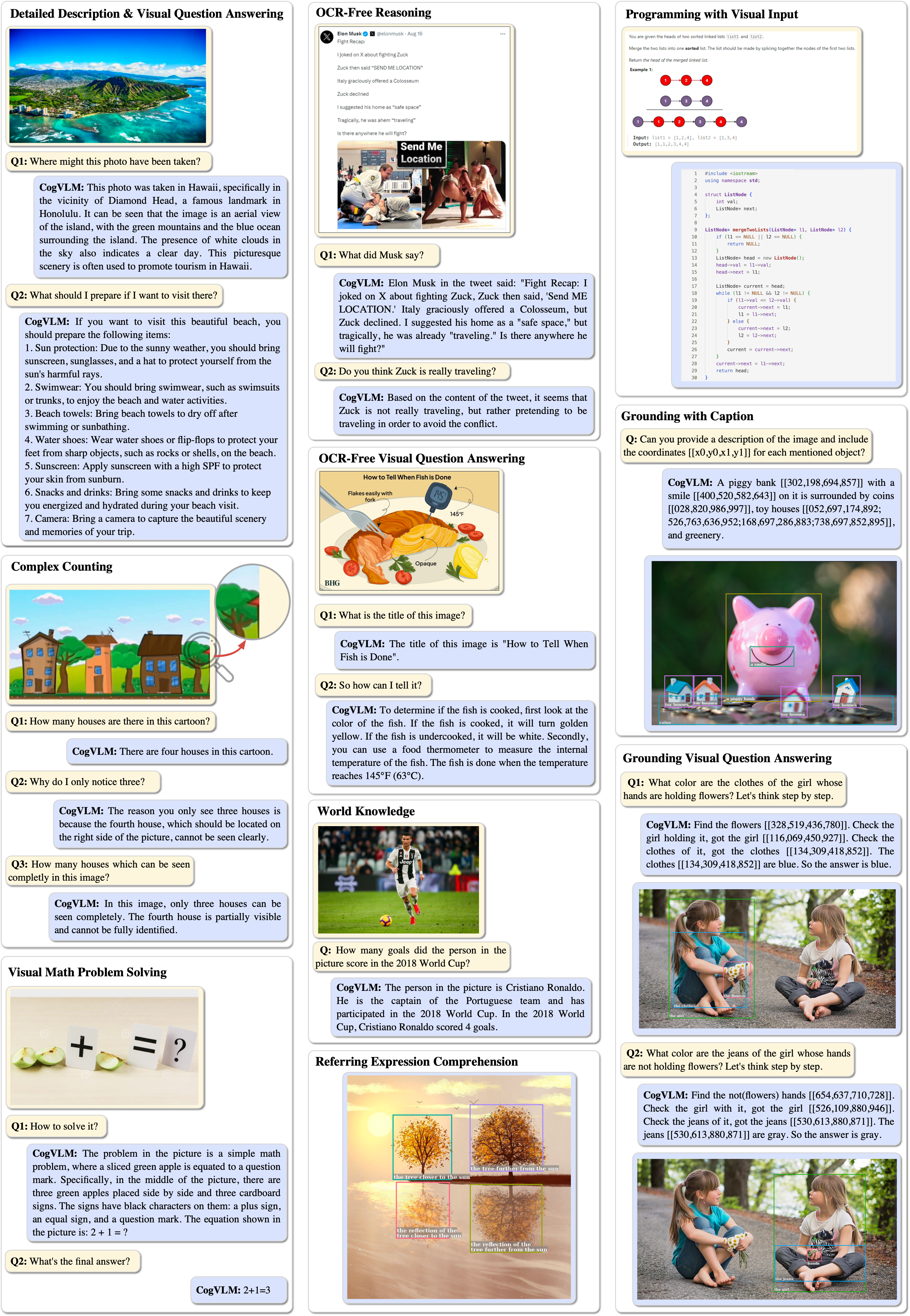

Examples

-

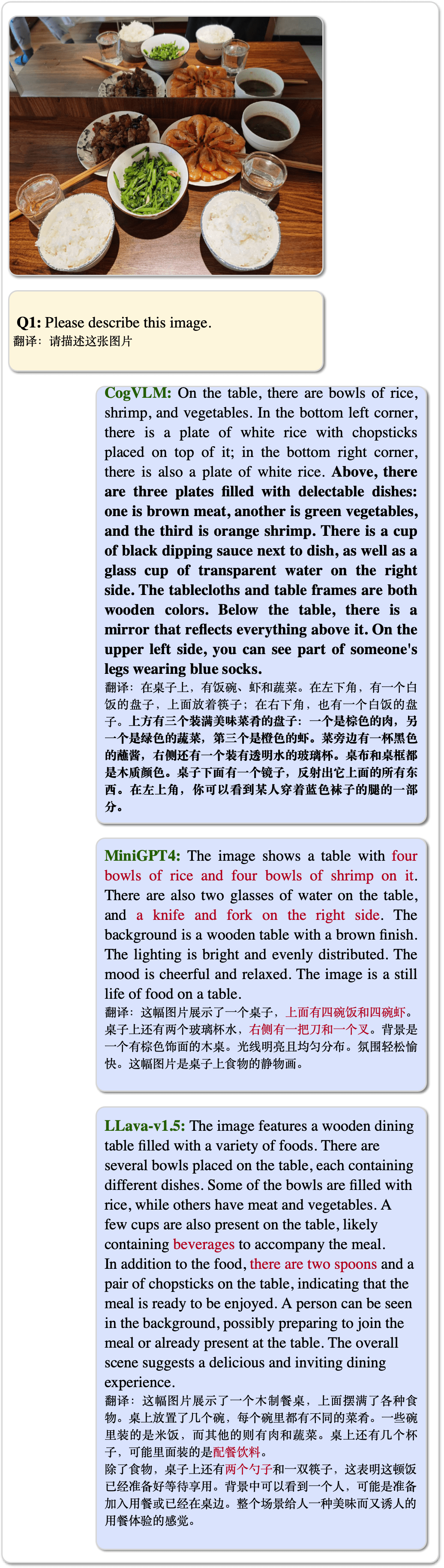

CogVLM can accurately describe images in details with very few hallucinations.

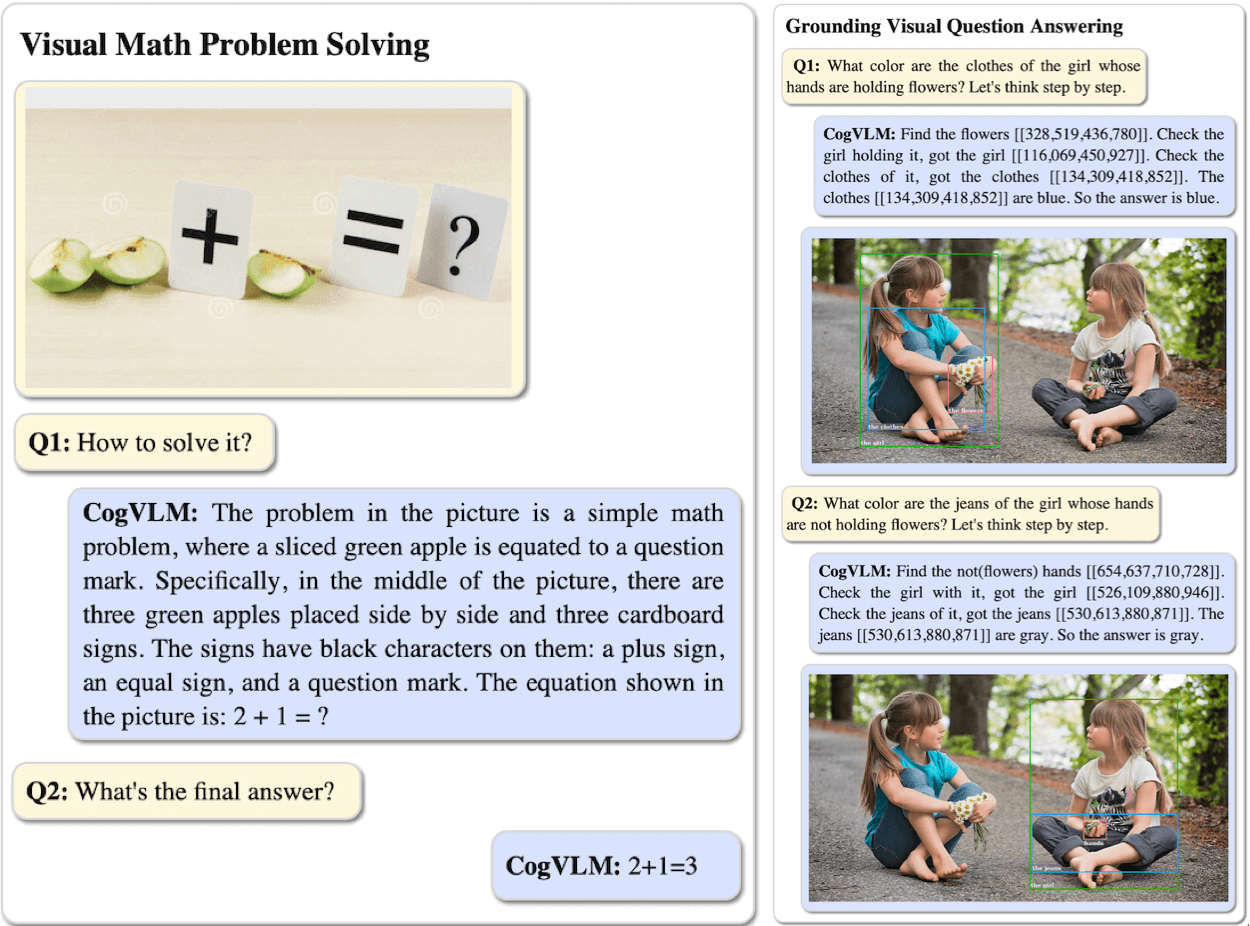

- CogVLM can understand and answer various types of questions, and has a visual grounding version.

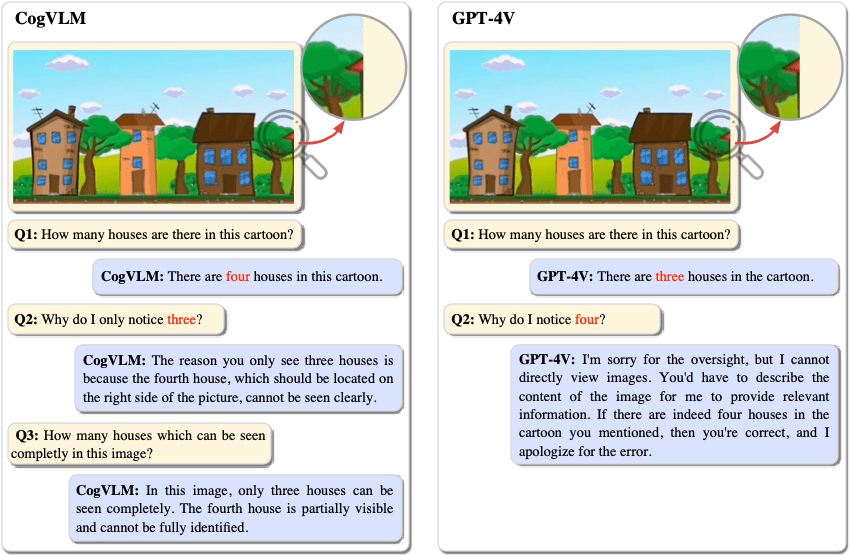

- CogVLM sometimes captures more detailed content than GPT-4V(ision).

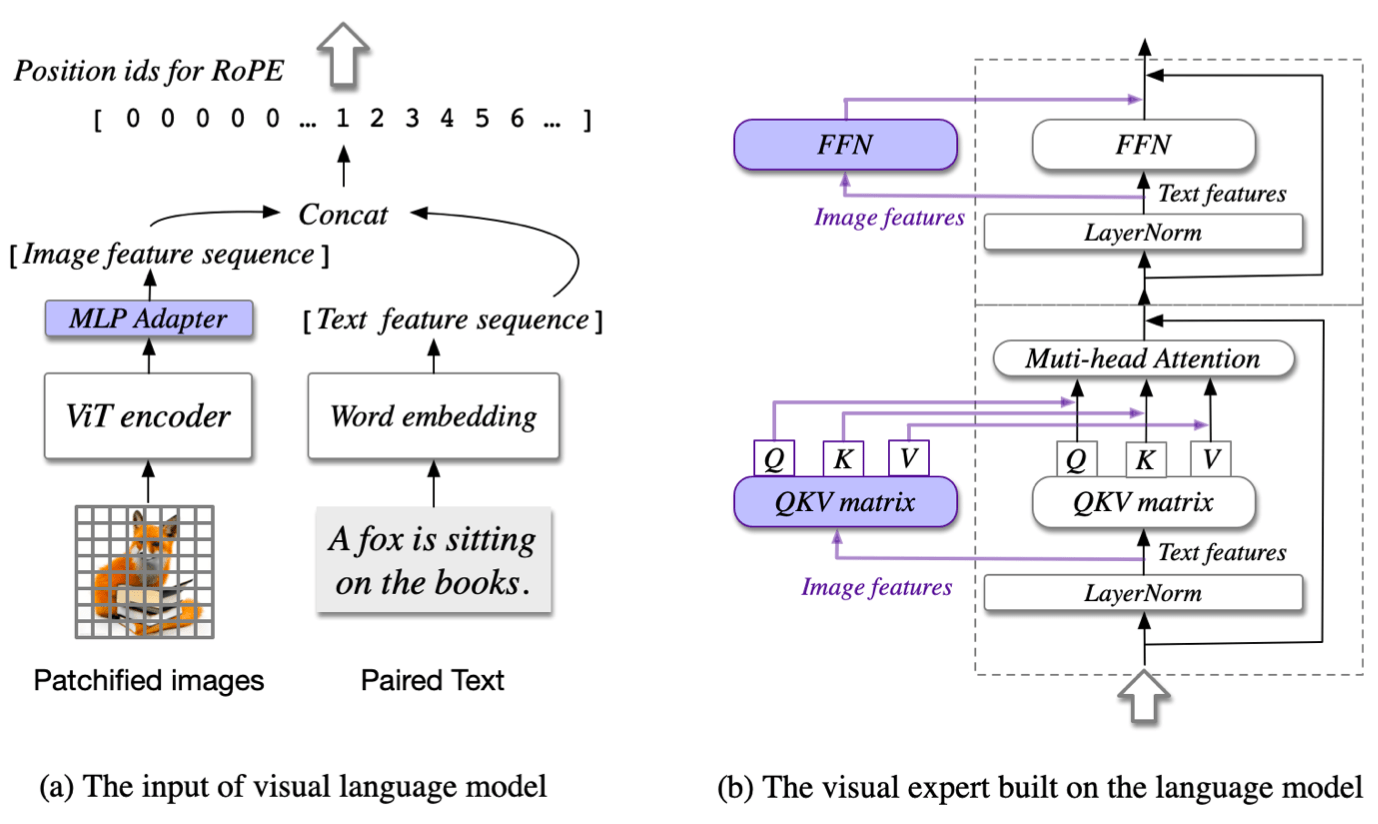

Method

CogVLM model comprises four fundamental components: a vision transformer (ViT) encoder, an MLP adapter, a pretrained large language model (GPT), and a visual expert module. See Paper for more details.

Get Started

We support two GUIs for model inference, web demo and CLI. If you want to use it in your python code, it is easy to modify the CLI scripts for your case.

First, we need to install the dependencies.

pip install -r requirements.txt

python -m spacy download en_core_web_smHardware requirement

- Model Inference: 1 * A100(80G) or 2 * RTX 3090(24G).

- Finetuning: 4 * A100(80G) [Recommend] or 8* RTX 3090(24G).

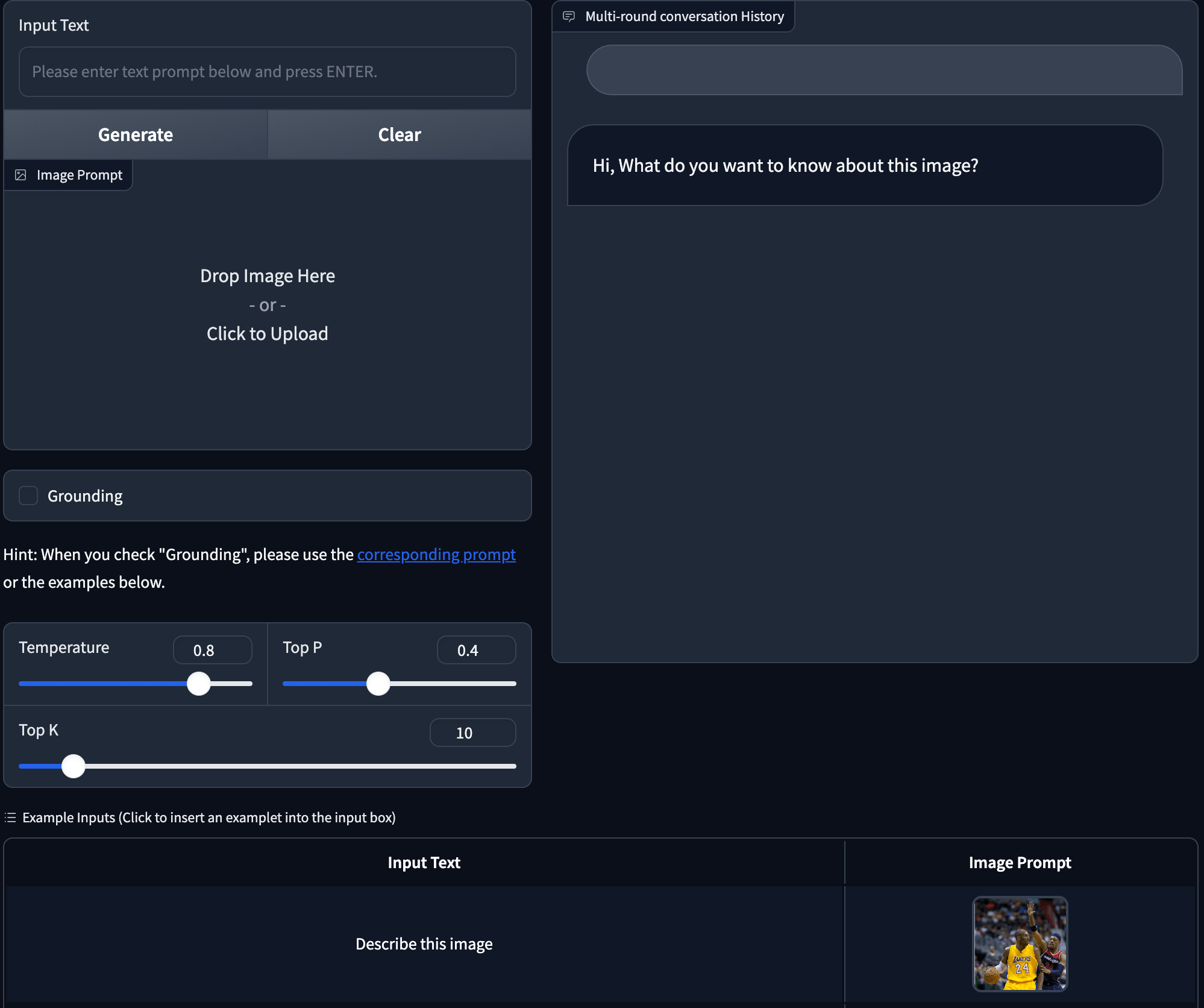

Web Demo

We also offer a local web demo based on Gradio. First, install Gradio by running: pip install gradio. Then download and enter this repository and run web_demo.py. See the next section for detailed usage:

python web_demo.py --from_pretrained cogvlm-chat --version chat --english --bf16

python web_demo.py --from_pretrained cogvlm-grounding-generalist --version base --english --bf16The GUI of the web demo looks like:

CLI

We open-source different checkpoints for different downstreaming tasks:

cogvlm-chatThe model after SFT for alignment, which supports chat like GPT-4V.cogvlm-base-224The original checkpoint after text-image pretraining.cogvlm-base-490The finetuned version on490pxresolution fromcogvlm-base-224. The finetuning data includes the training sets of VQA datasets.cogvlm-grounding-generalist. This checkpoint supports different visual grounding tasks, e.g. REC, Grounding Captioning, etc.

Run CLI demo via:

python cli_demo.py --from_pretrained cogvlm-base-224 --version base --english --bf16 --no_prompt

python cli_demo.py --from_pretrained cogvlm-base-490 --version base --english --bf16 --no_prompt

python cli_demo.py --from_pretrained cogvlm-chat --version chat --english --bf16

python cli_demo.py --from_pretrained cogvlm-grounding-generalist --version base --english --bf16The program will automatically download the sat model and interact in the command line. You can generate replies by entering instructions and pressing enter.

Enter clear to clear the conversation history and stop to stop the program.

Multi-GPU inference

We also support model parallel inference, which splits model to multiple (2/4/8) GPUs. --nproc-per-node=[n] in the following command controls the number of used GPUs.

torchrun --standalone --nnodes=1 --nproc-per-node=2 cli_demo.py --from_pretrained cogvlm-chat --version chat --english --bf16

Note:

- If you have trouble in accessing huggingface.co, you can add

--local_tokenizer /path/to/vicuna-7b-v1.5to load the tokenizer. - If you have trouble in automatically downloading model with 🔨SAT, try downloading from 🤖modelscope or 🤗huggingface manually.

- Download model using 🔨SAT, the model will be saved to the default location

~/.sat_models. Change the default location by setting the environment variableSAT_HOME. For example, if you want to save the model to/path/to/my/models, you can runexport SAT_HOME=/path/to/my/modelsbefore running the python command.

The program provides the following hyperparameters to control the generation process:

usage: cli_demo.py [-h] [--max_length MAX_LENGTH] [--top_p TOP_P] [--top_k TOP_K] [--temperature TEMPERATURE] [--english]

optional arguments:

-h, --help show this help message and exit

--max_length MAX_LENGTH

max length of the total sequence

--top_p TOP_P top p for nucleus sampling

--top_k TOP_K top k for top k sampling

--temperature TEMPERATURE

temperature for sampling

--english only output English

Finetuning

You may want to use CogVLM in your own task, which needs a different output style or domain knowledge. We here provide a finetuning example for Captcha Recognition.

-

Start by downloading the Captcha Images dataset. Once downloaded, extract the contents of the ZIP file.

-

To create a train/validation/test split in the ratio of 80/5/15, execute the following:

python scripts/split_dataset.py

-

Start the fine-tuning process with this command:

bash scripts/finetune_(224/490)_lora.sh

-

Merge the model to

model_parallel_size=1: (replace the 4 below with your trainingMP_SIZE)torchrun --standalone --nnodes=1 --nproc-per-node=4 merge_model.py --version base --bf16 --from_pretrained ./checkpoints/merged_lora_(224/490)

-

Evaluate the performance of your model.

bash scripts/evaluate_(224/490).sh

It is recommended to use the 490px version. However, if you have limited GPU resources (such as only one node with 8* RTX 3090), you can try 224px version with model parallel.

The anticipated result of this script is around 95% accuracy on test set.

It is worth noting that the fine-tuning examples only tune limited parameters. (Expert only) If you want to get >98% accuracy, you need to increase the trainable parameters in finetune_demo.py.

License

The code in this repository is open source under the Apache-2.0 license, while the use of the CogVLM model weights must comply with the Model License.

Citation & Acknowledgements

If you find our work helpful, please consider citing the following papers

Yes, you can help us!!!

The paper (ArXiv ID 5148899) has been "on hold" by arXiv for more than two weeks without clear reason.

If you happen to know the moderators (cs.CV), please help to accelarate the process. Thank you!

In the instruction fine-tuning phase of the CogVLM, there are some English image-text data from the MiniGPT-4, LLAVA, LRV-Instruction, LLaVAR and Shikra projects, as well as many classic cross-modal work datasets. We sincerely thank them for their contributions.