@article{hu2023ealss,

title={EA-LSS: Edge-aware Lift-splat-shot Framework for 3D BEV Object Detection},

author={Haotian Hu and Fanyi Wang and Jingwen Su and Yaonong Wang and Laifeng Hu and Weiye Fang and Jingwei Xu and Zhiwang Zhang},

journal={arXiv preprint arXiv:2303.17895},

year={2023}

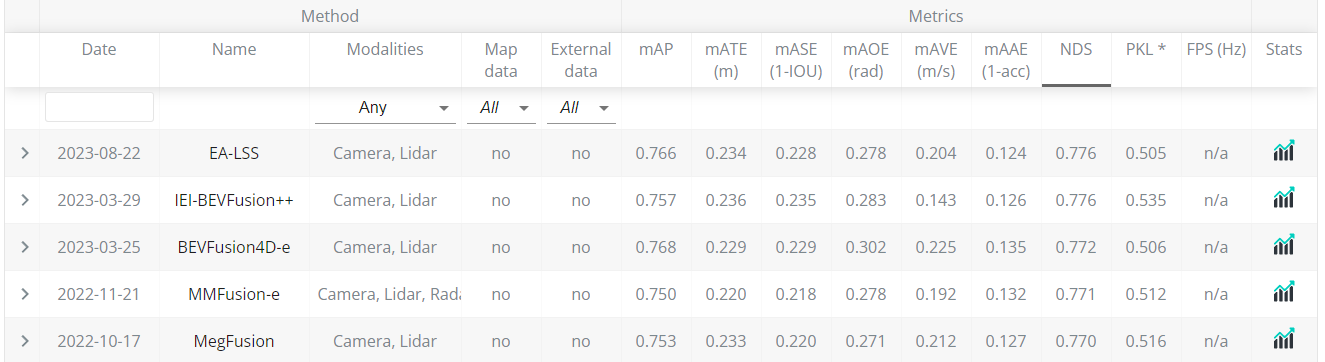

}- 2023.8.22 EA-LSS achieved the first place in the nuScenes 3D object detection leaderboard!

- 2023.8.16 Created README.md

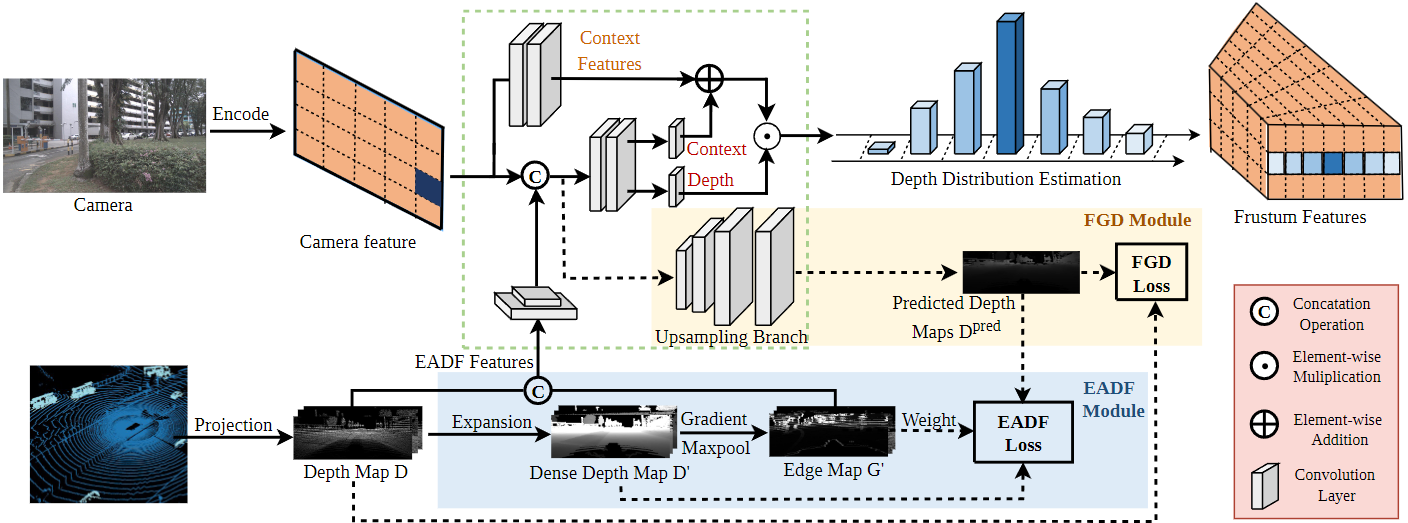

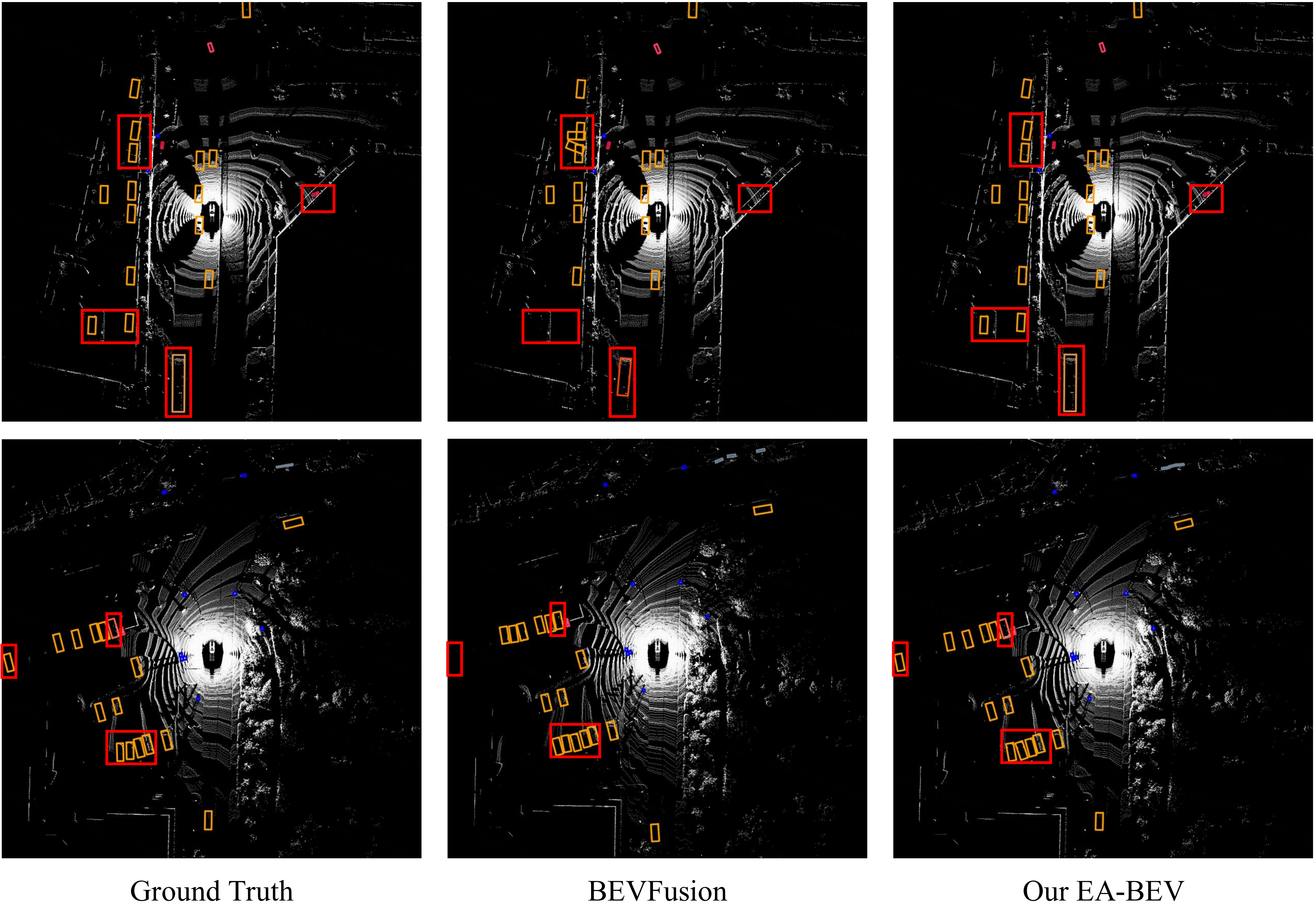

In recent years, great progress has been made in Lift-Splat-Shot-based (LSS-based) 3D object detection methods. However, inaccurate depth estimation remains an important constraint on the accuracy of camera-only and multi-modal 3D object detection models, especially in regions where the depth changes significantly (i.e., the "depth jump" problem). In this paper, we propose a novel Edge-aware Lift-splat-shot (EA-LSS) framework. Specifically, an edge-aware depth fusion (EADF) module is proposed to alleviate the "depth jump" problem, and a fine-grained depth (FGD) module to further enforce refined supervision on depth. Our EA-LSS framework is compatible with any LSS-based 3D object detection model and effectively boosts performance with a negligible increase in inference time. Experiments on the nuScenes benchmarks demonstrate that EA-LSS is effective in both camera-only and multi-modal models. Notably, EA-LSS achieves state-of-the-art performance on the nuScenes test benchmark, with mAP and NDS of 76.6% and 77.6%, respectively.
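To make the "depth jump" notion concrete, the following is a minimal sketch, not the EADF module itself: it marks pixels of a toy depth map whose depth differs sharply from a neighbor. The function name `depth_edge_map`, the 4-neighbor comparison, and the normalization by the global maximum are illustrative assumptions rather than details taken from the paper or this repository.

```python
from typing import List

Depth = List[List[float]]


def depth_edge_map(depth: Depth) -> Depth:
    """Return, per pixel, the largest absolute depth difference to a
    4-connected neighbor, scaled to [0, 1] by the global maximum.

    Illustrative only: an assumed way to highlight "depth jump" regions,
    not the authors' EADF implementation.
    """
    rows, cols = len(depth), len(depth[0])
    edges = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    diff = abs(depth[r][c] - depth[nr][nc])
                    edges[r][c] = max(edges[r][c], diff)
    peak = max(max(row) for row in edges)
    if peak == 0.0:
        return edges  # flat depth map: no jumps anywhere
    return [[v / peak for v in row] for row in edges]


# Toy scene: a near object (5 m) in front of a far background (40 m).
toy_depth = [
    [40.0, 40.0, 40.0, 40.0],
    [40.0, 5.0, 5.0, 40.0],
    [40.0, 5.0, 5.0, 40.0],
    [40.0, 40.0, 40.0, 40.0],
]
edge = depth_edge_map(toy_depth)
```

In this toy scene the four corner pixels get a value of 0, while every pixel on or next to the object boundary gets 1. Those boundary regions are where the abstract says depth estimation is least reliable.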

# Train the camera branch.

./tools/dist_train.sh configs/EALSS/cam_stream/ealss_4x8_20e_nusc_cam.py 8

# Train the LiDAR branch, following the TransFusion fade strategy.

./tools/dist_train.sh configs/EALSS/lidar_stream/transfusion_nusc_voxel_L.py 8

# After loading the camera and LiDAR branch weights, train the fusion module.

./tools/dist_train.sh configs/EALSS/ealss_4x8_10e_nusc_aug_large.py 8

# Evaluate the model.

./tools/dist_test.sh configs/EALSS/ealss_4x8_10e_nusc_aug_large.py WEIGHT_PATH 8 --eval bbox
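The commands above run in a fixed order: camera branch, LiDAR branch, fusion module, then evaluation. As a convenience, the sketch below assembles them in Python so they can be printed or launched in sequence. The helpers `build_pipeline` and `run_pipeline` and their `gpus`, `weight_path`, and `dry_run` parameters are hypothetical and not part of this repository; the script paths and config names are copied from the commands above. The sketch does not cover how the branch weights are loaded into the fusion config, which this README does not detail.

```python
import shlex
import subprocess
from typing import List

TRAIN = "./tools/dist_train.sh"
TEST = "./tools/dist_test.sh"
CAM_CFG = "configs/EALSS/cam_stream/ealss_4x8_20e_nusc_cam.py"
LIDAR_CFG = "configs/EALSS/lidar_stream/transfusion_nusc_voxel_L.py"
FUSION_CFG = "configs/EALSS/ealss_4x8_10e_nusc_aug_large.py"


def build_pipeline(weight_path: str, gpus: int = 8) -> List[List[str]]:
    """Return the README commands as argument lists, in execution order."""
    n = str(gpus)
    return [
        [TRAIN, CAM_CFG, n],      # 1. camera branch
        [TRAIN, LIDAR_CFG, n],    # 2. LiDAR branch
        [TRAIN, FUSION_CFG, n],   # 3. fusion module
        [TEST, FUSION_CFG, weight_path, n, "--eval", "bbox"],  # 4. evaluation
    ]


def run_pipeline(weight_path: str, gpus: int = 8, dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in build_pipeline(weight_path, gpus):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failure


if __name__ == "__main__":
    # WEIGHT_PATH is the same placeholder used in the command above.
    run_pipeline("WEIGHT_PATH", dry_run=True)
```

With `dry_run=True` the script only prints the four commands, which is a cheap way to check paths and GPU counts before starting a long training run.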

| Method | mAP | NDS |
|---|---|---|
| BEVFusion(Peking University) | 71.3 | 73.3 |
| +EA-LSS | 72.2 | 74.4 |
| +EA-LSS* | 76.6 | 77.6 |

\* denotes test-time augmentation and model ensembling.

| Method | mAP | NDS | Latency (ms) |
|---|---|---|---|
| BEVDepth-R50 | 33.0 | 43.6 | 110.3 |
| +EA-LSS | 33.4 | 44.1 | 110.3 |
| Tig-bev | 33.8 | 37.5 | 68.0 |
| +EA-LSS | 35.9 | 40.7 | 68.0 |
| BEVFusion(MIT) | 68.5 | 71.4 | 119.2 |
| +EA-LSS | 69.4 | 71.8 | 123.6 |
| BEVFusion(Peking University) | 69.6 | 72.1 | 190.3 |
| +EA-LSS | 71.2 | 73.1 | 194.9 |
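To put the latency cost in numbers, the following sketch computes the mAP and NDS gains and the relative latency overhead for each baseline in the table above. The values are copied from that table; the helper name `overhead_pct` and the `RESULTS` layout are illustrative assumptions.

```python
from typing import Dict, Tuple

# (mAP, NDS, latency in ms) for the baseline and for baseline + EA-LSS,
# copied from the table above.
Row = Tuple[float, float, float]
RESULTS: Dict[str, Tuple[Row, Row]] = {
    "BEVDepth-R50": ((33.0, 43.6, 110.3), (33.4, 44.1, 110.3)),
    "Tig-bev": ((33.8, 37.5, 68.0), (35.9, 40.7, 68.0)),
    "BEVFusion(MIT)": ((68.5, 71.4, 119.2), (69.4, 71.8, 123.6)),
    "BEVFusion(Peking University)": ((69.6, 72.1, 190.3), (71.2, 73.1, 194.9)),
}


def overhead_pct(base_ms: float, new_ms: float) -> float:
    """Relative latency increase of new_ms over base_ms, in percent."""
    return 100.0 * (new_ms - base_ms) / base_ms


for name, (base, ours) in RESULTS.items():
    d_map = ours[0] - base[0]
    d_nds = ours[1] - base[1]
    extra = overhead_pct(base[2], ours[2])
    print(f"{name}: mAP +{d_map:.1f}, NDS +{d_nds:.1f}, latency +{extra:.1f}%")
```

With these numbers, the largest overhead is roughly 3.7% (BEVFusion(MIT)), and the two camera-only baselines show no measured change in latency.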

We sincerely thank the authors of BEVFusion(Peking University), BEVFusion(MIT), mmdetection3d, and TransFusion for open-sourcing their methods.