This project implements a fashion product search chatbot using Multimodal RAG (Retrieval-Augmented Generation), Amazon Bedrock, and OpenSearch. The chatbot processes user input to provide optimal product recommendations through both text and image-based searches.

This project is a code implementation of the concepts presented in the blog post "Multimodal RAG-Based Product Search Chatbot Using Amazon Bedrock and OpenSearch." If you want to understand the underlying principles and read a detailed explanation, I highly recommend checking out the original post.

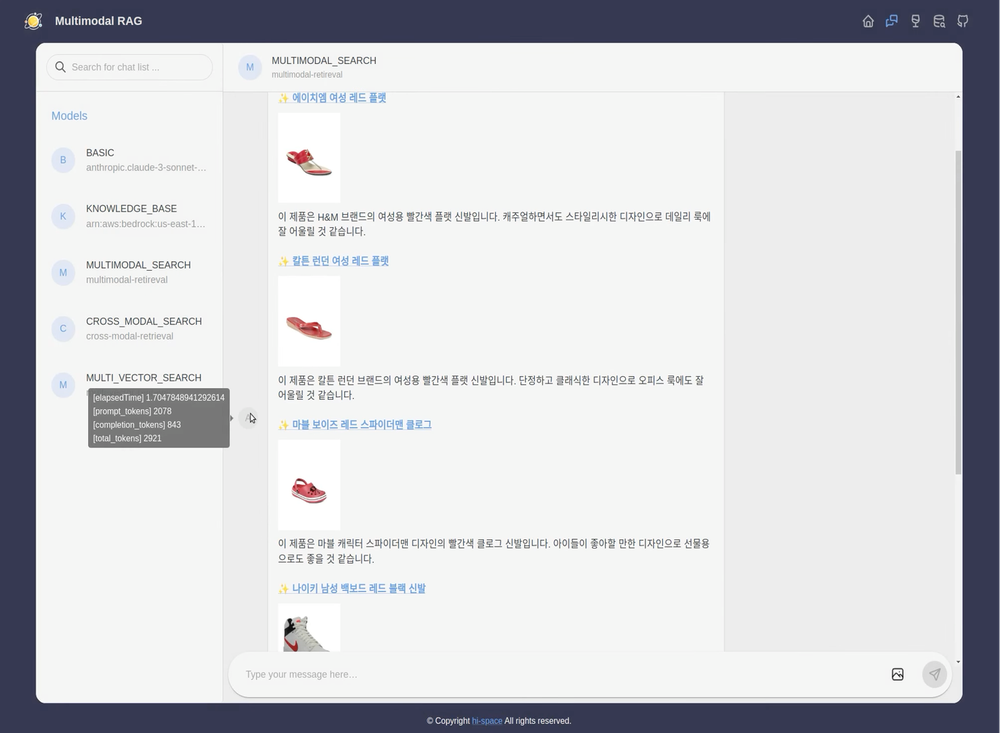

|

|

|---|---|

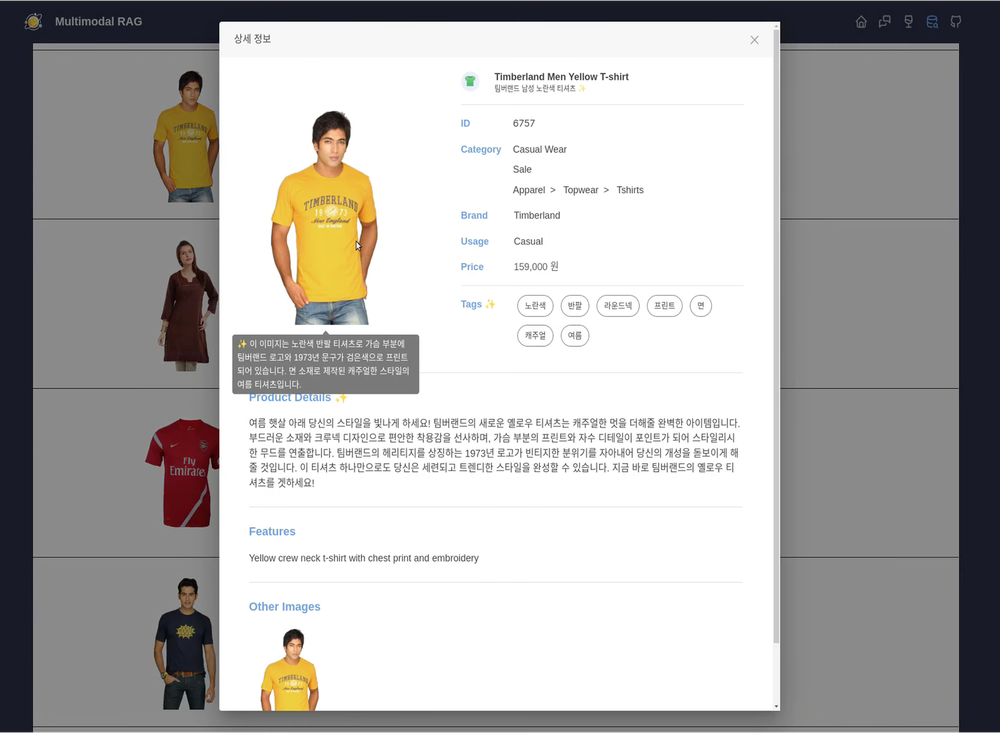

| In the item detail dialog, hovering over the image or title will show the caption generated by the LLM. | Use the tabs on the left to select the multimodal RAG search method. |

- Item Retrieval from DynamoDB: The system retrieves product information from a DynamoDB database and provides it through a RESTful API for easy access and integration.

- Chat History Storage Using Redis: User chat histories are stored in Redis, a fast, in-memory data store, allowing for quick retrieval and session management.

- Asynchronous Chat Content Generation and SSE Transmission: The chatbot generates responses asynchronously, ensuring smooth user interaction, and delivers updates in real-time using Server-Sent Events (SSE).

- Support for Knowledge Bases in Amazon Bedrock: The chatbot integrates with Amazon Bedrock to access and utilize various knowledge bases, enhancing its ability to answer complex queries and provide relevant product recommendations.

- OpenSearch Hybrid Search: Combines both traditional keyword-based search and semantic search using OpenSearch, enabling more accurate and relevant product search results.

- Multimodal Embedding and Multimodal LLM: The system employs multimodal embeddings and a Multimodal Large Language Model (LLM) to process and understand both text and image inputs, allowing for sophisticated, cross-modal product searches.
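The asynchronous generation and SSE delivery described in the feature list can be sketched with a plain async generator. This is a sketch under assumptions: `fake_llm_tokens`, `format_sse`, `chat_event_stream`, the `{"delta": ...}` payload shape, and the `[DONE]` end marker are hypothetical stand-ins, not the project's actual code. Only the `data:` / `event:` framing with a blank-line terminator comes from the SSE wire format itself.

```python
import asyncio
import json
from typing import AsyncIterator, List, Optional


def format_sse(data: str, event: Optional[str] = None) -> str:
    """Frame one message in the Server-Sent Events wire format."""
    lines = []
    if event is not None:
        lines.append(f"event: {event}")
    # A multi-line payload needs one "data:" field per line.
    lines.extend(f"data: {line}" for line in data.split("\n"))
    # A blank line terminates the event.
    return "\n".join(lines) + "\n\n"


async def fake_llm_tokens(prompt: str) -> AsyncIterator[str]:
    """Hypothetical stand-in for a streaming model call."""
    for token in ["Here", " are", " some", " jackets."]:
        await asyncio.sleep(0)  # yield control, as a real network call would
        yield token


async def chat_event_stream(prompt: str) -> AsyncIterator[str]:
    """Emit each generated token as its own SSE event, then an end marker."""
    async for token in fake_llm_tokens(prompt):
        yield format_sse(json.dumps({"delta": token}))
    yield format_sse("[DONE]", event="end")


async def collect(prompt: str) -> List[str]:
    """Helper for trying the stream outside a web server."""
    return [chunk async for chunk in chat_event_stream(prompt)]
```

In a FastAPI backend, a generator like `chat_event_stream` could be returned through `StreamingResponse(chat_event_stream(prompt), media_type="text/event-stream")`, so the browser receives tokens as they are produced instead of waiting for the full answer.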

├── backend    # Backend project (Python, FastAPI)
├── frontend   # Frontend project (TypeScript, Next.js)
├── data       # Sample data
└── notebooks  # Data preparation notebooks

Before proceeding with the steps below, ensure you have your AWS account information and have set up the necessary infrastructure for Amazon Bedrock, Amazon DynamoDB, and Amazon OpenSearch Service.

- Data Preparation: Refer to the README in the notebooks directory and follow the steps to run the code, which will store the embedding data in OpenSearch.

- Run the Backend: Follow the instructions in the backend README to start the backend server.

- Run the Frontend: Follow the instructions in the frontend README to start the frontend application.