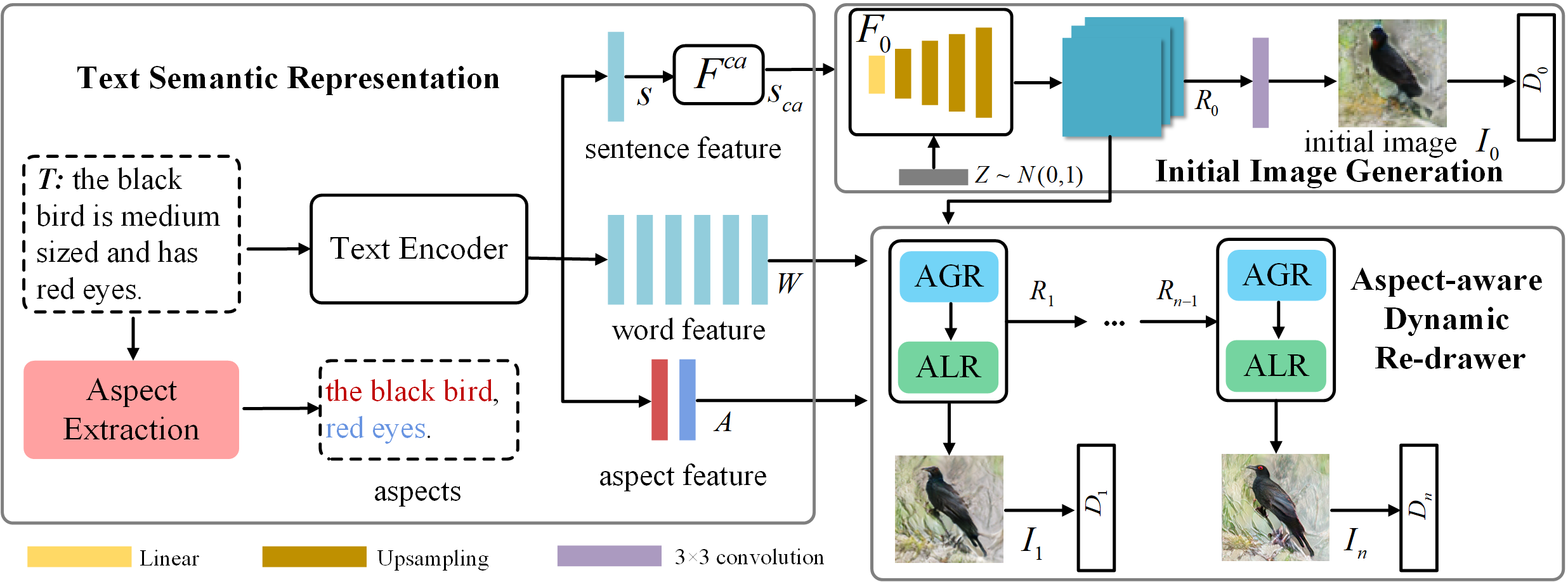

PyTorch implementation for reproducing the DAE-GAN results in the paper [DAE-GAN: Dynamic Aspect-aware GAN for Text-to-Image Synthesis] by Shulan Ruan, Yong Zhang, Kun Zhang, Yanbo Fan, Fan Tang, Qi Liu, Enhong Chen. (This work was performed when Ruan was an intern with Tencent AI Lab.)

- Python 3.6

- PyTorch

In addition, please add the project folder to PYTHONPATH and pip install the following packages:

- python-dateutil

- easydict

- pandas

- torchfile

- nltk

- scikit-image
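As a quick sanity check after installation, the following minimal sketch reports which of the listed packages cannot be imported. The helper name `missing_packages` is hypothetical and not part of this repository. Note that two import names differ from the pip names (`dateutil` for python-dateutil, `skimage` for scikit-image).

```python
import importlib.util

# pip name -> import name for the packages listed above.
# Two of them differ: python-dateutil -> dateutil, scikit-image -> skimage.
REQUIRED = {
    "python-dateutil": "dateutil",
    "easydict": "easydict",
    "pandas": "pandas",
    "torchfile": "torchfile",
    "nltk": "nltk",
    "scikit-image": "skimage",
}


def missing_packages(required=REQUIRED):
    """Return the pip names whose top-level module cannot be found.

    Hypothetical helper for illustration; it only checks that the module
    can be located, not that its version is compatible.
    """
    return [
        pip_name
        for pip_name, module in required.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages, try: pip install " + " ".join(missing))
    else:
        print("All listed packages are importable.")
```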

Data

- Download our preprocessed metadata for birds and coco, name each file captions.pickle, and save them to data/birds and data/coco respectively

- Download the birds image data. Extract them to data/birds/

- Download coco dataset and extract the images to data/coco/
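Before training, it can help to confirm that the metadata files ended up where the steps above put them. The sketch below is an illustration only: the helper `missing_data_files` is hypothetical, and it checks just the two `captions.pickle` files, since the names of the extracted image folders depend on the downloaded archives.

```python
from pathlib import Path

# Files expected by the steps above, relative to the data folder.
# Image sub-folders are deliberately not checked (their names are
# not stated here and depend on how the archives extract).
EXPECTED = ["birds/captions.pickle", "coco/captions.pickle"]


def missing_data_files(data_root="data"):
    """Return the expected relative paths that do not exist under data_root."""
    root = Path(data_root)
    return [rel for rel in EXPECTED if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_data_files()
    if missing:
        print("Missing under data/: " + ", ".join(missing))
    else:
        print("Metadata files found.")
```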

Training

- Train DAE-GAN models:

  - For bird dataset: `python main.py --cfg cfg/bird_DAEGAN.yml --gpu 0`

  - For coco dataset: `python main.py --cfg cfg/coco_DAEGAN.yml --gpu 0`

- `*.yml` files are example configuration files for training and evaluating our models.
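For scripted runs, the two training commands above can be assembled programmatically. This is only a convenience sketch: `train_command` is a hypothetical helper, and it assumes the script is launched from the project root, where `main.py` and the `cfg/` folder live.

```python
import sys

# Config paths taken from the training commands above.
CONFIGS = {
    "bird": "cfg/bird_DAEGAN.yml",
    "coco": "cfg/coco_DAEGAN.yml",
}


def train_command(dataset, gpu=0):
    """Build the argument list for training on 'bird' or 'coco'.

    Hypothetical helper; it only reproduces the documented command line
    and uses the current Python interpreter to run main.py.
    """
    if dataset not in CONFIGS:
        raise ValueError("dataset must be one of: " + ", ".join(sorted(CONFIGS)))
    return [sys.executable, "main.py", "--cfg", CONFIGS[dataset], "--gpu", str(gpu)]
```

The result can be passed to `subprocess.run(train_command("bird"), check=True)` to start training.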

Pretrained Model

- DAMSM for bird. Download and save it to DAMSMencoders/

- DAMSM for coco. Download and save it to DAMSMencoders/

- DAE-GAN for bird. Download and save it to models/. netG_3s means generation with 3 Generators (2 aspects) in total. netG_4s means generation with 4 Generators (3 aspects) in total.

- DAE-GAN for coco. Download and save it to models/

Validation

- To generate images for all captions in the validation dataset, change B_VALIDATION to True in the relevant eval_*.yml file, and then run `python main.py --cfg cfg/eval_coco.yml --gpu 1`

- We compute inception score for models trained on birds using StackGAN-inception-model.

- We compute inception score for models trained on coco using improved-gan/inception_score.
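The B_VALIDATION change described above can also be made with a small script instead of a manual edit. The sketch below is text-based and rests on an assumption: that the config contains a single-line entry of the form `B_VALIDATION: <value>`. The exact layout of the eval_*.yml files is not shown here, so check the file before relying on it. The function `enable_validation` is hypothetical.

```python
import re

# Matches a line such as "B_VALIDATION: False", keeping any indentation.
# Assumption: the key and its value sit on one line in the YAML file.
_B_VALIDATION = re.compile(r"^([ \t]*B_VALIDATION[ \t]*:[ \t]*)\S+", re.MULTILINE)


def enable_validation(yaml_text):
    """Return yaml_text with the B_VALIDATION value replaced by True."""
    new_text, count = _B_VALIDATION.subn(r"\g<1>True", yaml_text)
    if count == 0:
        raise ValueError("no B_VALIDATION entry found in the config text")
    return new_text
```

A typical use would be to read `cfg/eval_coco.yml` as text, pass it through `enable_validation`, and write the result back before running the evaluation command.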

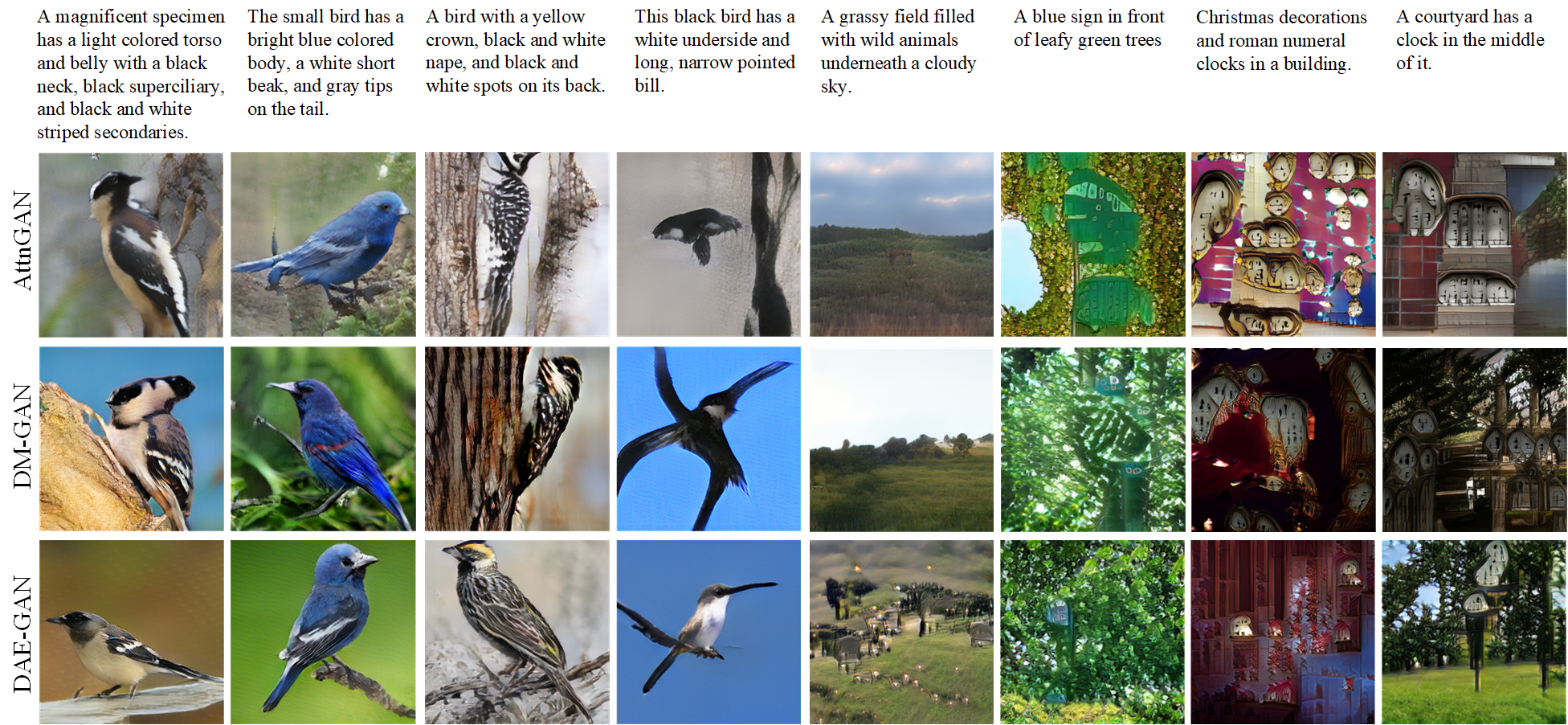

Examples generated by DAE-GAN

Citing DAE-GAN

If you find DAE-GAN useful in your research, please consider citing:

@inproceedings{ruan2021dae,

title={DAE-GAN: Dynamic Aspect-aware GAN for Text-to-Image Synthesis},

author={Ruan, Shulan and Zhang, Yong and Zhang, Kun and Fan, Yanbo and Tang, Fan and Liu, Qi and Chen, Enhong},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

pages={13960--13969},

year={2021}

}

Reference

- StackGAN++: Realistic Image Synthesis with Stacked Generative Adversarial Networks [code]

- Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks [code]

- AttnGAN: Fine-Grained Text to Image Generation with Attentional Generative Adversarial Networks [code]

- DM-GAN: Dynamic Memory Generative Adversarial Networks for Text-to-Image Synthesis [code]