The project is still under construction. We will continue to update it, and we welcome contributions and pull requests from the community.


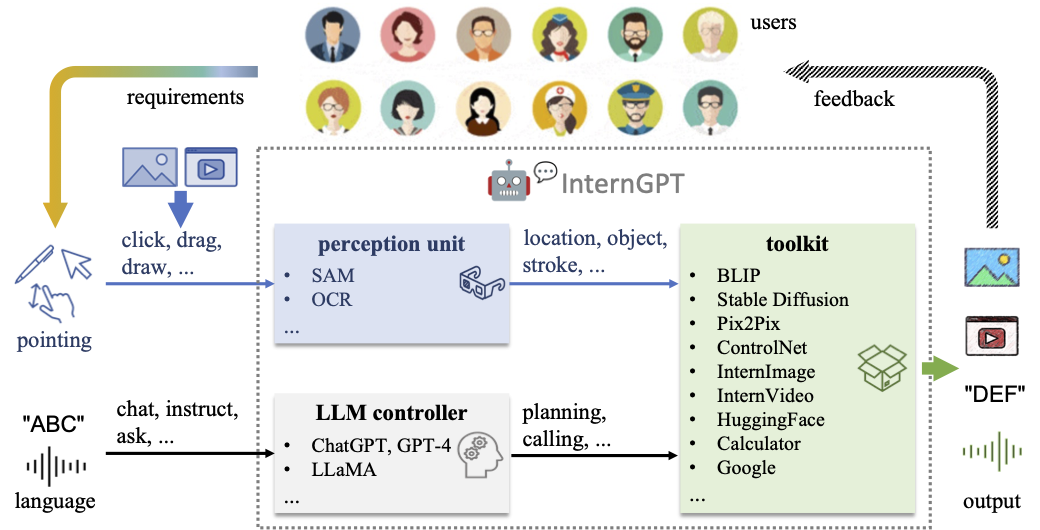

🤖💬 InternGPT [Paper]

InternGPT (short for iGPT) / InternChat (short for iChat) is a pointing-language-driven visual interactive system that lets you interact with ChatGPT by clicking, dragging, and drawing with a pointing device. The name InternGPT stands for interaction, nonverbal, and ChatGPT. Unlike existing interactive systems that rely on language alone, iGPT incorporates pointing instructions, which significantly improves the efficiency of communication between users and chatbots as well as the accuracy of chatbots on vision-centric tasks, especially in complicated visual scenarios. Additionally, iGPT uses an auxiliary control mechanism to improve the control capability of the LLM, and a large vision-language model termed Husky is fine-tuned for high-quality multi-modal dialogue (impressing ChatGPT-3.5-turbo with 93.89% GPT-4 Quality).

(2023.05.15) The model_zoo, including HuskyVQA, has been released! Try it on your local machine!

(2023.05.15) Our code is also publicly available on Hugging Face! You can duplicate the repository and run it on your own GPUs.

InternGPT is online (see https://igpt.opengvlab.com). Let's try it!

[NOTE] You may have to wait in a long queue. Alternatively, you can clone our repo and run it on your own GPU.

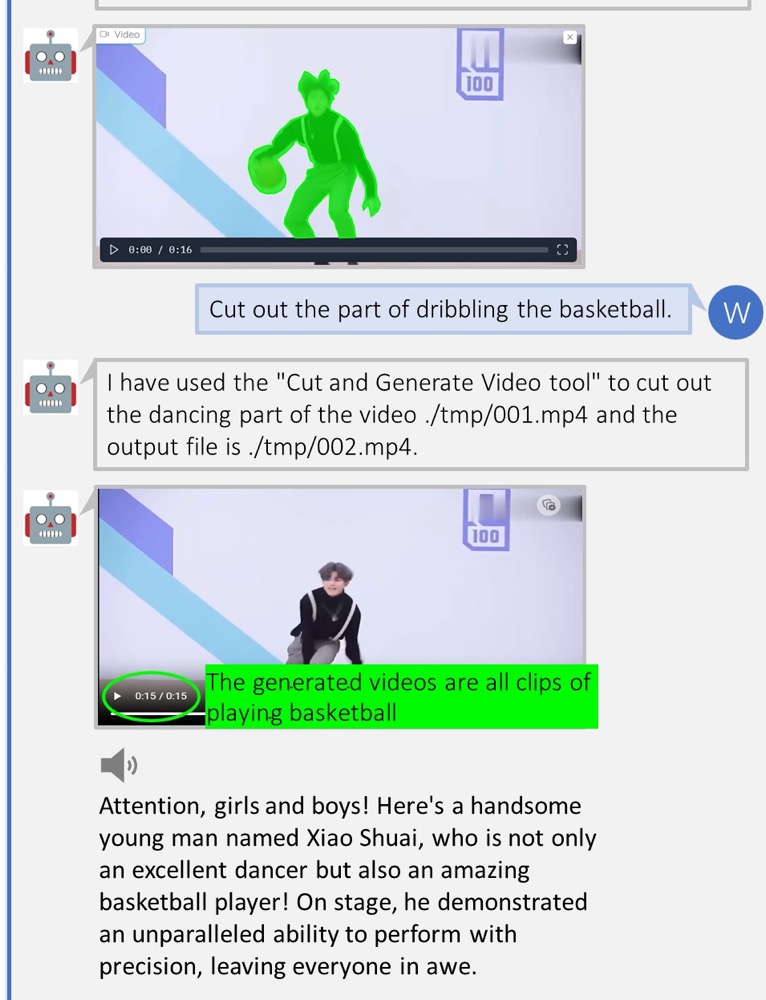

After uploading the image, you can have a multi-modal dialogue by sending messages like: "what is it in the image?" or "what is the background color of image?".

You can also interactively operate on, edit, or generate images as follows:

- Click the image and press the `Pick` button to visualize the segmented region, or press the `OCR` button to recognize the words at the chosen position;

- To remove the masked region in the image, send a message like: "remove the masked region";

- To replace the masked region in the image, send a message like: "replace the masked region with {your prompt}";

- To generate a new image, send a message like: "generate a new image based on its segmentation describing {your prompt}";

- To create a new image from your scribble, press the `Whiteboard` button and draw on the board. After drawing, press the `Save` button and send a message like: "generate a new image based on this scribble describing {your prompt}".
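The message formats listed above can be collected as string templates. The following is a minimal sketch for illustration only: the dictionary keys and the `build_message` helper are hypothetical names and are not part of the InternGPT code base. Only the message texts themselves come from the instructions above.

```python
# Message texts are copied from the instructions above; the keys and the
# build_message helper are hypothetical names used only for illustration.
TEMPLATES = {
    "remove": "remove the masked region",
    "replace": "replace the masked region with {prompt}",
    "generate_from_segmentation": (
        "generate a new image based on its segmentation describing {prompt}"
    ),
    "generate_from_scribble": (
        "generate a new image based on this scribble describing {prompt}"
    ),
}


def build_message(action: str, prompt: str = "") -> str:
    """Return the chat message for an action, filling in the user's prompt."""
    template = TEMPLATES[action]
    if "{prompt}" in template and not prompt.strip():
        raise ValueError(f"action {action!r} needs a non-empty prompt")
    return template.format(prompt=prompt.strip())


if __name__ == "__main__":
    print(build_message("remove"))
    print(build_message("replace", "a red sports car"))
```

The resulting string is what you would type into the chat box after selecting a region or saving a scribble.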

online_demo.mp4

- Support Chinese

- Support MOSS

- More powerful foundation models based on InternImage and InternVideo

- More accurate interactive experience

- OpenMMLab toolkit

- Web page & code generation

- Support search engine

- Low cost deployment

- Response verification for agent

- Prompt optimization

- User manual and video demo

- Support voice assistant

- Support click interaction

- Interactive image editing

- Interactive image generation

- Interactive visual question answering

- Segment anything

- Image inpainting

- Image caption

- Image matting

- Optical character recognition

- Action recognition

- Video caption

- Video dense caption

- Video highlight interpretation

- Linux

- Python 3.8+

- PyTorch 1.12+

- CUDA 11.6+

- GCC & G++ 5.4+

- GPU Memory >= 17G for loading basic tools (HuskyVQA, SegmentAnything, ImageOCRRecognition)
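Before installing, it may help to check a machine against the requirements listed above. The script below is a rough sketch, not part of this repo: `parse_version`, `meets_minimum`, and `check_environment` are hypothetical helper names, and "17G" is assumed to mean 17 GiB on the first GPU. The GCC/G++ requirement is not checked.

```python
import platform


def parse_version(text: str) -> tuple:
    """Turn a string such as '1.12.1+cu116' into (1, 12, 1)."""
    parts = []
    for piece in text.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(found: str, minimum: str) -> bool:
    """Compare dotted version strings numerically, component by component."""
    return parse_version(found) >= parse_version(minimum)


def check_environment() -> dict:
    """Return a requirement -> bool mapping for the current machine."""
    results = {
        "Linux": platform.system() == "Linux",
        "Python 3.8+": meets_minimum(platform.python_version(), "3.8"),
    }
    try:
        import torch  # imported lazily so the script runs without PyTorch
    except ImportError:
        results["PyTorch 1.12+"] = False
        return results
    results["PyTorch 1.12+"] = meets_minimum(torch.__version__, "1.12")
    cuda = torch.version.cuda  # None on CPU-only builds
    results["CUDA 11.6+"] = cuda is not None and meets_minimum(cuda, "11.6")
    if torch.cuda.is_available():
        # Assumption: "17G" is read as 17 GiB of memory on GPU 0.
        total_gib = torch.cuda.get_device_properties(0).total_memory / 1024**3
        results["GPU memory >= 17G"] = total_gib >= 17
    else:
        results["GPU memory >= 17G"] = False
    return results


if __name__ == "__main__":
    for requirement, ok in check_environment().items():
        print(f"{'OK     ' if ok else 'MISSING'}  {requirement}")
```

Note that `torch.version.cuda` reports the CUDA version PyTorch was built against, which may differ from the locally installed toolkit.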

conda create -n ichat python=3.8

conda activate ichat

pip install -r requirements.txt

Our model_zoo has been released on Hugging Face!

You can download it and directly place it into the root directory of this repo before running the app.

HuskyVQA, a strong VQA model, is also available in model_zoo. For more details, please refer to our report.

Run the following command to start a Gradio service:

python -u app.py --load "HuskyVQA_cuda:0,SegmentAnything_cuda:0,ImageOCRRecognition_cuda:0" --port 3456

If you want to enable the voice assistant, please use openssl to generate the certificate:

mkdir certificate

openssl req -x509 -newkey rsa:4096 -keyout certificate/key.pem -out certificate/cert.pem -sha256 -days 365 -nodes

and then run:

python -u app.py --load "HuskyVQA_cuda:0,SegmentAnything_cuda:0,ImageOCRRecognition_cuda:0" --port 3456 --https

This project is released under the Apache 2.0 license.
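A note on the `--load` value used in the launch commands above: it appears to pair each tool name with a device, joined by an underscore and separated by commas. The sketch below shows one way such a string could be split into a tool-to-device mapping. It is an assumption about the format, not the parser that `app.py` actually uses, and `parse_load_spec` is a hypothetical name.

```python
def parse_load_spec(spec: str) -> dict:
    """Map each tool name to its device, e.g. 'HuskyVQA' -> 'cuda:0'.

    Assumes entries look like '<Tool>_<device>' and are comma-separated;
    this mirrors the example command, not necessarily app.py itself.
    """
    mapping = {}
    for entry in spec.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate trailing commas
        # Split on the last underscore so the device keeps its ':' suffix.
        name, sep, device = entry.rpartition("_")
        if not sep or not name or not device:
            raise ValueError(f"expected '<Tool>_<device>', got {entry!r}")
        mapping[name] = device
    return mapping


if __name__ == "__main__":
    spec = "HuskyVQA_cuda:0,SegmentAnything_cuda:0,ImageOCRRecognition_cuda:0"
    for tool, device in parse_load_spec(spec).items():
        print(f"{tool} -> {device}")
```

Under this reading, placing a tool on a different device would mean changing the suffix after the underscore, for example `SegmentAnything_cuda:1` on a machine with a second GPU.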

If you find this project useful in your research, please consider citing:

@misc{2023interngpt,
  title={InternGPT: Solving Vision-Centric Tasks by Interacting with ChatGPT Beyond Language},
  author={Zhaoyang Liu and Yinan He and Wenhai Wang and Weiyun Wang and Yi Wang and Shoufa Chen and Qinglong Zhang and Yang Yang and Qingyun Li and Jiashuo Yu and Kunchang Li and Zhe Chen and Xue Yang and Xizhou Zhu and Yali Wang and Limin Wang and Ping Luo and Jifeng Dai and Yu Qiao},
  howpublished = {\url{https://arxiv.org/abs/2305.05662}},
  year={2023}
}

Thanks to the open source of the following projects:

Hugging Face, LangChain, TaskMatrix, SAM, Stable Diffusion, ControlNet, InstructPix2Pix, BLIP, Latent Diffusion Models, EasyOCR

You are welcome to discuss with us and help us continuously improve the user experience of InternGPT.

WeChat QR Code