Applications are packaged as Docker images, which Hilbert pulls and runs.

| Current top folders | Folder description |
|---|---|
| helpers | shell scripts shared between images |
| images | our base and application images |

NOTE: Some applications may need further services (applications) running in the background.

- Each image must reside in an individual sub-folder. Create one named after your image (lowercase; only `[a-z0-9]` characters should be used).
- Copy a simple existing `Dockerfile` (e.g. from `/chrome/`) and change it according to your needs (see below).
- Do the same with `Makefile` and `docker-compose.yml`:
  - `make pull` will try to pull the desired base image
  - `make` will try to (re-)build your image (currently no build arguments are supported)
  - `make check` will try to run the default command within your image
  - `make prune` will clean up dangling docker images (left after rebuilding images)

NOTE: make needs to be installed only on your development host.
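The naming rule and the copy step above can be sketched as a small shell helper. This is only an illustration: the function names `is_valid_image_name` and `new_image_dir` are hypothetical (they are not part of the repository), and the sketch assumes it is run from the folder that holds the image sub-folders, with `chrome/` used as the template.

```shell
#!/bin/sh
# Hypothetical helpers; not part of the repository.

# Succeeds only for non-empty names made of lowercase letters and digits.
# The character set is spelled out to avoid locale-dependent ranges.
is_valid_image_name() {
    case "$1" in
        ''|*[!abcdefghijklmnopqrstuvwxyz0123456789]*) return 1 ;;
        *) return 0 ;;
    esac
}

# Creates a sub-folder for a new image and seeds it from a template folder.
# $1: new image name, $2: template folder (assumed default: chrome)
new_image_dir() {
    name=$1
    template=${2:-chrome}
    is_valid_image_name "$name" || { echo "invalid image name: $name" >&2; return 1; }
    [ -e "$name" ] && { echo "folder already exists: $name" >&2; return 1; }
    mkdir "$name" || return 1
    for f in Dockerfile Makefile docker-compose.yml; do
        # Copy only the files the template actually provides.
        [ -f "$template/$f" ] && cp "$template/$f" "$name/$f"
    done
    return 0
}
```

After `new_image_dir myapp`, the copied files still have to be adapted by hand before running `make` inside `myapp/`.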

For example see chrome/Dockerfile.

- Specify your contact email via `MAINTAINER`
- Choose a proper base image among the already existing ones (see above) - `FROM`

NOTE: currently we base our images on top of ~~`phusion/baseimage:0.9.18`~~ `hilbert/baseimage`, which is based on `ubuntu:14.04` and contains a useful launcher wrapper (`/sbin/my_init`).

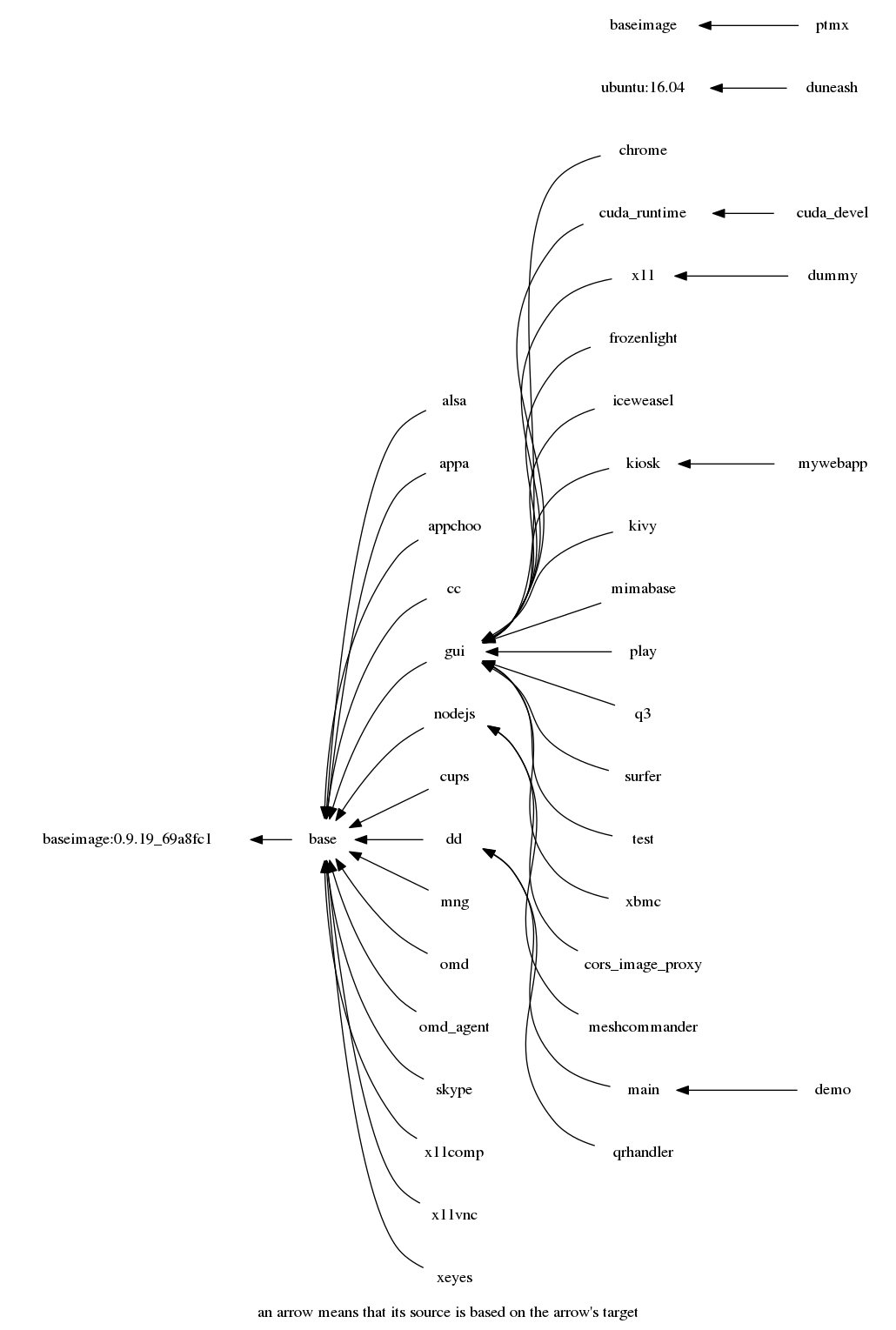

NOTE: we share docker images as much as possible by starting each new image from the closest possible base image.

- Install additional SW packages (e.g. see `chrome/Dockerfile`) - `RUN`

NOTE: one may also need to add keys and package repositories.

NOTE: it may be necessary to update repository caches before installing some packages. Also do not forget to clean up afterwards.

NOTE: The best way to install something is `RUN update.sh && install.sh YOUR_PACKAGE && clean.sh`

- Add necessary local and online resources into your image - `ADD` or `COPY`

NOTE: use `ADD URL_TO_FILE FILE_NAME_IN_IMAGE` to add something from the network at build time.

NOTE: use `COPY local_file1 local_file2 ... PATH_IN_IMAGE/` to copy local files (located alongside your Dockerfile) into the image (with owner `root` and the same file permissions).

- Run any initial configuration (post-installation) actions - `RUN`

NOTE: only executables previously installed or added into the image can be run.

- One can have build arguments, but we do not use them.
- One can set environment variables within `Dockerfile` and later override them at run time.
- Exposing network ports may be done either statically inside `Dockerfile` or dynamically at run time.
- The same goes for the specification of the default command / entry-point script/application and for labels.

NOTE: there is no need to put run-time specifications inside `Dockerfile` (e.g. `EXPOSE`, `ENTRYPOINT`, `CMD`, etc.) as they will be overridden at run time via docker-compose.
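The Dockerfile steps above can be put together roughly as follows. The sketch is a shell function that prints a Dockerfile skeleton, so that it stays in the same language as the other commands in this document. The function name `print_dockerfile` and the address `you@example.org` are hypothetical, the upper-case words are the placeholders used in the notes above and must be replaced before the file can be built, and the sketch assumes that `update.sh`, `install.sh` and `clean.sh` are available in the chosen base image.

```shell
#!/bin/sh
# Prints a Dockerfile skeleton that follows the steps described above.
# Hypothetical helper: the function name, you@example.org and all
# placeholders are illustrative and must be replaced.
# $1: base image (assumed default: hilbert/baseimage)
print_dockerfile() {
    base=${1:-hilbert/baseimage}
    cat <<EOF
FROM $base
MAINTAINER you@example.org

# Install additional packages with the shared helper scripts
RUN update.sh && install.sh YOUR_PACKAGE && clean.sh

# Fetch a remote resource at build time
ADD URL_TO_FILE FILE_NAME_IN_IMAGE

# Copy files located alongside the Dockerfile
COPY local_file1 local_file2 PATH_IN_IMAGE/

# Post-installation configuration goes into further RUN steps
EOF
}
```

For example, `print_dockerfile > myapp/Dockerfile` would write the skeleton into a new image folder. No `EXPOSE`, `ENTRYPOINT` or `CMD` lines are emitted, in line with the note on run-time specifications.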

TODO: create docker-compose.yml for all images

`make` to build the image

What can be specified in run-time:

- your docker image
- default command
- environment variables to be passed to the executed command
- exposed (and redirected) ports
- mounted devices
- mounted volumes (local and docker's logical)
- restart policy: "on-failure:5" (e.g. see https://blog.codeship.com/ensuring-containers-are-always-running-with-dockers-restart-policy/)
- labels attached to the running container (e.g. `is_top_app=0` for a BG service and `is_top_app=1` for a top-front GUI application)
- working directory
- mode of execution: not `privileged` in most cases

See for example mng/docker-compose.yml or mng/Makefile
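To make the run-time settings listed above more concrete, here is a sketch that expresses them as plain `docker run` flags instead of a `docker-compose.yml`. It only prints the command and does not execute it. The function name `print_run_command`, the image name `hilbert/myapp`, the command `my_command` and every concrete value (variable, port, device, volume, working directory) are hypothetical examples, not settings taken from the repository.

```shell
#!/bin/sh
# Prints (does not execute) a `docker run` command that covers the
# run-time settings listed above. All concrete values are made up.
# $1: docker image (hypothetical default: hilbert/myapp)
print_run_command() {
    image=${1:-hilbert/myapp}
    # Flags, in order: restart policy, label, environment variable,
    # published port, device, volume, working directory.
    # --privileged is deliberately left out (not needed in most cases).
    set -- \
        --restart on-failure:5 \
        --label is_top_app=1 \
        -e DISPLAY=:0 \
        -p 8080:80 \
        --device /dev/dri \
        -v /tmp/.X11-unix:/tmp/.X11-unix \
        -w /root
    # The image is followed by the default command to run inside it.
    echo "docker run $* $image my_command"
}
```

Calling `print_run_command hilbert/demo` prints a single command line that can be reviewed before it is run by hand.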

`setup.sh`: Pull or build necessary starting images (`hilbert/*`). Previously our images were available via a different tag in the `malex984/dockapp` repository (https://registry.hub.docker.com/u/malex984/dockapp/). Run (and change) `setup.sh` in order to pull the base image and build the starting images. We assume that the Linux host runs the docker service.

- We are experimenting with different customization approaches:
  - `hilbert/dummy` contains `customize.sh`, which customizes the running `:dummy` container. These customization changes can then be detected with `docker diff` and archived together (e.g. `/tmp/OGL.tgz`) for later use by `hilbert/base/setup_ogl.sh`.
  - (obsolete) `:up/customize.sh`: Customize each libGL-needing image (e.g. `:x11` and `:test` by default for now). Running `:up/customize.sh` on such a host will enable one to detect known hardware or kernel modules (e.g. VirtualBox Guest Additions or NVidia driver) in order to localize/customize some starting images under a corresponding tag name (e.g. `:test.nv.340.76` or `:x11.vb.4.3.26`), which will then be tagged with local names (e.g. `test:latest` or `x11:latest`). We assume the host system to be fully pre-configured (with all necessary kernel modules installed and loaded). Therefore we avoid installing/building kernel modules inside a docker container (e.g. using `dkms`).

`runme.sh`: Launch demo prototype application. The shell script `runme.sh` is supposed to be the demo entry point. Using the host docker it runs the `main` image (or its alteration if available), which contains a glue-together script `main.sh` that now takes control (!) over the host system (docker service and `/dev`).

Note that `main.sh` (and its helpers, e.g. `run.sh` and `sv.sh`) is the only piece which is supposed to be aware of docker! The glue presents a choice menu (e.g. via `hilbert/menu/menu.sh`), which exits with some return code, depending on which the glue script takes some action or quits the main infinite loop.

- Choose `X11Server` if your host was not running an X11 server, in order to switch the host monitor into graphical mode. NOTE: please don't do that while using the host monitor in text mode, since at the moment `menu.sh` is only suitable for console/text mode (but we are working on a GUI alternative). Better to do that via SSH.
- Now, assuming a running X11 (on the host or inside a docker container), one can choose any application to run (e.g. `Test`) or Quit.
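The menu-and-return-code mechanism described above can be illustrated with a minimal loop. This is not the code of `main.sh`: the return codes, the action names and the functions `action_for_code` and `glue_loop` are invented for the sketch, and the real actions (starting X11, launching an application) are replaced by a printed message.

```shell
#!/bin/sh
# Hypothetical sketch of a glue loop; the return codes and action names
# are invented for illustration and do not come from main.sh.

# Maps a menu return code to an action name.
action_for_code() {
    case "$1" in
        0) echo quit ;;
        1) echo start_x11 ;;
        2) echo run_test ;;
        *) echo unknown ;;
    esac
}

# Runs the menu command given as arguments until it asks to quit.
glue_loop() {
    while :; do
        code=0
        "$@" || code=$?            # remember the menu's return code
        action=$(action_for_code "$code")
        case "$action" in
            quit)    return 0 ;;
            unknown) echo "ignoring unknown menu result: $code" >&2 ;;
            *)       echo "would now perform: $action" ;;
        esac
    done
}
```

A real glue script would be started along the lines of `glue_loop ./menu.sh`, with the `echo` lines replaced by the actual actions.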

    xterm: Error 32, errno 2: No such file or directory
    Reason: get_pty: not enough ptys

It seems that something clears the permissions on `/dev/pts/ptmx` while docker mounts `/dev` or while containers use it.

Since this problem happens rarely, it may be related to an unexpected `docker rm -vf` for a running container with an allocated pty. Also the following may be related:

Quick fix: `sudo chmod a+rw /dev/pts/ptmx`

NOTE: what about /dev/ptmx?

According to http://stackoverflow.com/a/29546560 : if your machine had a kernel update but you didn't restart yet then docker freaks out like that.
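Before applying the quick fix, one can check whether the permissions are actually the problem. The following sketch does that; the function names `has_rw_for_all` and `check_ptmx` are hypothetical, it relies on GNU `stat`, and including `/dev/ptmx` in the check is only an assumption prompted by the open question above.

```shell
#!/bin/sh
# Hypothetical helpers; not part of the repository. Relies on GNU stat.

# Succeeds when the file at $1 is readable and writable by user, group
# and others, i.e. when its mode includes all bits of 0666.
has_rw_for_all() {
    mode=$(stat -c '%a' "$1") || return 2
    [ $(( 0$mode & 0666 )) -eq $(( 0666 )) ]
}

# Reports pty multiplexer devices that lack a+rw and prints the fix.
check_ptmx() {
    for dev in /dev/pts/ptmx /dev/ptmx; do
        [ -e "$dev" ] || continue
        has_rw_for_all "$dev" || echo "$dev: try 'sudo chmod a+rw $dev'"
    done
}
```

`check_ptmx` prints nothing when both devices already grant read and write access to everyone.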

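    # remove dangling images
    docker rmi $(docker images -f "dangling=true" -q)
    # remove images from the old malex984/dockapp repository
    docker images | grep malex984/dockapp | awk '{ print $1 ":" $2 }' | xargs docker rmi -f
    # remove all hilbert/* images
    docker images | grep 'hilbert/' | awk '{ print $1 ":" $2 }' | xargs docker rmi -f
    # remove the locally tagged images
    docker rmi -f x11 test dummy
    # remove all containers (running or stopped) together with their volumes
    docker ps -aq | xargs docker rm -fv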

This project is licensed under the Apache v2 license. See also Notice.