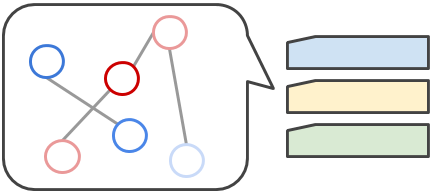

Implementations of two types of graph convolution layers, applied to TCGA RNA-seq data over a gene graph defined by the BioGrid protein-protein interaction (PPI) network.

    def convolutionGraph(inputs,
                         num_outputs,
                         glap,
                         activation_fn=nn.relu,
                         weights_initializler=initializers.xavier_initializer(),
                         biases_initializer=init_ops.zeros_initializer(),
                         reuse=None,
                         scope=None):

`convolutionGraph()` implements a graph convolution layer as defined by Kipf et al.

- `inputs` is a 2D tensor that goes into the layer.
- `num_outputs` specifies the number of channels wanted on the output tensor.
- `glap` is an instance of `tf.SparseTensor` that defines a graph Laplacian matrix DAD.
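To make the role of `glap` concrete, here is a minimal NumPy sketch of the propagation rule from Kipf et al., `relu(glap @ inputs @ weights + biases)`. It is not the repository's TensorFlow code. The helper names, the dense matrices (in place of `tf.SparseTensor`), the nodes-by-channels layout of `inputs`, and the reading of DAD as D^{-1/2} A D^{-1/2} are all assumptions made for illustration.

```python
import numpy as np

def normalize_adjacency(adj):
    """Return D^{-1/2} A D^{-1/2} for a symmetric adjacency matrix (assumed form of DAD)."""
    deg = adj.sum(axis=1)
    # Leave isolated nodes (degree 0) at zero instead of dividing by zero.
    inv_sqrt = np.zeros_like(deg, dtype=float)
    nonzero = deg > 0
    inv_sqrt[nonzero] = deg[nonzero] ** -0.5
    return adj * inv_sqrt[:, None] * inv_sqrt[None, :]

def graph_conv(inputs, glap, weights, biases):
    """One graph convolution layer: relu(glap @ inputs @ weights + biases)."""
    return np.maximum(glap @ inputs @ weights + biases, 0.0)

# Toy path graph 0 - 1 - 2 (hypothetical data, not from BioGrid).
adj = np.array([[0.0, 1.0, 0.0],
                [1.0, 0.0, 1.0],
                [0.0, 1.0, 0.0]])
glap = normalize_adjacency(adj)

inputs = np.ones((3, 1))    # 3 nodes, 1 input channel
weights = np.ones((1, 2))   # num_outputs = 2
biases = np.zeros(2)
outputs = graph_conv(inputs, glap, weights, biases)
print(outputs.shape)
```

With a sparse `glap`, the first matrix product would be a sparse-dense multiplication, which is what keeps the layer cheap on a large gene graph.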

    def convolutionGraph_sc(inputs,
                            num_outputs,
                            glap,
                            activation_fn=nn.relu,
                            weights_initializler=initializers.xavier_initializer(),
                            biases_initializer=init_ops.zeros_initializer(),
                            reuse=None,
                            scope=None):

`convolutionGraph_sc()` implements a graph convolution layer as defined by Kipf et al., except that self-connections of nodes are allowed.

- `inputs` is a 2D tensor that goes into the layer.
- `num_outputs` specifies the number of channels wanted on the output tensor.
- `glap` is an instance of `tf.SparseTensor` that defines a graph Laplacian matrix DAD.
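One common way to allow self-connections is to add the identity matrix to the adjacency before normalizing, so that each node's own features contribute to its output. Whether `convolutionGraph_sc()` does exactly this is an assumption; the sketch below only illustrates the idea in NumPy, with a hypothetical helper name and toy data.

```python
import numpy as np

def normalized_adjacency_with_self_loops(adj):
    """Return D~^{-1/2} (A + I) D~^{-1/2}, where D~ is the degree matrix of A + I."""
    a_tilde = adj + np.eye(adj.shape[0])
    deg = a_tilde.sum(axis=1)          # every degree is >= 1 thanks to the self-loop
    inv_sqrt = deg ** -0.5
    return a_tilde * inv_sqrt[:, None] * inv_sqrt[None, :]

# Toy path graph 0 - 1 - 2 (hypothetical data).
adj = np.array([[0.0, 1.0, 0.0],
                [1.0, 0.0, 1.0],
                [0.0, 1.0, 0.0]])
glap_sc = normalized_adjacency_with_self_loops(adj)

# A signal that lives only on node 0.
features = np.array([[1.0], [0.0], [0.0]])
propagated = glap_sc @ features
print(propagated.ravel())
```

Because the diagonal of `glap_sc` is nonzero, node 0 keeps part of its own signal after propagation; without self-loops its output would depend only on its neighbors.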

This file contains 4 common initialization methods for network weights, i.e., uniform(), glorot(), zeros(), and ones().

We currently do not use it.
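For readers unfamiliar with these initializers, the following NumPy sketch shows what functions with these four names typically compute. The signatures, the `scale` default, and the use of the Glorot uniform variant are assumptions, not a description of the file's actual code.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the sketch is reproducible

def uniform(shape, scale=0.05):
    """Sample uniformly from [-scale, scale] (scale value is an assumption)."""
    return rng.uniform(-scale, scale, size=shape)

def glorot(shape):
    """Glorot (Xavier) uniform init: limit = sqrt(6 / (fan_in + fan_out))."""
    fan_in, fan_out = shape
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=shape)

def zeros(shape):
    """All-zero array, commonly used for biases."""
    return np.zeros(shape)

def ones(shape):
    """All-one array."""
    return np.ones(shape)

w = glorot((4, 2))   # weights for 4 input channels and 2 output channels
b = zeros((2,))
print(w.shape, b.shape)
```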

This file contains

- Two versions of DataFeeder instances.

- Helper functions for data processing. See ipynb/DataProcessing.ipynb for how to use them.

- A class definition for `intx`.

We have a PPI matrix extracted from BioGrid in March 2018, which defines the graph. We also have TCGA RNA-seq data for approximately 9000 samples; these are the data that get convolved over the graph. You can download the data files from here.
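Since the layers expect the graph as a `tf.SparseTensor`, a dense PPI matrix has to be converted to coordinate form first. The sketch below shows one way to do that in NumPy. The helper name and the toy matrix are hypothetical, and the repository's own data-processing helpers may work differently.

```python
import numpy as np

def dense_to_coo(matrix):
    """Split a dense matrix into (indices, values, dense_shape) in row-major order."""
    rows, cols = np.nonzero(matrix)
    indices = np.stack([rows, cols], axis=1).astype(np.int64)
    values = matrix[rows, cols].astype(np.float32)
    dense_shape = np.array(matrix.shape, dtype=np.int64)
    return indices, values, dense_shape

# Toy 3-gene interaction matrix (hypothetical, not BioGrid data).
ppi = np.array([[0, 1, 0],
                [1, 0, 1],
                [0, 1, 0]], dtype=np.float32)
indices, values, dense_shape = dense_to_coo(ppi)
print(indices.tolist(), values.tolist(), dense_shape.tolist())
```

These three arrays correspond to the `indices`, `values`, and `dense_shape` arguments of `tf.SparseTensor`. In practice the matrix would be normalized before this conversion.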

See ipynb/runningGraphConv.py for the implementation and training of the models. It should be straightforward.

For any question, e-mail me at hiranumn at cs dot washington dot edu.