Supported Backbones:

Supported Heads:

Supported Standalone Models:

Supported Modules:

Note: Check each backbone's accuracy and download its weights from image-classification if they are not provided in this repo.

ADE20K-val

| Model | Backbone | Head | mIoU (%) | Params (M) | GFLOPs (512x512) | Weights |
|---|---|---|---|---|---|---|
| SegFormer B0\|B1\|B2\|B3\|B4 | MiT | SegFormer | 38.0\|43.1\|47.5\|50.0\|51.1 | 4\|14\|28\|47\|64 | 8\|16\|62\|79\|96 | models, backbones |
| CycleMLP B1\|B2\|B3\|B4\|B5 | CycleMLP | FPN | 39.5\|42.4\|44.5\|45.1\|45.6 | 19\|31\|42\|56\|79 | - | backbones |

CityScapes-val

| Model | Img Size | Backbone | mIoU (%) | Params (M) | GFLOPs | Weights |
|---|---|---|---|---|---|---|
| SegFormer B0\|B1 | 1024x1024 | MiT | 78.1\|80.0 | 4\|14 | 126\|244 | backbones |
| FaPN | 512x1024 | ResNet-50 | 80.0 | 33 | - | N/A |
| SFNet | 1024x1024 | ResNetD-18 | 79.0 | 13 | - | backbones |
| HarDNet | 1024x1024 | HarDNet-70 | 77.7 | 4 | 35 | model, backbone |
| DDRNet 23slim\|23 | 1024x2048 | DDRNet | 77.8\|79.5 | 6\|20 | 36\|143 | models, backbones |

| Dataset | Type | Categories | Train Images | Val Images | Test Images | Image Size (HxW) |
|---|---|---|---|---|---|---|
| COCO-Stuff | General Scene Parsing | 171 | 118,000 | 5,000 | 20,000 | - |
| ADE20K | General Scene Parsing | 150 | 20,210 | 2,000 | 3,352 | - |
| PASCALContext | General Scene Parsing | 59 | 4,996 | 5,104 | 9,637 | - |
| SUN RGB-D | Indoor Scene Parsing | 37 | 2,666 | 2,619 | 5,050+labels | - |
| Mapillary Vistas | Street Scene Parsing | 65 | 18,000 | 2,000 | 5,000 | 1080x1920 |
| CityScapes | Street Scene Parsing | 19 | 2,975 | 500 | 1,525+labels | 1024x2048 |
| CamVid | Street Scene Parsing | 11 | 367 | 101 | 233+labels | 720x960 |
| MHPv2 | Multi-Human Parsing | 59 | 15,403 | 5,000 | 5,000 | - |
| MHPv1 | Multi-Human Parsing | 19 | 3,000 | 1,000 | 980+labels | - |
| LIP | Multi-Human Parsing | 20 | 30,462 | 10,000 | - | - |
| CIHP | Multi-Human Parsing | 20 | 28,280 | 5,000 | - | - |
| ATR | Single-Human Parsing | 18 | 16,000 | 700 | 1,000+labels | - |
| SUIM | Underwater Imagery | 8 | 1,525 | - | 110+labels | - |

Check DATASETS to find more segmentation datasets.

Datasets Structure

Datasets should have the following structure:

    data
    |__ ADEChallenge
        |__ ADEChallengeData2016
            |__ images
                |__ training
                |__ validation
            |__ annotations
                |__ training
                |__ validation

    |__ CityScapes
        |__ leftImg8bit
            |__ train
            |__ val
            |__ test
        |__ gtFine
            |__ train
            |__ val
            |__ test

    |__ CamVid
        |__ train
        |__ val
        |__ test
        |__ train_labels
        |__ val_labels
        |__ test_labels

    |__ VOCdevkit
        |__ VOC2010
            |__ JPEGImages
            |__ SegmentationClassContext
            |__ ImageSets
                |__ SegmentationContext
                    |__ train.txt
                    |__ val.txt

    |__ COCO
        |__ images
            |__ train2017
            |__ val2017
        |__ labels
            |__ train2017
            |__ val2017

    |__ MHPv1
        |__ images
        |__ annotations
        |__ train_list.txt
        |__ test_list.txt

    |__ MHPv2
        |__ train
            |__ images
            |__ parsing_annos
        |__ val
            |__ images
            |__ parsing_annos

    |__ LIP
        |__ LIP
            |__ TrainVal_images
                |__ train_images
                |__ val_images
            |__ TrainVal_parsing_annotations
                |__ train_segmentations
                |__ val_segmentations

        |__ CIHP
            |__ instance-leve_human_parsing
                |__ train
                    |__ Images
                    |__ Category_ids
                |__ val
                    |__ Images
                    |__ Category_ids

        |__ ATR
            |__ humanparsing
                |__ JPEGImages
                |__ SegmentationClassAug

    |__ SUIM
        |__ train_val
            |__ images
            |__ masks
        |__ TEST
            |__ images
            |__ masks

    |__ SunRGBD
        |__ SUNRGBD
            |__ kv1/kv2/realsense/xtion
        |__ SUNRGBDtoolbox
            |__ traintestSUNRGBD
                |__ allsplit.mat

    |__ Mapillary
        |__ training
            |__ images
            |__ labels
        |__ validation
            |__ images
            |__ labels

Note: For PASCALContext, download the annotations from here and put them in the VOC2010 directory.
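Before training, it can help to confirm that a dataset folder matches the layout above. The sketch below is a hypothetical helper, not part of this repo: `EXPECTED_DIRS` and `missing_dirs` are illustrative names, and only the ADE20K and CityScapes entries from the tree are listed.

```python
from pathlib import Path

# Sub-directories expected under the data root, copied from the tree above.
# Only two datasets are listed here; extend the mapping as needed.
EXPECTED_DIRS = {
    "ADE20K": [
        "ADEChallenge/ADEChallengeData2016/images/training",
        "ADEChallenge/ADEChallengeData2016/images/validation",
        "ADEChallenge/ADEChallengeData2016/annotations/training",
        "ADEChallenge/ADEChallengeData2016/annotations/validation",
    ],
    "CityScapes": [
        "CityScapes/leftImg8bit/train",
        "CityScapes/leftImg8bit/val",
        "CityScapes/leftImg8bit/test",
        "CityScapes/gtFine/train",
        "CityScapes/gtFine/val",
        "CityScapes/gtFine/test",
    ],
}


def missing_dirs(data_root, dataset):
    """Return the expected sub-directories of `dataset` that do not exist."""
    root = Path(data_root)
    return [rel for rel in EXPECTED_DIRS[dataset] if not (root / rel).is_dir()]


if __name__ == "__main__":
    for name in EXPECTED_DIRS:
        missing = missing_dirs("data", name)
        status = "ok" if not missing else "missing: " + ", ".join(missing)
        print(f"{name}: {status}")
```

An empty result from `missing_dirs` means every listed folder was found; it does not check the files inside them.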

Augmentations

Check out the notebook here to test the augmentation effects.

Pixel-level Transforms:

- ColorJitter (Brightness, Contrast, Saturation, Hue)

- Gamma, Sharpness, AutoContrast, Equalize, Posterize

- GaussianBlur, Grayscale

Spatial-level Transforms:

- Affine, RandomRotation

- HorizontalFlip, VerticalFlip

- CenterCrop, RandomCrop

- Pad, ResizePad, Resize

- RandomResizedCrop
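In segmentation, spatial-level transforms have to be applied to the image and its label mask with the same random parameters, while pixel-level transforms change only the image. The following is a minimal pure-Python sketch of that idea, using nested lists in place of tensors; `random_horizontal_flip` and `adjust_brightness` are illustrative helpers, not this repo's transform classes.

```python
import random


def random_horizontal_flip(image, mask, p=0.5, rng=random):
    """Flip image and mask together so pixels and labels stay aligned."""
    if rng.random() < p:
        image = [row[::-1] for row in image]
        mask = [row[::-1] for row in mask]
    return image, mask


def adjust_brightness(image, mask, factor=1.2):
    """Scale pixel intensities (clamped to 0..255); the mask is untouched."""
    image = [[min(255, max(0, round(v * factor))) for v in row] for row in image]
    return image, mask


if __name__ == "__main__":
    image = [[10, 20, 30], [40, 50, 60]]   # tiny grayscale image (H=2, W=3)
    mask = [[0, 0, 1], [2, 2, 1]]          # per-pixel class ids

    image, mask = random_horizontal_flip(image, mask, p=1.0)  # always flip
    image, mask = adjust_brightness(image, mask, factor=2.0)
    print(image)
    print(mask)
```

Both helpers share the same `(image, mask)` signature, so they can be chained in any order without special-casing which kind of transform is being applied.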

Requirements

- python >= 3.6

- torch >= 1.8.1

- torchvision >= 0.9.1

Other requirements can be installed with `pip install -r requirements.txt`.
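A quick way to check an environment against the minimum versions listed above is to compare version tuples rather than strings. This is a small sketch; `parse_version` and `meets_minimum` are hypothetical helpers and handle only plain numeric versions with an optional local suffix such as `+cu111`.

```python
import sys

# Minimum versions taken from the list above.
MINIMUMS = {"python": "3.6", "torch": "1.8.1", "torchvision": "0.9.1"}


def parse_version(text):
    """Turn '1.8.1+cu111' into (1, 8, 1); ignores any local suffix after '+'."""
    core = text.split("+", 1)[0]
    return tuple(int(part) for part in core.split(".") if part.isdigit())


def meets_minimum(installed, minimum):
    """True if the installed version is at least the minimum version."""
    return parse_version(installed) >= parse_version(minimum)


if __name__ == "__main__":
    python_version = ".".join(str(n) for n in sys.version_info[:3])
    print("python", python_version, meets_minimum(python_version, MINIMUMS["python"]))
    # torch and torchvision expose a __version__ string that can be checked the
    # same way, e.g. meets_minimum(torch.__version__, MINIMUMS["torch"]).
```

Comparing tuples avoids the string-comparison pitfall where `"1.10.0"` would sort before `"1.8.1"`.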

Configuration

Create a configuration file in `configs`. A sample configuration for the ADE20K dataset can be found here. Edit the fields as needed. This configuration file is required by the training, evaluation and prediction scripts.
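The exact layout of the configuration file is defined by the sample in `configs`, and the sketch below does not reproduce it. It only illustrates, with a hypothetical nested dictionary standing in for a parsed YAML file, how one might look up a field such as `MODEL_PATH` wherever it sits in the hierarchy. The section names `TRAIN` and `EVAL` and all values are assumptions made for the example.

```python
def find_fields(cfg, name, path=()):
    """Yield (path, value) for every key called `name` in a nested dict."""
    for key, value in cfg.items():
        here = path + (key,)
        if key == name:
            yield here, value
        if isinstance(value, dict):
            yield from find_fields(value, name, here)


if __name__ == "__main__":
    # Hypothetical stand-in for a parsed configuration file.
    cfg = {
        "TRAIN": {"DDP": False},
        "EVAL": {"MODEL_PATH": "output/model.pth", "MSF": {"ENABLE": False}},
    }
    for path, value in find_fields(cfg, "MODEL_PATH"):
        print(".".join(path), "=", value)
```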

Training

To train with a single GPU:

    $ python tools/train.py --cfg configs/CONFIG_FILE.yaml

To train with multiple GPUs, set the `DDP` field in the config file to true and run:

    $ python -m torch.distributed.launch --nproc_per_node=2 --use_env tools/train.py --cfg configs/CONFIG_FILE.yaml

Evaluation

Make sure to set `MODEL_PATH` in the configuration file to your trained model directory.

    $ python tools/val.py --cfg configs/CONFIG_FILE.yaml

To evaluate with multi-scale and flip, set the `ENABLE` field under `MSF` to true and run the same command as above.
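The training and evaluation commands above differ only in the script name and in whether the distributed launcher is used. As an illustration, a hypothetical helper (`build_command` is not part of this repo) could assemble them as argument lists suitable for `subprocess.run`:

```python
import sys


def build_command(script, cfg_path, num_gpus=1):
    """Return the argument list for running `script` with the given config.

    With more than one GPU, the script is started through
    torch.distributed.launch, mirroring the multi-GPU command above.
    """
    cmd = [sys.executable]
    if num_gpus > 1:
        cmd += [
            "-m", "torch.distributed.launch",
            f"--nproc_per_node={num_gpus}",
            "--use_env",
        ]
    return cmd + [script, "--cfg", cfg_path]


if __name__ == "__main__":
    cfg = "configs/CONFIG_FILE.yaml"
    print(" ".join(build_command("tools/train.py", cfg)))
    print(" ".join(build_command("tools/train.py", cfg, num_gpus=2)))
    print(" ".join(build_command("tools/val.py", cfg)))
```

The helper only builds the command line. For the multi-GPU case, the `DDP` field in the configuration file still has to be set to true.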

Inference

Make sure to set MODEL_PATH of the configuration file to model's weights.

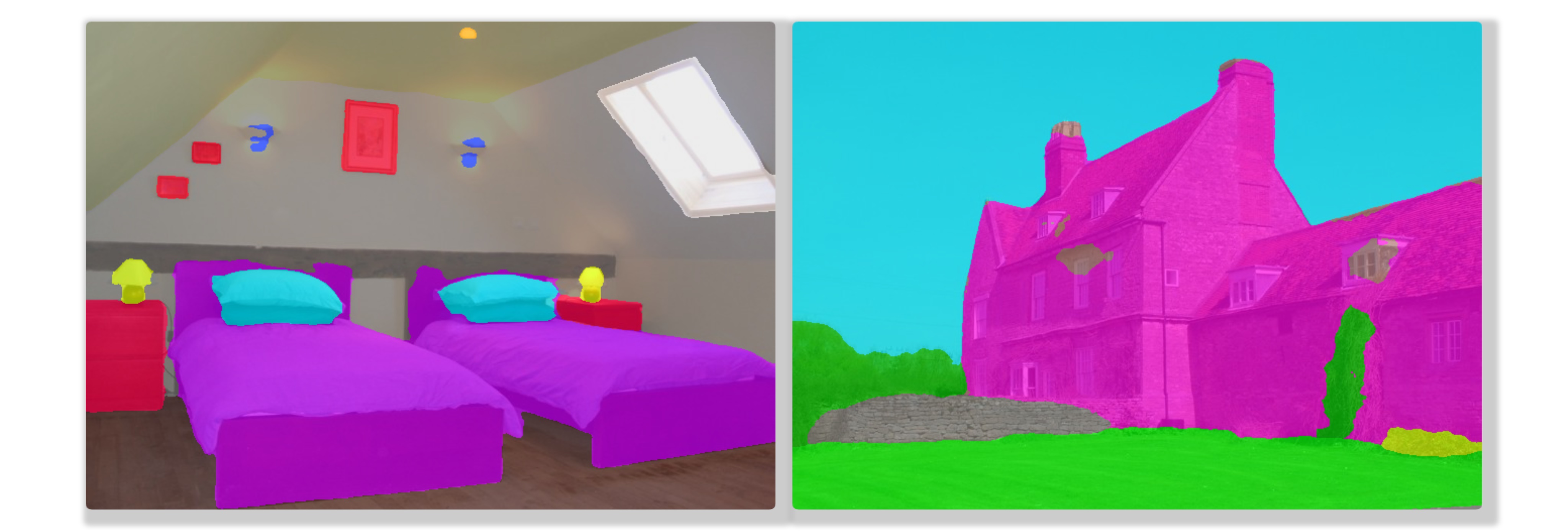

$ python tools/infer.py --cfg configs/CONFIG_FILE.yamlExample test results:

References

Citations

@article{xie2021segformer,

title={SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers},

author={Xie, Enze and Wang, Wenhai and Yu, Zhiding and Anandkumar, Anima and Alvarez, Jose M and Luo, Ping},

journal={arXiv preprint arXiv:2105.15203},

year={2021}

}

@misc{xiao2018unified,

title={Unified Perceptual Parsing for Scene Understanding},

author={Tete Xiao and Yingcheng Liu and Bolei Zhou and Yuning Jiang and Jian Sun},

year={2018},

eprint={1807.10221},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@article{hong2021deep,

title={Deep Dual-resolution Networks for Real-time and Accurate Semantic Segmentation of Road Scenes},

author={Hong, Yuanduo and Pan, Huihui and Sun, Weichao and Jia, Yisong},

journal={arXiv preprint arXiv:2101.06085},

year={2021}

}

@misc{zhang2021rest,

title={ResT: An Efficient Transformer for Visual Recognition},

author={Qinglong Zhang and Yubin Yang},

year={2021},

eprint={2105.13677},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{huang2021fapn,

title={FaPN: Feature-aligned Pyramid Network for Dense Image Prediction},

author={Shihua Huang and Zhichao Lu and Ran Cheng and Cheng He},

year={2021},

eprint={2108.07058},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{chen2021cyclemlp,

title={CycleMLP: A MLP-like Architecture for Dense Prediction},

author={Shoufa Chen and Enze Xie and Chongjian Ge and Ding Liang and Ping Luo},

year={2021},

eprint={2107.10224},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{wang2021pvtv2,

title={PVTv2: Improved Baselines with Pyramid Vision Transformer},

author={Wenhai Wang and Enze Xie and Xiang Li and Deng-Ping Fan and Kaitao Song and Ding Liang and Tong Lu and Ping Luo and Ling Shao},

year={2021},

eprint={2106.13797},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@article{Liu2021PSA,

title={Polarized Self-Attention: Towards High-quality Pixel-wise Regression},

author={Huajun Liu and Fuqiang Liu and Xinyi Fan and Dong Huang},

journal={arXiv preprint arXiv:2107.00782},

year={2021}

}

@misc{chao2019hardnet,

title={HarDNet: A Low Memory Traffic Network},

author={Ping Chao and Chao-Yang Kao and Yu-Shan Ruan and Chien-Hsiang Huang and Youn-Long Lin},

year={2019},

eprint={1909.00948},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@inproceedings{sfnet,

title={Semantic Flow for Fast and Accurate Scene Parsing},

author={Li, Xiangtai and You, Ansheng and Zhu, Zhen and Zhao, Houlong and Yang, Maoke and Yang, Kuiyuan and Tong, Yunhai},

booktitle={ECCV},

year={2020}

}

@article{Li2020SRNet,

title={Towards Efficient Scene Understanding via Squeeze Reasoning},

author={Xiangtai Li and Xia Li and Ansheng You and Li Zhang and Guang-Liang Cheng and Kuiyuan Yang and Y. Tong and Zhouchen Lin},

journal={ArXiv},

year={2020},

volume={abs/2011.03308}

}