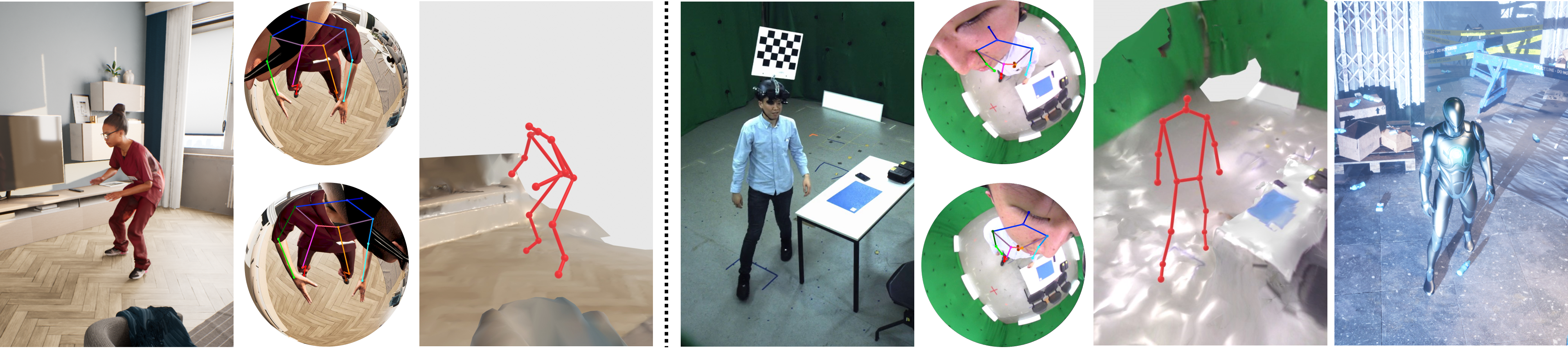

Official PyTorch inference code of our CVPR 2024 paper, "3D Human Pose Perception from Egocentric Stereo Videos".

For any questions, please contact the first author, Hiroyasu Akada [hakada@mpi-inf.mpg.de].

[Project Page] [Benchmark Challenge]

@inproceedings{hakada2024unrealego2,
  title = {3D Human Pose Perception from Egocentric Stereo Videos},
  author = {Akada, Hiroyasu and Wang, Jian and Golyanik, Vladislav and Theobalt, Christian},
  booktitle = {Computer Vision and Pattern Recognition (CVPR)},
  year = {2024}
}

You can download the UnrealEgo2/UnrealEgo-RW datasets on our benchmark challenge page.

You can download the depth data obtained from SfM/Metashape, as described in our paper.

- Depth from UnrealEgo2 test split

bash download_unrealego2_test_sfm.sh

- Depth from UnrealEgo-RW test split

bash download_unrealego_rw_test_sfm.sh

Note that these depth data are different from the synthetic depth maps available on our benchmark challenge page.

We tested our code with the following dependencies:

- Python 3.9

- Ubuntu 18.04

- PyTorch 2.0.0

- CUDA 11.7
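To compare a local environment against the versions listed above, a minimal sketch along the following lines may help. It is not part of the repository: the helper names `collect_versions` and `compare` are hypothetical, and a mismatch does not necessarily mean the code will fail, only that the setup differs from the tested one.

```python
import importlib
import sys

# Versions listed above as tested; other versions may well work too.
TESTED = {"python": "3.9", "torch": "2.0.0", "cuda": "11.7"}


def collect_versions():
    """Return the locally installed versions, with None where unavailable."""
    found = {
        "python": f"{sys.version_info.major}.{sys.version_info.minor}",
        "torch": None,
        "cuda": None,
    }
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        return found  # PyTorch is not installed
    # Drop local build suffixes such as "+cu117".
    found["torch"] = str(torch.__version__).split("+")[0]
    # torch.version.cuda is None for CPU-only builds.
    found["cuda"] = torch.version.cuda
    return found


def compare(found, tested=TESTED):
    """List human-readable mismatches between found and tested versions."""
    return [
        f"{name}: found {found.get(name)}, tested with {want}"
        for name, want in tested.items()
        if found.get(name) != want
    ]


if __name__ == "__main__":
    mismatches = compare(collect_versions())
    for line in mismatches or ["Environment matches the tested setup."]:
        print(line)
```

The Ubuntu version is left out of the check on purpose, since the operating system release rarely matters as much as the Python, PyTorch, and CUDA versions.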

Please install the remaining dependencies:

pip install -r requirements.txt

You can download our trained models. Please save them in ./log/(experiment_name).

bash scripts/test/unrealego2_pose-qa-avg-df_data-ue2_seq5_skip3_B32_lr2-4_pred-seq_local-device_pad.sh --data_dir [path to the `UnrealEgoData2_test_rgb` dir] --metadata_dir [path to the `UnrealEgoData2_test_sfm` dir]

Please modify the arguments above. The pose predictions will be saved in ./results/UnrealEgoData2_test_pose (raw and zip versions).
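If you prefer to launch the test scripts from Python, the following is a minimal sketch, not part of the repository. It only uses the two arguments shown above (`--data_dir` and `--metadata_dir`) and checks that both directories exist before starting; the function names `build_test_command` and `run_test` are hypothetical.

```python
import subprocess
from pathlib import Path


def build_test_command(script, data_dir, metadata_dir):
    """Assemble the argument list for a test script.

    Raises FileNotFoundError if either directory is missing, so that a
    typo in a path is caught before the model is loaded.
    """
    for label, path in (("data_dir", data_dir), ("metadata_dir", metadata_dir)):
        if not Path(path).is_dir():
            raise FileNotFoundError(f"{label} is not a directory: {path}")
    return [
        "bash", str(script),
        "--data_dir", str(data_dir),
        "--metadata_dir", str(metadata_dir),
    ]


def run_test(script, data_dir, metadata_dir):
    """Run the script and raise CalledProcessError on a non-zero exit."""
    subprocess.run(build_test_command(script, data_dir, metadata_dir), check=True)
```

Passing the command as a list rather than a single string avoids shell quoting problems when a dataset path contains spaces.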

- Model without pre-training on UnrealEgo2

bash scripts/test/unrealego2_pose-qa-avg-df_data-ue-rw_seq5_skip3_B32_lr2-4_pred-seq_local-device_pad.sh --data_dir [path to the `UnrealEgoData_rw_test_rgb` dir] --metadata_dir [path to the `UnrealEgoData_rw_test_sfm` dir]

- Model with pre-training on UnrealEgo2

bash scripts/test/unrealego2_pose-qa-avg-df_data-ue2_seq5_skip3_B32_lr2-4_pred-seq_local-device_pad_finetuning_epoch5-5.sh --data_dir [path to the `UnrealEgoData_rw_test_rgb` dir] --metadata_dir [path to the `UnrealEgoData_rw_test_sfm` dir]

Please modify the arguments above. The pose predictions will be saved in ./results/UnrealEgoData_rw_test_pose (raw and zip versions).

To obtain quantitative results for your method, please follow the instructions on our benchmark challenge page and submit the zip version of your predictions.
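Before uploading, it can be worth confirming that the zip archive is readable and not empty. The sketch below is an assumption-laden helper, not part of the repository: `summarize_zip` is a hypothetical name, the archive path must be supplied by you, and the check does not validate the file layout expected by the benchmark.

```python
import zipfile


def summarize_zip(zip_path):
    """Return (number of files, total uncompressed bytes) for an archive.

    Raises zipfile.BadZipFile if the archive cannot be opened or if any
    member fails its CRC check.
    """
    with zipfile.ZipFile(zip_path) as archive:
        bad_member = archive.testzip()  # None when every member is intact
        if bad_member is not None:
            raise zipfile.BadZipFile(f"corrupt member: {bad_member}")
        files = [info for info in archive.infolist() if not info.is_dir()]
        return len(files), sum(info.file_size for info in files)
```

A result of `(0, 0)` would indicate an empty archive, which usually means the test script did not write any predictions.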