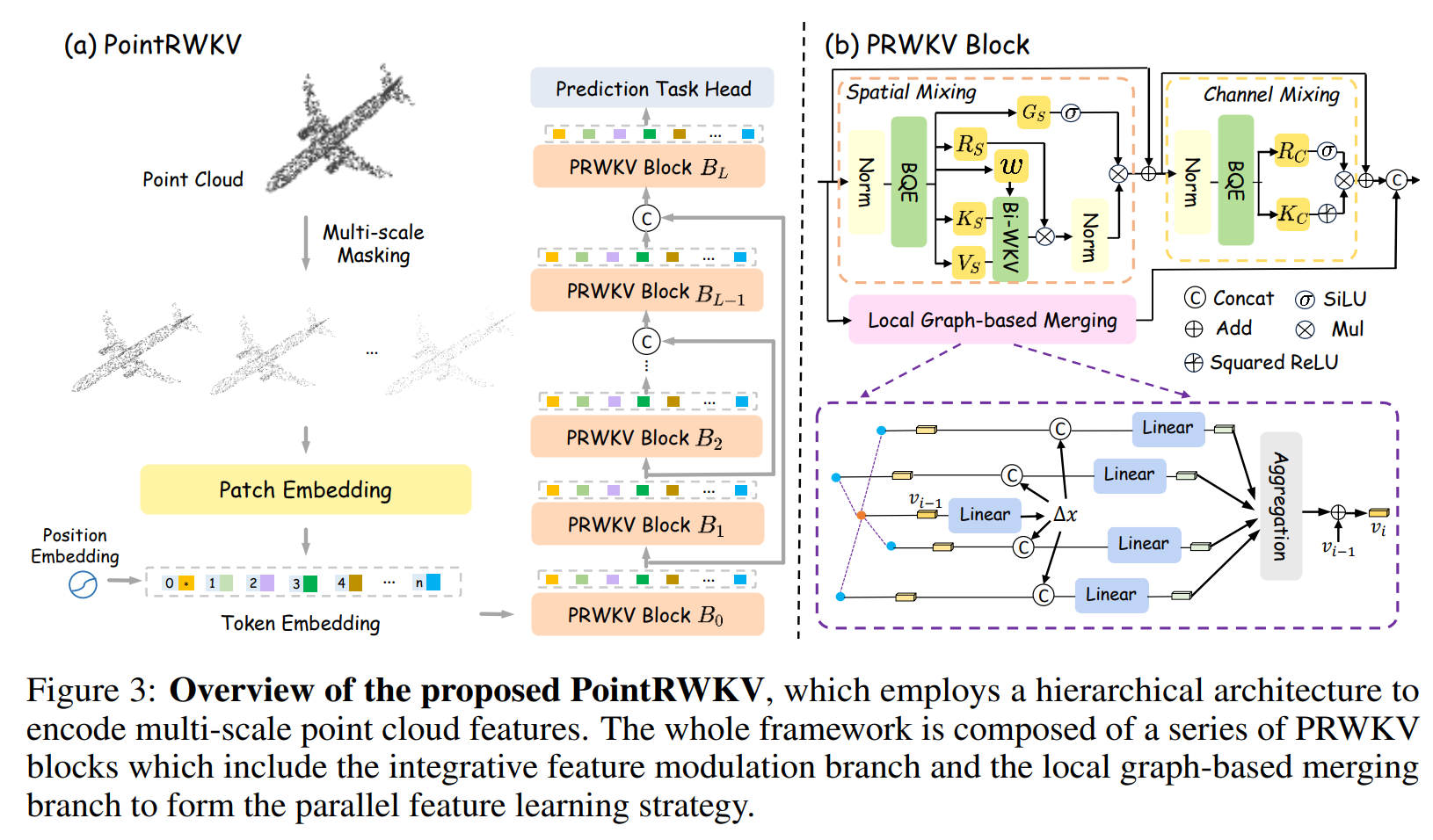

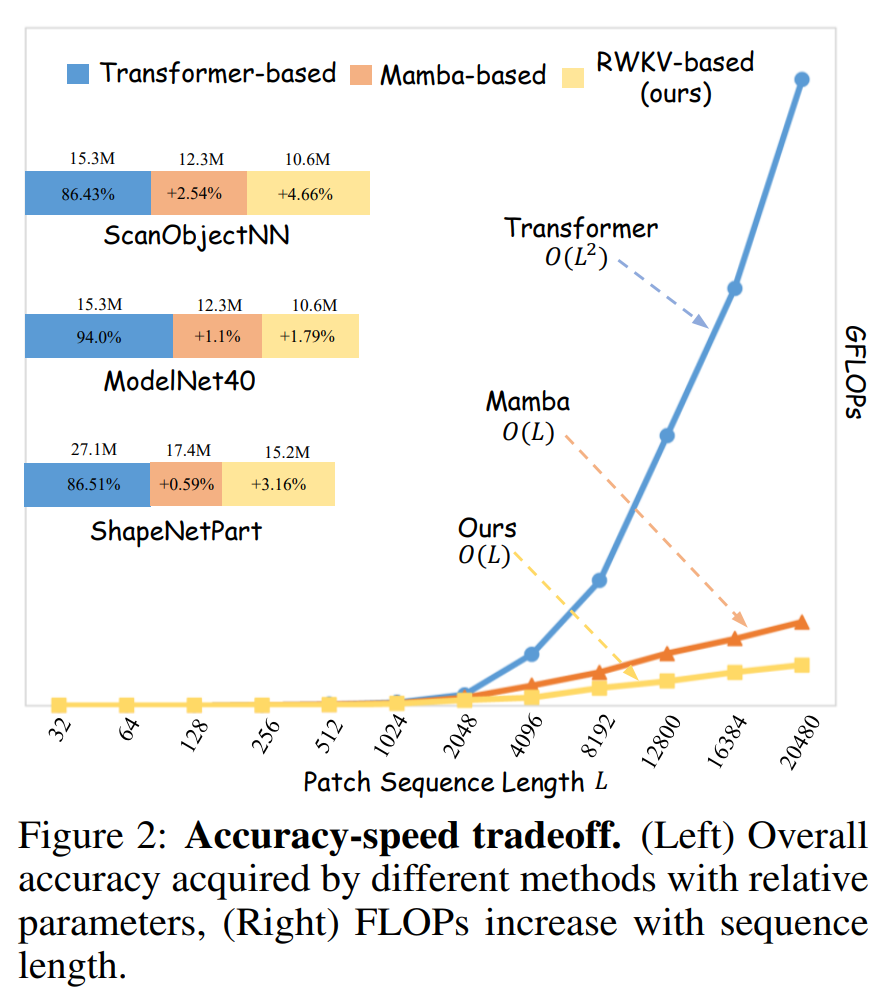

Transformers have revolutionized point cloud learning, but their quadratic complexity hinders extension to long sequences and strains limited computational resources. RWKV, a recent family of deep sequence models, has shown great potential for sequence modeling in NLP tasks. In this work, we present PointRWKV, a linear-complexity model derived from RWKV with the adaptations needed for 3D point cloud learning. Specifically, taking the embedded point patches as input, we first explore the global processing capabilities within PointRWKV blocks using modified multi-headed matrix-valued states and a dynamic attention recurrence mechanism. To extract local geometric features at the same time, we design a parallel branch that encodes the point cloud efficiently in a fixed-radius near-neighbors graph with a graph stabilizer. Furthermore, we design PointRWKV as a multi-scale framework for hierarchical feature learning of 3D point clouds, which facilitates various downstream tasks. Extensive experiments on different point cloud learning tasks show that PointRWKV outperforms its transformer- and Mamba-based counterparts while using about 42% fewer FLOPs, demonstrating its potential as a basis for foundational 3D models.
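To make the "fixed-radius near-neighbors graph" from the abstract concrete, here is a minimal brute-force sketch in plain Python. It is an illustration only, not the repository's implementation: the function name `radius_graph` and its parameters are hypothetical, and this naive version is quadratic in the number of points.

```python
import math

def radius_graph(points, radius):
    """Return directed edges (i, j), i != j, for every pair within `radius`.

    Brute-force O(N^2) search, for illustration only.
    """
    edges = []
    for i, p in enumerate(points):
        for j, q in enumerate(points):
            if i != j and math.dist(p, q) <= radius:
                edges.append((i, j))
    return edges

# Two nearby points and one far away: only the first two are connected.
pts = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.0, 1.0, 1.0)]
edges = radius_graph(pts, radius=0.2)
```

Unlike a k-nearest-neighbors graph, the number of neighbors per point varies here; isolated points simply get no edges.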

pip install -r requirements.txt

# Chamfer Distance & emd

cd ./extensions/chamfer_dist

python setup.py install --user

cd ../emd

python setup.py install --user

# PointNet++

pip install "git+https://github.com/erikwijmans/Pointnet2_PyTorch.git#egg=pointnet2_ops&subdirectory=pointnet2_ops_lib"

# GPU kNN

pip install --upgrade https://github.com/unlimblue/KNN_CUDA/releases/download/0.2/KNN_CUDA-0.2-py3-none-any.whl
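After running the commands above, it can help to confirm that the extensions are visible to Python. The sketch below only checks whether modules can be found on the import path. The module names are assumptions inferred from the install commands (`chamfer` and `emd` in particular are guesses), so adjust them to whatever the builds actually register.

```python
import importlib.util

# Assumed module names; not confirmed by this README.
OPTIONAL_MODULES = ["chamfer", "emd", "pointnet2_ops", "knn_cuda"]

def missing_modules(names):
    """Return the subset of `names` that cannot be found on the import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]

if __name__ == "__main__":
    print("missing:", missing_modules(OPTIONAL_MODULES))
```

An empty list means every module was found; it does not prove that the CUDA kernels were compiled for your GPU.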

| Task | Dataset | Acc. (Scratch) | Download (Scratch) | Acc. (Pretrain) | Download (Finetune) |
|---|---|---|---|---|---|
| Pre-training | ShapeNet | - | model | | |
| Classification | ModelNet40 | 94.66% | model | 96.16% | model |
| Classification | ScanObjectNN | 92.88% | model | 93.05% | model |
| Part Segmentation | ShapeNetPart | - | - | 90.26% mIoU | model |

The overall directory structure should be:

│PointRWKV/

├──cfgs/

├──data/

│ ├──ModelNet/

│ ├──ModelNetFewshot/

│ ├──ScanObjectNN/

│ ├──ShapeNet55-34/

│ ├──shapenetcore_partanno_segmentation_benchmark_v0_normal/

├──datasets/

├──.......
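A quick way to check the top-level layout above before training is sketched below. The helper name `missing_data_dirs` is hypothetical; the folder names are copied from the tree.

```python
from pathlib import Path

# Sub-directories of data/ as listed in the tree above.
EXPECTED_DATA_DIRS = [
    "ModelNet",
    "ModelNetFewshot",
    "ScanObjectNN",
    "ShapeNet55-34",
    "shapenetcore_partanno_segmentation_benchmark_v0_normal",
]

def missing_data_dirs(repo_root):
    """List expected dataset folders that are absent under <repo_root>/data."""
    data = Path(repo_root) / "data"
    return [d for d in EXPECTED_DATA_DIRS if not (data / d).is_dir()]
```

For example, `missing_data_dirs("PointRWKV")` returns an empty list once all five dataset folders are in place.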

│ModelNet/

├──modelnet40_normal_resampled/

│ ├── modelnet40_shape_names.txt

│ ├── modelnet40_train.txt

│ ├── modelnet40_test.txt

│ ├── modelnet40_train_8192pts_fps.dat

│ ├── modelnet40_test_8192pts_fps.dat

Download: Get the processed data from the Point-BERT repo, or download the raw data from the official website and process it yourself.
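If you process the data yourself, the split files need to be mapped to class labels. The sketch below assumes that each line of `modelnet40_train.txt` is a shape id such as `airplane_0001` (class name, underscore, index) and that `modelnet40_shape_names.txt` lists one class name per line. Both are assumptions about the file contents, and `parse_split` is a hypothetical helper.

```python
def parse_split(lines, class_names):
    """Map each shape id to (shape_id, class_index).

    Assumes ids look like '<class_name>_<index>'; class names may contain '_'.
    """
    index = {name: i for i, name in enumerate(class_names)}
    samples = []
    for line in lines:
        shape_id = line.strip()
        if not shape_id:
            continue  # skip blank lines
        class_name = shape_id.rsplit("_", 1)[0]
        samples.append((shape_id, index[class_name]))
    return samples

names = ["airplane", "night_stand"]
samples = parse_split(["airplane_0001\n", "night_stand_0042\n", "\n"], names)
```

Splitting on the last underscore keeps multi-word class names such as `night_stand` intact.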

│ModelNetFewshot/

├──5way10shot/

│ ├── 0.pkl

│ ├── ...

│ ├── 9.pkl

├──5way20shot/

│ ├── ...

├──10way10shot/

│ ├── ...

├──10way20shot/

│ ├── ...

Download: Please download the data from the Point-BERT repo. We use the same data splits.
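The few-shot layout can be enumerated programmatically, for instance to loop over all folds during evaluation. This sketch assumes every `<way>way<shot>shot` folder holds the same ten files `0.pkl` to `9.pkl` as shown for `5way10shot`; `fewshot_files` is a hypothetical helper.

```python
from pathlib import Path

def fewshot_files(root, ways=(5, 10), shots=(10, 20), folds=10):
    """List one .pkl path per (way, shot, fold) combination."""
    root = Path(root)
    return [
        root / f"{way}way{shot}shot" / f"{fold}.pkl"
        for way in ways
        for shot in shots
        for fold in range(folds)
    ]

files = fewshot_files("data/ModelNetFewshot")
```

With the defaults this yields 40 paths, ordered as in the tree above.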

│ScanObjectNN/

├──main_split/

│ ├── training_objectdataset_augmentedrot_scale75.h5

│ ├── test_objectdataset_augmentedrot_scale75.h5

│ ├── training_objectdataset.h5

│ ├── test_objectdataset.h5

├──main_split_nobg/

│ ├── training_objectdataset.h5

│ ├── test_objectdataset.h5

Download: Please download the data from the official website.
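The files above correspond to different ScanObjectNN variants. The mapping below is a sketch: the variant labels `OBJ_BG`, `OBJ_ONLY`, and `PB_T50_RS` are commonly used names and are an assumption here, not something this README states, and `scanobjectnn_path` is a hypothetical helper.

```python
from pathlib import Path

# Assumed variant labels mapped to (folder, file suffix) from the tree above.
SCANOBJECTNN_FILES = {
    "OBJ_BG": ("main_split", "objectdataset.h5"),
    "OBJ_ONLY": ("main_split_nobg", "objectdataset.h5"),
    "PB_T50_RS": ("main_split", "objectdataset_augmentedrot_scale75.h5"),
}

def scanobjectnn_path(root, variant, split):
    """Build the .h5 path for a variant and a split ('training' or 'test')."""
    if split not in ("training", "test"):
        raise ValueError(f"unknown split: {split}")
    folder, suffix = SCANOBJECTNN_FILES[variant]
    return Path(root) / folder / f"{split}_{suffix}"
```

For example, `scanobjectnn_path("data/ScanObjectNN", "OBJ_ONLY", "training")` points at `main_split_nobg/training_objectdataset.h5`.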

│ShapeNet55-34/

├──shapenet_pc/

│ ├── 02691156-1a04e3eab45ca15dd86060f189eb133.npy

│ ├── 02691156-1a6ad7a24bb89733f412783097373bdc.npy

│ ├── .......

├──ShapeNet-55/

│ ├── train.txt

│ └── test.txt

Download: Please download the data from the Point-BERT repo.
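The file names in `shapenet_pc/` appear to follow a `<taxonomy id>-<model id>.npy` pattern. A small sketch for splitting them, with `parse_shapenet_name` as a hypothetical helper:

```python
def parse_shapenet_name(filename):
    """Split '<taxonomy_id>-<model_id>.npy' into (taxonomy_id, model_id)."""
    stem = filename.rsplit(".", 1)[0]          # drop the extension
    taxonomy_id, model_id = stem.split("-", 1)  # split at the first hyphen only
    return taxonomy_id, model_id

taxonomy_id, model_id = parse_shapenet_name(
    "02691156-1a04e3eab45ca15dd86060f189eb133.npy"
)
```

Here `taxonomy_id` is the ShapeNet category identifier (`02691156` in the example from the tree).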

│shapenetcore_partanno_segmentation_benchmark_v0_normal/

├──02691156/

│ ├── 1a04e3eab45ca15dd86060f189eb133.txt

│ ├── .......

├── .......

├──train_test_split/

├──synsetoffset2category.txt

Download: Please download the data from here.
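To inspect this dataset by hand, the sketch below parses the two text formats. It assumes that `synsetoffset2category.txt` holds one `<category name> <synset id>` pair per line and that each row of a shape's `.txt` file is `x y z nx ny nz part_label`. Both formats are assumptions, and the helper names are hypothetical.

```python
def parse_categories(lines):
    """Map synset id -> category name from 'Name<whitespace>id' lines."""
    mapping = {}
    for line in lines:
        parts = line.split()
        if len(parts) == 2:
            name, synset = parts
            mapping[synset] = name
    return mapping

def parse_point(line):
    """Parse one row into (xyz, normal, part_label); assumes 7 numeric columns."""
    values = [float(v) for v in line.split()]
    return values[:3], values[3:6], int(values[6])

cats = parse_categories(["Airplane\t02691156\n"])
xyz, normal, label = parse_point("0.1 0.2 0.3 0.0 1.0 0.0 2.000000")
```

The part label is stored as a float in this assumed layout, so it is cast to `int` before use.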

This project is based on Point-BERT (paper, code), Point-MAE (paper, code), and Point-M2AE (paper, code). We thank the authors for their wonderful work.

If you find this repository useful in your research, please consider giving it a star ⭐ and citing our paper:

@article{he2024pointrwkv,

title={PointRWKV: Efficient RWKV-Like Model for Hierarchical Point Cloud Learning},

author={He, Qingdong and Zhang, Jiangning and Peng, Jinlong and He, Haoyang and Li, Xiangtai and Wang, Yabiao and Wang, Chengjie},

journal={arXiv preprint arXiv:2405.15214},

year={2024}

}