This repository collects links to papers and code repositories relevant to implementing LLMs with reduced privacy risks. They correspond to the papers discussed in our survey, available at: https://arxiv.org/abs/2312.06717

This repository will be periodically updated with relevant papers scraped from arXiv. The survey paper itself will be updated slightly less frequently. Papers that have been added to this repository but not yet to the survey paper are marked with asterisks.

If you have a paper relevant to LLM privacy, please nominate it for inclusion.

Repo last updated 5/30/2024

Paper last updated 5/30/2024

@misc{neel2023privacy,
      title={Privacy Issues in Large Language Models: A Survey},
      author={Seth Neel and Peter Chang},
      year={2023},
      eprint={2312.06717},
      archivePrefix={arXiv},
      primaryClass={cs.AI}
}

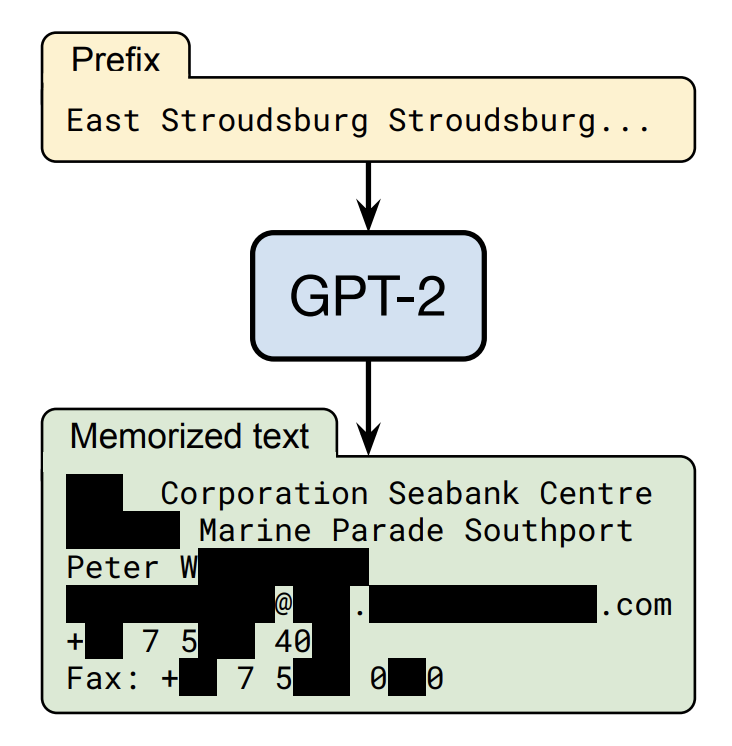

Image from Carlini 2020

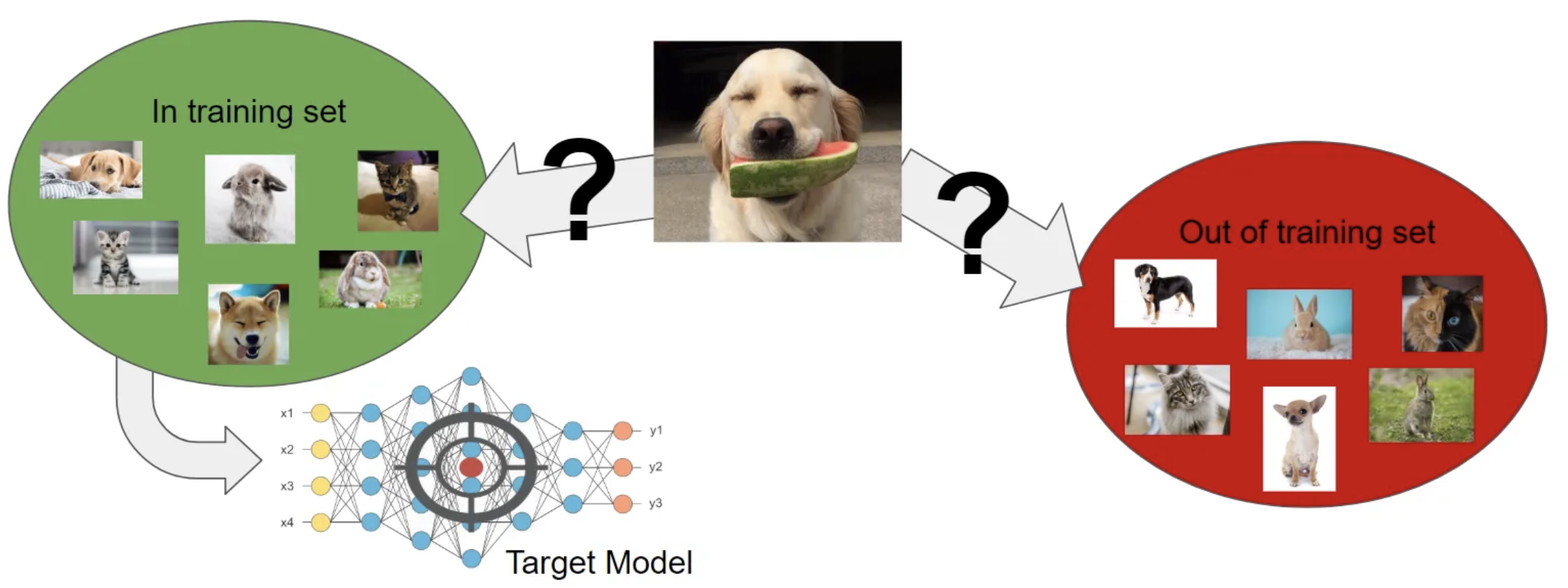

Image from Tindall

Furthermore, see [Google Training Data Extraction Challenge]

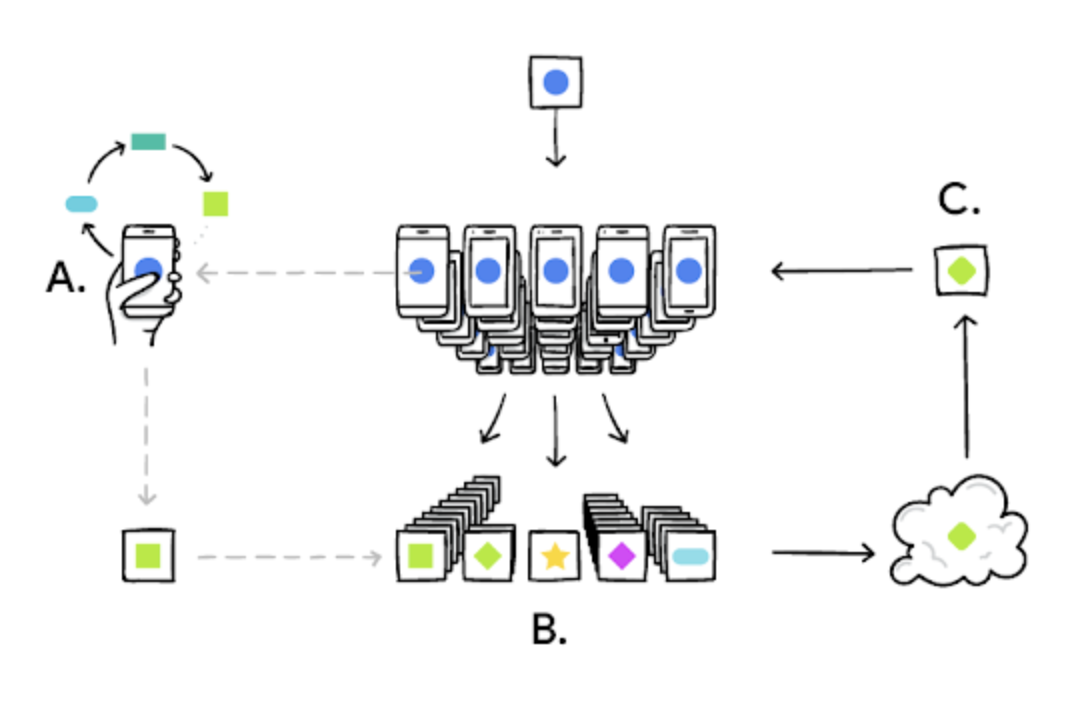

Image from Google AI Blog

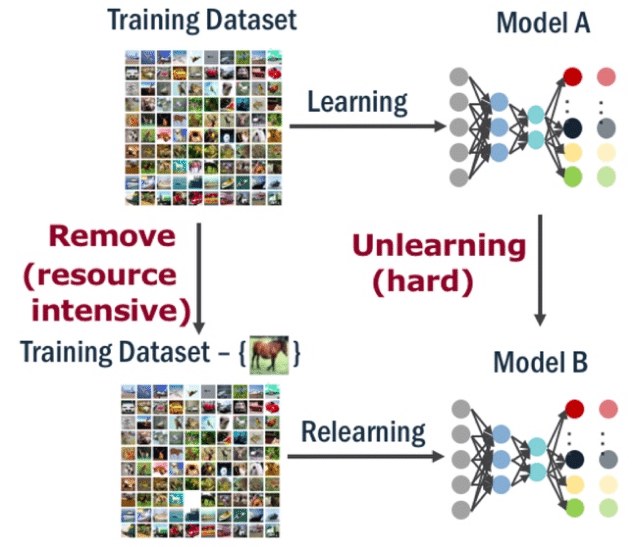

Image from Felps 2020

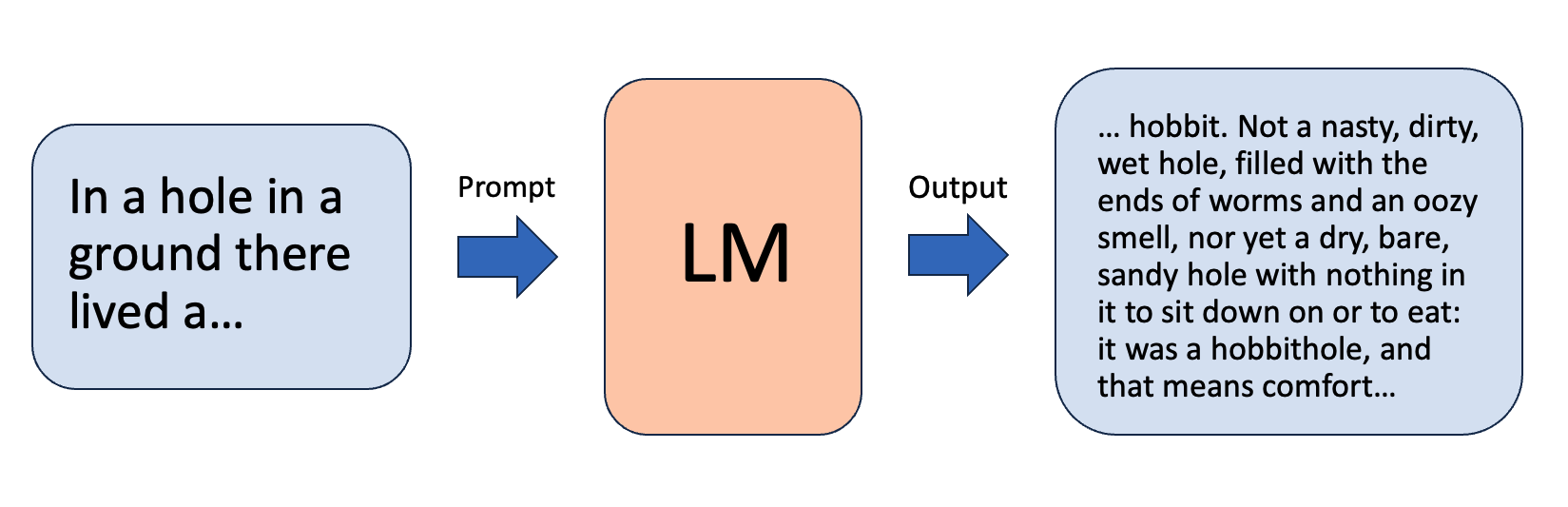

Custom Image

| Paper Title | Year | Author | Code |
|---|---|---|---|
| Can Copyright be Reduced to Privacy? | 2023 | Elkin-Koren et al. | |
| DeepCreativity: Measuring Creativity with Deep Learning Techniques | 2022 | Franceschelli et al. | |
| Foundation Models and Fair Use | 2023 | Henderson et al. | |
| Preventing Verbatim Memorization in Language Models Gives a False Sense of Privacy | 2023 | Ippolito et al. | |
| Copyright Violations and Large Language Models | 2023 | Karamolegkou et al. | [Code] |
| Formalizing Human Ingenuity: A Quantitative Framework for Copyright Law's Substantial Similarity | 2022 | Scheffler et al. | |
| On Provable Copyright Protection for Generative Models | 2023 | Vyas et al. | |

| Paper Title | Year | Author | Code |
|---|---|---|---|
| Membership Inference Attacks on Machine Learning: A Survey | 2022 | Hu et al. | |
| When Machine Learning Meets Privacy: A Survey and Outlook | 2021 | Liu et al. | |
| Rethinking Machine Unlearning for Large Language Models | 2024 | Liu et al. | |
| A Survey of Machine Unlearning | 2022 | Nguyen et al. | |
| ***A Survey of Large Language Models | 2023 | Zhao et al. | |

Repository maintained by Peter Chang (pchang@hbs.edu)