This is a PyTorch implementation of Hyperband: A Novel Bandit-Based Approach to Hyperparameter Optimization by Lisha Li, Kevin Jamieson, Giulia DeSalvo, Afshin Rostamizadeh and Ameet Talwalkar.

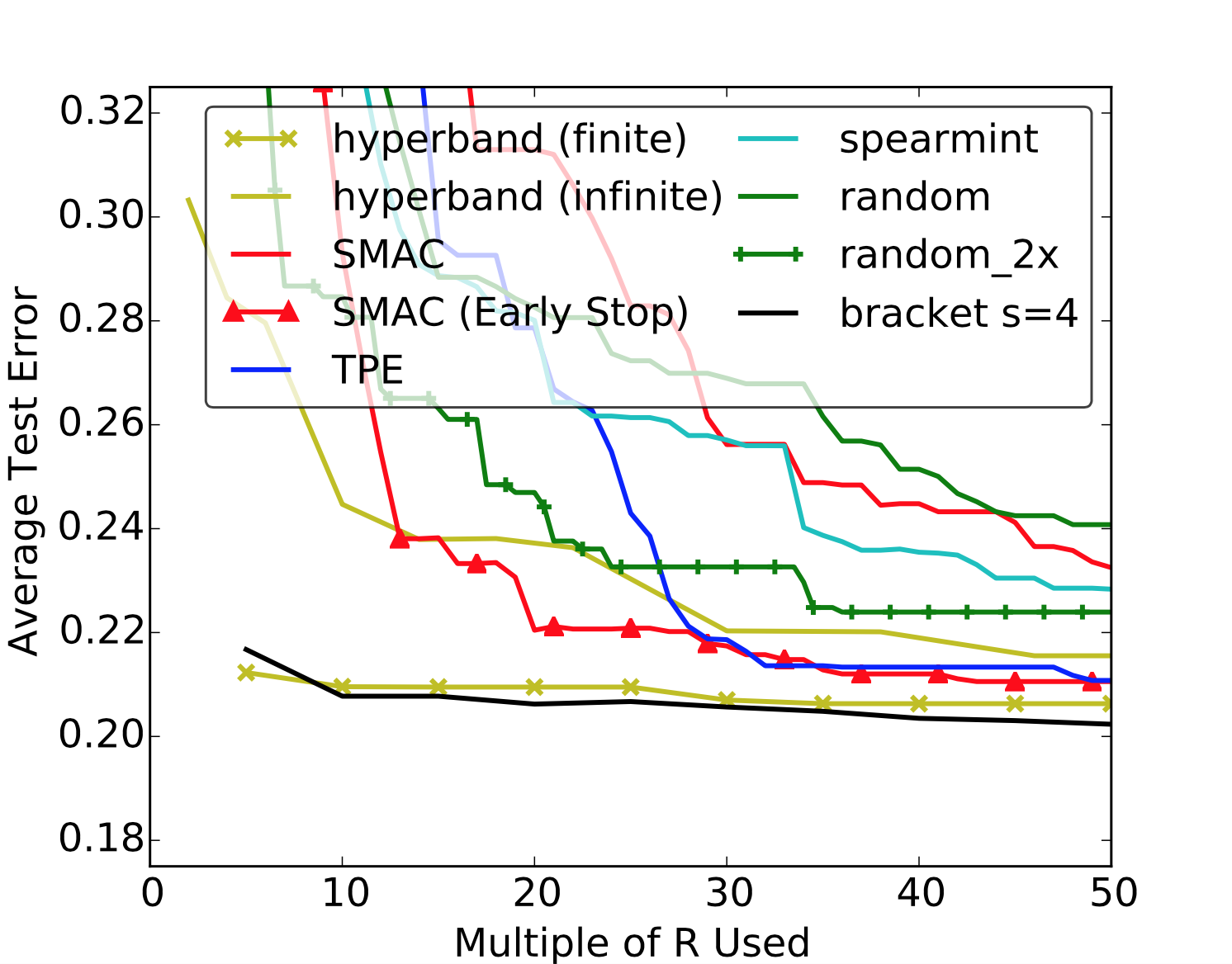

HyperBand is a hyperparameter optimization algorithm that exploits the iterative nature of SGD and the embarrassing parallelism of random search. Unlike Bayesian optimization methods, which focus on optimizing hyperparameter configuration selection, HyperBand poses the problem as a hyperparameter evaluation problem, adaptively allocating more resources to promising configurations while quickly eliminating poor ones. This allows it to evaluate orders of magnitude more hyperparameter configurations than approaches that train every configuration to completion.
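The idea of giving promising configurations more resources while dropping poor ones early can be illustrated with a toy successive-halving loop. This is only a sketch: `successive_halving` and `toy_loss` are hypothetical names, and the objective is made up for illustration rather than taken from this repository.

```python
import random


def successive_halving(configs, evaluate, min_resource=1, eta=3):
    """Keep the best 1/eta of configs each round; survivors get eta times more resource."""
    resource = min_resource
    while len(configs) > 1:
        # Score every surviving config with the current budget (lower is better).
        scored = sorted(configs, key=lambda c: evaluate(c, resource))
        keep = max(1, len(scored) // eta)
        configs = scored[:keep]
        resource *= eta
    return configs[0]


def toy_loss(lr, resource):
    # Made-up objective: the loss shrinks with more resource and is
    # lowest for learning rates close to 0.1.
    return abs(lr - 0.1) + 1.0 / resource


rng = random.Random(0)
candidates = [rng.uniform(0.0, 1.0) for _ in range(27)]
best = successive_halving(candidates, toy_loss)
print(best)
```

With 27 candidates and `eta=3`, the loop keeps 9, then 3, then 1 configuration, tripling the budget each round.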

- Python 3.5+

- PyTorch

- tqdm

Note: We currently only support FC networks. ConvNet support coming soon!

- Install requirements using:

      pip install -r requirements.txt

- Define your model in `model.py`. This should return an `nn.Sequential` object. Take note of the last layer: using `nn.LogSoftmax()` vs. `nn.Softmax()` may require changes in the training method. For example, let's define a 4-layer FC network as follows:

      Sequential(
        (0): Linear(in_features=784, out_features=512)
        (1): ReLU()
        (2): Linear(in_features=512, out_features=256)
        (3): ReLU()
        (4): Linear(in_features=256, out_features=128)
        (5): ReLU()
        (6): Linear(in_features=128, out_features=10)
        (7): LogSoftmax()
      )
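A model with that printed structure could be built roughly as follows. This is a minimal sketch: the function name `get_base_model` is an assumption for illustration, and the actual contents of `model.py` may differ.

```python
import torch
import torch.nn as nn


def get_base_model():
    # Hypothetical function name; the only stated requirement is that
    # model.py returns an nn.Sequential object.
    return nn.Sequential(
        nn.Linear(784, 512),
        nn.ReLU(),
        nn.Linear(512, 256),
        nn.ReLU(),
        nn.Linear(256, 128),
        nn.ReLU(),
        nn.Linear(128, 10),
        nn.LogSoftmax(dim=1),  # log-probabilities over the 10 classes
    )


model = get_base_model()
log_probs = model(torch.randn(2, 784))  # batch of 2 flattened 28x28 inputs
print(log_probs.shape)
```

Because the last layer is `LogSoftmax`, the output holds log-probabilities, which is what `F.nll_loss` expects.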

- Write your own `data_loader.py` if your dataset is not supported by `torchvision.datasets`. Otherwise, slightly edit `data_loader.py` to suit your dataset of choice: `CIFAR-10`, `CIFAR-100`, `Fashion-MNIST`, `MNIST`, etc.

- Create your hyperparameter dictionary in `main.py`. You must use the following syntax:

      params = {
          '2_hidden': ['quniform', 512, 1000, 1],
          '4_hidden': ['quniform', 128, 512, 1],
          'all_act': ['choice', [[0], ['choice', ['selu', 'elu', 'tanh']]]],
          'all_dropout': ['choice', [[0], ['uniform', 0.1, 0.5]]],
          'all_batchnorm': ['choice', [0, 1]],
          'all_l2': ['uniform', 1e-8, 1e-5],
          'optim': ['choice', ["adam", "sgd"]],
      }

Keys are of the form `{layer_num}_{hyperparameter}`, where `layer_num` can be a layer from your `nn.Sequential` model or `all` to signify all layers. Values are of the form `[distribution, x]`, where `distribution` can be one of `uniform`, `quniform`, `choice`, etc.

For example, `2_hidden: ['quniform', 512, 1000, 1]` means to sample the hidden size of layer 2 of the model (`Linear(in_features=512, out_features=256)`) from a quantized uniform distribution with lower bound 512, upper bound 1000 and `q = 1`.

`all_dropout: ['choice', [[0], ['uniform', 0.1, 0.5]]]` means to choose whether or not to apply dropout to all layers. `choice` means pick from the elements of a list. `[0]` means False, while the other choice, implicitly meaning True, samples the dropout probability from a uniform distribution with lower bound 0.1 and upper bound 0.5.
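To make the syntax concrete, here is one way such a dictionary could be turned into a sampled configuration. It is a sketch under assumptions: `sample` and `is_spec` are hypothetical helpers, `quniform` is taken to mean `round(uniform(low, high) / q) * q`, and a one-element list such as `[0]` is read as "disabled". The repository's own sampler may work differently.

```python
import random

DISTRIBUTIONS = {"uniform", "quniform", "choice"}


def is_spec(value):
    """A spec is a list whose first element names a distribution."""
    return (isinstance(value, list) and len(value) > 0
            and isinstance(value[0], str) and value[0] in DISTRIBUTIONS)


def sample(spec, rng):
    kind, *args = spec
    if kind == "uniform":
        low, high = args
        return rng.uniform(low, high)
    if kind == "quniform":
        low, high, q = args
        return round(rng.uniform(low, high) / q) * q  # snap to a multiple of q
    if kind == "choice":
        picked = rng.choice(args[0])
        if is_spec(picked):
            return sample(picked, rng)  # nested distribution, e.g. dropout rate
        if isinstance(picked, list):
            return picked[0]            # e.g. [0] means "disabled"
        return picked
    raise ValueError(f"unknown distribution: {kind}")


params = {
    '2_hidden': ['quniform', 512, 1000, 1],
    '4_hidden': ['quniform', 128, 512, 1],
    'all_act': ['choice', [[0], ['choice', ['selu', 'elu', 'tanh']]]],
    'all_dropout': ['choice', [[0], ['uniform', 0.1, 0.5]]],
    'all_batchnorm': ['choice', [0, 1]],
    'all_l2': ['uniform', 1e-8, 1e-5],
    'optim': ['choice', ["adam", "sgd"]],
}

rng = random.Random(0)
config = {name: sample(spec, rng) for name, spec in params.items()}
print(config)
```

Each call to `sample` yields one candidate value, so building `config` once corresponds to drawing a single hyperparameter configuration.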

- Edit the `config.py` file to suit your needs. Concretely, you can edit the hyperparameters of HyperBand, the default learning rate, the dataset of choice, etc. There are 3 parameters that control the HyperBand algorithm:
  - `max_iter`: maximum number of iterations allocated to a given hyperparameter config.
  - `eta`: controls the proportion of configs discarded in each round of successive halving (only about the top `1/eta` survive).
  - `epoch_scale`: a boolean indicating whether `max_iter` should be computed in terms of mini-batch iterations or epochs. This is useful if you want to speed up HyperBand and don't want to evaluate a full pass on a large dataset.

Set `max_iter` to the number of epochs you would usually train a neural network for. It's mostly a rule of thumb, but something in the range [80, 150] epochs is reasonable. Larger values of `eta` correspond to a more aggressive elimination schedule and thus fewer rounds of elimination. Increase it to get results faster, at the cost of possibly sub-optimal performance. The authors advise a value of 3 or 4.
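To see how `max_iter` and `eta` shape a run, the sketch below enumerates the brackets using the schedule described in the Hyperband paper. The function name `hyperband_schedule` is hypothetical, and the code in `hyperband.py` may round or order things differently.

```python
import math


def hyperband_schedule(max_iter, eta):
    """Return one list of (n_configs, iterations_per_config) rounds per bracket."""
    # Largest s with eta**s <= max_iter, computed without floating-point logs.
    s_max = 0
    while eta ** (s_max + 1) <= max_iter:
        s_max += 1

    brackets = []
    for s in range(s_max, -1, -1):
        n = math.ceil((s_max + 1) * eta ** s / (s + 1))  # initial number of configs
        r = max_iter / eta ** s                          # initial budget per config
        rounds = [(math.floor(n / eta ** i), r * eta ** i) for i in range(s + 1)]
        brackets.append(rounds)
    return brackets


for rounds in hyperband_schedule(max_iter=81, eta=3):
    print(rounds)
```

With `max_iter=81` and `eta=3` this produces 5 brackets, from the most aggressive (81 configs starting at 1 iteration each) to plain random search (5 configs at 81 iterations each). Raising `eta` to 9 reduces this to 3 brackets.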

- As a last step, depending on the last layer in your model, you may wish to edit the `train_one_epoch()` method in the `hyperband.py` file. The default uses `F.nll_loss` because it assumes the user used `LogSoftmax`, but feel free to edit the loss to suit your needs.
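As an illustration of why the last layer matters, the sketch below compares the two common pairings in PyTorch. It is standalone and does not reproduce the repository's `train_one_epoch()`; the tensors are random placeholders.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)           # raw scores from a final Linear layer
targets = torch.tensor([1, 0, 7, 3])  # class indices

# Model ends in LogSoftmax: pair its output with the negative log-likelihood loss.
loss_from_log_probs = F.nll_loss(F.log_softmax(logits, dim=1), targets)

# Model ends in a plain Linear layer: cross_entropy applies log-softmax internally.
loss_from_logits = F.cross_entropy(logits, targets)

print(loss_from_log_probs.item(), loss_from_logits.item())
```

The two losses agree, so dropping the `LogSoftmax` layer and switching to `F.cross_entropy` is an equivalent setup. A model ending in `nn.Softmax` outputs probabilities rather than log-probabilities, so its output would need a `torch.log` before `F.nll_loss`.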

Finally, you can run the algorithm using:

    python main.py

- Activation
  - all
  - per layer
- L1/L2 regularization (weights & biases)
  - all
  - per layer
- Add Batch Norm
  - sandwiched between every layer
- Add Dropout
  - sandwiched between every layer
- Add Layers
  - conv Layers
  - fc Layers
- Change Layer Params
  - change fc output size
  - change conv params
- Optimization
  - batch size
  - learning rate
  - optimizer (adam, sgd)

- setup checkpointing

- train loop

- < 1 epoch support

- filter out early stopping configs

- logging support

- max exploration option (`s = s_max`)

- input error checking

- plotting loss support

- arbitrary loss support

- multi-gpu support

- conv nn support