- OMERO on AWS

- Pre-requisite

- Deploy OMERO Stack

- OMERO Data Ingestion

- OMERO.insight on AppStream

- OMERO CLI on EC2

- Clean Up the deployed stack

- Reference

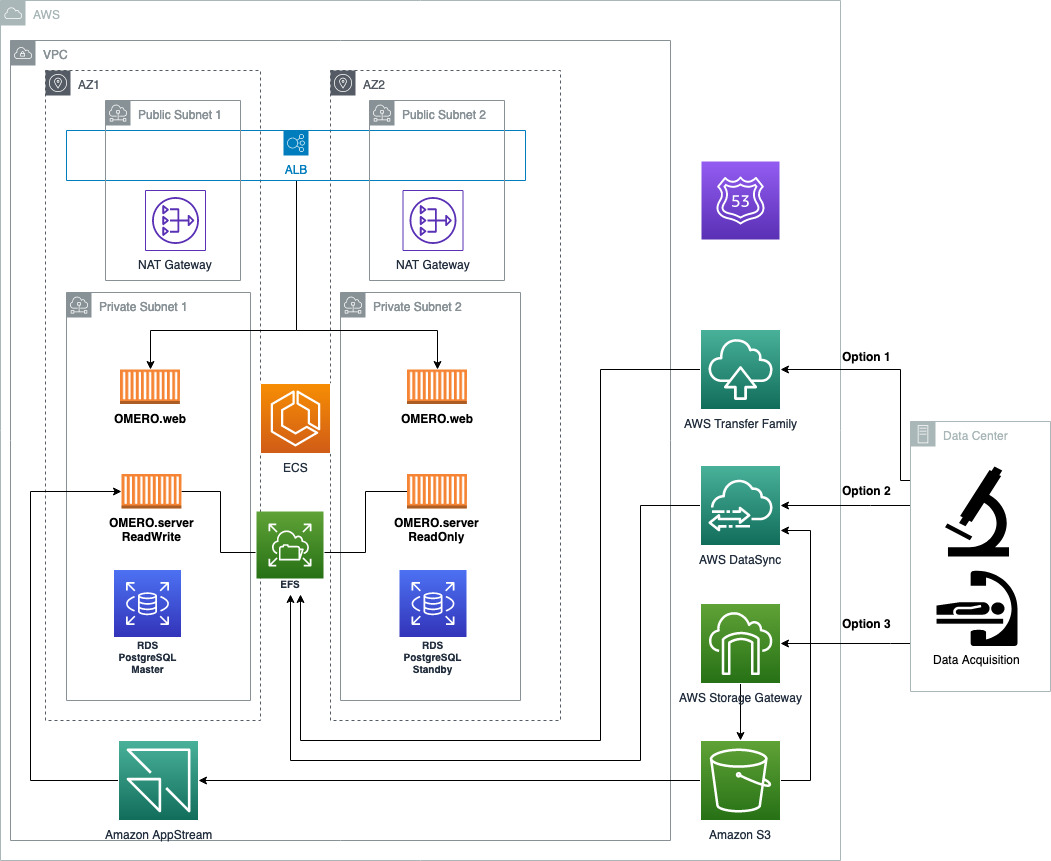

OMERO is an open-source microscopy image management tool. An OMERO deployment is a typical three-tier web application. OMERO web and OMERO server are containerized and can run on Amazon ECS. The data is stored in an Amazon EFS file system mounted to the OMERO server and in an Amazon RDS PostgreSQL database. Amazon EFS Intelligent-Tiering can reduce the storage cost for workloads with changing access patterns.

First, create the network infrastructure. One way to do this is to deploy this CloudFormation template, which creates one Amazon VPC, two public subnets, and two private subnets. If you want to add VPC flow logs, deploy the network infrastructure CloudFormation template in this repository and select true for the AddVPCFlowLog parameter.

If you have registered your domain with, or transferred it to, Amazon Route 53, you can use the associated hosted zone to automate DNS validation for the SSL certificate issued by AWS Certificate Manager (ACM). Note that, to automate the DNS validation, the Route 53 domain and its public hosted zone must be in the same AWS account as the ACM that issues the SSL certificate. ACM creates a validation CNAME record in the public hosted zone. The resulting DNS-validated public certificate can be used for TLS termination at the Application Load Balancer.
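To illustrate what the automated validation amounts to, here is a minimal sketch of requesting a DNS-validated certificate with the AWS CLI. It is not how the CloudFormation templates in this solution are implemented. The helper names and the domain `omero.example.com` used in the usage note are hypothetical, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
# Sketch under assumptions: helper names are illustrative only.
# With DRY_RUN=1 (the default) commands are printed instead of executed.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# request_certificate FQDN
# Requests a public certificate that ACM validates through a DNS record.
request_certificate() {
  run aws acm request-certificate \
    --domain-name "$1" \
    --validation-method DNS
}

# show_validation_record CERTIFICATE_ARN
# Shows the CNAME record ACM expects to find in the public hosted zone.
show_validation_record() {
  run aws acm describe-certificate \
    --certificate-arn "$1" \
    --query 'Certificate.DomainValidationOptions[0].ResourceRecord'
}
```

For example, `request_certificate omero.example.com` prints the request command. With `DRY_RUN=0` and valid credentials it would return the certificate ARN to pass to `show_validation_record`.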

The architecture diagram is shown here:

The current OMERO server supports only one writer per mounted network file share. To avoid a race condition between two instances trying to own a lock on the network file share, the following CloudFormation templates deploy one read+write OMERO server and one read-only OMERO server using this 1-click deployment:

This deploys two nested CloudFormation templates, one for storage (EFS and RDS) and one for the ECS containers (OMERO web and server). It also deploys a certificate for TLS termination at the Application Load Balancer. Most parameters have default values that you can customize. The VPC and subnets are required and can be obtained from the prerequisite deployment. The Hosted Zone ID and fully qualified domain name in Amazon Route 53 are also required; they are used to validate the SSL certificate issued by ACM.

If you do not need the redundancy of a read-only OMERO server, you can deploy a single read+write OMERO server using this 1-click deployment:

Even though the OMERO server is deployed as a single instance, you can still achieve a high availability (HA) deployment of OMERO web and the PostgreSQL database. You have the option to deploy the OMERO containers on either the ECS Fargate or the EC2 launch type.

If you want to expose the OMERO server ports, 4064 and 4063, to the public, you can deploy a stack with a Network Load Balancer (NLB) for those two ports using this 1-click deployment:

If you do not have a registered domain and an associated hosted zone in Amazon Route 53, you can deploy the following CloudFormation stacks and access OMERO web through the Application Load Balancer DNS name, without TLS termination, using this 1-click deployment:

A cost estimate for the compute resources deployed by this solution can be found here.

There are multiple ways to ingest image data into OMERO on AWS:

- Transfer data directly to the EFS file system mounted to the OMERO server through AWS Transfer Family, from an on-premises data center or data acquisition facility.

- Transfer data to EFS through AWS DataSync, either from on-premises data acquisition or from Amazon S3.

- Upload data to Amazon S3 first through AWS Storage Gateway or other tools, then import it into OMERO using the virtualized OMERO.insight desktop application accessed through Amazon AppStream 2.0.

There are two primary ways to land image data on the EFS file share: AWS DataSync and AWS Transfer Family. AWS DataSync can also transfer data from S3.

You can create a DataSync task that monitors an S3 bucket and copies the files to the EFS file share mounted to the OMERO server using this 1-click deployment:

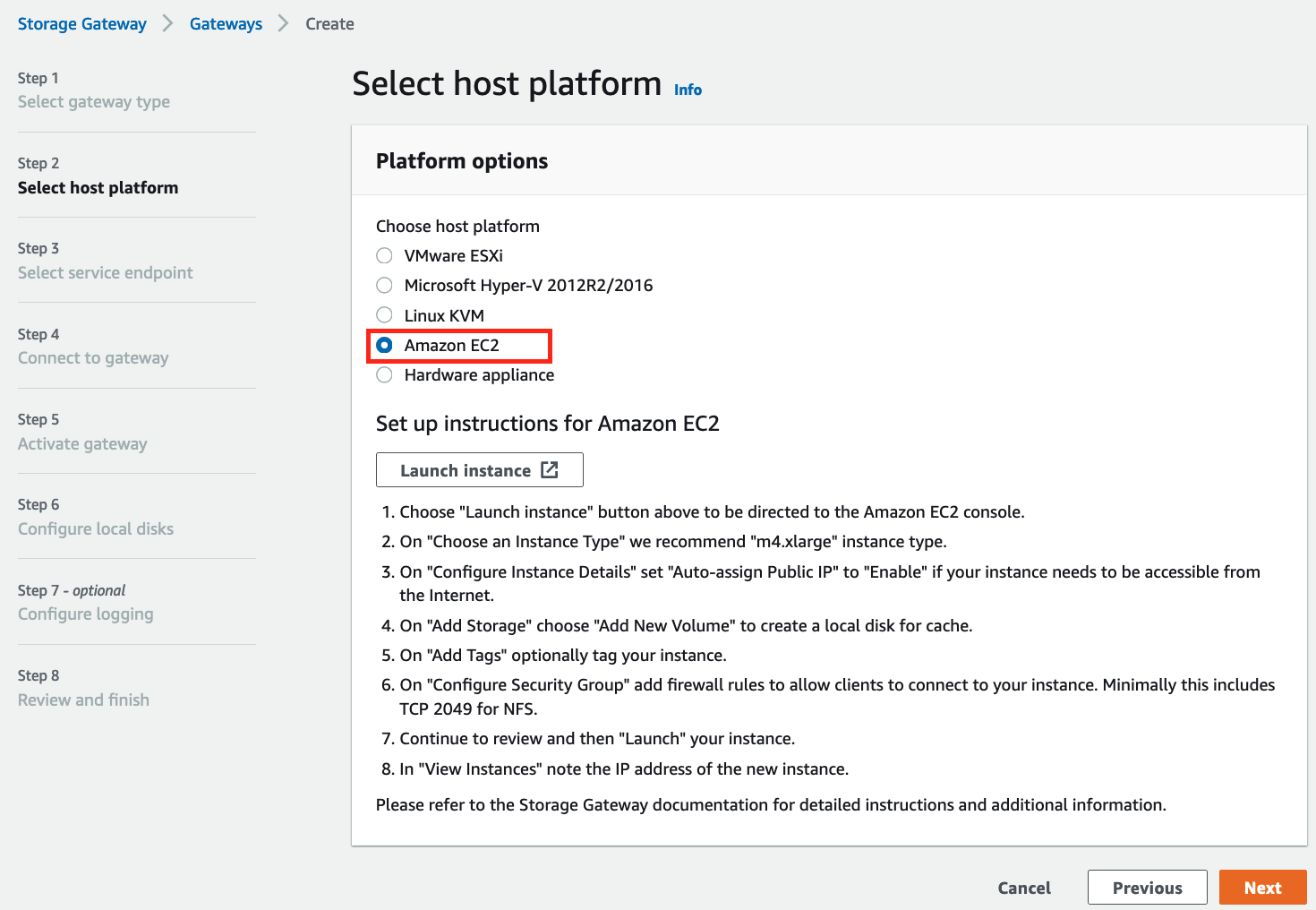

If you have a huge amount of data and I/O performance is not critical, you can store the image files on Amazon S3, deploy an S3 File Gateway on Amazon EC2 to cache a subset of the files, and mount an NFS file share to the OMERO server instead of an Amazon EFS volume. Although Amazon S3 costs significantly less than Amazon EFS, the file gateway instance adds its own cost. We have done a storage cost comparison for 2 TB of data between Amazon EFS with Intelligent-Tiering and Amazon S3 with a file gateway on EC2.

You can follow the instructions to deploy an Amazon S3 File Gateway on EC2 that caches the images in the S3 bucket to reduce storage cost. Alternatively, you can create the EC2 file gateway instance and the S3 bucket using this 1-click deployment:

Create File Gateway on EC2:

Connect to the EC2 file gateway instance through its IP address and activate it. Follow the instructions to configure local disks and logging.

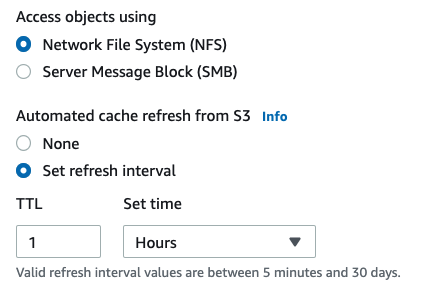

Create an NFS file share on top of the S3 bucket created earlier. If you want files uploaded to the S3 bucket separately to be visible on the NFS share, configure the cache refresh:

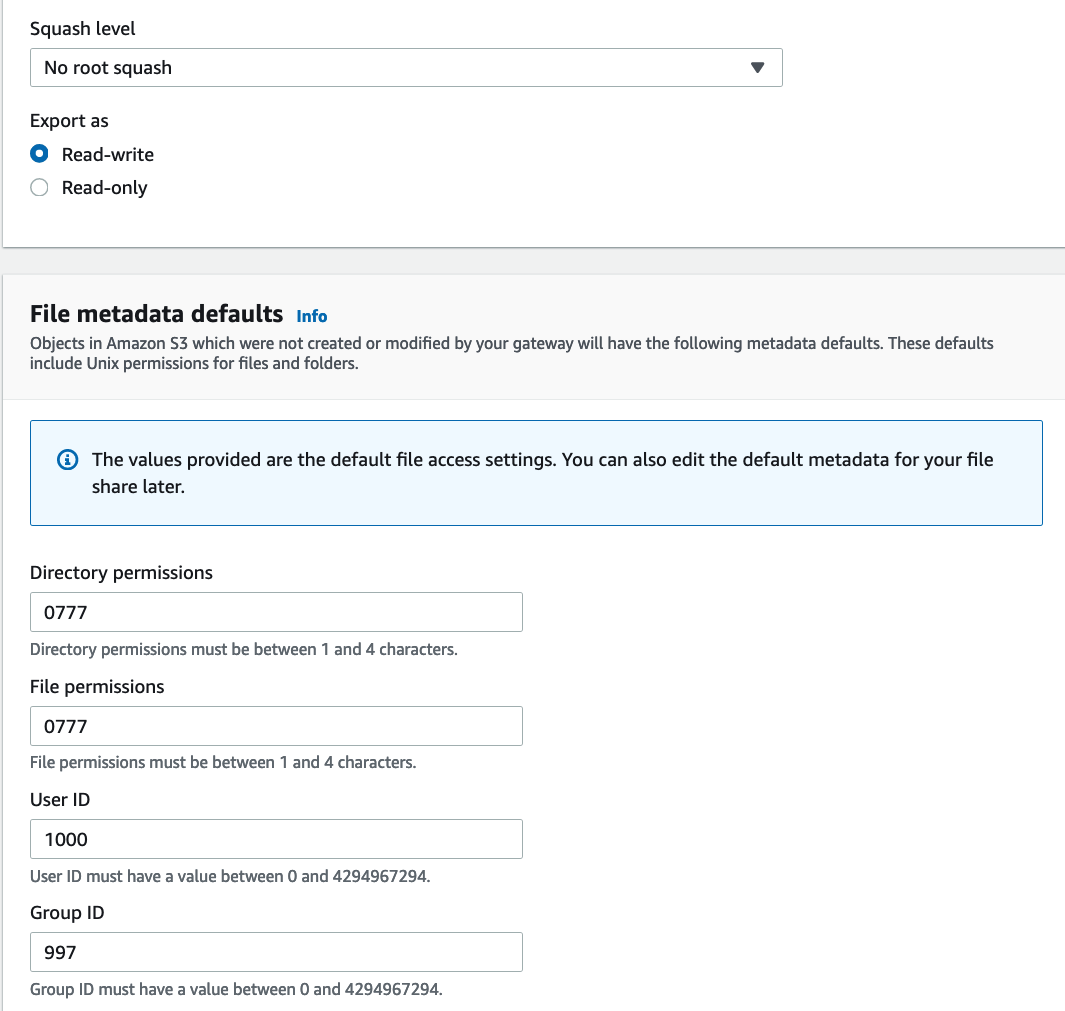

If you want all of the files uploaded to S3 to appear as owned by "omero-server" within the container that mounts the storage gateway, make the following change to the newly created file share:

You can then deploy the OMERO stack with the NFS mount as the storage backend through this 1-click deployment:

Two parameters come from the S3 file gateway deployment: S3FileGatewayIp and S3BucketName.

Or, if you want to deploy with a domain name and SSL certificate:

Once the image data has landed on EFS, you can access and import it into OMERO using the command line interface (CLI). The Amazon ECS Exec command has been enabled for the OMERO.server container service. To run ECS Exec commands on Fargate containers, you need AWS CLI v1.19.28/v2.1.30 or later and the SSM plugin for the AWS CLI installed. Get the ECS task ID of the OMERO server from the Amazon ECS console, and run:

```shell
aws ecs execute-command --cluster OMEROECSCluster --task <your ECS task Id> --interactive --command "/bin/sh"
```

If the command fails, you can use the diagnosis tool to check the issue.
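The version requirement for ECS Exec can be checked before attempting the command. The following is a small sketch with hypothetical helper names (`version_ge`, `supports_ecs_exec`, `task_id_from_arn`); it assumes a `sort` that supports version ordering (`-V`, as in GNU coreutils) and the usual ECS task ARN layout, where the task ID is the last path segment.

```shell
# Sketch: helper names below are illustrative, not part of the deployment.

# version_ge A B succeeds when version A is greater than or equal to B.
# Relies on "sort -V" (version sort), available in GNU coreutils.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# supports_ecs_exec VERSION succeeds when an AWS CLI version meets the
# minimum stated above: 1.19.28 for v1, 2.1.30 for v2.
supports_ecs_exec() {
  case "$1" in
    1.*) version_ge "$1" 1.19.28 ;;
    *)   version_ge "$1" 2.1.30 ;;
  esac
}

# task_id_from_arn ARN prints the last path segment of an ECS task ARN,
# which is the value expected by "aws ecs execute-command --task".
task_id_from_arn() {
  printf '%s\n' "${1##*/}"
}
```

One possible use is `supports_ecs_exec "$(aws --version 2>&1 | cut -d/ -f2 | cut -d' ' -f1)" && echo "ECS Exec supported"`, which assumes `aws --version` output begins with `aws-cli/<version>`.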

After logging in, switch to a non-root OMERO system user, such as omero-server, and activate the virtual environment:

```shell
source /opt/omero/server/venv3/bin/activate
```

Once the environment is activated, you can run an in-place import on images that have already been transferred to the EFS mount, for example `omero import --transfer=ln_s <file>`. Note that the OMERO CLI importer has to run on the OMERO server container.

If you deployed the OMERO server on the EC2 launch type, you can also run docker commands to import images. Assuming you want to import all of the images in /OMERO/downloads:

```shell
# Find the ID of the running OMERO server container
containerID=$(docker ps | grep omeroserver | awk '{print $1;}')

# List the files in /OMERO/downloads inside the container
files=$(docker exec "$containerID" bash -c "ls /OMERO/downloads")

# Import each file in place (file names must not contain whitespace)
for FILE in $files; do
  docker exec "$containerID" bash -c "source /opt/omero/server/venv3/bin/activate; omero import -s localhost -p 4064 -u root --transfer=ln_s /OMERO/downloads/$FILE"
done
```

OMERO supports automated file import using DropBox. You can create a folder in the /OMERO/DropBox directory named after the user, for example:

```shell
mkdir /OMERO/DropBox/root
```

Then run the following commands to create the automated import job on the OMERO server:

```shell
export OMERODIR=/opt/omero/server/OMERO.server
$OMERODIR/bin/omero config set omero.fs.watchDir "/OMERO/DropBox"
$OMERODIR/bin/omero config set omero.fs.importArgs '-T "regex:^.*/(?<Container1>.*?)"'
```

To confirm that the job was created successfully, check the /opt/omero/server/OMERO.server/var/log/DropBox.log file. You should see a line similar to: Started OMERO.fs DropBox client
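The log check can be scripted. This is a minimal sketch in which `dropbox_started` is a hypothetical helper name; it only searches for the startup message quoted above and defaults to the log path given above.

```shell
# Sketch: dropbox_started is an illustrative helper, not an OMERO command.
# Succeeds when the given log file contains the DropBox startup message.
dropbox_started() {
  log_file="${1:-/opt/omero/server/OMERO.server/var/log/DropBox.log}"
  # -F treats the message as a fixed string, -q suppresses output
  grep -qF "Started OMERO.fs DropBox client" "$log_file"
}
```

For example, `dropbox_started && echo "DropBox import job is running"` could be run inside the OMERO server container after setting the configuration.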

According to the documentation, you can add other arguments, for example for in-place import:

```shell
$OMERODIR/bin/omero config set omero.fs.importArgs '-T "regex:^.*/(?<Container1>.*?)"; --transfer=ln_s'
```

Or, to check whether a given path has already been imported (to avoid duplicate imports):

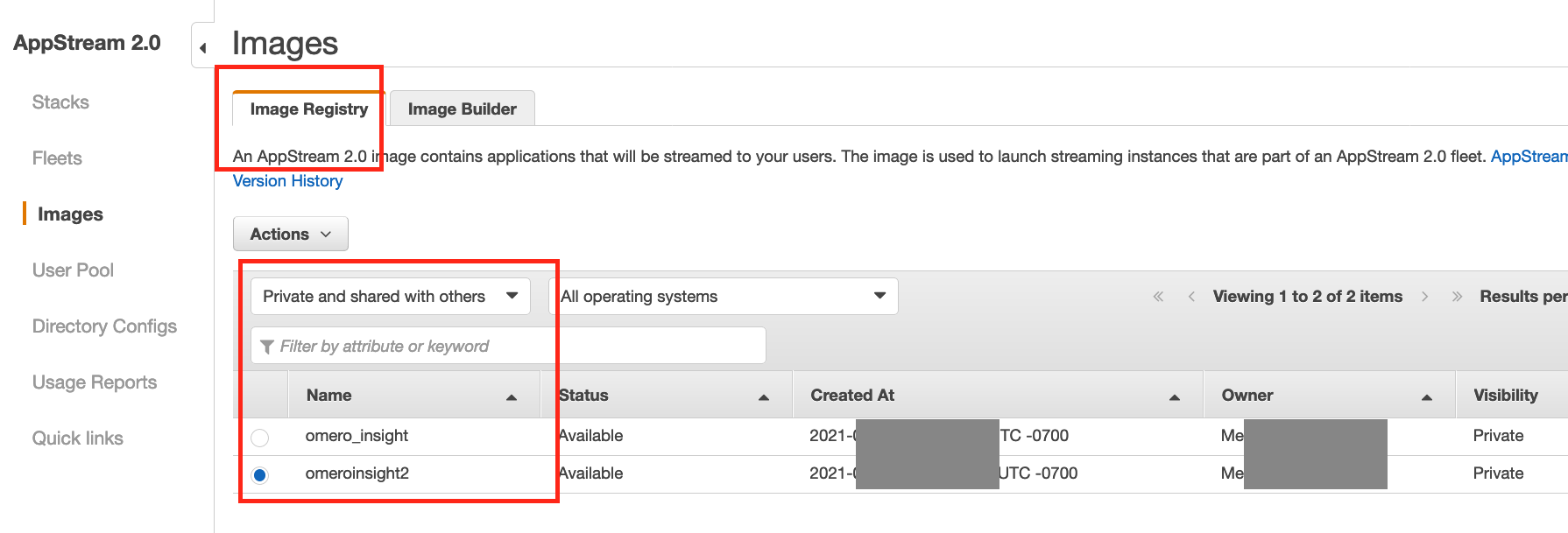

```shell
$OMERODIR/bin/omero config set omero.fs.importArgs '-T "regex:^.*/(?<Container1>.*?)"; --exclude=clientpath'
```

Here are the steps to run OMERO.insight on Amazon AppStream 2.0, which can import data from Amazon S3 directly into the OMERO server:

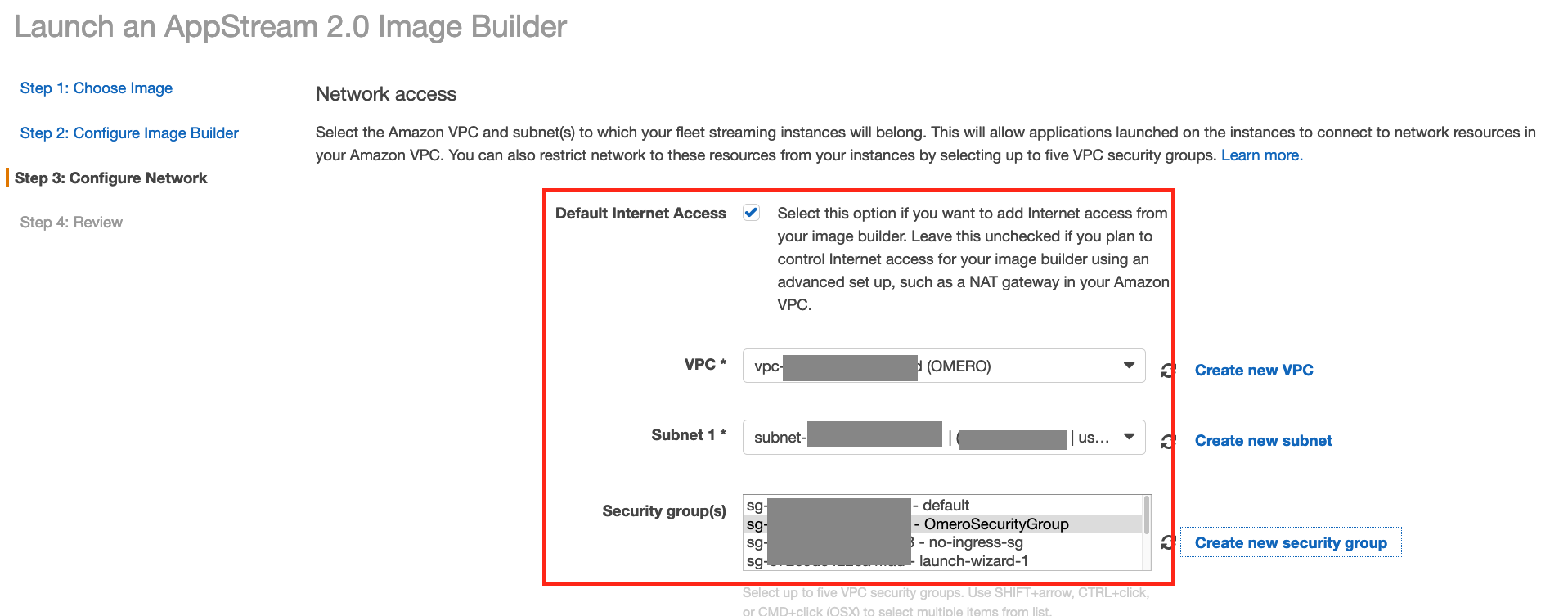

- Follow the instructions to create a custom image using the AppStream console. The Windows installation file can be downloaded here. Launch an image builder, start with any of the Windows base images, select the instance size, and either create a new IAM role or use an existing one with S3 access permission. Select the VPC where the OMERO stack was deployed and a public subnet that can be accessed from the internet. The image builder instance is stopped after the image is built.

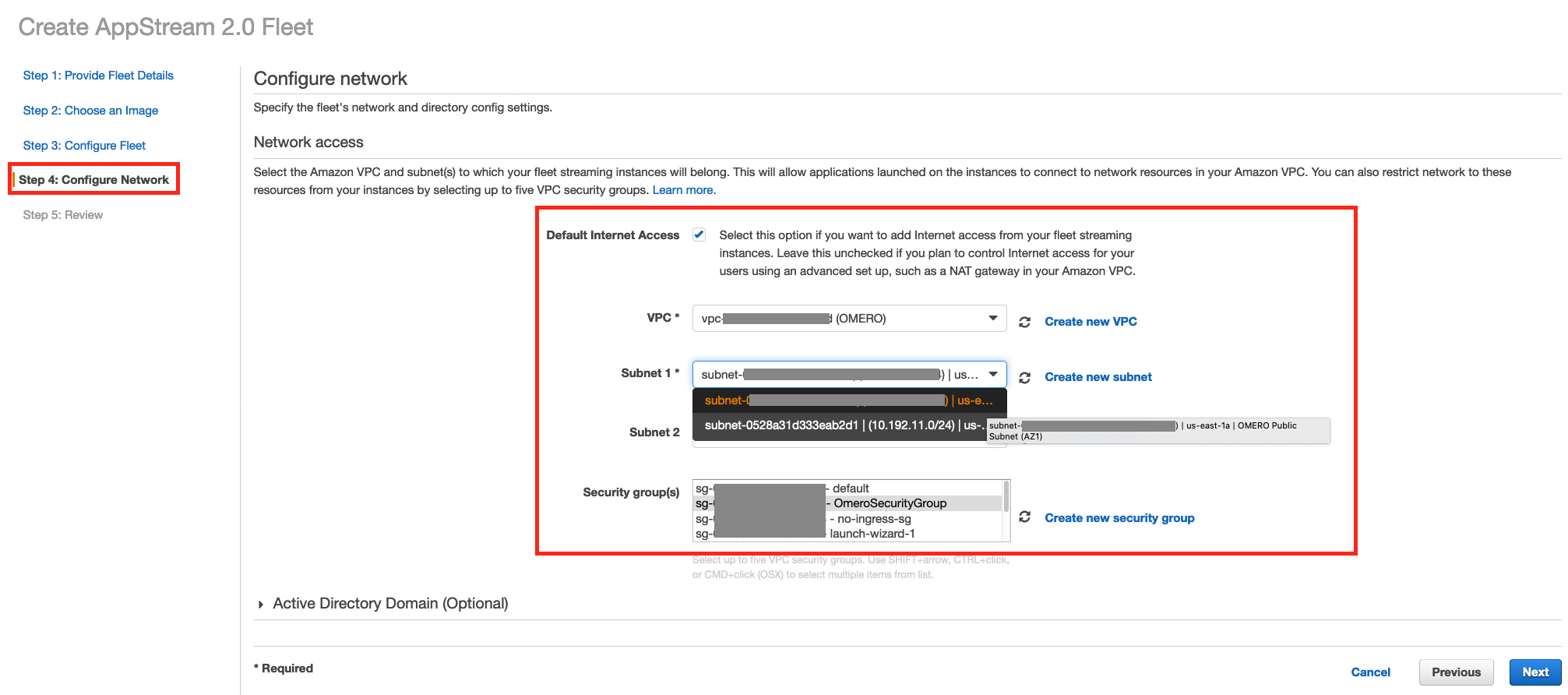

- Follow the instructions to create a fleet and a stack. When you create the fleet, select the VPC where the OMERO stack was deployed, two public subnets that can be accessed from the internet, and the OMERO security group that allows access to the OMERO server.

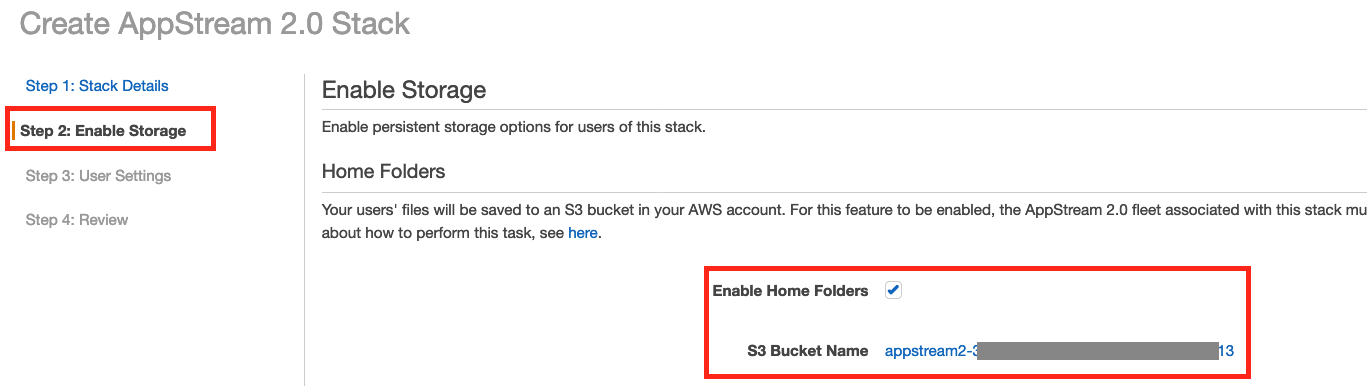

When you create the stack, enable the Home Folder in Amazon S3 so that you can import image files from S3 directly into the OMERO server.

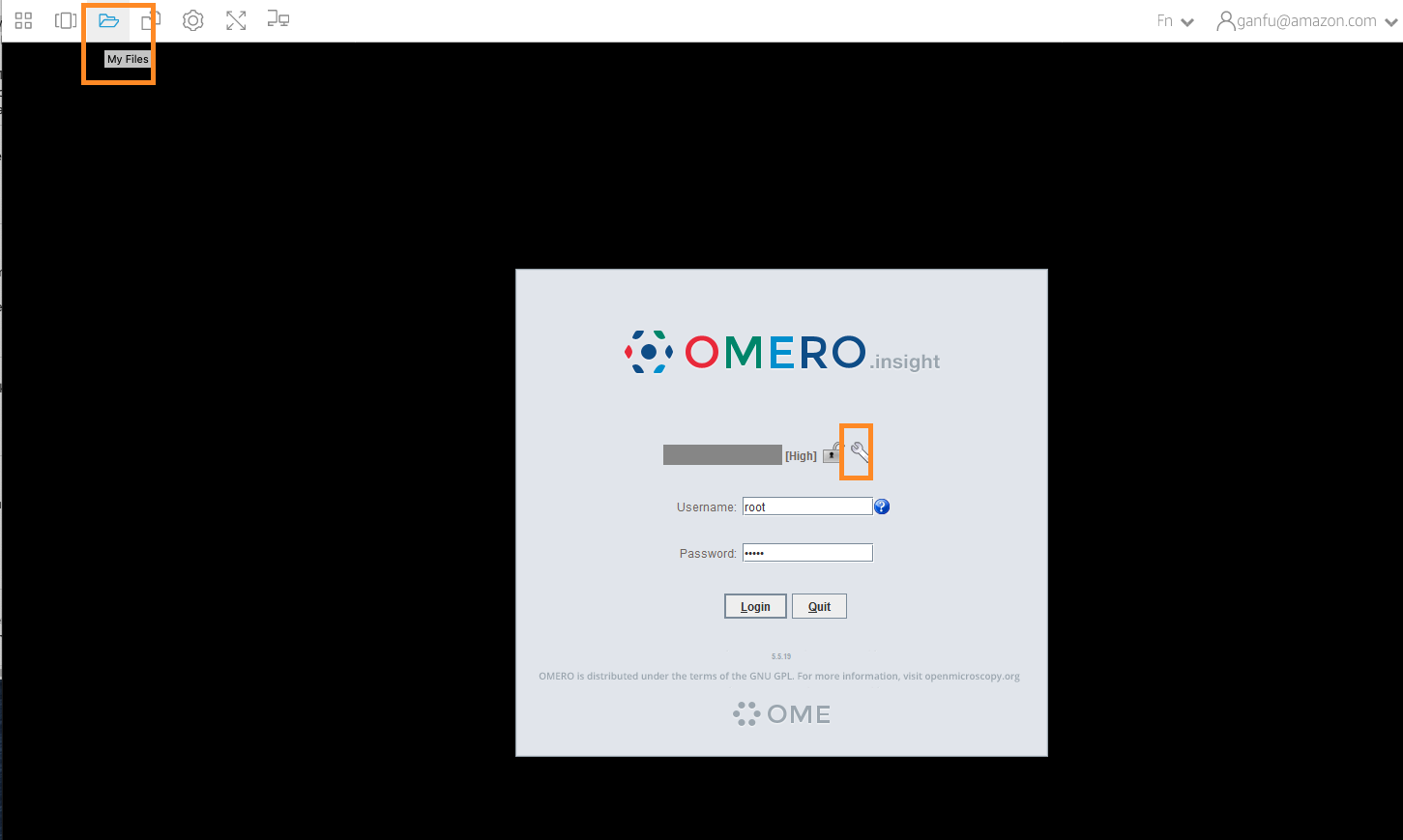

- Create a user in the AppStream 2.0 User Pool, and access OMERO.insight through the streaming service using the login URL sent by email or the one created for the given stack. Once you are in the virtualized OMERO.insight on AppStream 2.0, you can access the home folder on S3 and set up the OMERO server connection.

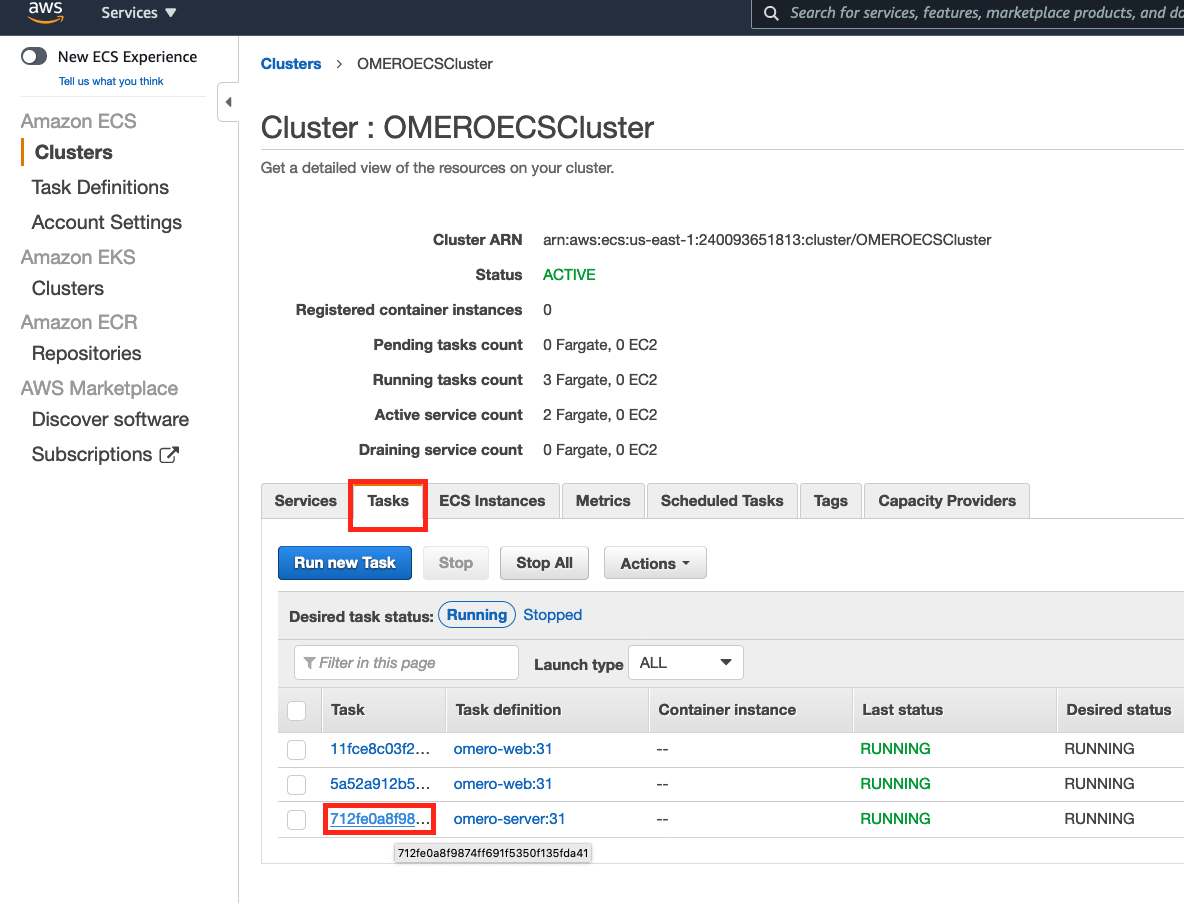

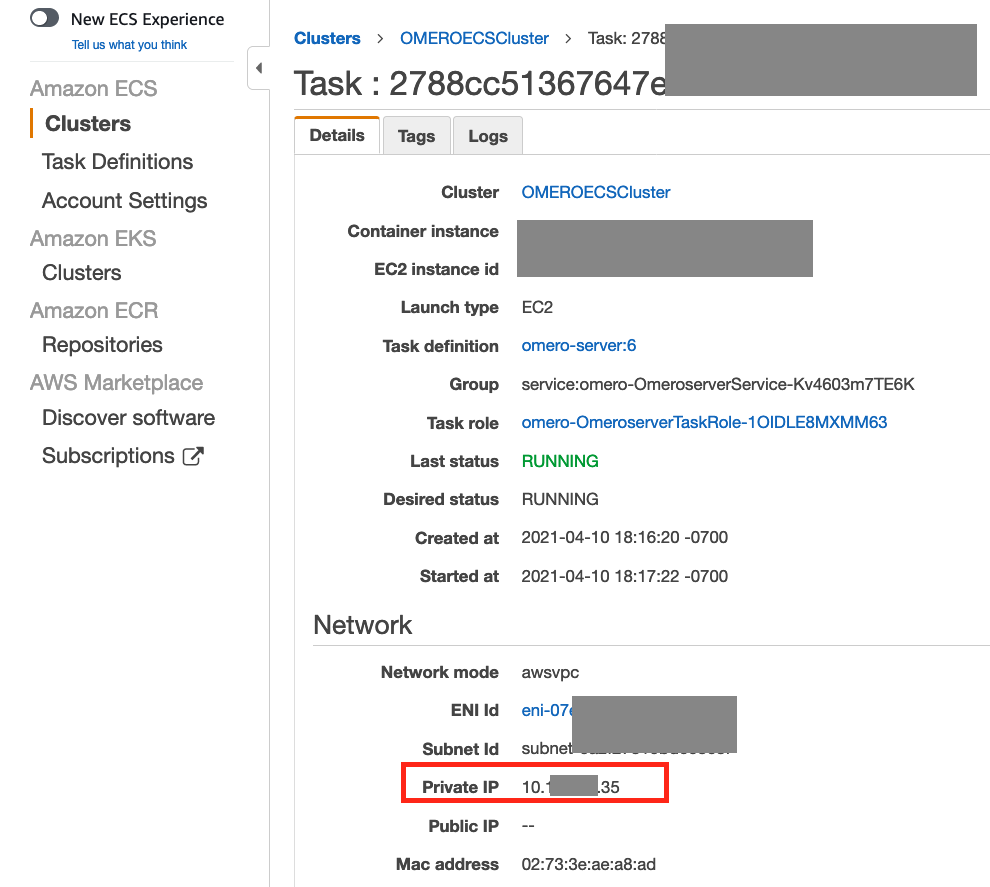

In this deployment, the OMERO server container instance is assigned a private IP address by the awsvpc network mode. The IP address can be found in the AWS console on the ECS Cluster => Task => Task detail page:
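The same address can also be looked up from the command line. The sketch below assumes the task uses the awsvpc network mode, so that its network interface attachment carries a `privateIPv4Address` detail; `task_private_ip` is a hypothetical helper name, and the command is only printed unless `DRY_RUN=0` is set.

```shell
# Sketch under assumptions: prints the command by default (DRY_RUN=1);
# set DRY_RUN=0 to execute it with configured AWS credentials.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# task_private_ip CLUSTER TASK_ID
# Queries the network interface attachment of an awsvpc task
# for its private IPv4 address.
task_private_ip() {
  run aws ecs describe-tasks \
    --cluster "$1" \
    --tasks "$2" \
    --query "tasks[0].attachments[0].details[?name=='privateIPv4Address'].value" \
    --output text
}
```

For example, `DRY_RUN=0 task_private_ip OMEROECSCluster <your ECS task Id>` would print the address to use as the OMERO server host.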

Note that the OMERO CLI cannot perform an in-place import from a separate EC2 instance.

If you want to run OMERO CLI client on another EC2 instance to transfer and import images from Amazon S3, you can use this 1-click deployment:

You can reuse the EFSFileSystem Id, AccessPoint Id, EFSSecurityGroup, OmeroSecurityGroup, PrivateSubnetId, and VPCID from the earlier deployments. The EC2 instance has the AWS CLI, Amazon Corretto 11, and omero-py installed through EC2 user data shell scripts. To import microscopy images into the OMERO server, connect to the EC2 instance using Session Manager (logged in as ssm-user) and run:

```shell
source /etc/bashrc
conda create -n myenv -c ome python=3.6 bzip2 expat libstdcxx-ng openssl libgcc zeroc-ice36-python omero-py -y
source activate myenv
```

You can download an image from Amazon S3 using the command:

```shell
aws s3 cp s3://xxxxxxxx.svs .
```

and then use the OMERO client CLI on the EC2 instance to import the image into OMERO:

```shell
omero login
```

The IP address of the OMERO server can be found in the same way as demonstrated above. The default port number is 4064, and the default OMERO username (root) and password (omero) are used here.

Once you are logged in, you can import whole slide images, for example:

```shell
omero import ./xxxxxx.svs
```
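The download and import steps can be combined into one helper. This is only a sketch: `fetch_and_import` is a hypothetical name, it assumes an `omero login` session is already active, and it prints the commands unless `DRY_RUN=0` is set.

```shell
# Sketch under assumptions: prints commands by default (DRY_RUN=1);
# set DRY_RUN=0 to execute them after "omero login" has succeeded.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# fetch_and_import S3_URI
# Copies one object from S3 to the current directory, then imports it.
fetch_and_import() {
  s3_uri="$1"
  file_name="${s3_uri##*/}"   # object name without bucket and prefix
  run aws s3 cp "$s3_uri" "./$file_name" &&
    run omero import "./$file_name"
}
```

Because this runs on a separate EC2 instance, the image is uploaded to the OMERO server rather than imported in place.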

To clean up the deployed stack:

- If you deployed with an SSL certificate, go to the hosted zone in Route 53 and remove the validation record that routes traffic to _xxxxxxxx.xxxxxxxx.acm-validations.aws.

- Empty and delete the S3 bucket for the Load Balancer access log (LBAccessLogBucket in the template) before deleting the OMERO stack.

- Manually delete the EFS file system and the RDS database. By default, the storage is retained after the CloudFormation stack is deleted.
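The bucket step can be sketched with the AWS CLI as follows. The helper name `empty_and_delete_bucket` is hypothetical, the actual bucket name must be taken from the LBAccessLogBucket resource of your stack, and the commands are only printed unless `DRY_RUN=0` is set. The sketch does not cover the Route 53 record, EFS, or RDS steps.

```shell
# Sketch under assumptions: prints commands by default (DRY_RUN=1);
# set DRY_RUN=0 to execute them. Deletion is irreversible.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# empty_and_delete_bucket BUCKET_NAME
# Removes every object in the bucket, then removes the bucket itself.
empty_and_delete_bucket() {
  run aws s3 rm "s3://$1" --recursive &&
    run aws s3 rb "s3://$1"
}
```

Reviewing the printed commands first is a simple safeguard against deleting the wrong bucket.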

The following information was used to build this solution:

- OMERO Docker

- OMERO deployment examples

- Deploying Docker containers on ECS

- Tutorial on EFS for ECS EC2 launch type

- Blog post on EFS for ECS Fargate

- Blog post on EFS as Docker volume

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.

This deployment was built on the open source version of OMERO. OMERO is licensed under the terms of the GNU General Public License (GPL) v2 or later. Please note that by running OMERO under GPLv2, you consent to complying with any and all requirements imposed by GPLv2 in full, including but not limited to attribution requirements. You can see a full list of requirements under GPLv2.