BitStream-Corrupted Video Recovery:

A Novel Benchmark Dataset and Method

(This page is under continuous update and construction.)

Update

2023.09.22: 🎉 We are delighted to announce that our paper has been accepted by the NeurIPS 2023 Datasets and Benchmarks Track. We will upload the preprint version soon!

2023.08.30: We additionally present some performance comparisons in video form, which highlight the advantage of our method in recovering long-term and large-area corruptions.

2023.08.29: We shared our proposed video recovery method and evaluation results in the Method section.

2023.08.24: 1) New YouTube-VOS&DAVIS branches are available with tougher parameter adjustments and corruption ratios. 2) A new subset with higher resolutions (1080P and 4K) is included. 3) The H.265 protocol is supported.


Dataset

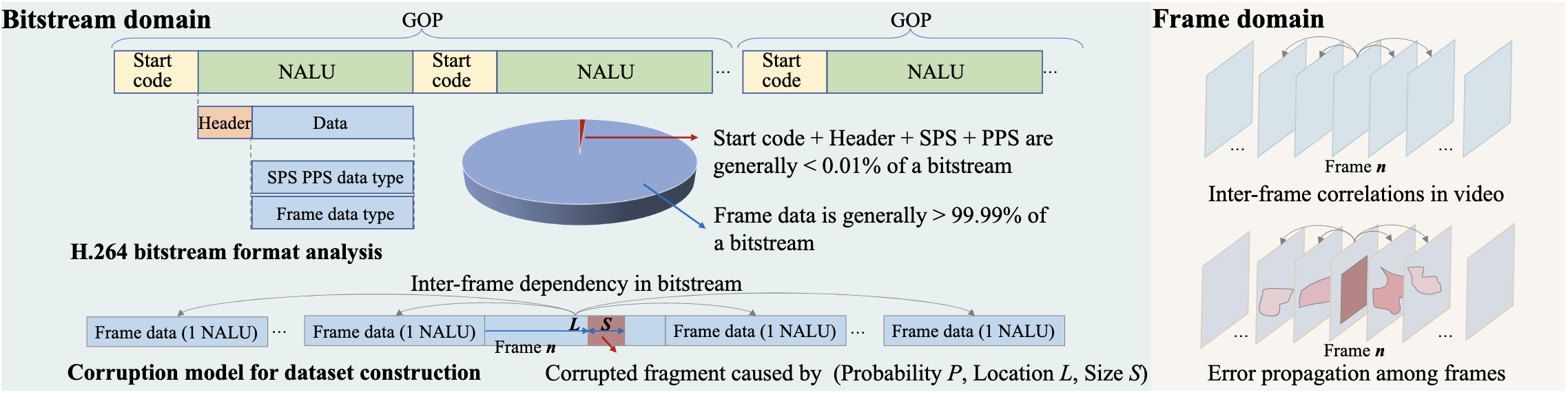

For each video in the YouTube-VOS&DAVIS subset, we provide differently corrupted versions under various parameter settings. From left to right: (P, L, S) = (1/16, 0.4, 2048), (1/16, 0.4, 4096), (1/16, 0.2, 4096), (2/16, 0.4, 4096), followed by the additional branches (1/16, 0.4, 8192), (1/16, 0.8, 4096), and (4/16, 0.4, 4096). The parameters are explained below and in the paper.

Property

- Flexible video resolution setting (480P, 720P, 1080P, 4K)

- Realistic video degradation caused by bitstream corruption.

- Unpredictable error patterns of varying degrees.

- About 30K video clips and 3.5M frames, of which 50% contain corruption.

- ...

Download

To download the dataset, please check this link (extensions to higher resolutions and more parameter combinations, as well as uploading, are in progress).

Extraction

We have separated the dataset into training and testing sets for each branch in YouTube-VOS&DAVIS.

The YouTube-UGC 1080P subset and the Videezy4K 4K subset are currently constructed for testing.

After downloading the .tar.gz files, please first restore the original .tar.gz files, then unzip the archives and format the folders by running

$ bash format.sh

After the data preparation, the FFmpeg-encoded original (GT) video bitstreams are provided in the _144096 branch in the folder GT_h264, and their decoded frame sequences are provided in the folder GT_JPEGImages.

We also provide the H.264 bitstream of each video and its decoded frame sequence, as in commonly used video datasets.

Additionally, the mask sequence used to indicate the corrupted regions is provided in the masks folder of each branch. The files are structured as follows:

BSCV-Dataset
|-scripts                          # Codes for dataset construction
|-YouTube-VOS&DAVIS
|  |-train_144096                  # Branch_144096
|     |-GT_h264
|        |-##########.h264         # H.264 video bitstream with original id in YouTube-VOS dataset
|        |-...                     # 3,471 bitstream files in total
|     |-GT_JPEGImages
|        |-##########              # Corresponding video ID
|           |-00000.jpg            # Decoded frame sequence with different length
|           |-...
|        |-...                     # 3,471 frame sequence folders in total
|     |-BSC_h264                   # Corresponding corrupted bitstream
|        |-##########_2.h264
|        |-...
|     |-BSC_JPEGImages             # Corresponding decoded corrupted frame sequence
|        |-##########
|           |-00000.jpg
|           |-...
|        |-...
|     |-masks                      # Corresponding mask sequence
|        |-##########
|           |-00000.png
|           |-...
|        |-...
|     |-Diff                       # The binary difference map for mask generation by morphological operations
|        |-##########
|           |-00000.png
|           |-...
|        |-...
|  |-train_142048                  # The following branches have the same structure, only without GT data
|     |-BSC_h264
|     |-BSC_JPEGImages
|     |-masks
|     |-Diff
|  |...
|-YouTube-UGC-1080P
|  |-FHD
|     |-FHD_124096
|        |-GT_h264
|        |-GT_JPEGImages
|        |-BSC_h264
|        |-BSC_JPEGImages
|        |-masks
|        |-Diff
|     |...
|-Videezy4K-4K
|  |-QHD
|     |-GT_h264
|     |-GT_JPEGImages
|     |-BSC_h264
|     |-BSC_JPEGImages
|     |-masks
|     |-Diff
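Given the folder layout above, the corrupted frames, masks, and ground-truth frames of a video can be paired by video ID and frame name. The following is a minimal sketch, assuming that frame file names are aligned across `BSC_JPEGImages`, `masks`, and `GT_JPEGImages` (an assumption on our side, not verified against every branch). `FrameSample` and `iter_samples` are hypothetical helper names and are not part of the released scripts.

```python
from pathlib import Path
from typing import Iterator, NamedTuple, Optional


class FrameSample(NamedTuple):
    corrupted: Path               # <branch>/BSC_JPEGImages/<video_id>/<frame>.jpg
    mask: Path                    # <branch>/masks/<video_id>/<frame>.png
    ground_truth: Optional[Path]  # <gt_branch>/GT_JPEGImages/<video_id>/<frame>.jpg


def iter_samples(branch_dir: Path,
                 gt_branch_dir: Optional[Path] = None) -> Iterator[FrameSample]:
    """Yield path triplets for every corrupted frame of one branch.

    ASSUMPTION: masks and GT frames share the video ID and frame stem of the
    corrupted frame. Paths are only constructed, not checked for existence.
    """
    bsc_root = branch_dir / "BSC_JPEGImages"
    for video_dir in sorted(p for p in bsc_root.iterdir() if p.is_dir()):
        for frame in sorted(video_dir.glob("*.jpg")):
            mask = branch_dir / "masks" / video_dir.name / (frame.stem + ".png")
            gt = None
            if gt_branch_dir is not None:
                # GT data is only stored in the _144096 branch.
                gt = gt_branch_dir / "GT_JPEGImages" / video_dir.name / frame.name
            yield FrameSample(frame, mask, gt)
```

Because GT data is stored only in the _144096 branch, the sketch takes the GT branch as a separate argument, e.g. `iter_samples(root / "train_142048", root / "train_144096")`.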

Extension

We adopt FFmpeg as our video codec. Please refer to the official guidelines for installing FFmpeg.

We propose a parameter model for generating bitstream corruption, which can produce arbitrarily corrupted videos, including additional branches.

You can use the provided program with your own parameter combination to generate arbitrary branches, based on a GOP size of 16 as in our setting, with a command such as:

python corrpt_Gen.py --prob 1 --pos 0.4 --size 4096

Please use integers for prob and size, and a float for pos, due to the limitation of our current experimental setting.
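To generate several branches in one go, the command above can be wrapped in a small driver. This is only a sketch: the flags `--prob`, `--pos`, and `--size` are taken from the command above, while `build_command`, `generate_branch`, and `guessed_branch_suffix` are hypothetical helpers. In particular, the suffix rule is inferred from names on this page such as `_144096` for (1/16, 0.4, 4096) and `_142048` for (1/16, 0.4, 2048), and may not match what the script actually writes.

```python
import subprocess
import sys
from typing import List


def build_command(prob: int, pos: float, size: int,
                  script: str = "corrpt_Gen.py") -> List[str]:
    """Build the argument list for one (prob, pos, size) combination."""
    if not isinstance(prob, int) or not isinstance(size, int):
        raise TypeError("prob and size must be integers")
    return [sys.executable, script,
            "--prob", str(prob), "--pos", str(float(pos)), "--size", str(size)]


def guessed_branch_suffix(prob: int, pos: float, size: int) -> str:
    # ASSUMPTION: suffix = prob numerator, first decimal of pos, size,
    # inferred from the branch names shown in this README.
    return f"_{prob}{round(pos * 10)}{size}"


def generate_branch(prob: int, pos: float, size: int,
                    dry_run: bool = True) -> List[str]:
    """Return the command; run it only when dry_run is False."""
    cmd = build_command(prob, pos, size)
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    for params in [(1, 0.4, 4096), (2, 0.4, 4096), (1, 0.2, 4096)]:
        print(guessed_branch_suffix(*params), " ".join(generate_branch(*params)))
```

The default `dry_run=True` only prints the commands, which makes it easy to check a parameter grid before spending time on corruption and decoding.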

If you want to adjust the GOP size, please refer to FFmpeg's instructions to re-encode the frame sequences in the GT_JPEGImages folder of branch _144096.
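As a rough illustration of such a re-encoding step, the sketch below builds an FFmpeg command that encodes one frame folder into a raw H.264 bitstream with a chosen GOP size. The options used (`-framerate`, `-start_number`, `-c:v libx264`, `-g`, `-pix_fmt`, `-f h264`) are standard FFmpeg options, but the frame rate, pixel format, and the helper name `build_reencode_command` are assumptions and not necessarily the settings used to build the dataset.

```python
from pathlib import Path
from typing import List


def build_reencode_command(frames_dir: Path, output: Path,
                           gop_size: int = 16, fps: int = 30) -> List[str]:
    """Build an FFmpeg command that encodes 00000.jpg, 00001.jpg, ... to H.264.

    ASSUMPTION: fps=30 and yuv420p are placeholder choices, not dataset settings.
    """
    return [
        "ffmpeg", "-y",
        "-framerate", str(fps),
        "-start_number", "0",                # frame sequences start at 00000.jpg
        "-i", str(frames_dir / "%05d.jpg"),  # five-digit frame names
        "-c:v", "libx264",
        "-g", str(gop_size),                 # maximum keyframe interval (GOP size)
        "-pix_fmt", "yuv420p",
        "-f", "h264",                        # raw H.264 elementary stream
        str(output),
    ]
```

The returned list can be passed to `subprocess.run(cmd, check=True)`. Note that `-g` sets the maximum keyframe interval, so the encoder may still insert additional keyframes, for example at scene changes.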

PS: The Linux and Windows versions of FFmpeg seem to behave differently: in practice we encountered lost-frame errors when decoding on Linux, while the same bitstream decodes fine on Windows. We therefore recommend using FFmpeg on Windows to avoid the lost-frame issue if you are generating new branches.
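One simple way to spot the lost-frame issue after generating a branch is to compare the number of decoded frames per video against the GT frame sequences. The helper below is a hypothetical sketch (`find_frame_count_mismatches` is not part of the released scripts) and assumes that both roots contain one folder of `.jpg` frames per video ID, as in the layout of this dataset.

```python
from pathlib import Path
from typing import Dict, Tuple


def find_frame_count_mismatches(gt_root: Path,
                                bsc_root: Path) -> Dict[str, Tuple[int, int]]:
    """Map video ID -> (GT frame count, decoded corrupted frame count)
    for every video whose two counts differ."""
    mismatches: Dict[str, Tuple[int, int]] = {}
    for gt_video in sorted(p for p in gt_root.iterdir() if p.is_dir()):
        n_gt = len(list(gt_video.glob("*.jpg")))
        bsc_video = bsc_root / gt_video.name
        # A missing folder is treated as zero decoded frames.
        n_bsc = len(list(bsc_video.glob("*.jpg"))) if bsc_video.is_dir() else 0
        if n_gt != n_bsc:
            mismatches[gt_video.name] = (n_gt, n_bsc)
    return mismatches
```

An empty result means every corrupted sequence has as many frames as its GT counterpart; any listed video ID is a candidate for re-decoding.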

Method

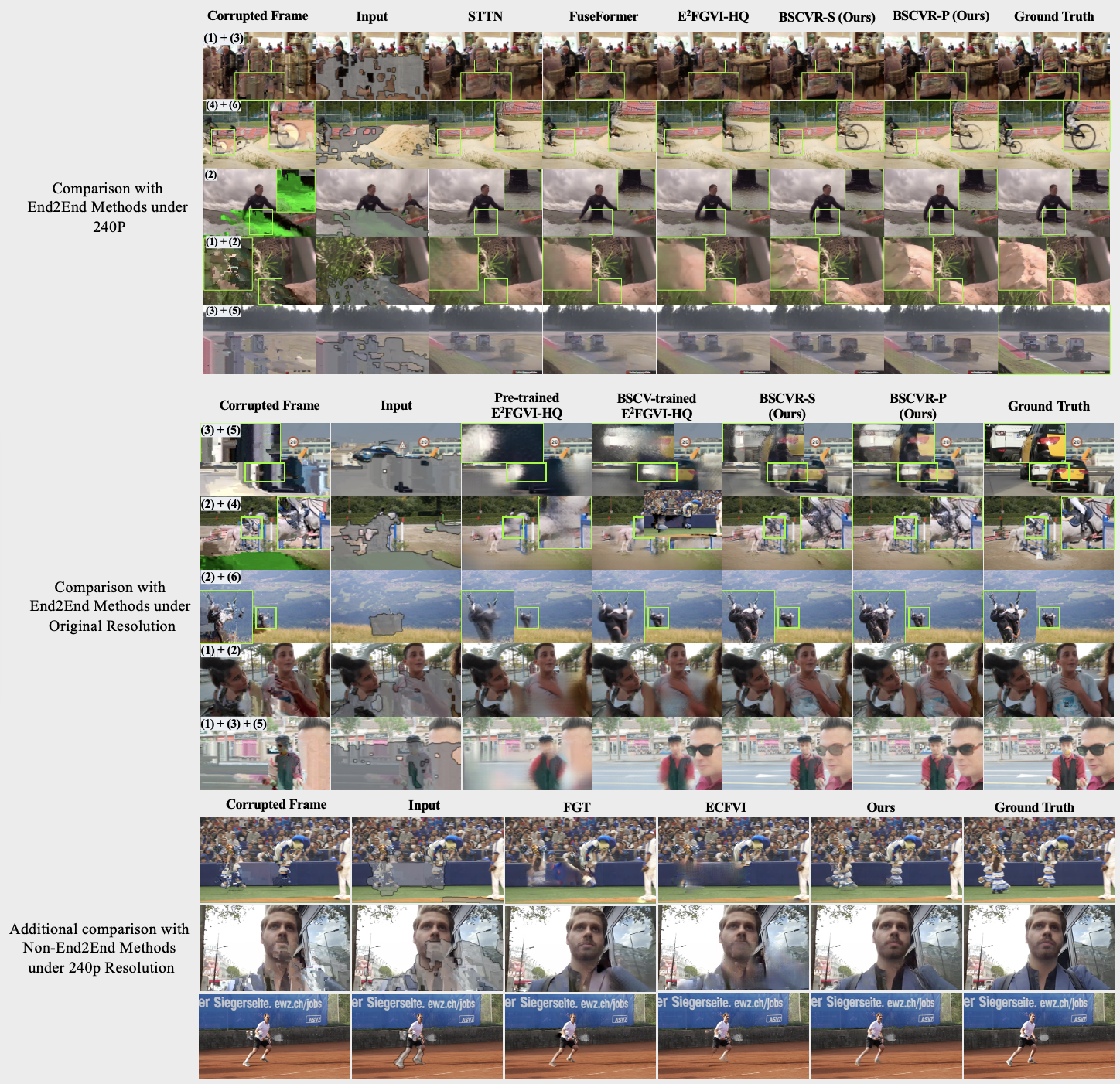

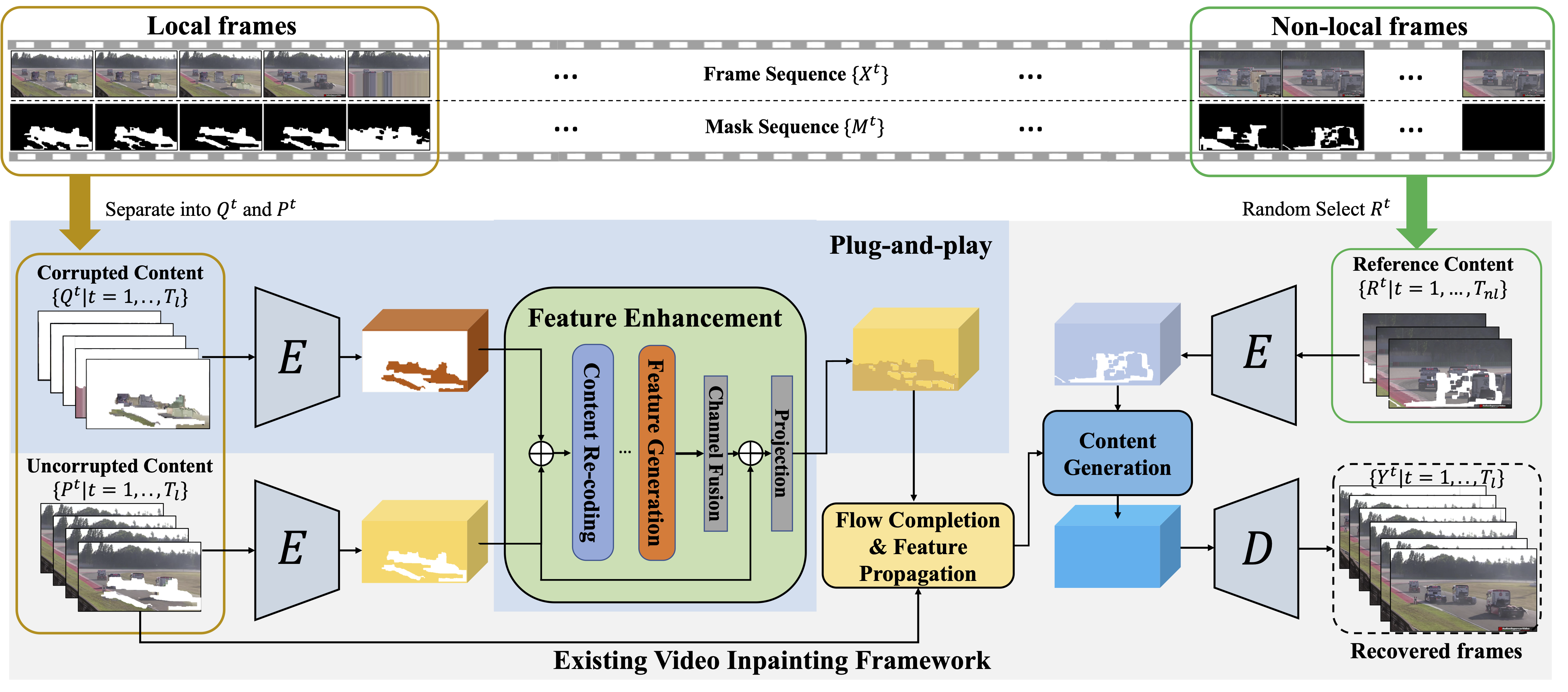

We propose a recovery framework based on an end-to-end video inpainting method that leverages the partial contents in the corrupted regions, and we achieve better recovery quality than existing SOTA video inpainting methods.

More performance comparisons of recovery results in video form are shown below.

Under 240P resolution: from left to right, top to bottom: Corrupted Video, Mask Input, STTN, FuseFormer, E2FGVI-HQ, BSCVR-S (Ours), BSCVR-P (Ours), and Ground Truth.

Under the original 480/720P resolution: from left to right, top to bottom: Corrupted Video, Mask Input, Ground Truth, Pretrained E2FGVI-HQ, BSCV-trained E2FGVI-HQ, and BSCVR-S (Ours).

For additional comparison with non-end-to-end methods, our preliminary evaluation results are:

| Method | PSNR | SSIM | LPIPS | VFID | Runtime |
|---|---|---|---|---|---|
| FGT[2] | 31.5407 | 0.8967 | 0.0486 | 0.3368 | ~1.97 |
| ECFVI[1] | 20.8676 | 0.7692 | 0.0705 | 0.3019 | ~2.24 |
| BSCVR-S (Ours) | 28.8288 | 0.9138 | 0.0399 | 0.1704 | 0.172 |
| BSCVR-P (Ours) | 29.0186 | 0.9166 | 0.0391 | 0.1730 | 0.178 |
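For readers unfamiliar with the PSNR column, the standard definition is PSNR = 10 · log10(MAX² / MSE), where MSE is the mean squared error between the reference and the recovered frame. The snippet below is a minimal standard-library sketch of that formula, assuming 8-bit pixel values passed as flat sequences; it is not the evaluation script behind the table above, whose exact protocol (color space, per-frame versus per-video averaging) is not specified here.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], restored: Sequence[float],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(restored) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - o) ** 2 for r, o in zip(reference, restored)) / len(reference)
    if mse == 0:
        return float("inf")  # identical frames
    return 10.0 * math.log10(max_value ** 2 / mse)
```

In practice the same computation is usually applied to image arrays; flat sequences are used here only to keep the sketch dependency-free.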

Performance comparisons of recovery results in video form are shown below. From left to right, top to bottom: Corrupted Video, Mask Input, Ground Truth, FGT, ECFVI, and our method.

These video presentations highlight the advantages of our model in recovering long-term video sequences and large-area error patterns caused by bitstream corruption.

Experimental Setup

The code for our method, experimental setup, and evaluation scripts will be released soon after packaging and checking.

Citation

If you find our paper and/or code helpful, please consider citing:

@article{liu2023bitstream,
  title={Bitstream-Corrupted Video Recovery: A Novel Benchmark Dataset and Method},
  author={Liu, Tianyi and Wu, Kejun and Wang, Yi and Liu, Wenyang and Yap, Kim-Hui and Chau, Lap-Pui},
  journal={arXiv preprint arXiv:2309.13890},
  year={2023}
}