Code for my paper "SSDA-YOLO: Semi-supervised Domain Adaptive YOLO for Cross-Domain Object Detection", accepted by the journal Computer Vision and Image Understanding.

Domain adaptive object detection (DAOD) aims to alleviate transfer performance degradation caused by the cross-domain discrepancy. However, most existing DAOD methods are dominated by outdated and computationally intensive two-stage Faster R-CNN, which is not the first choice for industrial applications. In this paper, we propose a novel semi-supervised domain adaptive YOLO (SSDA-YOLO) based method to improve cross-domain detection performance by integrating the compact one-stage stronger detector YOLOv5 with domain adaptation. Specifically, we adapt the knowledge distillation framework with the Mean Teacher model to assist the student model in obtaining instance-level features of the unlabeled target domain. We also utilize the scene style transfer to cross-generate pseudo images in different domains for remedying image-level differences. In addition, an intuitive consistency loss is proposed to further align cross-domain predictions. We evaluate SSDA-YOLO on public benchmarks including PascalVOC, Clipart1k, Cityscapes, and Foggy Cityscapes. Moreover, to verify its generalization, we conduct experiments on yawning detection datasets collected from various real classrooms. The results show considerable improvements of our method in these DAOD tasks, which reveals both the effectiveness of proposed adaptive modules and the urgency of applying more advanced detectors in DAOD.

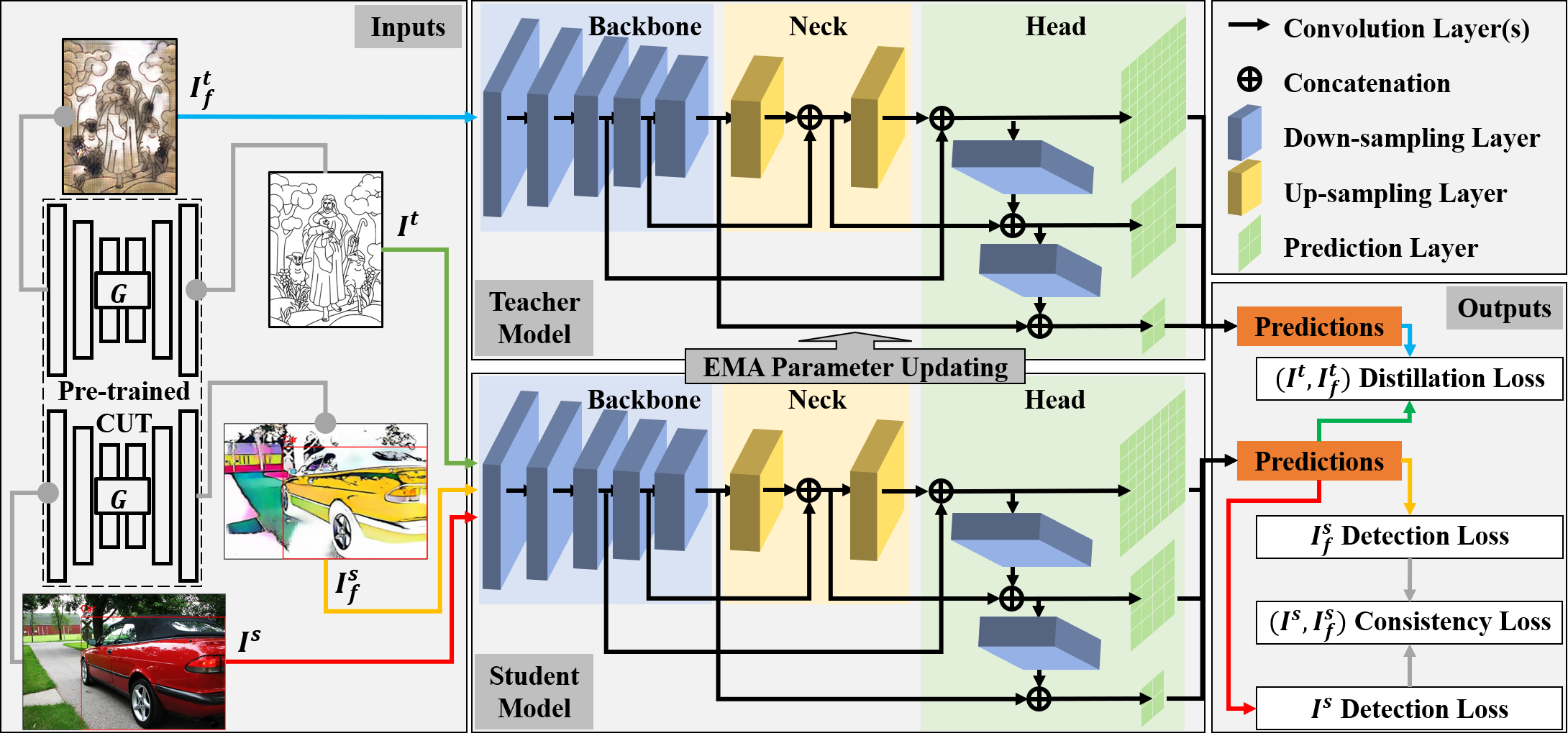

SSDA-YOLO is designed for domain adaptive cross-domain object detection, based on the knowledge distillation framework and the robust YOLOv5 detector. The network architecture is shown below.

So far, we have trained and evaluated it on two publicly available transfer tasks: PascalVOC → Clipart1k and CityScapes → CityScapes Foggy.
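The Mean Teacher framework mentioned in the abstract keeps the teacher model as an exponential moving average (EMA) of the student model. The sketch below illustrates that update on plain Python floats. In real training the values would be model tensors, and the `decay` value and the `ema_update` name are assumptions for illustration, not the settings or code of this repository.

```python
from typing import Dict


def ema_update(teacher: Dict[str, float],
               student: Dict[str, float],
               decay: float = 0.99) -> None:
    """Update the teacher in place: teacher <- decay * teacher + (1 - decay) * student."""
    for name, student_value in student.items():
        teacher[name] = decay * teacher[name] + (1.0 - decay) * student_value


# Toy parameters standing in for network weights (hypothetical values).
teacher = {"w": 1.0, "b": 0.0}
student = {"w": 0.0, "b": 1.0}

ema_update(teacher, student, decay=0.9)
print(teacher)  # teacher moves 10% of the way toward the student
```

A decay close to 1 makes the teacher change slowly, which is why its predictions on the unlabeled target domain tend to be more stable than the student's.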

Environment: Anaconda, Python3.8, PyTorch1.10.0(CUDA11.2), wandb
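Once the installation steps that follow are done, a quick check like the one below can confirm that the environment is close to the tested one. This is only a sketch: the helper names `base_version` and `matches_tested` are made up for this example, and the tested versions are copied from the line above.

```python
import sys

# Versions listed in the Environment line of this README.
TESTED_PYTHON = (3, 8)
TESTED_TORCH = "1.10.0"


def base_version(version: str) -> str:
    """Drop a local build suffix such as '+cu111' from a version string."""
    return version.split("+", 1)[0]


def matches_tested(installed: str, tested: str) -> bool:
    """Return True when the installed version equals the tested one, ignoring the suffix."""
    return base_version(installed) == tested


if __name__ == "__main__":
    print("Python:", sys.version_info[:2], "tested:", TESTED_PYTHON)
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed yet")
    else:
        ok = matches_tested(torch.__version__, TESTED_TORCH)
        print("PyTorch:", torch.__version__, "matches tested version:", ok)
        print("CUDA available:", torch.cuda.is_available())
```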

$ git clone https://github.com/hnuzhy/SSDA-YOLO.git

$ pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

# Code has only been evaluated on RTX3090+CUDA11.2+PyTorch1.10.0. You can follow the same config if needed.

# [method 1][install directly from the official website][may be slow]

$ pip3 install torch==1.10.0+cu111 torchvision==0.11.1+cu111 torchaudio==0.10.0+cu111 \
  -f https://download.pytorch.org/whl/cu111/torch_stable.html

# [method 2][download from the official website and install offline][faster]

$ wget https://download.pytorch.org/whl/cu111/torch-1.10.0%2Bcu111-cp38-cp38-linux_x86_64.whl

$ wget https://download.pytorch.org/whl/cu111/torchvision-0.11.1%2Bcu111-cp38-cp38-linux_x86_64.whl

$ wget https://download.pytorch.org/whl/cu111/torchaudio-0.10.0%2Bcu111-cp38-cp38-linux_x86_64.whl

$ pip3 install torch*.whl

- PascalVOC(2007+2012): Please follow the instructions in py-faster-rcnn to prepare VOC datasets. Or you can follow the scripts in file VOC.yaml to build VOC datasets.

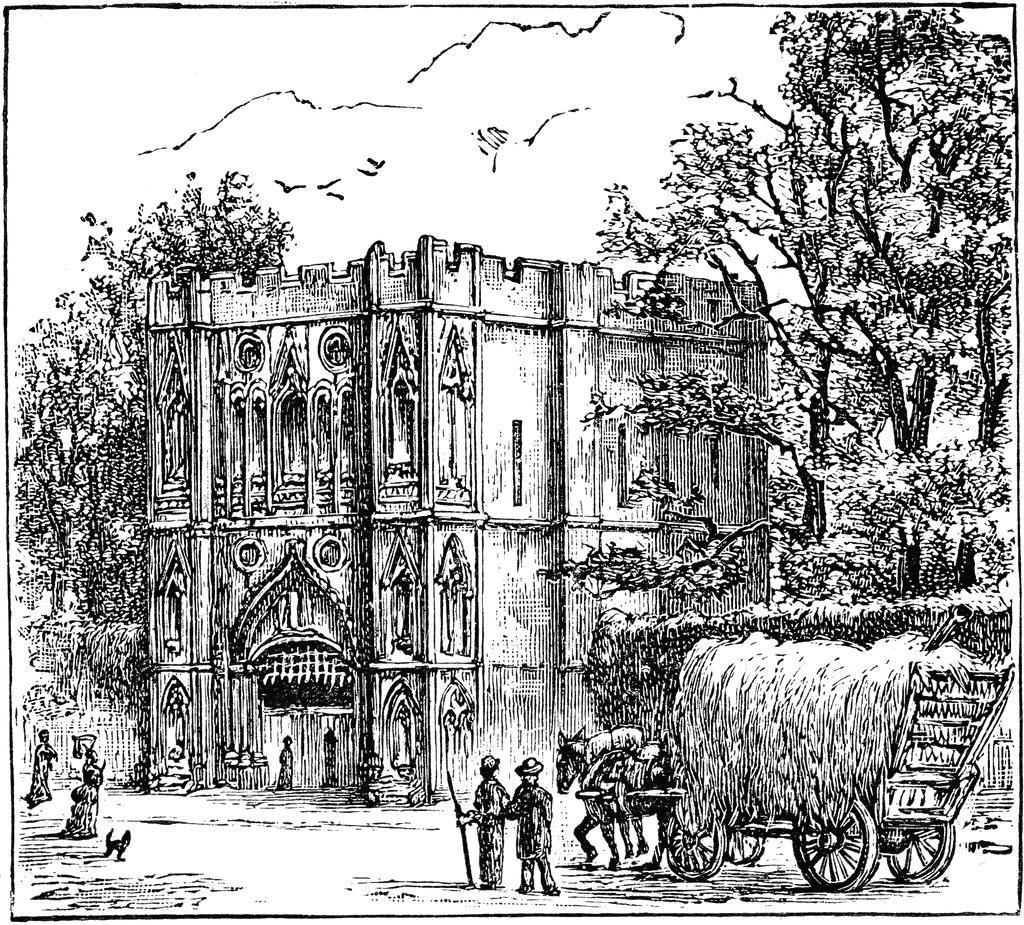

- Clipart1k: This dataset was originally released in cross-domain-detection. Dataset preparation instructions are also provided there, in Cross Domain Detection/datasets.

- VOC-style → Clipart-style: Images translated by CycleGAN are available on the website dt_clipart and can be fetched by running bash prepare_dt.sh.

- Clipart-style → VOC-style: We trained a new image style transfer model based on CUT (ECCV2020). The generated 1k VOC-style images have been uploaded to google drive.

- VOC format → YOLOv5 format: Convert the labels and folder layout from VOC format to YOLOv5 format by following the script convert_voc2clipart_yolo_label.py.

- CityScapes: Download from the official website. Images leftImg8bit_trainvaltest.zip (11GB) [md5]; Annotations gtFine_trainvaltest.zip (241MB) [md5].

- CityScapes Foggy: Download from the official website. Images leftImg8bit_trainval_foggyDBF.zip (20GB) [md5]; Annotations are the same as for CityScapes. Note that we chose the foggy images with beta=0.02 out of the three available choices (0.01, 0.02, 0.005).

- Normal-style → Foggy-style: We trained a new image style transfer model based on CUT (ECCV2020). The generated Foggy-style fake CityScapes images have been uploaded to google drive.

- Foggy-style → Normal-style: We trained a new image style transfer model based on CUT(ECCV2020). The generated Normal-style fake CityScapes Foggy images have been uploaded to google drive.

- VOC format → YOLOv5 format: Follow the scripts convert_CitySpaces_yolo_label.py and convert_CitySpacesFoggy_yolo_label.py.
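The core of the VOC → YOLOv5 label conversion named above is a change of box encoding: VOC stores corner pixels (xmin, ymin, xmax, ymax), while YOLOv5 label files store one line per object with a class id and a normalized center and size. The sketch below shows only that arithmetic. The function names are hypothetical, and the conversion scripts in this repository may differ in details such as folder handling or pixel offsets.

```python
from typing import Tuple


def voc_box_to_yolo(box: Tuple[float, float, float, float],
                    img_w: int, img_h: int) -> Tuple[float, float, float, float]:
    """Convert (xmin, ymin, xmax, ymax) in pixels to normalized (cx, cy, w, h)."""
    xmin, ymin, xmax, ymax = box
    cx = (xmin + xmax) / 2.0 / img_w
    cy = (ymin + ymax) / 2.0 / img_h
    w = (xmax - xmin) / img_w
    h = (ymax - ymin) / img_h
    return cx, cy, w, h


def yolo_label_line(class_id: int,
                    box: Tuple[float, float, float, float],
                    img_w: int, img_h: int) -> str:
    """Format one object as a YOLOv5 label line: 'class cx cy w h'."""
    cx, cy, w, h = voc_box_to_yolo(box, img_w, img_h)
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


# A 200x200 box in a 400x500 image (toy numbers).
print(yolo_label_line(0, (100, 50, 300, 250), 400, 500))
# prints: 0 0.500000 0.300000 0.500000 0.400000
```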

- PascalVOC → Clipart1k

| [source real] VOC | [source fake] VOC2Clipart | [target real] Clipart | [target fake] Clipart2VOC |
|---|---|---|---|

- CityScapes → CityScapes Foggy

| [source real] CityScapes | [source fake] CS2CSF | [target real] CityScapes Foggy | [target fake] CSF2CS |
|---|---|---|---|

The paths of the datasets involved in training are set in a yaml file. Five kinds of paths need to be set. Take pascalvoc0712_clipart1k_VOC.yaml as an example.

path: root path of datasets;

train_source_real: subpaths of real source images with labels for training.

e.g., PascalVOC(2007+2012) trainval set;

train_source_fake: subpaths of fake source images with labels for training.

e.g., Clipart-style fake PascalVOC(2007+2012) trainval set;

train_target_real: subpaths of real target images without labels for training.

e.g., Clipart1k train set;

train_target_fake: subpaths of fake target images without labels for training.

e.g., VOC-style fake Clipart1k train set;

test_target_real: subpaths of real target images with labels for testing.

e.g., Clipart1k test set;

nc: number of classes;

names: class names list.

We again take PascalVOC → Clipart1k as an example. The pretrained model yolov5l.pt can be downloaded from the official YOLOv5 website.

NOTE: The version of YOLOv5 that we used is 5.0; weights from other versions may not match. The pretrained model URLs for [yolov5s, yolov5m, yolov5l, yolov5x] are:

- https://github.com/ultralytics/yolov5/releases/download/v5.0/yolov5s.pt

- https://github.com/ultralytics/yolov5/releases/download/v5.0/yolov5m.pt

- https://github.com/ultralytics/yolov5/releases/download/v5.0/yolov5l.pt

- https://github.com/ultralytics/yolov5/releases/download/v5.0/yolov5x.pt
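Before launching training, it can help to check that the yaml file defines every field described above and that the weights come from the v5.0 release. The sketch below works on an already loaded dictionary (for example from `yaml.safe_load`, assuming PyYAML is installed). All function names and the example values are hypothetical. The key is spelled `train_target_fake` here, so adjust it if your yaml file spells it differently.

```python
from typing import Dict, List

# Field names taken from the yaml description in this README.
REQUIRED_KEYS = [
    "path",
    "train_source_real",
    "train_source_fake",
    "train_target_real",
    "train_target_fake",
    "test_target_real",
    "nc",
    "names",
]


def missing_keys(cfg: Dict) -> List[str]:
    """Return the required keys that are absent from the config."""
    return [key for key in REQUIRED_KEYS if key not in cfg]


def class_count_consistent(cfg: Dict) -> bool:
    """Check that nc equals the number of entries in names."""
    return cfg.get("nc") == len(cfg.get("names", []))


def v5_weight_url(model: str) -> str:
    """Build the v5.0 release URL for a model name such as 'yolov5l'."""
    return f"https://github.com/ultralytics/yolov5/releases/download/v5.0/{model}.pt"


# Toy config with made-up paths and only two classes, for illustration.
example_cfg = {
    "path": "/data/datasets",
    "train_source_real": ["source/real/train"],
    "train_source_fake": ["source/fake/train"],
    "train_target_real": ["target/real/train"],
    "train_target_fake": ["target/fake/train"],
    "test_target_real": ["target/real/test"],
    "nc": 2,
    "names": ["person", "car"],
}

print("missing keys:", missing_keys(example_cfg))
print("nc matches names:", class_count_consistent(example_cfg))
print("weights:", v5_weight_url("yolov5l"))
```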

python -m torch.distributed.launch --nproc_per_node 4 \
  ssda_yolov5_train.py \
  --weights weights/yolov5l.pt \
  --data yamls_sda/pascalvoc0712_clipart1k_VOC.yaml \
  --name voc2clipart_ssda_960_yolov5l \
  --img 960 --device 0,1,2,3 --batch-size 24 --epochs 200 \
  --lambda_weight 0.005 --consistency_loss --alpha_weight 2.0

If you want to resume an interrupted training run, follow the script below.

python -m torch.distributed.launch --nproc_per_node 4 --master_port 12345 \
  ssda_yolov5_train.py \
  --weights weights/yolov5l.pt \
  --data yamls_sda/pascalvoc0712_clipart1k_VOC.yaml \
  --name voc2clipart_ssda_960_yolov5l_R \
  --student_weight runs/train/voc2clipart_ssda_960_yolov5l/weights/best_student.pt \
  --teacher_weight runs/train/voc2clipart_ssda_960_yolov5l/weights/best_teacher.pt \
  --img 960 --device 0,1,2,3 --batch-size 24 --epochs 200 \
  --lambda_weight 0.005 --consistency_loss --alpha_weight 2.0

After finishing training on the PascalVOC → Clipart1k task, evaluate the student model with the test script.

python ssda_yolov5_test.py --data yamls_sda/pascalvoc0712_clipart1k_VOC.yaml \
  --weights runs/train/voc2clipart_ssda_960_yolov5l/weights/best_student.pt \
  --name voc2clipart_ssda_960_yolov5l \
  --img 960 --batch-size 4 --device 0

- YOLOv5 🚀 in PyTorch > ONNX > CoreML > TFLite

- UMT(A Pytorch Implementation of Unbiased Mean Teacher for Cross-domain Object Detection (CVPR 2021))

- CUT - Contrastive unpaired image-to-image translation, faster and lighter training than cyclegan (ECCV 2020, in PyTorch)

@article{zhou2022ssda-yolo,

title={SSDA-YOLO: Semi-supervised Domain Adaptive YOLO for Cross-Domain Object Detection},

author={Zhou, Huayi and Jiang, Fei and Lu, Hongtao},

journal={arXiv preprint arXiv:2211.02213},

year={2022}

}

or

@article{zhou2023ssda,

title={SSDA-YOLO: Semi-supervised domain adaptive YOLO for cross-domain object detection},

author={Zhou, Huayi and Jiang, Fei and Lu, Hongtao},

journal={Computer Vision and Image Understanding},

pages={103649},

year={2023},

publisher={Elsevier}

}