Official PyTorch Code for Paper: "Efficient Split-Mix Federated Learning for On-Demand and In-Situ Customization" Junyuan Hong, Haotao Wang, Zhangyang Wang and Jiayu Zhou, ICLR 2022. [paper], [code], [slides], [poster].

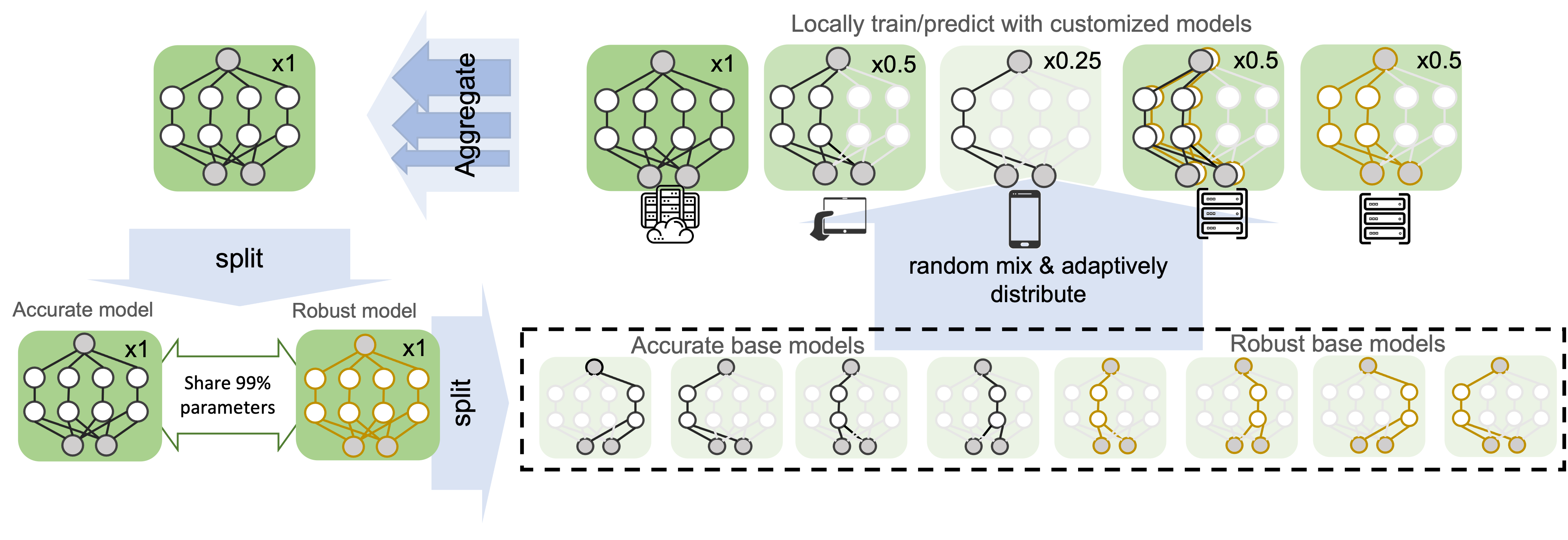

TL;DR: Split-Mix is an efficient and flexible federated learning algorithm that lets you customize model size and robustness at both training and test time.

Federated learning (FL) provides a distributed learning framework in which multiple participants learn collaboratively without sharing raw data. In many practical FL scenarios, participants have heterogeneous resources because of hardware disparities, and inference dynamics require quickly loading models of different sizes and levels of robustness. The heterogeneity and dynamics together impose significant challenges on existing FL approaches and thus greatly limit FL's applicability. In this paper, we propose a novel Split-Mix FL strategy for heterogeneous participants that, once training is done, provides in-situ customization of model sizes and robustness. Specifically, we achieve customization by learning a set of base sub-networks of different sizes and robustness levels, which are later aggregated on demand according to inference requirements. This split-mix strategy achieves customization with high efficiency in communication, storage, and inference. Extensive experiments demonstrate that our method provides better in-situ customization than existing heterogeneous-architecture FL methods.
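As a rough illustration of the on-demand aggregation described above, the sketch below averages the outputs of the first `k` base sub-networks to emulate a wider model. The helper names (`num_bases_for_width`, `mix_predict`), the base width ratio of 0.125, and the plain output averaging are assumptions made for illustration; they are not taken from this repository's code.

```python
from typing import Callable, List, Sequence

# A "base sub-network" is modelled here as any callable mapping an input
# vector to a list of output scores (hypothetical stand-in for a real model).
BaseNet = Callable[[Sequence[float]], List[float]]


def num_bases_for_width(width_ratio: float, base_ratio: float) -> int:
    """Number of base sub-networks needed for the requested relative width."""
    count = round(width_ratio / base_ratio)
    if count < 1 or abs(count * base_ratio - width_ratio) > 1e-9:
        raise ValueError("width_ratio must be a positive multiple of base_ratio")
    return count


def mix_predict(
    bases: Sequence[BaseNet],
    x: Sequence[float],
    width_ratio: float,
    base_ratio: float = 0.125,  # assumed base width, for illustration only
) -> List[float]:
    """Average the outputs of the first k base sub-networks."""
    k = num_bases_for_width(width_ratio, base_ratio)
    if k > len(bases):
        raise ValueError("not enough base sub-networks for this width")
    outputs = [net(x) for net in bases[:k]]
    # Element-wise mean across the k selected base outputs.
    return [sum(column) / k for column in zip(*outputs)]


# Toy base networks: each one just scales its input by a fixed factor.
toy_bases = [lambda x, s=s: [s * v for v in x] for s in (1.0, 3.0, 5.0, 7.0)]
```

With these toy bases, `mix_predict(toy_bases, [1.0, 2.0], width_ratio=0.25)` mixes the first two bases, and `width_ratio=0.5` mixes all four.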

Preparation:

- Package dependencies: Use `conda env create -f environment.yml` to create a conda env and activate it with `conda activate splitmix`. Major dependencies include `pytorch`, `torchvision`, `wandb`, `numpy`, `thop` for model size customization, and `advertorch` for adversarial training.
- Data: Set up your paths to data in `utils/config.py`. Refer to FedBN for details of the Digits and DomainNet datasets.
  - Cifar10: Auto download by `python -m utils.data_utils --download=Cifar10`.
  - Digits: Download the FedBN Digits zip file to `DATA_PATHS['Digits']` defined in `utils.config`. Unzip all files.
  - DomainNet: Download the FedBN DomainNet split file to `DATA_PATHS['DomainNetPathList']` defined in `utils.config`. Download Clipart, Infograph, Painting, Quickdraw, Real, and Sketch, and put them under the `DATA_PATHS['DomainNet']` directory. Unzip all files.
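For orientation, the path setup might look roughly like the sketch below. Only the key names `Digits`, `DomainNetPathList`, and `DomainNet` come from the instructions above; the root directory, the sub-directory layout, and the `missing_data_paths` helper are hypothetical, so check them against the actual `utils/config.py` before relying on them.

```python
import os
from typing import Dict, List

# Hypothetical root directory; replace with wherever you keep datasets.
DATA_ROOT = os.path.expanduser("~/data")

# Key names follow the README; the directory layout is an assumption.
DATA_PATHS: Dict[str, str] = {
    "Digits": os.path.join(DATA_ROOT, "Digits"),
    "DomainNetPathList": os.path.join(DATA_ROOT, "DomainNet", "path_list"),
    "DomainNet": os.path.join(DATA_ROOT, "DomainNet"),
}


def missing_data_paths(paths: Dict[str, str]) -> List[str]:
    """Return the names of entries whose directory does not exist yet."""
    return sorted(name for name, path in paths.items() if not os.path.isdir(path))
```

Calling `missing_data_paths(DATA_PATHS)` before training is a quick way to see which datasets still need to be downloaded and unzipped.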

Train and test:

- Customize model sizes: Set `--data` to be one of `Digits`, `DomainNet`, `Cifar10`.

      # SplitMix
      python fed_splitmix.py --data Digits --no_track_stat  # train
      python fed_splitmix.py --data Digits --no_track_stat --test --test_slim_ratio=0.25  # test
      # FedAvg
      python fedavg.py --data Digits --width_scale=0.125 --no_track_stat  # train
      python fedavg.py --data Digits --width_scale=0.125 --no_track_stat --test  # test
      # SHeteroFL
      python fed_hfl.py --data Digits --no_track_stat  # train
      python fed_hfl.py --data Digits --no_track_stat --test --test_slim_ratio=0.25  # test

- Customize robustness and model sizes (during training and testing):

      # SplitMix + DAT
      python fed_splitmix.py --adv_lmbd=0.5
      python fed_splitmix.py --adv_lmbd=0.5 --test --test_noise=LinfPGD --test_adv_lmbd=0.1  # robust test
      python fed_splitmix.py --adv_lmbd=0.5 --test --test_noise=none --test_adv_lmbd=0.1  # standard test
      # individual FedAvg + AT
      python fedavg.py --adv_lmbd=0.5
      python fed_splitmix.py --adv_lmbd=0.5 --test --test_noise=LinfPGD  # robust test
      python fed_splitmix.py --adv_lmbd=0.5 --test --test_noise=none  # standard test

For `fed_splitmix`, you may use `--verbose=1` to print more information and `--val_ens_only` to speed up evaluation by only evaluating the widest models.
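To evaluate one trained Split-Mix model at several widths, the test command can be generated in a loop. The sketch below only builds the argument lists from flags shown above. The helper names are hypothetical, and the ratios other than 0.25 are assumed values chosen for illustration.

```python
import shlex
from typing import List, Sequence

ALLOWED_DATA = ("Digits", "DomainNet", "Cifar10")


def splitmix_test_command(data: str, slim_ratio: float) -> List[str]:
    """Build the argv for one Split-Mix test run at the given width ratio."""
    if data not in ALLOWED_DATA:
        raise ValueError(f"--data must be one of {ALLOWED_DATA}")
    return [
        "python", "fed_splitmix.py",
        "--data", data,
        "--no_track_stat",
        "--test",
        f"--test_slim_ratio={slim_ratio}",
    ]


def splitmix_test_sweep(data: str, slim_ratios: Sequence[float]) -> List[List[str]]:
    """One test command per requested width ratio."""
    return [splitmix_test_command(data, ratio) for ratio in slim_ratios]


if __name__ == "__main__":
    # Ratios other than 0.25 are assumptions for illustration.
    for cmd in splitmix_test_sweep("Digits", [0.125, 0.25, 0.5, 1.0]):
        print(shlex.join(cmd))
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root to actually launch the evaluation.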

We provide detailed parameter settings in `sweeps`. See `sweeps/Slimmable.md` for experiments on customizing model sizes, and `sweeps/AT.md` for customizing robustness and for joint customization of robustness and model sizes. Example use of a sweep:

    ~> wandb sweep sweeps/fed_niid/digits.yaml
    wandb: Creating sweep from: sweeps/fed_niid/digits.yaml
    wandb: Created sweep with ID: <ID>
    wandb: View sweep at: https://wandb.ai/<unique ID>
    wandb: Run sweep agent with: wandb agent <unique ID>
    ~> export CUDA_VISIBLE_DEVICES=0  # choose desired GPU
    ~> wandb agent <unique ID>

Pre-trained models will be shared upon request.

    @inproceedings{hong2022efficient,
      title={Efficient Split-Mix Federated Learning for On-Demand and In-Situ Customization},
      author={Hong, Junyuan and Wang, Haotao and Wang, Zhangyang and Zhou, Jiayu},
      booktitle={ICLR},
      year={2022}
    }

This material is based in part upon work supported by the National Institute on Aging 1RF1AG072449, Office of Naval Research N00014-20-1-2382, and National Science Foundation under Grant IIS-1749940. Z. W. is supported by the U.S. Army Research Laboratory Cooperative Research Agreement W911NF17-2-0196 (IOBT REIGN).