This repository covers two use cases.

-

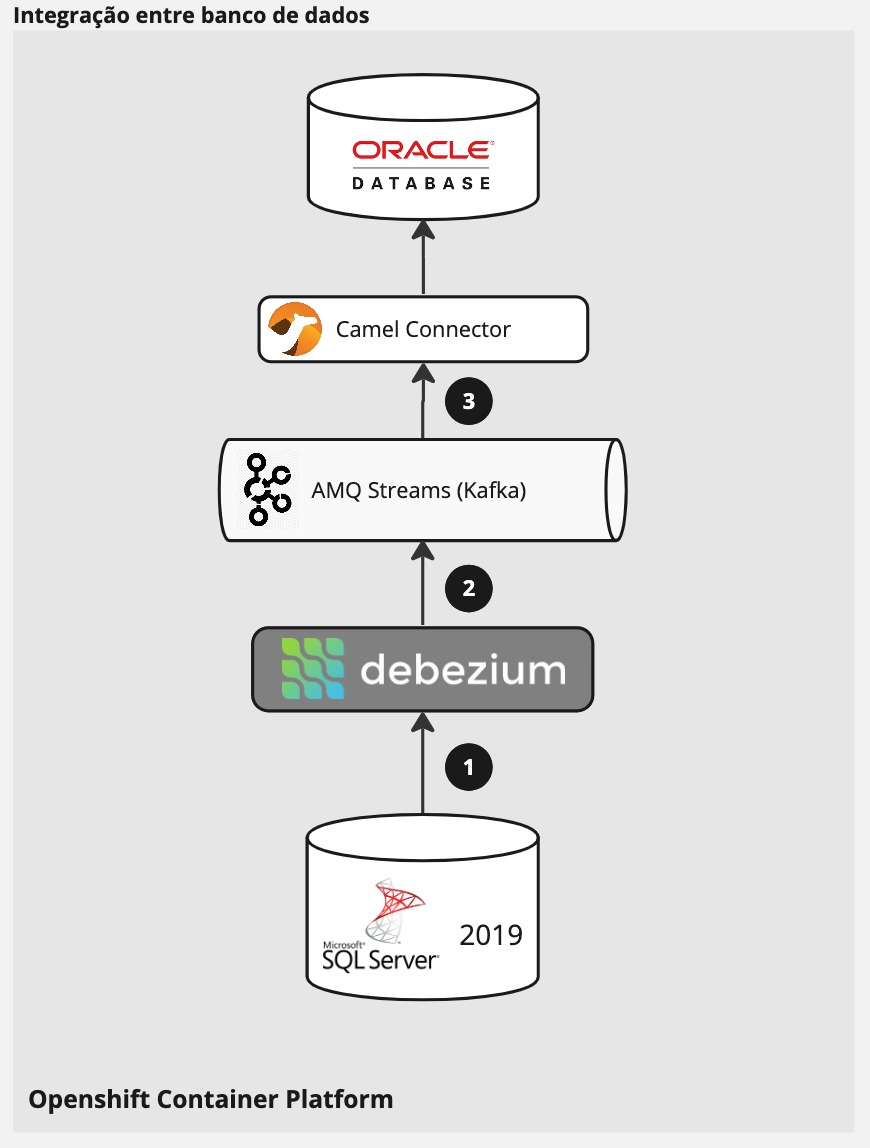

Data migration between SQL Server 2019 and Oracle Database using Chanage Data Capture with Debezium and Strimzi

-

Data transformation XML/JSON with Camel and the ability to communicate with REST and SOAP webservices.

- SQL Server runs with the SQL Server Agent enabled. Debezium runs as a connector in Kafka Connect, connects to SQL Server, and listens to all events on the `Orders` table.

- Debezium captures all events from SQL Server and sends them to Kafka in JSON format, storing them in the `mssql-server-linux.dbo.Orders` topic.

- A Camel application consumes events from the `mssql-server-linux.dbo.Orders` topic and inserts them into the Oracle database.
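The last step above can be sketched in a few lines. This is only an illustration, not the repository's Camel route: it assumes the standard Debezium envelope (`op`, `before`, `after`, optionally wrapped in `payload`), and the target table `ORDERS` and the columns `id` and `status` are hypothetical names chosen for the example.

```python
import json

# Hypothetical target table; the real Oracle schema is not shown in this README.
TARGET_TABLE = "ORDERS"


def to_statement(raw_event):
    """Map one Debezium change event to (sql, bind_values), or None to skip it."""
    if not raw_event:
        return None  # tombstone records carry no value
    event = json.loads(raw_event)
    # With schemas enabled, Debezium wraps the change in "payload".
    payload = event.get("payload", event)
    if payload.get("op") in ("c", "r"):  # c = create, r = snapshot read
        row = payload["after"]
        columns = list(row)
        placeholders = ", ".join(f":{i + 1}" for i in range(len(columns)))
        sql = f"INSERT INTO {TARGET_TABLE} ({', '.join(columns)}) VALUES ({placeholders})"
        return sql, [row[c] for c in columns]
    return None  # updates and deletes are ignored in this sketch


sample = json.dumps({
    "payload": {
        "op": "c",
        "before": None,
        "after": {"id": 1, "status": "NEW"},  # invented columns
    }
})
print(to_statement(sample))
```

Returning a statement with bind values, rather than a formatted string, keeps the sketch safe against SQL injection; a real consumer would also need to handle updates and deletes.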

-

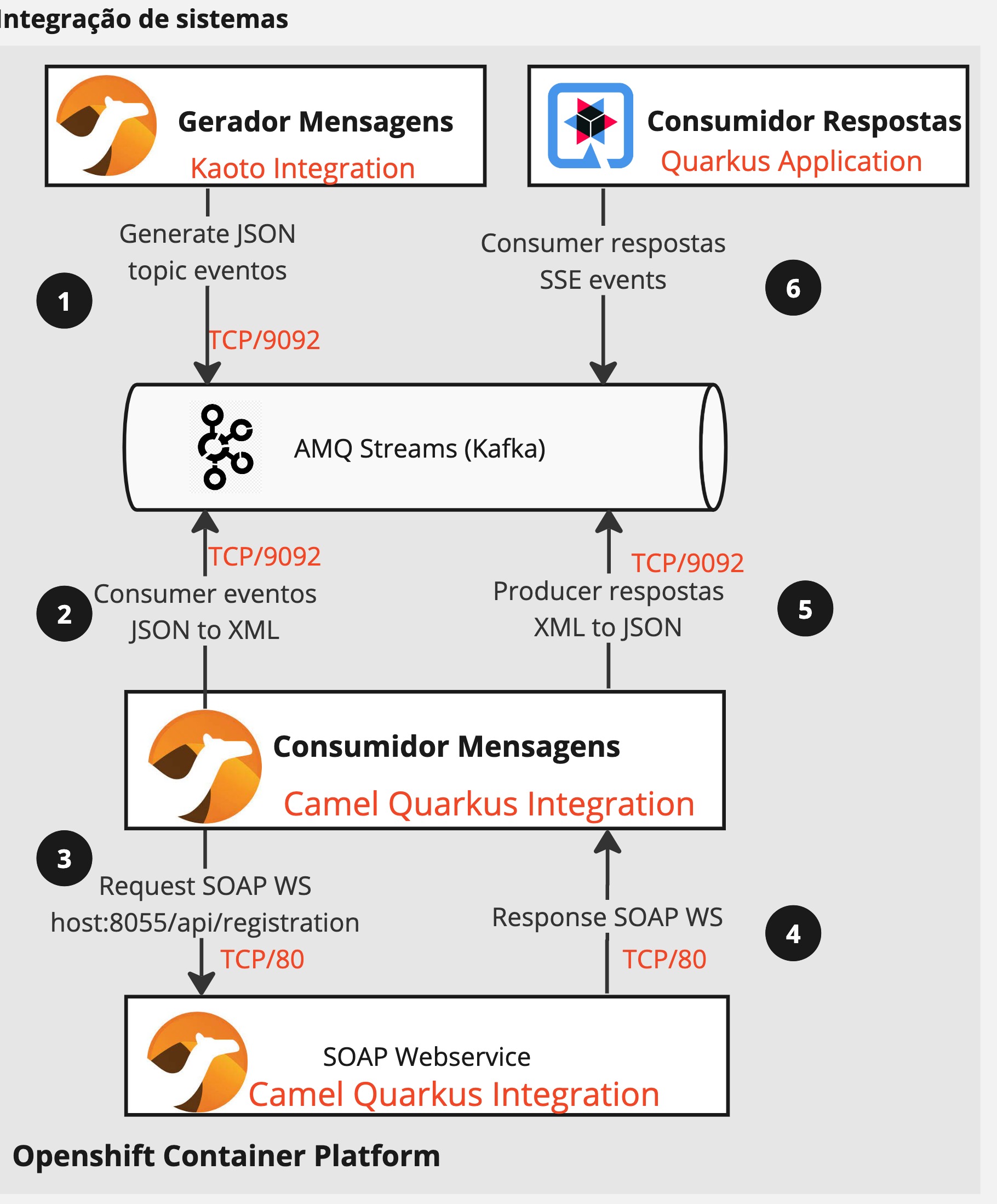

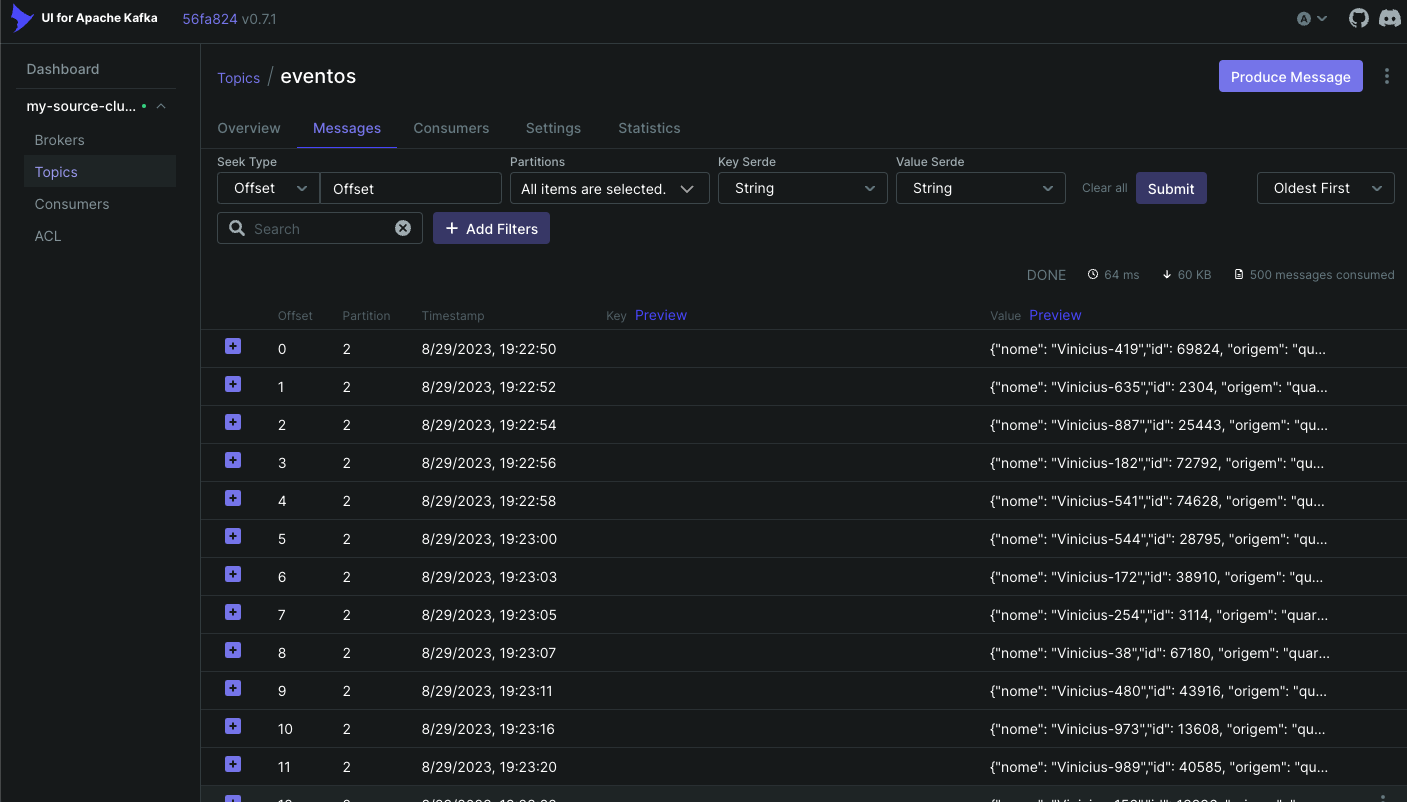

Generate JSON messages in each 1 seg and send through the

eventostopic inKafka. -

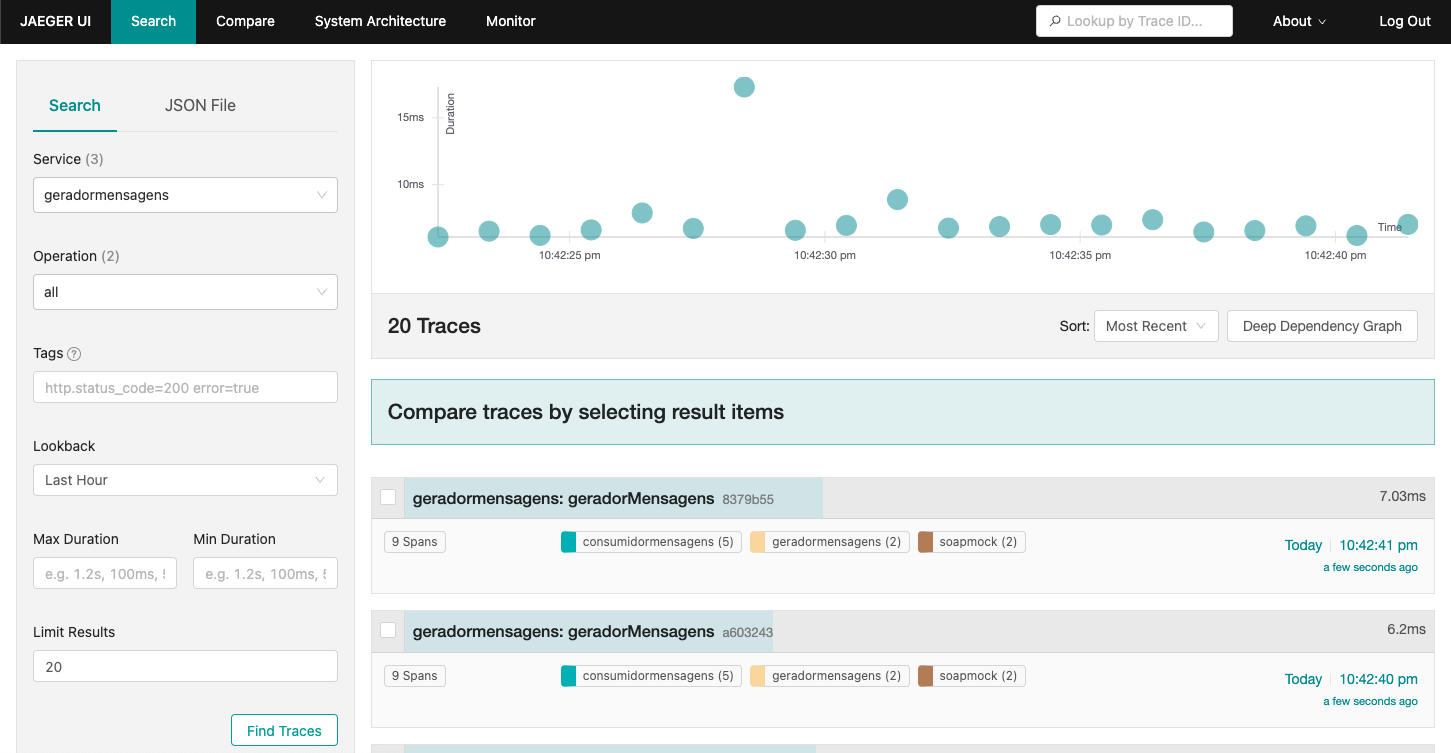

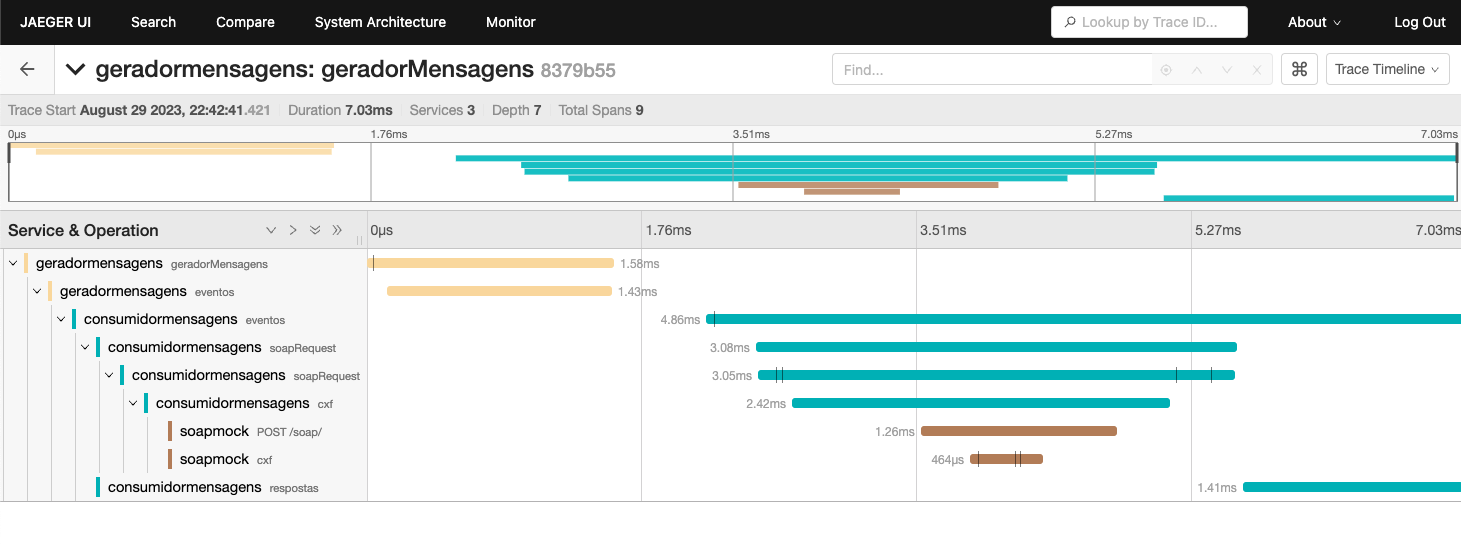

- Steps 2 and 5: Consume JSON messages from the `eventos` topic, transform them to XML, and send a SOAP request. Receive the response from the SOAP service, transform it back to JSON, and send it to the `respostas` topic in Kafka.

- Steps 3 and 4: SOAP web service built with Camel Quarkus.

- Step 6: A Quarkus application consumes messages from the `respostas` topic and shows them on a web page that receives live events through SSE (server-sent events).
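The JSON to XML conversion and back can be illustrated with the Python standard library. This is a rough sketch, not the Camel route used here: it assumes flat JSON objects, omits the SOAP envelope, and the root tag `evento` and the sample fields are invented for the example.

```python
import json
import xml.etree.ElementTree as ET


def json_to_xml(message: str, root_tag: str = "evento") -> str:
    """Turn a flat JSON object into an XML element with one child per key."""
    root = ET.Element(root_tag)
    for key, value in json.loads(message).items():
        ET.SubElement(root, key).text = str(value)
    return ET.tostring(root, encoding="unicode")


def xml_to_json(document: str) -> str:
    """Turn the children of an XML element back into a flat JSON object."""
    root = ET.fromstring(document)
    return json.dumps({child.tag: child.text for child in root})


xml_request = json_to_xml('{"id": "42", "descricao": "teste"}')
print(xml_request)
print(xml_to_json(xml_request))
```

Note that XML text is untyped, so numbers come back as strings after the round trip; a real route would need a schema or explicit type mapping.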

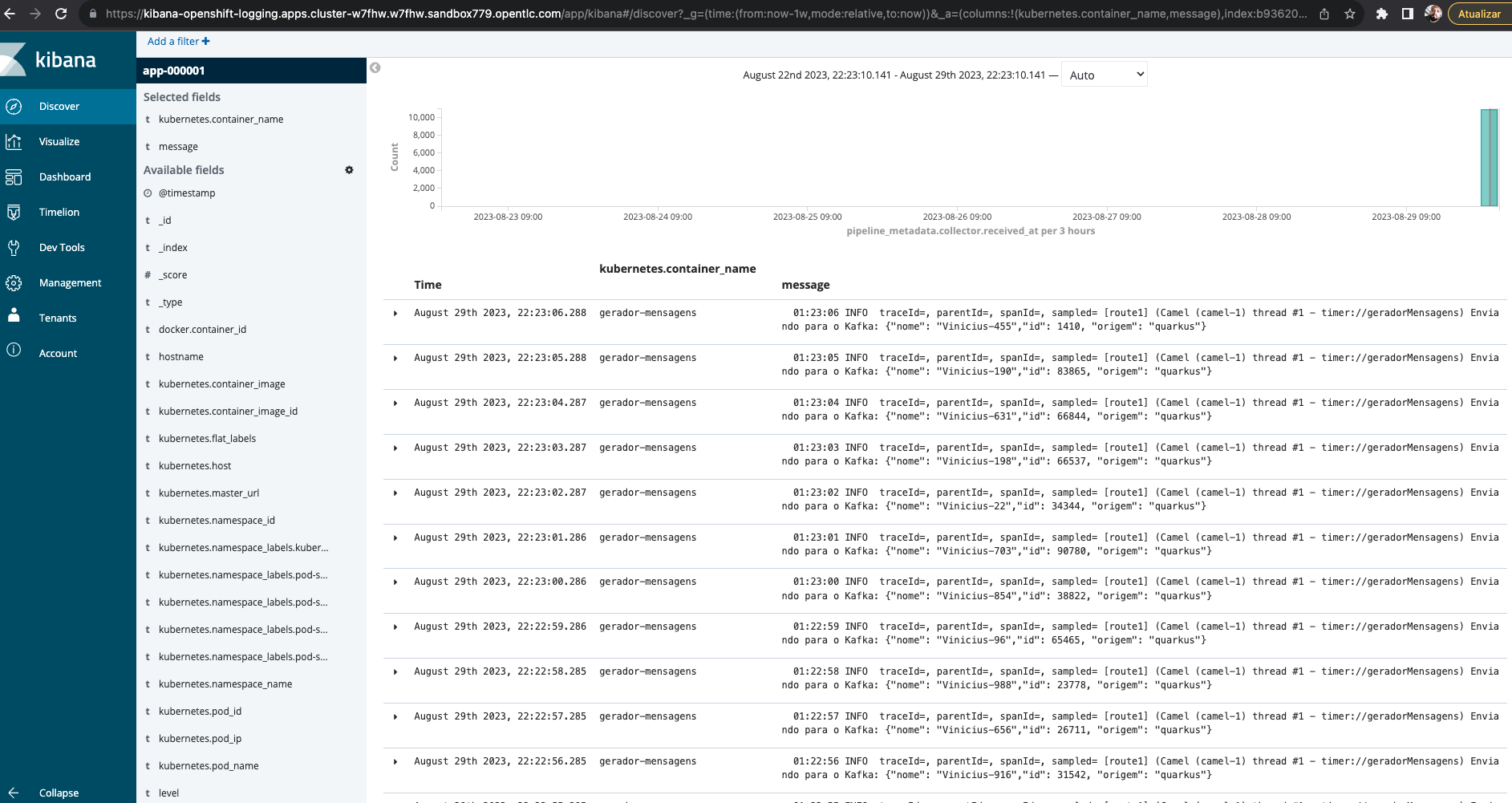
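For readers unfamiliar with SSE, the wire format the web page receives is plain text: each event is a group of `field: value` lines ended by a blank line. The helper below is a minimal sketch of that framing, not code from the Quarkus application; the event name `resposta` is a made-up example.

```python
from typing import Optional


def sse_frame(data: str, event: Optional[str] = None) -> str:
    """Format one server-sent event as it appears on the wire."""
    lines = []
    if event:
        lines.append(f"event: {event}")
    # Each line of the payload needs its own "data:" field.
    lines.extend(f"data: {line}" for line in data.split("\n"))
    # A blank line terminates the event.
    return "\n".join(lines) + "\n\n"


print(sse_frame('{"id": 1}', event="resposta"), end="")
```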

To see the OpenShift log aggregation feature, install it by following the documentation.

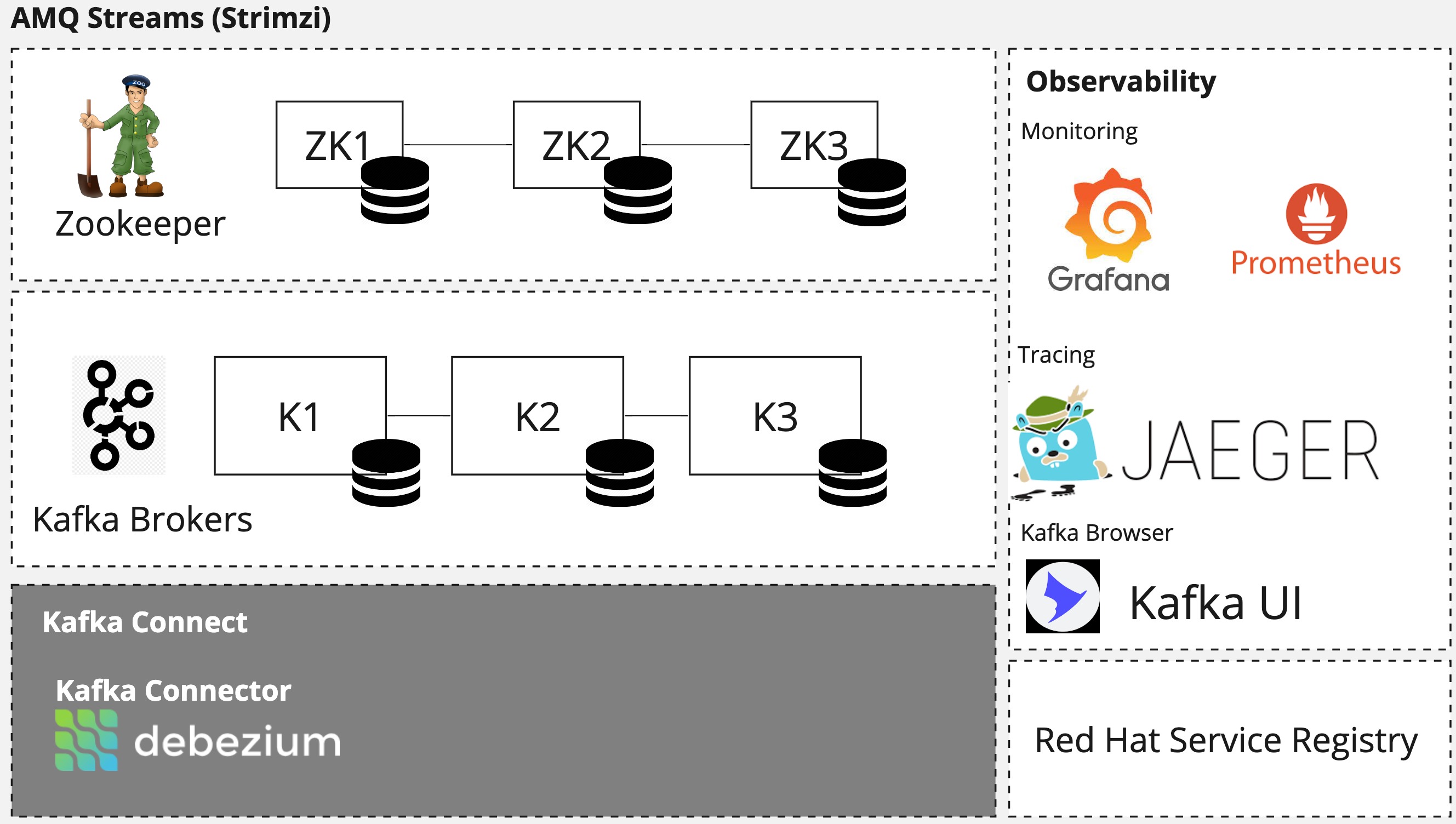

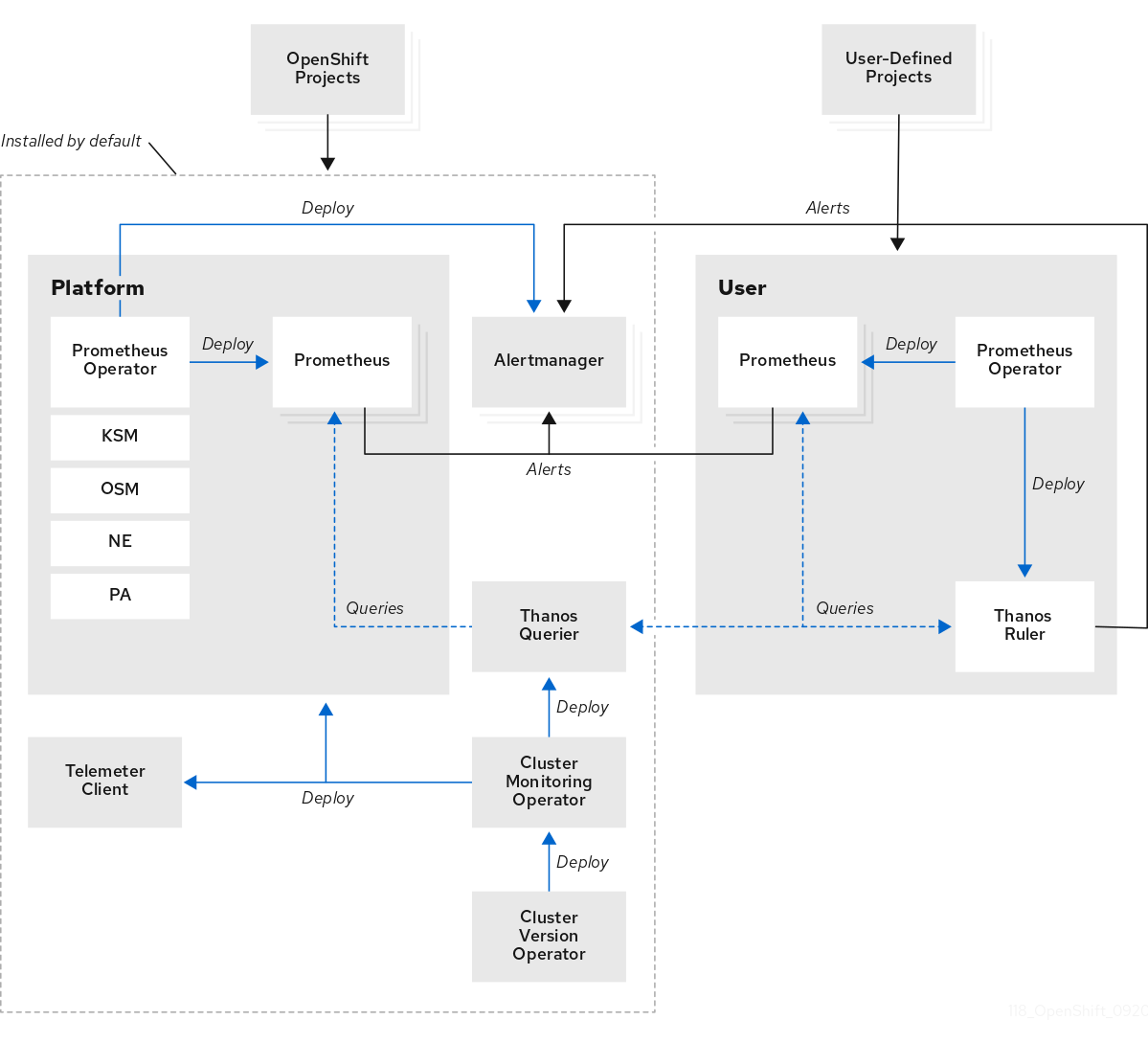

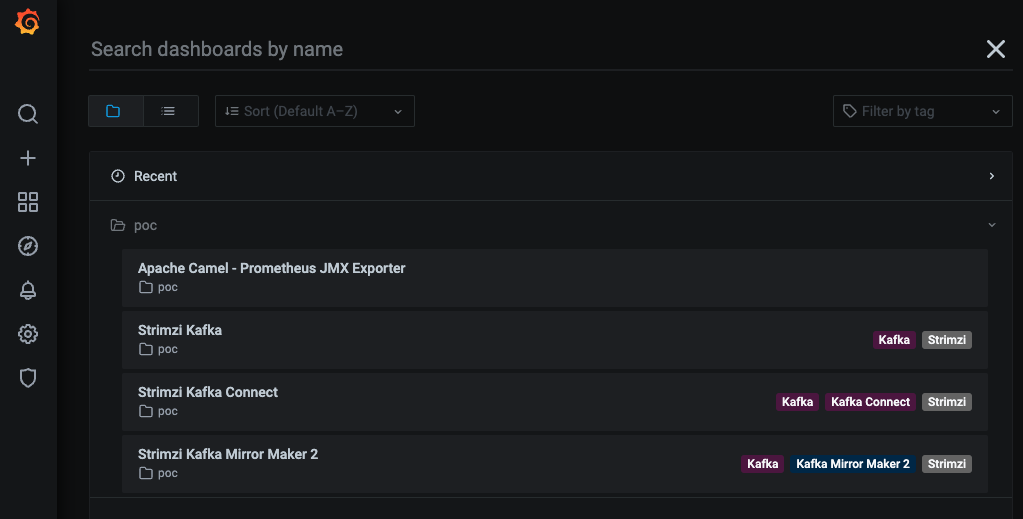

In this PoC we use the OpenShift monitoring stack and a custom Grafana instance so that we can use our own dashboards.

Custom dashboards deployed

-

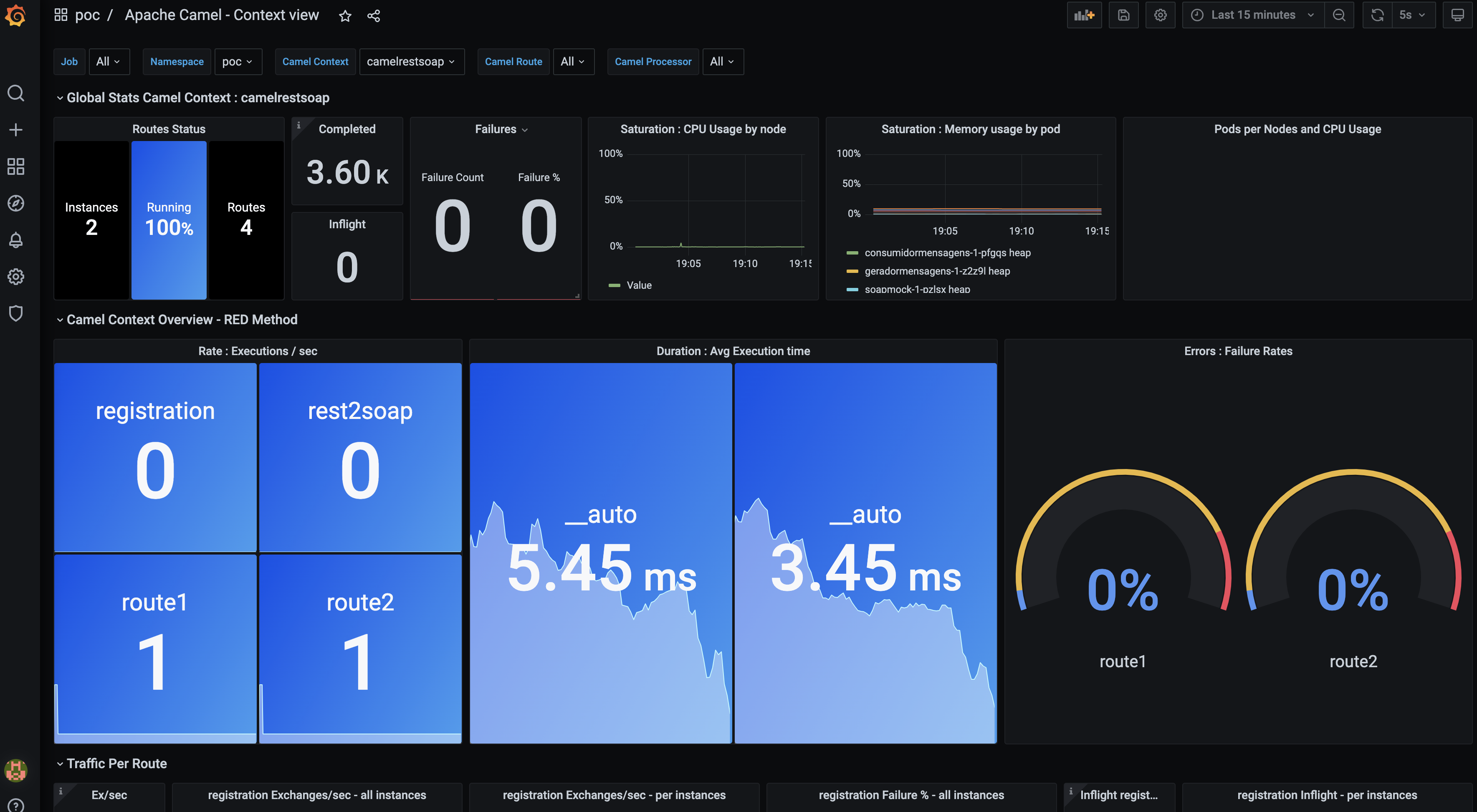

Apache Camel

-

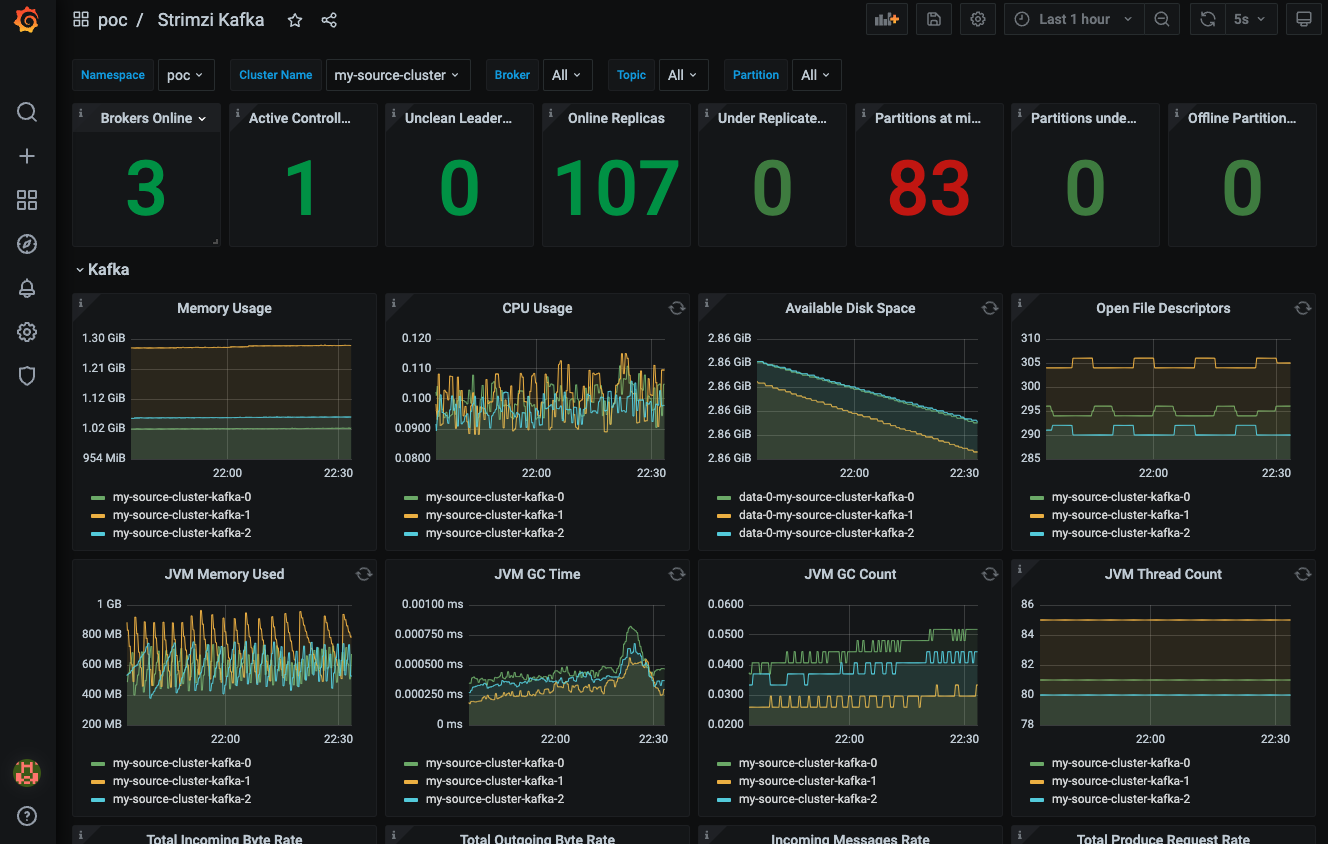

Strimzi Kafka

-

Strimzi Kafka Connect

-

Strimgi Kafka Mirror Maker 2

Sample dashboard of Kafka

Sample dashboard of Camel

We also use Kafka UI to browse Kafka-specific components.

The entire proof of concept runs inside OpenShift.

Note: I removed the Operator installation logic from the playbooks because it kept breaking: whenever a new version of an Operator was released, the playbooks started to fail, which was annoying.

-

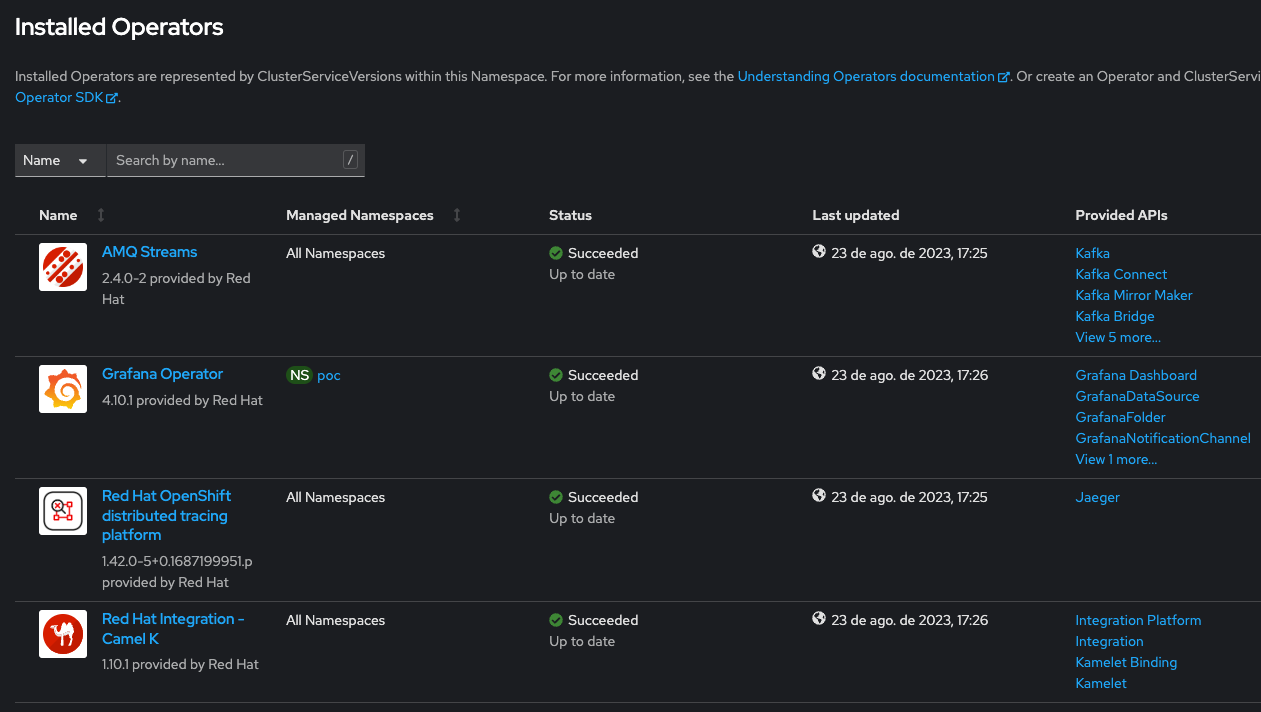

Red Hat Openshift Platform

-

AMQ Streams Operator

-

AMQ Grafana Operator

-

Red Hat Camel K Operator

-

Red Hat OpenShift distributed tracing platform

| Service | Ports |
|---|---|
| consumidor-respostas | 8080/TCP |
| debezium-connect-cluster-connect-api | 8083/TCP |
| grafana-alert | 9094/TCP |
| grafana-operator-controller-manager-metrics-service | 8443/TCP |
| grafana-service | 3000/TCP |
| jaeger-agent | 5775/UDP, 5778/TCP, 6831/UDP, 6832/UDP |
| jaeger-collector | 9411/TCP, 14250/TCP, 14267/TCP, 14268/TCP, 4317/TCP, 4318/TCP |
| jaeger-collector-headless | 9411/TCP, 14250/TCP, 14267/TCP, 14268/TCP, 4317/TCP, 4318/TCP |
| jaeger-query | 443/TCP, 16685/TCP |
| kafka-ui | 8080/TCP |
| mssql-server-linux | 1433/TCP |
| my-apache-php-app | 80/TCP |
| my-source-cluster-kafka-0 | 9094/TCP |
| my-source-cluster-kafka-1 | 9094/TCP |
| my-source-cluster-kafka-2 | 9094/TCP |
| my-source-cluster-kafka-bootstrap | 9091/TCP, 9092/TCP, 9093/TCP |
| my-source-cluster-kafka-brokers | 9090/TCP, 9091/TCP, 9092/TCP, 9093/TCP |
| my-source-cluster-kafka-external-bootstrap | 9094/TCP |
| my-source-cluster-zookeeper-client | 2181/TCP |
| my-source-cluster-zookeeper-nodes | 2181/TCP, 2888/TCP, 3888/TCP |
| oracle-19c-orapoc | 1521/TCP, 5500/TCP |
| soapmock | 80/TCP |

| Parameter | Example Value | Definition |
|---|---|---|
| tkn | sha256~vFanQbthlPKfsaldJT3bdLXIyEkd7ypO_XPygY1DNtQ | Access token for a user with cluster-admin privileges |
| server | | OpenShift Cluster API URL |
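As an illustration of how these two parameters fit together, the sketch below builds an `oc login` command from them. This assumes the values end up in an `oc login --token=... --server=...` call, which this README does not confirm; the helper name and the placeholder values are hypothetical.

```python
def oc_login_command(tkn: str, server: str) -> list:
    """Build the argument list for `oc login` from the two parameters."""
    if not tkn or not server:
        raise ValueError("both tkn and server are required")
    return ["oc", "login", f"--token={tkn}", f"--server={server}"]


# Placeholder values, not real credentials or a real cluster.
print(oc_login_command("sha256~example", "https://api.cluster.example.com:6443"))
```

Building the command as a list rather than a single string avoids shell quoting problems if it is later passed to `subprocess.run`.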