This is the source code that accompanies my blog post Convolutional neural networks on the iPhone with VGGNet.

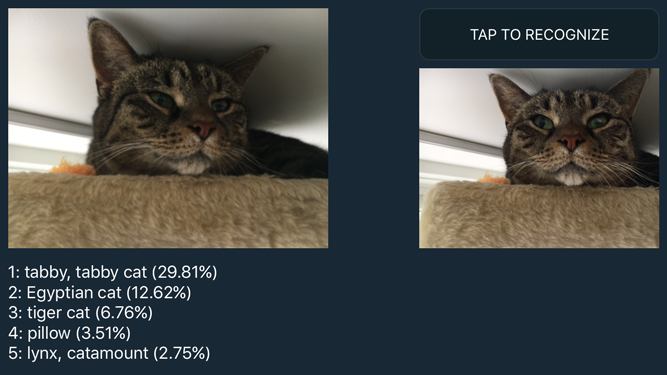

This project shows how to implement the 16-layer VGGNet convolutional neural network for basic image recognition on the iPhone.

VGGNet competed in the ImageNet ILSVRC-2014 image classification challenge and took second place. For more details about VGGNet, see the project page and the paper:

Very Deep Convolutional Networks for Large-Scale Image Recognition

K. Simonyan, A. Zisserman

arXiv:1409.1556

The iPhone app uses the VGGNet version from the Caffe Model Zoo.

You need an iPhone or iPad that supports Metal, running iOS 10 or later. (I have only tested the app on an iPhone 6s.)

NOTE: The source code won't run as-is. You need to do the following before you can build the Xcode project:

0 (optional) - If you don't want to set up a local environment for the steps below, you can download the converted file from here.

1 - Download the prototxt file.

2 - Download the caffemodel file.

3 - Run the conversion script from Terminal (requires Python 3 and the numpy and google.protobuf packages):

$ python3 convert_vggnet.py VGG_ILSVRC_16_layers_deploy.prototxt VGG_ILSVRC_16_layers.caffemodel ./output

This generates the file ./output/parameters.data. It will take a few minutes! You need to download the caffemodel file and convert it yourself because parameters.data is a 500+ MB file, which is too large to host in a GitHub repository.

4 - Copy parameters.data into the VGGNet-iOS/VGGNet folder.

5 - Now you can build the app in Xcode (version 8.0 or later). You can only build for a device; the simulator isn't supported (it gives compiler errors).
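
To see why parameters.data ends up at 500+ MB, here is a rough back-of-the-envelope sketch in Python. It counts the weights and biases of the 16-layer VGGNet (configuration D in the paper) and multiplies by 4 bytes per value. The 4-byte figure is my assumption that the parameters are stored as raw 32-bit floats; the names `CONV_LAYERS`, `FC_LAYERS`, and `count_parameters` are made up for this illustration and are not part of convert_vggnet.py.

```python
# Convolution layers of the 16-layer VGGNet, all with 3x3 kernels:
# (input channels, output channels).
CONV_LAYERS = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]

# Fully connected layers: (inputs, outputs).
# The first one reads the final 7x7x512 feature map.
FC_LAYERS = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]

# Assumption: every parameter is stored as a 32-bit float.
BYTES_PER_VALUE = 4


def count_parameters():
    """Return the total number of weights and biases in the network."""
    conv = sum(3 * 3 * cin * cout + cout for cin, cout in CONV_LAYERS)
    fc = sum(nin * nout + nout for nin, nout in FC_LAYERS)
    return conv + fc


total_params = count_parameters()
size_bytes = total_params * BYTES_PER_VALUE

print(f"{total_params:,} parameters")                     # 138,357,544 parameters
print(f"about {size_bytes / 1e6:.0f} MB as 32-bit floats")  # about 553 MB
```

If the file you generated is much smaller than this estimate, the conversion probably did not finish, or the storage assumption above does not hold for your version of the script.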

The VGGNet+Metal source code is licensed under the terms of the MIT license.