energy_py supports reinforcement learning for energy systems. This library provides agents and environments, as well as tools to run experiments.

energy_py is built and maintained by Adam Green - adam.green@adgefficiency.com.

energy_py provides a simple and familiar low-level API for agent and environment initialization and interactions:

    import energy_py

    env = energy_py.make_env(env_id='battery')

    agent = energy_py.make_agent(
        agent_id='dqn',
        env=env,
        total_steps=1000000
    )

    observation = env.reset()
    done = False

    # run a single episode
    while not done:
        action = agent.act(observation)
        next_observation, reward, done, info = env.step(action)
        training_info = agent.learn()
        observation = next_observation

The most common access point for a user will be to run an experiment. An experiment is run by passing the experiment name and run name as arguments:

$ cd energy_py/experiments

$ python experiment.py example dqn

Results for this run are then available at:

$ cd energy_py/experiments/results/example/dqn

$ ls

agent_args.txt

debug.log

env_args.txt

env_histories

ep_rewards.csv

expt.ini

info.log

runs.ini

The progress of an experiment can be watched with TensorBoard:

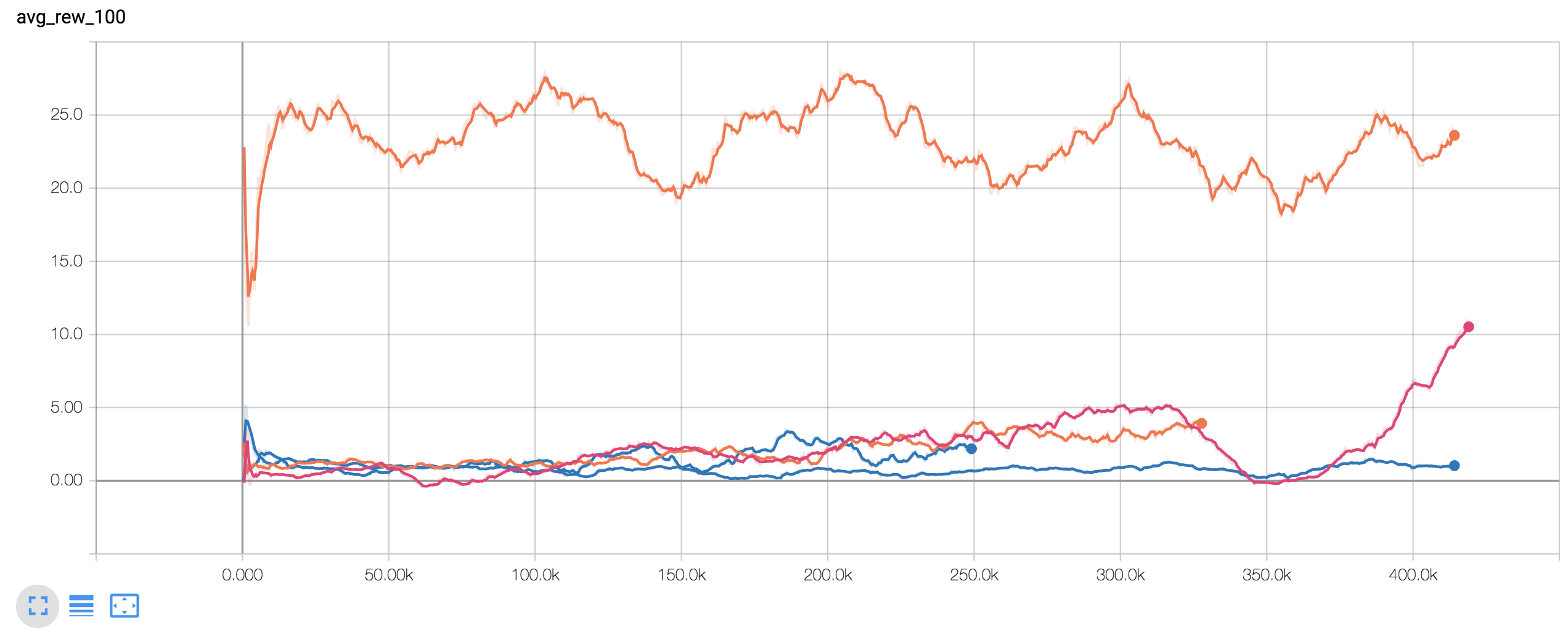

$ tensorboard --logdir='./energy_py/experiments/results'

The main dependencies of energy_py are TensorFlow, numpy, pandas and matplotlib.

To install energy_py using an Anaconda virtual environment:

$ conda create --name energy_py python=3.5.2

$ activate energy_py (windows) OR source activate energy_py (unix)

$ git clone https://github.com/ADGEfficiency/energy_py.git

$ cd energy_py

$ python setup.py install (using package)

or

$ python setup.py develop (developing package)

$ pip install --ignore-installed -r requirements.txt

The aim of energy_py is to provide

- high quality implementations of agents suited to solving energy problems

- multiple energy environments

- tools to run experiments

The design philosophies of energy_py are:

- simplicity

- iterative design

- simple class hierarchy structure (maximum of two levels)

- utilize Python standard library (deques, namedtuples etc)

- utilize TensorFlow & TensorBoard

- provide sensible defaults for args
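As an illustration of leaning on the standard library, an experience replay memory can be sketched with a `deque` and a `namedtuple`. This is a minimal sketch and not energy_py's own implementation: the names `Experience`, `ReplayMemory`, `remember` and `sample` are hypothetical.

```python
import random
from collections import deque, namedtuple

# Hypothetical names for illustration; not energy_py's actual classes.
Experience = namedtuple(
    'Experience',
    ['observation', 'action', 'reward', 'next_observation', 'done']
)


class ReplayMemory:
    """Fixed-size buffer that discards the oldest experience when full."""

    def __init__(self, size=10000):
        # a deque with maxlen drops items from the left once it is full
        self.experiences = deque(maxlen=size)

    def remember(self, *args):
        self.experiences.append(Experience(*args))

    def sample(self, batch_size):
        # uniform sampling without replacement
        return random.sample(list(self.experiences), batch_size)

    def __len__(self):
        return len(self.experiences)


memory = ReplayMemory(size=3)
for step in range(5):
    memory.remember(step, 0, 1.0, step + 1, False)

batch = memory.sample(2)
print(len(memory))  # 3, the two oldest experiences were discarded
```

The `size` default is only a placeholder, in the spirit of providing sensible defaults for args.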

energy_py was heavily influenced by OpenAI baselines and gym.

energy_py is currently focused on a high quality implementation of DQN (Mnih et al., 2015), along with naive and heuristic agents for comparison.

DQN was chosen because:

- it is an established algorithm,

- many examples of DQN implementations are available on GitHub,

- it is highly extensible (DDQN, prioritized experience replay, dueling, n-step returns; see Rainbow for a summary),

- most energy environments have low dimensional action spaces, which makes discretization tractable. Discretization still loses the shape of the action space, but the number of discrete actions stays reasonable,

- it can learn off policy.
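The tractability point can be made concrete with a small sketch. Discretizing each of `d` action dimensions into `n` values gives `n ** d` discrete actions, so the count stays small only when `d` is small. The function name `discretize` and the two-dimensional action space below are assumptions for illustration, not energy_py's API.

```python
import itertools


def discretize(lows, highs, num_per_dim):
    """Return every combination of evenly spaced values per action dimension."""
    grids = [
        [low + i * (high - low) / (num_per_dim - 1) for i in range(num_per_dim)]
        for low, high in zip(lows, highs)
    ]
    return list(itertools.product(*grids))


# Hypothetical action space: two dimensions, each between 0 and 2
actions = discretize(lows=[0.0, 0.0], highs=[2.0, 2.0], num_per_dim=5)

print(len(actions))  # 25 discrete actions (5 ** 2)
print(actions[1])    # (0.0, 0.5)
```

A DQN agent would then pick an index into `actions`. The network treats the indices as unordered choices, which is the loss of action space shape noted in the list.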

Naive agents include an agent that randomly samples the action space, independent of observation. Heuristic agents are usually custom built for a specific environment. Examples of heuristic agents include actions based on the time of day or on the values of a forecast.
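A minimal sketch of both kinds of agent follows. The class names, the dictionary observation with an `'hour'` key, the sign convention (positive means charge) and the charging hours are all assumptions made for illustration. They are not taken from energy_py.

```python
import random


class RandomAgent:
    """Naive agent: samples uniformly from the actions, ignoring the observation."""

    def __init__(self, actions, seed=None):
        self.actions = list(actions)
        self.rng = random.Random(seed)

    def act(self, observation):
        return self.rng.choice(self.actions)


class TimeOfDayAgent:
    """Heuristic agent: charge overnight, discharge during the evening peak."""

    def __init__(self, charge_hours=range(0, 6), discharge_hours=range(17, 21), rate=1.0):
        self.charge_hours = charge_hours
        self.discharge_hours = discharge_hours
        self.rate = rate

    def act(self, observation):
        hour = observation['hour']  # assumed observation layout
        if hour in self.charge_hours:
            return self.rate
        if hour in self.discharge_hours:
            return -self.rate
        return 0.0


random_agent = RandomAgent(actions=[-1.0, 0.0, 1.0], seed=42)
heuristic_agent = TimeOfDayAgent()

print(heuristic_agent.act({'hour': 3}))   # 1.0 (charge)
print(heuristic_agent.act({'hour': 18}))  # -1.0 (discharge)
print(heuristic_agent.act({'hour': 12}))  # 0.0 (idle)
```

Both classes expose the same `act(observation)` method as the agent in the API example, so either can serve as a baseline for a learning agent in the same loop.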

energy_py provides custom built models of energy environments and wraps around OpenAI gym. Support for basic gym models is included to allow debugging of agents with familiar environments.

Beware that gym deals with random seeds for action spaces in particular ways. The v0 versions of the gym Atari environments ignore the selected action 25% of the time and repeat the previous action (to make the environment more stochastic). The v4 versions remove this randomness.
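The repeat-action behaviour described above can be mimicked with a small wrapper, which helps when reasoning about how it affects an agent. This is a sketch and not gym's implementation. `StickyActions` and `EchoEnv` are hypothetical names, and the toy environment only reports which action it received.

```python
import random


class EchoEnv:
    """Toy environment that reports the action it actually received."""

    def reset(self):
        return 0

    def step(self, action):
        return 0, 0.0, False, {'applied_action': action}


class StickyActions:
    """With probability repeat_prob, ignore the new action and repeat the last one."""

    def __init__(self, env, repeat_prob=0.25, seed=None):
        self.env = env
        self.repeat_prob = repeat_prob
        self.rng = random.Random(seed)
        self.previous_action = None

    def reset(self):
        self.previous_action = None
        return self.env.reset()

    def step(self, action):
        repeat = self.rng.random() < self.repeat_prob
        if self.previous_action is not None and repeat:
            action = self.previous_action
        # remember the action that was actually applied
        self.previous_action = action
        return self.env.step(action)


always_sticky = StickyActions(EchoEnv(), repeat_prob=1.0)
always_sticky.reset()
always_sticky.step(1)
_, _, _, sticky_info = always_sticky.step(2)

never_sticky = StickyActions(EchoEnv(), repeat_prob=0.0)
never_sticky.reset()
never_sticky.step(1)
_, _, _, plain_info = never_sticky.step(2)

print(sticky_info['applied_action'])  # 1, the previous action was repeated
print(plain_info['applied_action'])   # 2, the selected action was applied
```

Setting `repeat_prob=0.0` recovers the deterministic behaviour, which is usually the easier setting for debugging an agent.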

CartPole-v0

Classic cartpole balancing - gym - energy_py

Pendulum-v0

Inverted pendulum swingup - gym - energy_py

MountainCar-v0

An exploration problem - gym - energy_py

Electric battery storage

Dispatch of a battery arbitraging wholesale prices - energy_py

The battery is defined by a capacity and a maximum rate to charge and discharge, with a round trip efficiency applied on storage.
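A minimal sketch of such a battery model is shown below. It is not energy_py's environment: the class name, the units (MW, MWh, price per MWh), the half-hour step and the default values are all assumptions chosen for illustration. Losses are applied when energy is stored, as described above.

```python
class Battery:
    """Hypothetical battery; rate > 0 charges and rate < 0 discharges."""

    def __init__(self, capacity=4.0, power_rating=2.0, round_trip_eff=0.9, step_hours=0.5):
        self.capacity = capacity              # MWh
        self.power_rating = power_rating      # MW, same limit both directions
        self.round_trip_eff = round_trip_eff  # fraction of imported energy stored
        self.step_hours = step_hours          # length of one step in hours
        self.charge = 0.0                     # MWh currently stored

    def step(self, rate, price):
        """Apply a charge or discharge rate (MW) and return the reward."""
        # clip the requested rate to the power rating
        rate = max(-self.power_rating, min(self.power_rating, rate))

        if rate >= 0:
            # energy bought from the grid; only part of it ends up stored
            headroom = self.capacity - self.charge
            grid_energy = min(rate * self.step_hours, headroom / self.round_trip_eff)
            self.charge += grid_energy * self.round_trip_eff
            return -grid_energy * price

        # energy sold to the grid, limited by what is stored
        export = min(-rate * self.step_hours, self.charge)
        self.charge -= export
        return export * price


battery = Battery()
cost = battery.step(rate=5.0, price=10.0)      # request is clipped to 2 MW
revenue = battery.step(rate=-2.0, price=50.0)  # only 0.9 MWh can be exported
print(cost, revenue, battery.charge)
```

An arbitrage agent earns a positive total reward only when the price spread between charging and discharging is large enough to cover the efficiency loss.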

Demand side flexibility

Dispatch of price responsive demand side flexibility - energy_py

The flexible asset is a chiller system, with an action space of the return temperature setpoint.