This Terraform module is used to provision an opinionated GCP environment for the Polarstomps web application. It deploys all resources in us-west1 by default.

It will deploy:

- A VPC with a public and private subnet

- A VPC route that allows egress to the Internet

- Firewall rules to enable ingress from a "home" IP address

- A Cloud Router and a Cloud NAT so that the private GKE nodes can reach the Internet

- A GKE Autopilot cluster with private nodes and a public control plane endpoint (locked down to that same "home" IP address)
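The Cloud Router and Cloud NAT pair is what gives the private nodes outbound Internet access. The module declares them as `google_compute_router.router` and `google_compute_router_nat.nat`. The sketch below shows roughly what such a pair looks like. It is not copied from this module's `main.tf`: the resource names and the `module.vpc.network_name` reference are assumptions, while the NAT variables come from the Inputs table.

```hcl
# Sketch only: names and the module.vpc reference are assumptions,
# not this module's actual main.tf.
resource "google_compute_router" "router" {
  name    = "infra-${var.env}-router" # hypothetical naming scheme
  project = var.project_id
  region  = var.region
  network = module.vpc.network_name
}

resource "google_compute_router_nat" "nat" {
  name    = "infra-${var.env}-nat" # hypothetical naming scheme
  project = var.project_id
  router  = google_compute_router.router.name
  region  = google_compute_router.router.region

  # With the defaults from the Inputs table, "AUTO_ONLY" lets GCP allocate
  # the NAT IPs and "ALL_SUBNETWORKS_ALL_IP_RANGES" NATs every subnet and
  # secondary range in the region, which covers the private GKE nodes and pods.
  nat_ip_allocate_option             = var.nat_ip_allocate_option
  source_subnetwork_ip_ranges_to_nat = var.nat_source_subnetwork_ip_ranges_to_nat
}
```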

The philosophy of this module is that it enforces a set of hypothetical standards for an organization, i.e., the Polarstomps organization. It assumes that environments are relatively homogeneous, so it enforces a set of defaults to minimize the need for wrapper modules that exist only to set inputs.

However, it also gives you an escape hatch for customizing the underlying environment. For the average instantiation, the only real inputs are an environment string (dev/stage/prod) and the subnet CIDRs, plus your project ID and "home" IP address.

This module defines a logical environment as "one GKE cluster in one VPC." This is fairly simplistic, but so are the needs of the Polarstomps web application. If you need multiple clusters per environment (say, a cluster dedicated solely to processing HIPAA or PII data), then this module isn't for you: it would need to be refactored so that the GKE cluster is its own module. For now, keeping the GKE cluster and the VPC in a single module helps with code maintainability.

Autopilot is pretty neat. It is now the recommended way of running Kubernetes on GCP and, honestly, simplifies administration quite a bit. Because Autopilot manages the nodes for you, this module doesn't manage any node pools.

Autopilot works out which worker nodes (and GPUs) your application needs by looking at each workload's resource requests and nodeSelector, and then provisions the right type of node for it.
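As a hypothetical illustration, here is a GPU workload written with the Terraform kubernetes provider's `kubernetes_pod` resource instead of a raw manifest. It assumes a kubernetes provider already configured against the cluster and that NVIDIA T4 GPUs are available to Autopilot in your region. The pod name, container image, and GPU type are placeholders. Autopilot reads the `cloud.google.com/gke-accelerator` nodeSelector and the `nvidia.com/gpu` limit to decide what kind of node to create.

```hcl
# Hypothetical workload; assumes a configured kubernetes provider.
resource "kubernetes_pod" "gpu_example" {
  metadata {
    name      = "gpu-example" # placeholder name
    namespace = "default"
  }

  spec {
    restart_policy = "Never"

    # Autopilot uses this selector to provision a node with a T4 attached.
    node_selector = {
      "cloud.google.com/gke-accelerator" = "nvidia-tesla-t4"
    }

    container {
      name    = "cuda"
      image   = "nvidia/cuda:12.2.0-base-ubuntu22.04" # placeholder image
      command = ["nvidia-smi"]

      resources {
        # The GPU count (and any CPU/memory requests) also feed node sizing.
        limits = {
          "nvidia.com/gpu" = "1"
        }
      }
    }
  }
}
```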

If you need a standard GKE cluster then this module is not for you.

Some use cases for a standard GKE cluster:

- Your one true passion in life is to manage node pools for a Kubernetes cluster

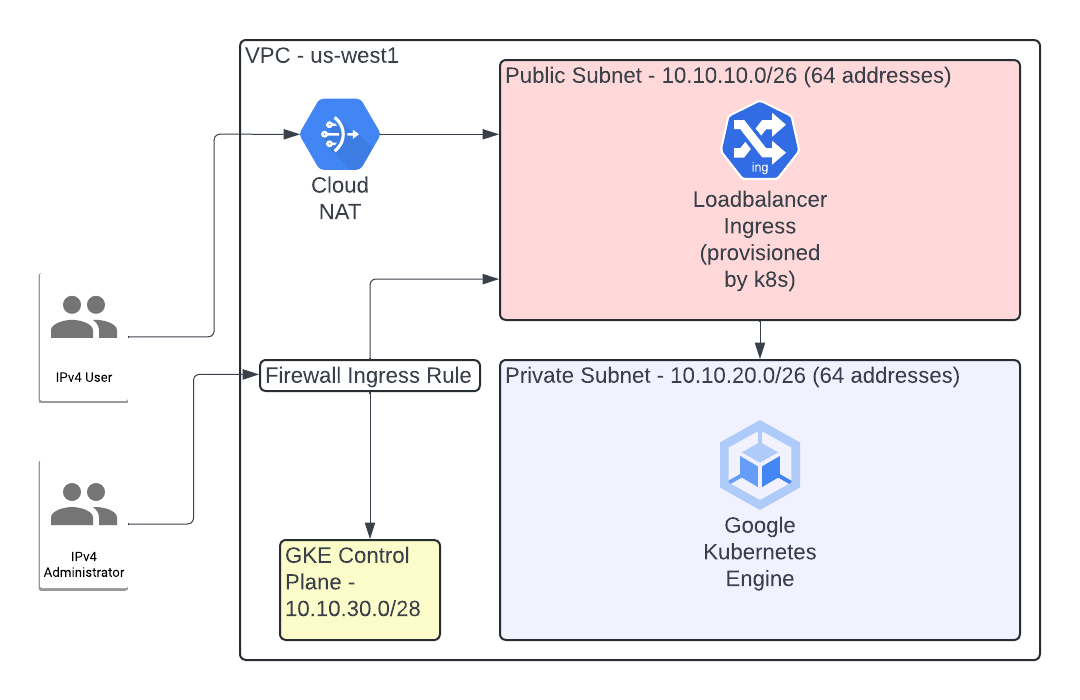

By default, this module creates the below diagram:

You can populate your ~/.kube/config with auth details for the provisioned cluster by running `gcloud container clusters get-credentials $(terragrunt output -raw gke_cluster_name) --region us-west1`. If you customize the region, you'll need to change the shell command to match.
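If you would rather talk to the cluster from Terraform than through a kubeconfig, a common pattern is to point the kubernetes provider at the cluster using an access token from the `google_client_config` data source. This sketch assumes the `module "prod"` instantiation from the basic usage example, that the `gke_cluster_endpoint` output is a bare IP or hostname without a scheme, and that the cluster lives in us-west1. Because the module doesn't output the cluster CA certificate, the sketch looks it up with the `google_container_cluster` data source.

```hcl
# Short-lived OAuth token for whoever is running Terraform.
data "google_client_config" "default" {}

# The module doesn't output the CA certificate, so look the cluster up.
data "google_container_cluster" "this" {
  name     = module.prod.gke_cluster_name
  location = "us-west1" # change this if you customized var.region
  project  = "REPLACE_ME"
}

provider "kubernetes" {
  # Assumes gke_cluster_endpoint has no "https://" prefix.
  host                   = "https://${module.prod.gke_cluster_endpoint}"
  token                  = data.google_client_config.default.access_token
  cluster_ca_certificate = base64decode(data.google_container_cluster.this.master_auth[0].cluster_ca_certificate)
}
```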

After you set up your kubeconfig, you can bootstrap ArgoCD like so:

```shell
kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml
```

Then, to log in to it:

```shell
kubectl config set-context --current --namespace argocd
argocd login --core
argocd admin initial-password -n argocd
```

Then port forward into the ArgoCD UI with `kubectl port-forward svc/argocd-server -n argocd 8080:443`, and log in as `admin` with the password printed by the commands above to get to the good stuff.
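If you'd rather have Terraform own the ArgoCD install than run kubectl by hand, the upstream `argo-cd` Helm chart is a rough equivalent. This is a sketch that assumes a helm provider already configured against the cluster (host, token, and CA certificate). The release name and namespace are choices, not anything this module defines. Consider pinning a chart `version`, and check the resulting Service name before port forwarding, since it depends on the chart's naming.

```hcl
resource "helm_release" "argocd" {
  name             = "argocd"
  repository       = "https://argoproj.github.io/argo-helm"
  chart            = "argo-cd"
  namespace        = "argocd"
  create_namespace = true # stands in for `kubectl create namespace argocd`
}
```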

The polarstomps-infra-gcp repository has a real-world example of this module: it's used there to deploy the dev and prod environments.

The most basic usage looks like this:

```hcl
module "prod" {
  source = "github.com/howdoicomputer/tf-polarstomps-gcp-environment?ref=v1"

  env        = "prod"
  project_id = "REPLACE_ME"

  vpc_public_subnet_cidr  = "10.10.40.0/26"
  vpc_private_subnet_cidr = "10.10.50.0/26"
  gke_control_plane_cidr  = "10.10.60.0/28"

  # Required. Must match the private subnet name, which defaults to
  # infra-<env>-private-01.
  gke_node_subnet_name = "infra-prod-private-01"

  # The IP address that is allowed to contact the GKE control plane.
  #
  # It's also allowed to SSH into the public subnet by default.
  my_ip_address = "1.1.1.1/32"
}
```

Note that this module makes copious use of the coalesce and coalescelist functions, trading slightly obscured defaults for DRY module instantiation.

Generally, this means that any list of objects has its default defined in the locals block of main.tf rather than as a variable default in variables.tf.
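A simplified sketch of that pattern follows. It is hypothetical: the local names are illustrative, `vpc_routes` is typed as `list(map(string))` for brevity (the real input is a `list(object(...))`), and the default route just mirrors the `vpc_routes` override example shown later.

```hcl
variable "env" {
  type = string
}

variable "vpc_private_subnet_name" {
  type    = string
  default = ""
}

# Simplified type; the real input is a list(object(...)).
variable "vpc_routes" {
  type    = list(map(string))
  default = []
}

locals {
  # coalesce() returns the first argument that isn't null or "", so an
  # unset name falls back to infra-<env>-private-01.
  vpc_private_subnet_name = coalesce(var.vpc_private_subnet_name, "infra-${var.env}-private-01")

  # Hypothetical default routes, kept here instead of in variables.tf.
  default_vpc_routes = [
    {
      name              = "egress"
      description       = "egress"
      destination_range = "0.0.0.0/0"
      tags              = "egress-inet"
      next_hop_internet = "true"
    }
  ]

  # coalescelist() returns the first non-empty list: the caller's routes if
  # any were passed, otherwise the defaults above.
  vpc_routes = coalescelist(var.vpc_routes, local.default_vpc_routes)
}
```

One reason defaults like these live in locals: a variable's default must be a literal value and can't reference other variables, so a default that depends on `var.env` has to be built somewhere else.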

The examples below show how to override those defaults. Each one is trimmed down to the inputs that matter for that override.

To drop the SSH rule for your home IP address and define your own firewall rules:

```hcl
module "environment" {
  source = "github.com/howdoicomputer/tf-polarstomps-gcp-environment?ref=v1"

  env                     = "prod"
  vpc_public_subnet_cidr  = "10.10.40.0/26"
  vpc_private_subnet_cidr = "10.10.50.0/26"
  gke_control_plane_cidr  = "10.10.60.0/28"
  # Other required inputs (e.g. project_id) omitted for brevity.

  # Disable ingress on 22 for a home address.
  #
  # If this is set to true and my_ip_address is defined, then a 22 ingress
  # rule is created for the public subnet CIDR.
  vpc_enable_my_ip_ingress_rule = false

  # We still need to set an IP address for the public control plane even if
  # we're not using that same address to SSH into the public subnet.
  my_ip_address = "1.1.1.1/32"

  # Define your own rules. You rebel you.
  vpc_firewall_rules = [
    {
      name               = "foobar"
      description        = "foobar"
      direction          = "INGRESS"
      priority           = 0
      destination_ranges = ["0.0.0.0/26"]
      source_ranges      = ["1.1.1.1/32"]
      allow = [{
        protocol = "tcp"
        ports    = ["22"]
      }]
    }
  ]
}
```

To replace the default subnets with your own:

```hcl
module "environment" {
  source = "github.com/howdoicomputer/tf-polarstomps-gcp-environment?ref=v1"

  env = "prod"
  # Other required inputs (e.g. project_id) omitted for brevity.

  # The node subnet name and the private subnet name need to match.
  #
  # Or, really, the subnet name that you want the GKE worker nodes to be
  # created in needs to match the private subnet name. The worker pools
  # have to live somewhere and you get to choose where. You caregiver you.
  gke_node_subnet_name = "private"

  vpc_subnets = [
    {
      subnet_name   = "public"
      subnet_ip     = "10.0.10.0/26"
      subnet_region = "us-west1"
      description   = "foobar"
    },
    {
      subnet_name   = "private"
      subnet_ip     = "10.0.20.0/26"
      subnet_region = "us-west1"
      description   = "foobar"
    }
  ]
}
```

To replace the default VPC routes:

```hcl
module "environment" {
  source = "github.com/howdoicomputer/tf-polarstomps-gcp-environment?ref=v1"

  env = "prod"
  # Other required inputs (e.g. project_id) omitted for brevity.

  vpc_routes = [{
    name              = "egress"
    description       = "egress"
    destination_range = "0.0.0.0/0"
    tags              = "egress-inet"
    next_hop_internet = "true"
  }]
}
```

No Terraform module is perfect. Terraform, as a language, is fraught with peril. If you need help setting this up, don't hesitate to ping me.

This module uses Terratest. The example configurations it tests are in /examples. To run the tests:

```shell
export TF_VAR_MY_IP_ADDRESS=foobar/32
export TF_VAR_PROJECT_ID=pid
cd ./test
# Provisioning GKE can outlast go test's default 10m timeout.
go test -v -timeout 60m
```

To automate setting the above environment variables, feel free to use an .envrc file. An example is provided in the root of this repo.

The tables below are generated by terraform-docs. After reading the above, take the Default column of the inputs with a grain of salt.

Requirements:

| Name | Version |
|---|---|
| terraform | >=1.3 |
| google | >= 5.40.0, != 5.44.0, != 6.2.0, != 6.3.0, < 7 |

Providers:

| Name | Version |
|---|---|
| google | >= 5.40.0, != 5.44.0, != 6.2.0, != 6.3.0, < 7 |

Modules:

| Name | Source | Version |
|---|---|---|
| firewall_rules | terraform-google-modules/network/google//modules/firewall-rules | ~> 9.0.0 |
| gke_cluster | terraform-google-modules/kubernetes-engine/google//modules/beta-autopilot-private-cluster | ~> 33.0 |
| routes | terraform-google-modules/network/google//modules/routes | ~> 9.0.0 |
| subnets | terraform-google-modules/network/google//modules/subnets | ~> 9.0.0 |
| vpc | terraform-google-modules/network/google//modules/vpc | ~> 9.0.0 |

Resources:

| Name | Type |
|---|---|
| google_compute_router.router | resource |
| google_compute_router_nat.nat | resource |
| google_client_config.default | data source |

Inputs:

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| env | What to name a logical environment | string | n/a | yes |
| gke_control_plane_cidr | The cidr block for the GKE control plane. | string | "10.10.30.0/28" | no |
| gke_deletion_protection | Deletion protection. I'm not made of money so this is false by default. | string | false | no |
| gke_enable_private_endpoint | Whether or not to make the control plane endpoint private to a subnet | string | false | no |
| gke_enable_private_nodes | Hide them nodes. | string | true | no |
| gke_enable_vertical_pod_autoscaling | Enabling GKE vertical pod autoscaling. | bool | true | no |
| gke_horizontal_pod_autoscaling | Enabling GKE horizontal pod autoscaling. | bool | true | no |
| gke_kubernetes_version | Which Kubernetes version to use for the cluster. | string | "latest" | no |
| gke_master_authorized_networks | Use to override the default ingress rule that is constructed from var.my_ip_address | list(object({...})) | [] | no |
| gke_network_tags | GKE network tags. | list(string) | [] | no |
| gke_node_subnet_name | The subnet name for nodes created by GKE autopilot | string | n/a | yes |
| gke_pods_range_cidr | The cidr block for the GKE pods | string | "192.168.0.0/18" | no |
| gke_release_channel | The release channel for GKE versions | string | "REGULAR" | no |
| gke_svc_range_cidr | The cidr block for GKE services | string | "192.168.64.0/18" | no |
| my_ip_address | A source IP used to connect to the k8s control plane | string | n/a | yes |
| nat_ip_allocate_option | https://registry.terraform.io/providers/hashicorp/google/latest/docs/resources/compute_router_nat#nat_ip_allocate_option | string | "AUTO_ONLY" | no |
| nat_source_subnetwork_ip_ranges_to_nat | https://registry.terraform.io/providers/hashicorp/google/latest/docs/resources/compute_router_nat#source_subnetwork_ip_ranges_to_nat | string | "ALL_SUBNETWORKS_ALL_IP_RANGES" | no |
| project_id | n/a | string | n/a | yes |
| region | n/a | string | "us-west1" | no |
| vpc_auto_create_subnets | Whether or not to automatically create VPC subnets. This should almost always be off. | bool | false | no |
| vpc_enable_my_ip_ingress_rule | Whether or not to create an ingress rule for a home IP address. Is added to var.vpc_firewall_rules. If set then you need to set var.vpc_public_subnet_cidr to your public subnet cidr block. | bool | true | no |
| vpc_firewall_rules | Use to define firewall rules | list(object({...})) | [] | no |
| vpc_private_subnet_cidr | The cidr block for the private subnet | string | "10.10.20.0/26" | no |
| vpc_private_subnet_name | The name of the VPC private subnet. Default: infra-$(env)-private-01 | string | "" | no |
| vpc_private_subnet_secondary_ranges | Use to override the creation of secondary subnet ranges for GKE worker node allocations. | list(object({...})) | [] | no |
| vpc_public_subnet_cidr | The cidr block for the public subnet | string | "10.10.10.0/26" | no |
| vpc_public_subnet_name | The name of the VPC public subnet. Default: infra-$(env)-public-01 | string | "" | no |
| vpc_routes | Use to override the default VPC routes. | list(object({...})) | [] | no |
| vpc_routing_mode | https://registry.terraform.io/providers/hashicorp/google/latest/docs/resources/compute_network#routing_mode | string | "GLOBAL" | no |
| vpc_shared_vpc_host | Whether or not to set up a VPC as a 'shared VPC.' | bool | false | no |
| vpc_subnets | Use to override the creation of the default subnets. There be dragons here. | list(object({...})) | [] | no |

Outputs:

| Name | Description |
|---|---|
| gke_cluster_endpoint | n/a |
| gke_cluster_name | n/a |
| vpc_network_id | n/a |
| vpc_network_name | n/a |
| vpc_network_subnets | n/a |