Official repository for "Visual Transformation Telling".

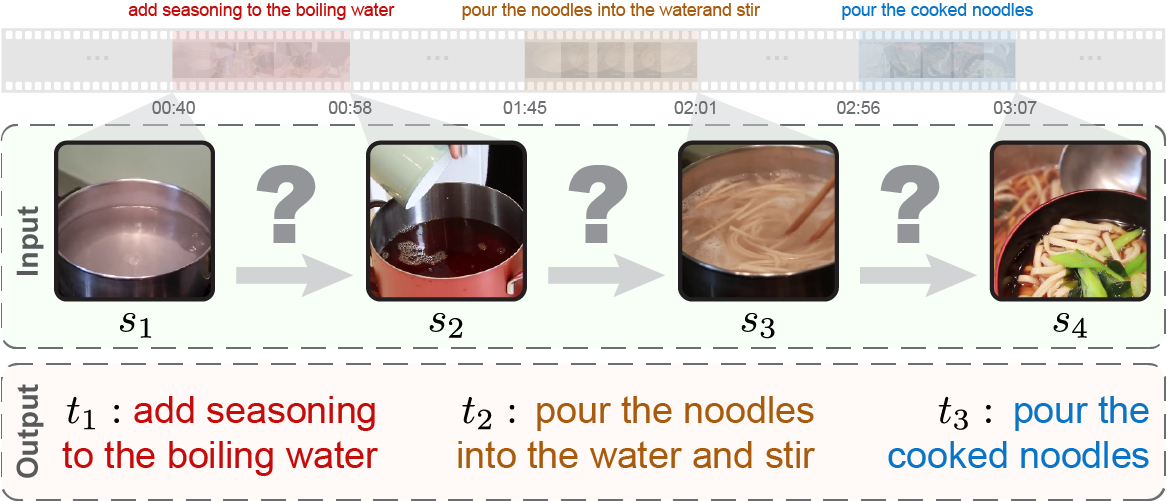

Figure: Visual Transformation Telling (VTT). Given states, which are images extracted from videos, the goal is to reason about and describe the transformations between every two adjacent states.

Visual Transformation Telling

Wanqing Cui*, Xin Hong*, Yanyan Lan, Liang Pang, Jiafeng Guo, Xueqi Cheng

(* equal contribution)

Motivation: Humans can naturally reason from superficial state differences (e.g. ground wetness) to transformation descriptions (e.g. raining) based on their life experience. In this paper, we propose a new visual reasoning task, called Visual Transformation Telling (VTT), to test this transformation reasoning ability in real-world scenarios.

Task: Given a series of states (i.e. images), VTT requires describing the transformation that occurs between every two adjacent states.
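To make the input/output shape of the task concrete, here is a minimal sketch. It assumes states are represented by hypothetical image file names, and `describe` is a placeholder standing in for any model; neither name comes from this repository. For N states it produces N - 1 descriptions, one per adjacent pair. Actual models may look at all states jointly rather than one pair at a time.

```python
from typing import Callable, List, Sequence, Tuple


def adjacent_pairs(states: Sequence[str]) -> List[Tuple[str, str]]:
    """Return every (before, after) pair of neighbouring states."""
    return list(zip(states, states[1:]))


def tell_transformations(
    states: Sequence[str],
    describe: Callable[[str, str], str],
) -> List[str]:
    """Apply a (hypothetical) describe function to each adjacent pair."""
    return [describe(before, after) for before, after in adjacent_pairs(states)]


if __name__ == "__main__":
    # Hypothetical file names, for illustration only.
    states = ["state_0.png", "state_1.png", "state_2.png"]

    def placeholder(before: str, after: str) -> str:
        # Stand-in for a real model; it only echoes its inputs.
        return f"<transformation from {before} to {after}>"

    for text in tell_transformations(states, placeholder):
        print(text)
```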

If you find this code useful, please star this repo and cite us:

@misc{cui2024visual,
  title={Visual Transformation Telling},
  author={Wanqing Cui and Xin Hong and Yanyan Lan and Liang Pang and Jiafeng Guo and Xueqi Cheng},
  year={2024},
  eprint={2305.01928},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

The VTT dataset can be downloaded from Google Drive.

1. Clone the repository

git clone https://github.com/hughplay/VTT.git

cd VTT

2. Prepare the dataset

Download vtt.tar.gz and decompress it under the data directory.

mkdir data

cd data

tar -xzf vtt.tar.gz
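Because the download is a gzip-compressed tarball (.tar.gz), it can also be unpacked from Python with the standard-library `tarfile` module. The sketch below is only an illustration: the `extract_archive` helper and the `data/vtt.tar.gz` location are assumptions, not part of this repository, and the paths are relative to the repository root.

```python
import tarfile
from pathlib import Path


def extract_archive(archive: Path, dest: Path) -> None:
    """Extract a gzip-compressed tar archive into dest."""
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        # Only extract archives you trust; members are written under dest.
        tar.extractall(dest)


if __name__ == "__main__":
    archive = Path("data/vtt.tar.gz")  # assumed download location
    if archive.exists():
        extract_archive(archive, Path("data"))
    else:
        print(f"{archive} not found; download it first")
```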

After decompressing the package, the directory structure should look like this:

.
`-- dataset
    `-- vtt
        |-- states
        |   |-- xxx.png
        |   `-- ...
        `-- meta
            `-- vtt.jsonl
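A quick way to confirm the layout is in place is sketched below. It assumes that "." in the tree is the data directory and that each line of vtt.jsonl is a JSON object; the helper names `check_vtt_layout` and `read_first_record` are hypothetical. Since the record schema is not documented here, the script only prints the top-level keys of the first record rather than assuming any field names.

```python
import json
from pathlib import Path
from typing import Any, Dict, List


def check_vtt_layout(root: Path) -> List[str]:
    """Return the expected paths that are missing under root."""
    vtt = root / "dataset" / "vtt"
    expected = [vtt / "states", vtt / "meta" / "vtt.jsonl"]
    return [str(p) for p in expected if not p.exists()]


def read_first_record(jsonl_path: Path) -> Dict[str, Any]:
    """Parse the first non-empty line of a JSON Lines file."""
    with jsonl_path.open(encoding="utf-8") as f:
        for line in f:
            if line.strip():
                return json.loads(line)
    raise ValueError(f"{jsonl_path} contains no records")


if __name__ == "__main__":
    root = Path("data")  # assumption: "." in the tree is the data directory
    missing = check_vtt_layout(root)
    if missing:
        print("Missing:", *missing, sep="\n  ")
    else:
        record = read_first_record(root / "dataset" / "vtt" / "meta" / "vtt.jsonl")
        # Schema is not assumed; only the top-level keys are shown.
        print("Top-level keys:", sorted(record))
```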

3. Build the Docker image and launch the container

make init

The first time you run it, it will prompt you for the following configurations (press Enter to accept the default values):

Give a project name [vtt]:

Code root to be mounted at /project [.]:

Data root to be mounted at /data [./data]:

Log root to be mounted at /log [./data/log]:

directory to be mounted to xxx [container_home]:

`/home/hongxin/code/vtt/container_home` does not exist in your machine. Create? [yes]:

After the message "Creating xxx ... done" appears, the environment is ready. Run the following command to enter the container:

make in

In the container, train a classical model (e.g. TTNet) by running:

python train.py experiment=sota_v5_full

Note: Some basic knowledge of PyTorch Lightning and Hydra will help you understand the code.

Tune LLaVA with LoRA:

zsh scripts/training/train_vtt_concat.sh

To test a trained classical model, you can run:

python test.py <train_log_dir>

To test MLMs (e.g. Gemini Pro Vision), you can run:

python test_gemini.py

(modify paths accordingly)

- human evaluation results: docs/lists/human_results

- MLM predictions: docs/lists/llm_results/

The code is licensed under the MIT license and the VTT dataset is licensed under the Creative Commons Attribution-NonCommercial 4.0 International License.

This project is based on the DeepCodebase template.