PyTorch implementation of state-of-the-art music tagging models 🎶

Demo and Docker image on Replicate

Evaluation of CNN-based Automatic Music Tagging Models, SMC 2020 [arxiv]

-- Minz Won, Andres Ferraro, Dmitry Bogdanov, and Xavier Serra

TL;DR

- If your dataset is relatively small: take advantage of domain knowledge using Musicnn.

- If you want a simple yet top-performing model: use the Short-chunk CNN with residual connections (a so-called vgg-ish model with a small receptive field).

- If you want the best performance together with good generalization ability: use Harmonic CNN.

- FCN : Automatic Tagging using Deep Convolutional Neural Networks, Choi et al., 2016 [arxiv]

- Musicnn : End-to-end Learning for Music Audio Tagging at Scale, Pons et al., 2018 [arxiv]

- Sample-level CNN : Sample-level Deep Convolutional Neural Networks for Music Auto-tagging Using Raw Waveforms, Lee et al., 2017 [arxiv]

- Sample-level CNN + Squeeze-and-excitation : Sample-level CNN Architectures for Music Auto-tagging Using Raw Waveforms, Kim et al., 2018 [arxiv]

- CRNN : Convolutional Recurrent Neural Networks for Music Classification, Choi et al., 2016 [arxiv]

- Self-attention : Toward Interpretable Music Tagging with Self-Attention, Won et al., 2019 [arxiv]

- Harmonic CNN : Data-Driven Harmonic Filters for Audio Representation Learning, Won et al., 2020 [pdf]

- Short-chunk CNN : The prevalent 3x3 CNN, a so-called vgg-ish model with a small receptive field.

- Short-chunk CNN + Residual : Short-chunk CNN with residual connections.

conda create -n YOUR_ENV_NAME python=3.7

conda activate YOUR_ENV_NAME

pip install -r requirements.txt

The STFT is computed on the fly, so the only preprocessing required is to read the audio files, resample them, and save them as .npy files.

cd preprocessing/

python -u mtat_read.py run YOUR_DATA_PATH
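As a rough illustration of what this preprocessing step amounts to (read, resample, save as .npy), here is a minimal NumPy-only sketch. It is not the code in `mtat_read.py`: the helper `resample_linear`, the synthetic input signal, and the 16 kHz target rate are all assumptions made for the example, and a real pipeline would normally use a proper audio loader and an anti-aliased resampler.

```python
import os
import tempfile

import numpy as np


def resample_linear(signal, orig_sr, target_sr):
    """Resample a 1-D signal by linear interpolation.

    Hypothetical helper for illustration only: it applies no
    anti-aliasing filter, so it is not suitable for production use.
    """
    duration = len(signal) / orig_sr
    n_out = int(round(duration * target_sr))
    t_in = np.arange(len(signal)) / orig_sr   # timestamps of input samples
    t_out = np.arange(n_out) / target_sr      # timestamps of output samples
    return np.interp(t_out, t_in, signal).astype(np.float32)


# Stand-in for a decoded audio file: one second of a 440 Hz sine at 44.1 kHz.
orig_sr = 44100
target_sr = 16000  # assumed target rate, not taken from the repository
t = np.arange(orig_sr) / orig_sr
audio = np.sin(2 * np.pi * 440.0 * t).astype(np.float32)

resampled = resample_linear(audio, orig_sr, target_sr)

# Save the resampled waveform as .npy and read it back.
out_path = os.path.join(tempfile.mkdtemp(), "example.npy")
np.save(out_path, resampled)
loaded = np.load(out_path)
print(loaded.shape, loaded.dtype)
```

Storing raw waveforms this way keeps preprocessing cheap, because the spectrogram is only computed when a model consumes the data.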

cd training/

python -u main.py --data_path YOUR_DATA_PATH

Options

'--num_workers', type=int, default=0

'--dataset', type=str, default='mtat', choices=['mtat', 'msd', 'jamendo']

'--model_type', type=str, default='fcn', choices=['fcn', 'musicnn', 'crnn', 'sample', 'se', 'short', 'short_res', 'attention', 'hcnn']

'--n_epochs', type=int, default=200

'--batch_size', type=int, default=16

'--lr', type=float, default=1e-4

'--use_tensorboard', type=int, default=1

'--model_save_path', type=str, default='./../models'

'--model_load_path', type=str, default='.'

'--data_path', type=str, default='./data'

'--log_step', type=int, default=20
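The options above read like `argparse` argument definitions. The sketch below rebuilds them as a standalone parser to show how the defaults and choices behave. The function name `build_train_parser` and the example argument values are assumptions for illustration, and the actual `main.py` may define its parser differently.

```python
import argparse

MODEL_TYPES = ['fcn', 'musicnn', 'crnn', 'sample', 'se',
               'short', 'short_res', 'attention', 'hcnn']


def build_train_parser():
    """Hypothetical parser mirroring the training options listed above."""
    parser = argparse.ArgumentParser(description='Train a music tagging model')
    parser.add_argument('--num_workers', type=int, default=0)
    parser.add_argument('--dataset', type=str, default='mtat',
                        choices=['mtat', 'msd', 'jamendo'])
    parser.add_argument('--model_type', type=str, default='fcn',
                        choices=MODEL_TYPES)
    parser.add_argument('--n_epochs', type=int, default=200)
    parser.add_argument('--batch_size', type=int, default=16)
    parser.add_argument('--lr', type=float, default=1e-4)
    parser.add_argument('--use_tensorboard', type=int, default=1)
    parser.add_argument('--model_save_path', type=str, default='./../models')
    parser.add_argument('--model_load_path', type=str, default='.')
    parser.add_argument('--data_path', type=str, default='./data')
    parser.add_argument('--log_step', type=int, default=20)
    return parser


# Example: override the model and batch size, keep every other default.
args = build_train_parser().parse_args(
    ['--data_path', 'YOUR_DATA_PATH',
     '--model_type', 'short_res',
     '--batch_size', '32'])
print(args.model_type, args.batch_size, args.lr, args.n_epochs)
```

Passing a value outside `choices` (for example an unknown `--model_type`) makes `argparse` exit with a usage error, which catches typos before training starts.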

cd training/

python -u eval.py --data_path YOUR_DATA_PATH

Options

'--num_workers', type=int, default=0

'--dataset', type=str, default='mtat', choices=['mtat', 'msd', 'jamendo']

'--model_type', type=str, default='fcn', choices=['fcn', 'musicnn', 'crnn', 'sample', 'se', 'short', 'short_res', 'attention', 'hcnn']

'--batch_size', type=int, default=16

'--model_load_path', type=str, default='.'

'--data_path', type=str, default='./data'
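To compare several models, the evaluation command can be assembled once per model type. The sketch below only builds and prints the command lines using the flags listed above. The helper `build_eval_command` and the checkpoint paths are hypothetical placeholders, so substitute the locations of your own trained models.

```python
import shlex


def build_eval_command(model_type, data_path, model_load_path,
                       dataset='mtat', batch_size=16):
    """Hypothetical helper: build the argument list for one eval.py run."""
    return ['python', '-u', 'eval.py',
            '--dataset', dataset,
            '--model_type', model_type,
            '--batch_size', str(batch_size),
            '--model_load_path', model_load_path,
            '--data_path', data_path]


# Placeholder checkpoint locations; these paths are assumptions.
checkpoints = {
    'fcn': 'PATH_TO_FCN_CHECKPOINT',
    'short_res': 'PATH_TO_SHORT_RES_CHECKPOINT',
    'hcnn': 'PATH_TO_HCNN_CHECKPOINT',
}

commands = [build_eval_command(model_type, 'YOUR_DATA_PATH', path)
            for model_type, path in checkpoints.items()]

for cmd in commands:
    # Quote each argument so the printed line can be pasted into a shell.
    print(' '.join(shlex.quote(arg) for arg in cmd))
```

Each list can be passed to `subprocess.run(cmd, cwd='training', check=True)` if you prefer to launch the runs from Python rather than from a shell.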

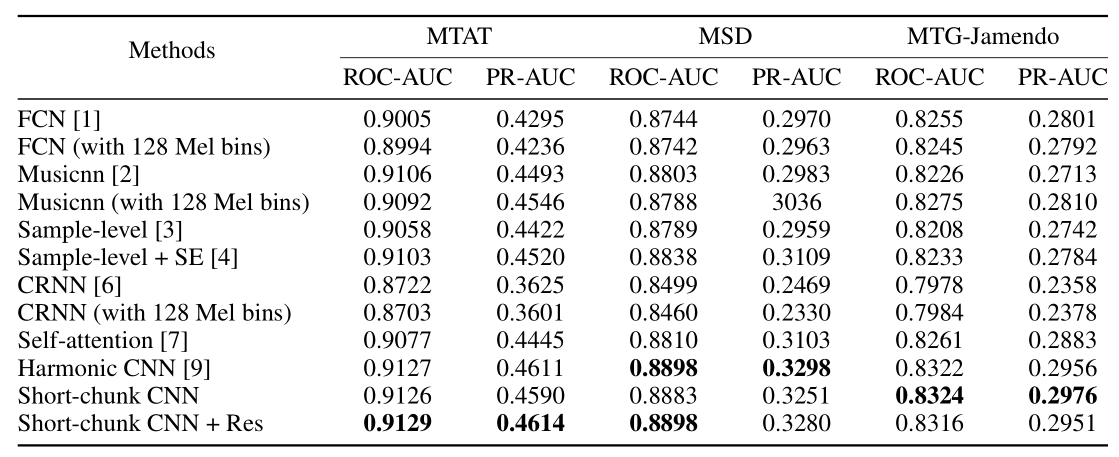

Performances of SOTA models

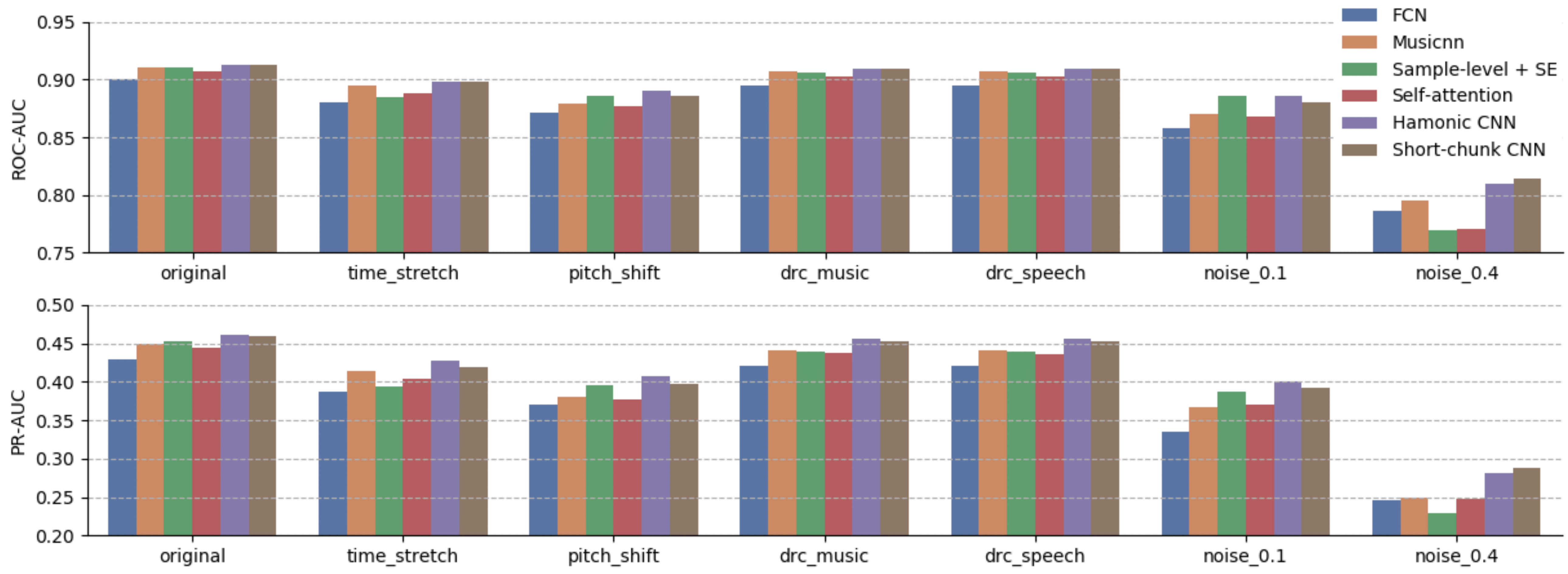

Performances with perturbed inputs

@inproceedings{won2020eval,

title={Evaluation of CNN-based automatic music tagging models},

author={Won, Minz and Ferraro, Andres and Bogdanov, Dmitry and Serra, Xavier},

booktitle={Proc. of 17th Sound and Music Computing},

year={2020}

}

MIT License

Copyright (c) 2020 Music Technology Group, Universitat Pompeu Fabra. Code developed by Minz Won.

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

in the Software without restriction, including without limitation the rights

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

copies of the Software, and to permit persons to whom the Software is

furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all

copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

SOFTWARE.

Available upon request.