Hongrui Cai · Wanquan Feng · Xuetao Feng · Yan Wang · Juyong Zhang

Paper | Project Page | Poster

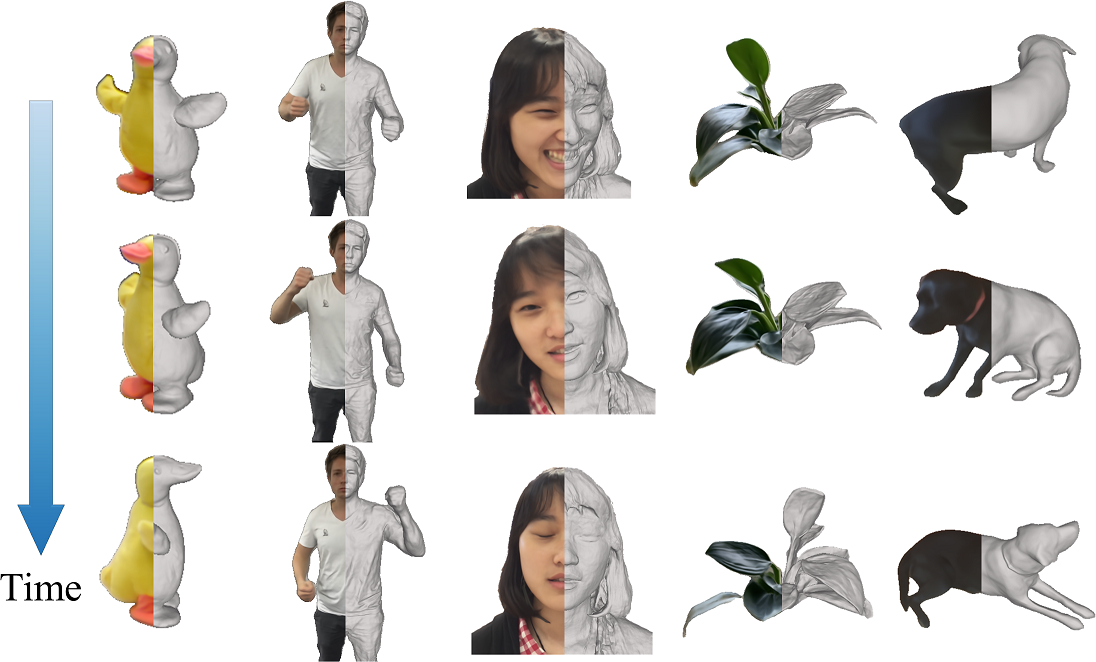

We propose Neural-DynamicReconstruction (NDR), a template-free method that recovers the high-fidelity geometry, motion, and appearance of a dynamic scene from a monocular RGB-D camera.

The data is organized as in NeuS:

<case_name>

|-- cameras_sphere.npz # camera parameters

|-- depth

|-- # target depth for each view

...

|-- image

|-- # target RGB for each view

...

|-- mask

|-- # target mask for each view (for the unmasked setting, set all pixels to 255)

...

Here cameras_sphere.npz follows the data format in IDR, where world_mat_xx denotes the world-to-image projection matrix, and scale_mat_xx denotes the normalization matrix.
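As a rough illustration of this convention, the sketch below reads one view from `cameras_sphere.npz`, forms the projection that acts on normalized coordinates (`world_mat @ scale_mat`), and splits it into intrinsics, a world-to-camera rotation and a camera center. It assumes the IDR/NeuS key naming `world_mat_<i>` / `scale_mat_<i>` with `<i>` the view index, and it uses a NumPy RQ decomposition in place of OpenCV's `cv2.decomposeProjectionMatrix`. The helper names (`rq3`, `decompose_projection`, `project`, `load_view`) are hypothetical and not part of this repository.

```python
import numpy as np


def rq3(M):
    """RQ decomposition of a 3x3 matrix: M = K @ R with K upper-triangular, R orthogonal."""
    flip = np.flipud(np.eye(3))           # anti-identity permutation matrix
    Q0, R0 = np.linalg.qr((flip @ M).T)   # QR of the row-reversed, transposed matrix
    K = flip @ R0.T @ flip                # upper-triangular factor
    R = flip @ Q0.T                       # orthogonal factor
    D = np.diag(np.sign(np.diag(K)))      # flip signs so that K has a positive diagonal
    return K @ D, D @ R


def decompose_projection(P):
    """Split a 3x4 projection P = K [R | t] into intrinsics K, world-to-camera R, camera center."""
    M, p4 = P[:, :3], P[:, 3]
    K, R = rq3(M)
    center = -np.linalg.solve(M, p4)      # the center C satisfies M @ C + p4 = 0
    return K / K[2, 2], R, center


def project(P, points):
    """Project (N, 3) points with a 3x4 projection matrix; returns (N, 2) pixel coordinates."""
    homo = np.hstack([points, np.ones((len(points), 1))])
    uvw = homo @ P.T
    return uvw[:, :2] / uvw[:, 2:3]


def load_view(npz_path, idx):
    """Read view `idx` (assumed keys world_mat_<idx>, scale_mat_<idx>) from cameras_sphere.npz."""
    with np.load(npz_path) as cams:
        world_mat = cams[f"world_mat_{idx}"].astype(np.float64)
        scale_mat = cams[f"scale_mat_{idx}"].astype(np.float64)
    P = (world_mat @ scale_mat)[:3, :4]   # projection acting on normalized-space points
    K, R, center = decompose_projection(P)
    return P, K, R, center
```

Because `scale_mat` maps normalized coordinates back to world coordinates, the recovered center lives in the normalized space used for reconstruction, not in the original world frame.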

You can download part of the pre-processed KillingFusion data here and unzip it into ./.
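After unzipping, a quick check of a case folder against the layout above can catch missing or mismatched views. This is a sketch under stated assumptions, not code from the repository: it assumes each of `depth`, `image` and `mask` holds exactly one file per view, and the function names are hypothetical. `full_mask` only builds the all-255 array for the unmasked setting; writing it to disk (for example with `cv2.imwrite`) is left to you.

```python
from pathlib import Path

import numpy as np

# Per-view folders expected next to cameras_sphere.npz
SUBDIRS = ("depth", "image", "mask")


def check_case_layout(case_dir):
    """Verify <case_name> has cameras_sphere.npz and equally sized per-view folders.

    Returns the number of views; raises if something is missing or inconsistent.
    """
    case = Path(case_dir)
    if not (case / "cameras_sphere.npz").is_file():
        raise FileNotFoundError(f"missing {case / 'cameras_sphere.npz'}")
    counts = {}
    for name in SUBDIRS:
        folder = case / name
        if not folder.is_dir():
            raise FileNotFoundError(f"missing folder {folder}")
        # Assumption: one file per view in each folder
        counts[name] = sum(1 for p in folder.iterdir() if p.is_file())
    if len(set(counts.values())) != 1:
        raise ValueError(f"view counts differ across folders: {counts}")
    return counts["image"]


def full_mask(height, width):
    """All-255 mask for the unmasked setting (every pixel treated as foreground)."""
    return np.full((height, width), 255, dtype=np.uint8)
```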

Important tip: If you find the pre-processed data useful, please cite the related paper(s) and strictly abide by the related open-source license(s).
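Because every view pairs a depth map with a camera, depth pixels can be lifted to 3D points, which is a handy way to check that depth and poses agree. This is a hedged sketch, not the repository's pre-processing: it assumes the depth map stores the per-pixel z value along the optical axis (0 marking invalid pixels), uses integer pixel coordinates without a half-pixel offset, and takes `K`, a world-to-camera rotation `R` and a camera `center` such as those obtained by decomposing a projection matrix. `backproject_depth` and its `depth_scale` parameter (a conversion factor for whatever unit the depth files use) are hypothetical.

```python
import numpy as np


def backproject_depth(depth, K, R, center, depth_scale=1.0):
    """Lift an (H, W) depth map to (N, 3) points, skipping zero (invalid) depths.

    Assumes X_cam = R @ (X_world - center) and depth equal to the camera-frame z.
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]                      # pixel row (v) and column (u) indices
    z = depth.astype(np.float64) * depth_scale
    valid = z > 0
    # 3 x N homogeneous pixel coordinates of the valid pixels
    pix = np.stack([u[valid], v[valid], np.ones(int(valid.sum()))])
    cam = (np.linalg.inv(K) @ pix) * z[valid]      # camera-frame points whose z equals depth
    world = R.T @ cam + np.asarray(center)[:, None]  # invert X_cam = R (X_world - center)
    return world.T
```

If `K`, `R` and `center` come from the normalized projection `world_mat @ scale_mat`, depths measured in world units must first be divided by the normalization scale, since camera-frame distances shrink by that factor in normalized space.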

Clone this repository and create the environment (please note the CUDA version):

git clone https://github.com/USTC3DV/NDR-code.git

cd NDR-code

conda env create -f environment.yml

conda activate ndr

Dependencies:

- torch==1.8.0

- opencv_python==4.5.2.52

- trimesh==3.9.8

- numpy==1.21.2

- scipy==1.7.0

- PyMCubes==0.1.2
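To compare an existing environment with the pinned versions above, a small check based on Python's standard `importlib.metadata` can help. This is a sketch, not part of the repository: it looks packages up by their PyPI distribution names (e.g. `opencv-python` for `opencv_python`) and ignores local version suffixes such as `+cu111` that CUDA builds of `torch` carry. The function name `version_mismatches` is hypothetical.

```python
from importlib import metadata

# Versions pinned in the dependency list (PyPI distribution names)
PINNED = {
    "torch": "1.8.0",
    "opencv-python": "4.5.2.52",
    "trimesh": "3.9.8",
    "numpy": "1.21.2",
    "scipy": "1.7.0",
    "PyMCubes": "0.1.2",
}


def version_mismatches(pinned=PINNED, get_version=metadata.version):
    """Return {package: (expected, found)} for packages that are missing or differ."""
    problems = {}
    for name, expected in pinned.items():
        try:
            found = get_version(name)
        except metadata.PackageNotFoundError:
            found = None
        # Compare only the public version, e.g. "1.8.0+cu111" -> "1.8.0"
        if found is None or found.split("+")[0] != expected:
            problems[name] = (expected, found)
    return problems


if __name__ == "__main__":
    for name, (expected, found) in version_mismatches().items():
        print(f"{name}: expected {expected}, found {found or 'not installed'}")
```

Passing `get_version` as a parameter keeps the check testable without touching the installed packages.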

- Training

python train_eval.py

- Evaluating pre-trained model

python pretrained_eval.py

- Data pre-processing

Coming soon.

- Compiling renderer

cd renderer && bash build.sh && cd ..

- Rendering meshes

Pass the path of the original data, the path of the results, and the iteration number, e.g.:

python geo_render.py ./datasets/kfusion_frog/ ./exp/kfusion_frog/result/ 120000

The rendered results will be saved in [path_of_results]/validations_geo/.
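The rendering call can also be scripted, for example to render several iterations in a row. This sketch only wraps the documented command line (data path, results path, iteration number) with `subprocess` and assumes it is run from the repository root after the renderer has been compiled; the helper names are hypothetical.

```python
import subprocess
import sys
from pathlib import Path


def geo_render_command(data_dir, result_dir, iteration):
    """Build the geo_render.py call: original data path, results path, iteration number.

    Paths are passed through unchanged, so trailing slashes are preserved.
    """
    return [sys.executable, "geo_render.py", str(data_dir), str(result_dir), str(int(iteration))]


def validations_dir(result_dir):
    """Folder where geo_render.py writes the rendered results."""
    return Path(result_dir) / "validations_geo"


def render_meshes(data_dir, result_dir, iteration):
    # Run from the repository root, after compiling the renderer
    subprocess.run(geo_render_command(data_dir, result_dir, iteration), check=True)
    return validations_dir(result_dir)
```

For example, `render_meshes("./datasets/kfusion_frog/", "./exp/kfusion_frog/result/", 120000)` runs the same command as above and returns the `validations_geo` folder; `check=True` raises `CalledProcessError` if the script exits with an error.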

- More Pre-processed Data

- Code of Data Pre-processing

- Code of Geometric Projection

- Pre-trained Models and Evaluation Code

- Training Code

This project is built upon NeuS. Some code snippets are also borrowed from IDR and NeRF-pytorch. The pre-processing code for camera pose initialization is borrowed from Fast-Robust-ICP. The evaluation code for geometry rendering is borrowed from StereoPIFu_Code. We thank the authors of all these projects for their great work and for sharing their code.

If you have questions, please contact Hongrui Cai.

If you find our code or paper useful, please cite:

@inproceedings{Cai2022NDR,

author = {Hongrui Cai and Wanquan Feng and Xuetao Feng and Yan Wang and Juyong Zhang},

title = {Neural Surface Reconstruction of Dynamic Scenes with Monocular RGB-D Camera},

booktitle = {Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS)},

year = {2022}

}

If you find our pre-processed data useful, please cite the related paper(s) and strictly abide by the related open-source license(s).