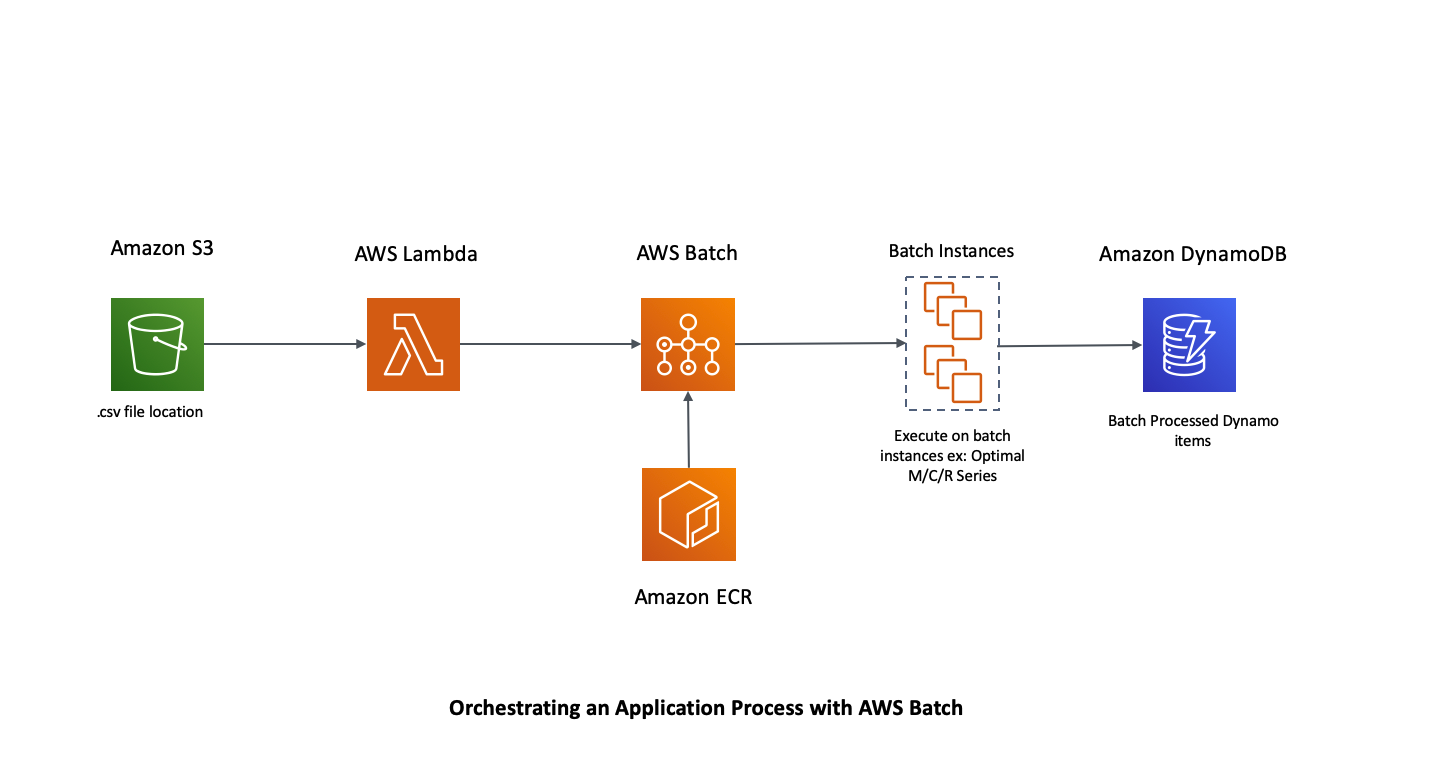

The sample provided spins up an application orchestration using AWS services such as Amazon Simple Storage Service (Amazon S3), AWS Lambda, Amazon DynamoDB, and AWS Batch. Amazon Elastic Container Registry (Amazon ECR) is used as the Docker container registry. Once the CloudFormation stack is created, you can check the downloaded code in to your AWS CodeCommit repository (created as part of the stack), which triggers an AWS CodeBuild build that pushes the image to Amazon ECR. When a sample CSV file is dropped into the S3 bucket, the Lambda function triggers an AWS Batch job.
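The S3 → Lambda → Batch flow described above can be sketched as a small Python Lambda handler. This is a hedged sketch, not the function deployed by the template. It assumes the standard S3 event notification format. The `JOB_QUEUE`/`JOB_DEFINITION` Lambda environment variables and the `INPUT_BUCKET`/`INPUT_KEY` container variables are hypothetical names chosen for illustration. The call it makes, `submit_job` with `jobName`, `jobQueue`, `jobDefinition` and `containerOverrides`, is the boto3 AWS Batch client API.

```python
import os
import re
from urllib.parse import unquote_plus


def build_submit_job_requests(event, job_queue, job_definition):
    """Map each CSV object in an S3 event notification to SubmitJob arguments."""
    requests = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded (a space arrives as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        if not key.lower().endswith(".csv"):
            continue  # only CSV uploads start a job
        # Batch job names allow letters, digits, hyphens and underscores, up to 128 chars.
        job_name = ("csv-import-" + re.sub(r"[^A-Za-z0-9_-]", "-", key))[:128]
        requests.append({
            "jobName": job_name,
            "jobQueue": job_queue,
            "jobDefinition": job_definition,
            # Hypothetical variable names: the container must read the same ones.
            "containerOverrides": {
                "environment": [
                    {"name": "INPUT_BUCKET", "value": bucket},
                    {"name": "INPUT_KEY", "value": key},
                ]
            },
        })
    return requests


def handler(event, context):
    """Lambda entry point: submit one Batch job per uploaded CSV file."""
    import boto3  # bundled with the AWS Lambda Python runtime

    batch = boto3.client("batch")
    requests = build_submit_job_requests(
        event, os.environ["JOB_QUEUE"], os.environ["JOB_DEFINITION"]
    )
    return [batch.submit_job(**request)["jobId"] for request in requests]
```

The event parsing lives in a pure function, so you can exercise it locally with a hand-written event. `boto3` is imported inside `handler` only so that function can be tested without `boto3` installed.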

As part of this blog, we will do the following:

- Run the CloudFormation template (command provided) to create the necessary infrastructure.

- Set up the Docker image for the job:
  - Build a Docker image.
  - Tag the build and push the image to the repository.

- Drop the CSV file into the S3 bucket (copy and paste the provided contents into a sample file named "Sample.csv").

- Notice that the job runs and performs the operation based on the pushed container image. The job parses the CSV file and adds each row to DynamoDB.
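The parse-and-load step described in the last item can be sketched in Python. This is an illustration under assumptions, not the code in the repository's `src` folder. It assumes `Sample.csv` has a header row whose column names become item attributes, including the table's key attribute. It takes a boto3 DynamoDB `Table` object as a parameter, for example `boto3.resource("dynamodb").Table("batch-processing-job")`, so the parsing can be tried without AWS.

```python
import csv
import io


def parse_rows(csv_text):
    """Yield one dict per data row of a CSV file that has a header row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    for row in reader:
        # Surplus cells land under the key None (dropped); missing cells are None (blanked).
        item = {name.strip(): (value or "").strip() for name, value in row.items() if name}
        if any(item.values()):  # skip rows whose cells are all empty
            yield item


def load_items(table, items):
    """Write items through a boto3 DynamoDB Table's batch_writer and return the count.

    batch_writer buffers PutItem requests into batches and resends unprocessed items.
    """
    count = 0
    with table.batch_writer() as batch:
        for item in items:
            batch.put_item(Item=item)
            count += 1
    return count
```

Every attribute is written as a string here. Storing numeric columns as DynamoDB numbers would need an explicit conversion, for example to `decimal.Decimal`.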

- The provided CloudFormation template contains all the services needed for this exercise (refer to the architecture diagram) in a single template. In a production scenario, you may want to split them into separate templates (nested stacks) for easier maintenance.

- The Lambda function uses the Batch job queue and the job definition (including its revision) as parameters. Once the CloudFormation stack is complete, these can be passed as input parameters and set as environment variables for the Lambda function. Otherwise, when you deploy a subsequent revision of the job definition, you may need to update the definition:revision value manually.
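One way to follow the note above is to read the queue and job definition from the Lambda's environment at startup. This is a minimal sketch. The variable names `JOB_QUEUE` and `JOB_DEFINITION` are hypothetical and would have to match whatever the template sets. The AWS Batch `SubmitJob` API accepts a job definition as `name`, `name:revision`, or an ARN with or without the revision, and uses the latest active revision when none is given. Passing only the name is therefore one way to avoid editing the revision after each redeploy.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class JobConfig:
    job_queue: str
    # "name", "name:revision", or a job definition ARN with or without ":revision"
    job_definition: str

    def pinned_revision(self):
        """Return the pinned revision number, or None if Batch picks the latest active one."""
        name = self.job_definition.rsplit("/", 1)[-1]  # drop an "arn:...:job-definition/" prefix
        _, sep, revision = name.partition(":")
        return int(revision) if sep and revision.isdigit() else None


def load_job_config(environ=None):
    """Read the (hypothetical) JOB_QUEUE and JOB_DEFINITION variables set on the Lambda."""
    environ = os.environ if environ is None else environ
    missing = [name for name in ("JOB_QUEUE", "JOB_DEFINITION") if not environ.get(name)]
    if missing:
        raise RuntimeError("missing Lambda environment variable(s): " + ", ".join(missing))
    return JobConfig(environ["JOB_QUEUE"], environ["JOB_DEFINITION"])
```

A non-`None` result from `pinned_revision()` flags a value that will need updating after the next job definition revision is registered.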

- The example below lets you build, tag, and push the Docker image to the repository (created as part of the stack). Alternatively, AWS CodeBuild can build from the CodeCommit repository and push the image to Amazon ECR.

- Download this repository. We will refer to it as SOURCE_REPOSITORY.

$ git clone https://github.com/aws-samples/aws-batch-processing-job-repo

- Run the commands below to create the infrastructure CloudFormation stack. This stack provisions all the AWS resources needed for this exercise.

$ cd aws-batch-processing-job-repo

$ aws cloudformation create-stack --stack-name batch-processing-job --template-body file://template/template.yaml --capabilities CAPABILITY_NAMED_IAM

- You can run the application in two different ways:

- Option 1: Copy the contents of the source Git repository into your own repository and trigger a deployment. Pushing to this repository triggers the AWS CodeBuild build, which builds the image and pushes it to Amazon ECR.

  * The CloudFormation command above created an AWS CodeCommit repository in your account. Replace the region in the URL below with your own.
  * $ git clone https://git-codecommit.us-east-1.amazonaws.com/v1/repos/batch-processing-job-repo
  * $ cd batch-processing-job-repo
  * Copy all the contents of SOURCE_REPOSITORY (from step 1) into this folder.
  * $ git add .
  * $ git commit -m "commit from source"
  * $ git push

- Option 2: Run the commands below to dockerize the Python file and push the image yourself.

  i. Replace the account number and region with your own.

  ii. Make sure the Docker daemon is running on your local computer.

$ cd SOURCE_REPOSITORY (refer to step 1)

$ cd src

Authenticate Docker to your Amazon ECR registry (AWS CLI v2; with AWS CLI v1 you can instead run aws ecr get-login --region us-east-1 --no-include-email and run its output on the command line):

$ aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin <YOUR_ACCOUNT_NUMBER>.dkr.ecr.us-east-1.amazonaws.com

Build the Docker image locally, tag it, and push it to the repository:

$ docker build -t batch_processor .

$ docker tag batch_processor <YOUR_ACCOUNT_NUMBER>.dkr.ecr.us-east-1.amazonaws.com/batch-processing-job-repository

$ docker push <YOUR_ACCOUNT_NUMBER>.dkr.ecr.us-east-1.amazonaws.com/batch-processing-job-repository
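Both options above hard-code `us-east-1` and require your account number. The helpers below build the values you need from those two inputs: the CodeCommit clone URL, the ECR registry host for `docker login`, and the image reference for `docker tag`/`docker push`. This is a minimal sketch. It assumes region endpoints that end in `amazonaws.com`, which is not the case in every AWS partition. The repository names default to the ones used in the commands above.

```python
import re


def codecommit_clone_url(region, repo="batch-processing-job-repo"):
    """HTTPS clone URL of the CodeCommit repository created by the stack."""
    return f"https://git-codecommit.{region}.amazonaws.com/v1/repos/{repo}"


def ecr_registry(account_id, region):
    """Registry host passed to docker login."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("an AWS account ID is 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image_uri(account_id, region, repo="batch-processing-job-repository", tag="latest"):
    """Full image reference used with docker tag and docker push."""
    return f"{ecr_registry(account_id, region)}/{repo}:{tag}"
```

For example, with a hypothetical account `123456789012` in `us-west-2`, `ecr_image_uri("123456789012", "us-west-2")` gives the reference to use in place of the `<YOUR_ACCOUNT_NUMBER>...` value. It carries an explicit `:latest` tag, which is also what Docker assumes when no tag is given.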

- Make sure the step above has completed. You can review the image in AWS Console > ECR > "batch-processing-job-repository" repository.

- The S3 bucket batch-processing-job-<YOUR_ACCOUNT_NUMBER> is created as part of the stack.

- Drop the provided Sample.csv file into the S3 bucket. This triggers the Lambda function, which submits an AWS Batch job.

- In AWS Console > Batch, notice that the job runs and performs the operation based on the pushed container image. The job parses the CSV file and adds each row to DynamoDB.

- In AWS Console > DynamoDB, look for the "batch-processing-job" table. Note that the sample products provided in the CSV file have been added by the batch job.

- AWS Console > S3 bucket batch-processing-job-<YOUR_ACCOUNT_NUMBER>: delete the objects in the bucket, because CloudFormation cannot delete a bucket that still contains objects.

- AWS Console > ECR > batch-processing-job-repository: delete the image(s) pushed to the repository.

- Run the command below to delete the stack.

$ aws cloudformation delete-stack --stack-name batch-processing-job

This library is licensed under the MIT-0 License. See the LICENSE file.