Image convergence: regularize by data

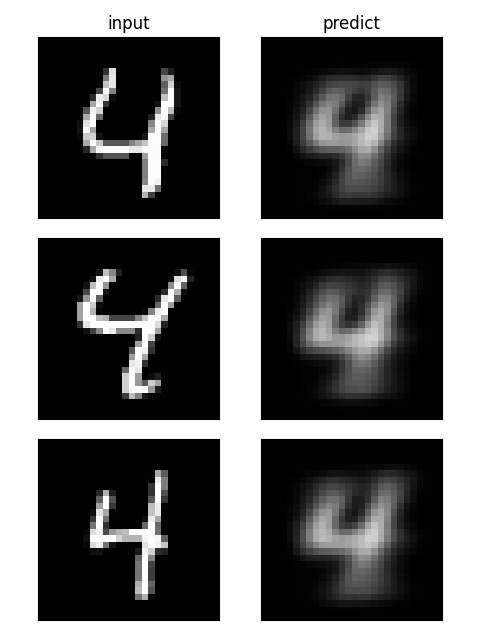

This approach borrows ideas from N2N [1], the variational autoencoder (VAE) [2], and the Siamese network [3]. The regularization effect resembles that of a VAE. A VAE reconstructs the input image from a slightly perturbed latent vector (sampled from the latent space). Here, instead, the model reconstructs a slightly different target image from the latent vector: the targets are shuffled so that each input is paired with another image of the same label. As a result, the model converges to the typical image(s) within each label group. The latent space of such a model can be used for new image generation, clustering, and other applications.
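The sketch below is a minimal illustration, not the author's implementation. It assumes a squared-error reconstruction loss and replaces the encoder/decoder with a hypothetical lookup table holding one free output per training image; `shuffle_within_labels`, `make_pairs`, and `fit_lookup_outputs` are illustrative names. It shows the label-preserving shuffle that builds (input, target) pairs, and why training against such targets pulls each output toward its label group: under squared error, the best output for an input is the mean of the targets it may be paired with.

```python
import numpy as np


def shuffle_within_labels(labels, rng):
    """Return indices perm with labels[perm[i]] == labels[i] for every i.

    Images are shuffled only among images that share a label, so each input
    is paired with a random same-label target (possibly itself).
    """
    labels = np.asarray(labels)
    perm = np.empty(len(labels), dtype=np.intp)
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)  # positions of images with label c
        perm[idx] = rng.permutation(idx)   # shuffle them among themselves
    return perm


def make_pairs(images, labels, rng):
    """(input, target) pairs for one epoch: each target is a same-label image."""
    perm = shuffle_within_labels(labels, rng)
    return images, images[perm]


def fit_lookup_outputs(images, labels, epochs=2000, seed=0):
    """Toy stand-in for a model (assumption): one free output per training image.

    Each epoch draws fresh same-label targets and takes an SGD step on
    0.5 * ||out - target||^2 with step size 1/t, which makes `out` the
    running mean of the targets seen so far.
    """
    rng = np.random.default_rng(seed)
    out = np.zeros_like(images, dtype=float)
    for t in range(1, epochs + 1):
        _, targets = make_pairs(images, labels, rng)
        out += (targets - out) / t  # gradient of the squared error is (out - target)
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    images = rng.random((6, 4))             # six tiny 4-pixel "images"
    labels = np.array([0, 0, 0, 1, 1, 1])
    out = fit_lookup_outputs(images, labels)
    print(np.round(out, 2))                 # rows approach their label's mean image
    print(np.round(images[:3].mean(axis=0), 2), np.round(images[3:].mean(axis=0), 2))
```

With this toy setup, every output row ends up near the mean image of its label, which is one concrete reading of "converges to the typical image(s)". A real network with a different loss or a latent bottleneck may settle on other representative images, so treat the mean as the squared-error special case.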

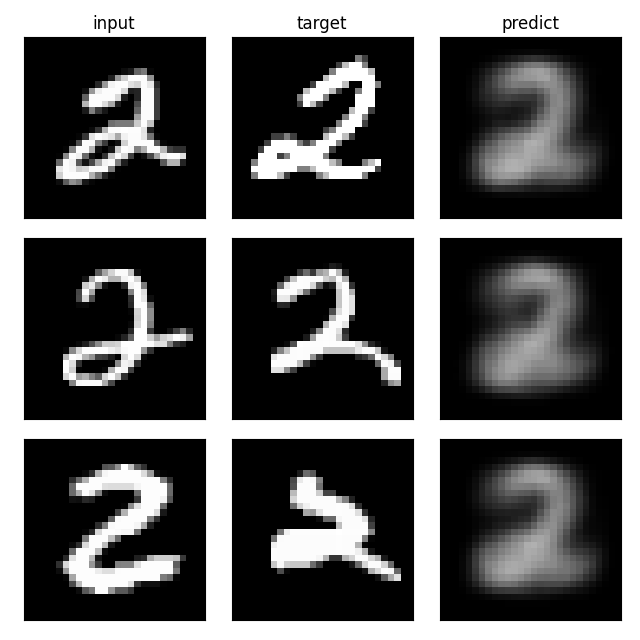

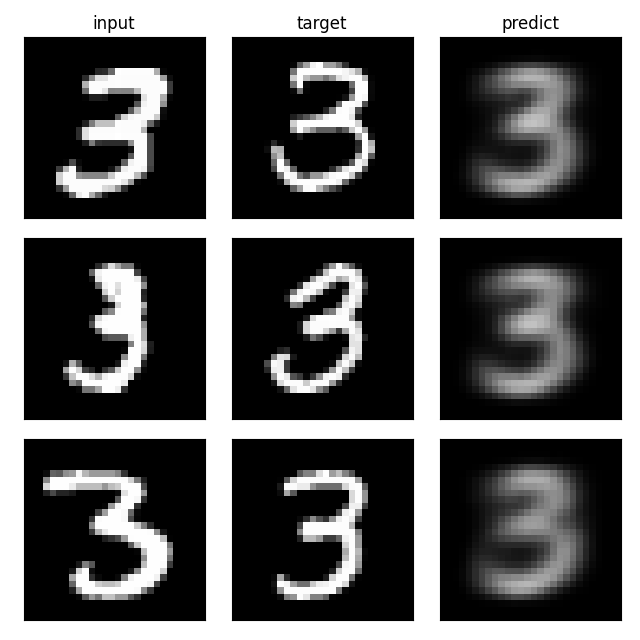

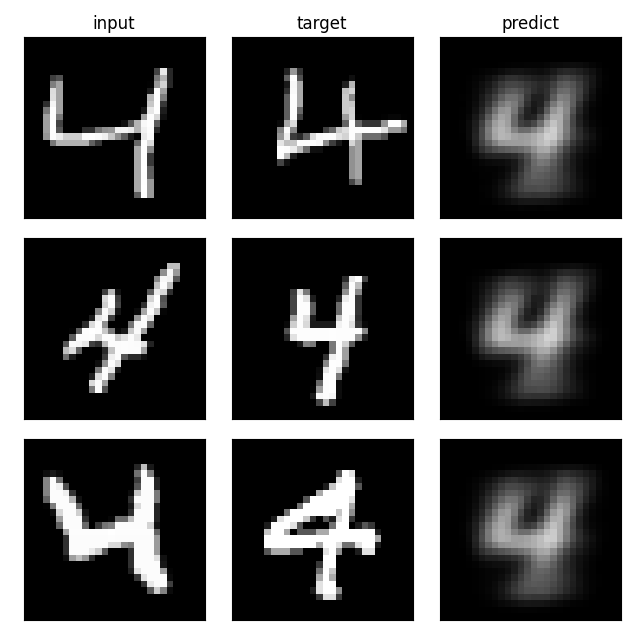

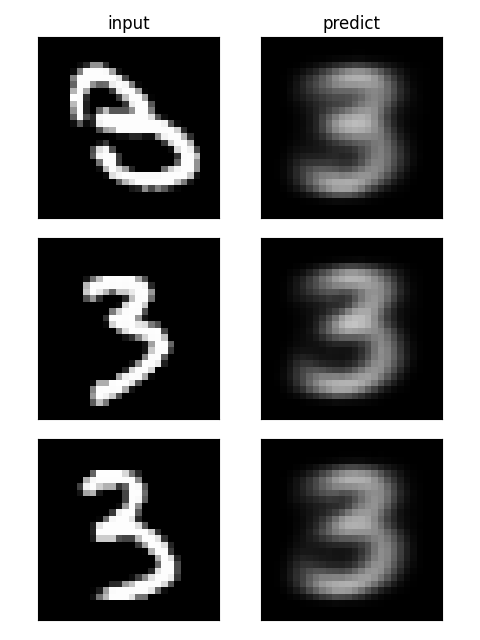

Example training data

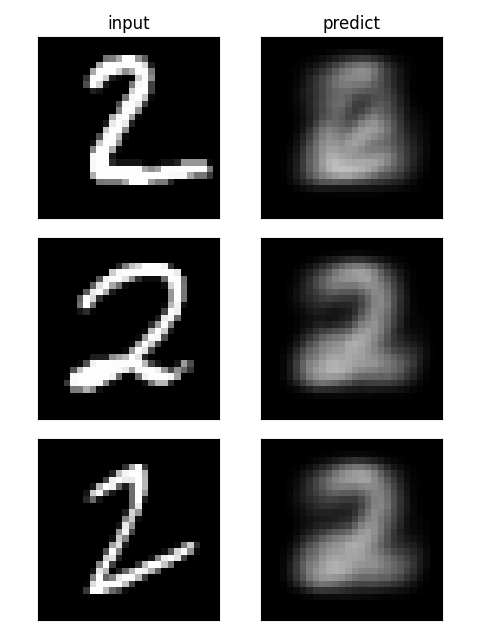

Example test result

References

[1] Noise2Noise: Learning Image Restoration without Clean Data (arXiv)

[2] Variational autoencoder (Wikipedia)

[3] Siamese neural network (Wikipedia)