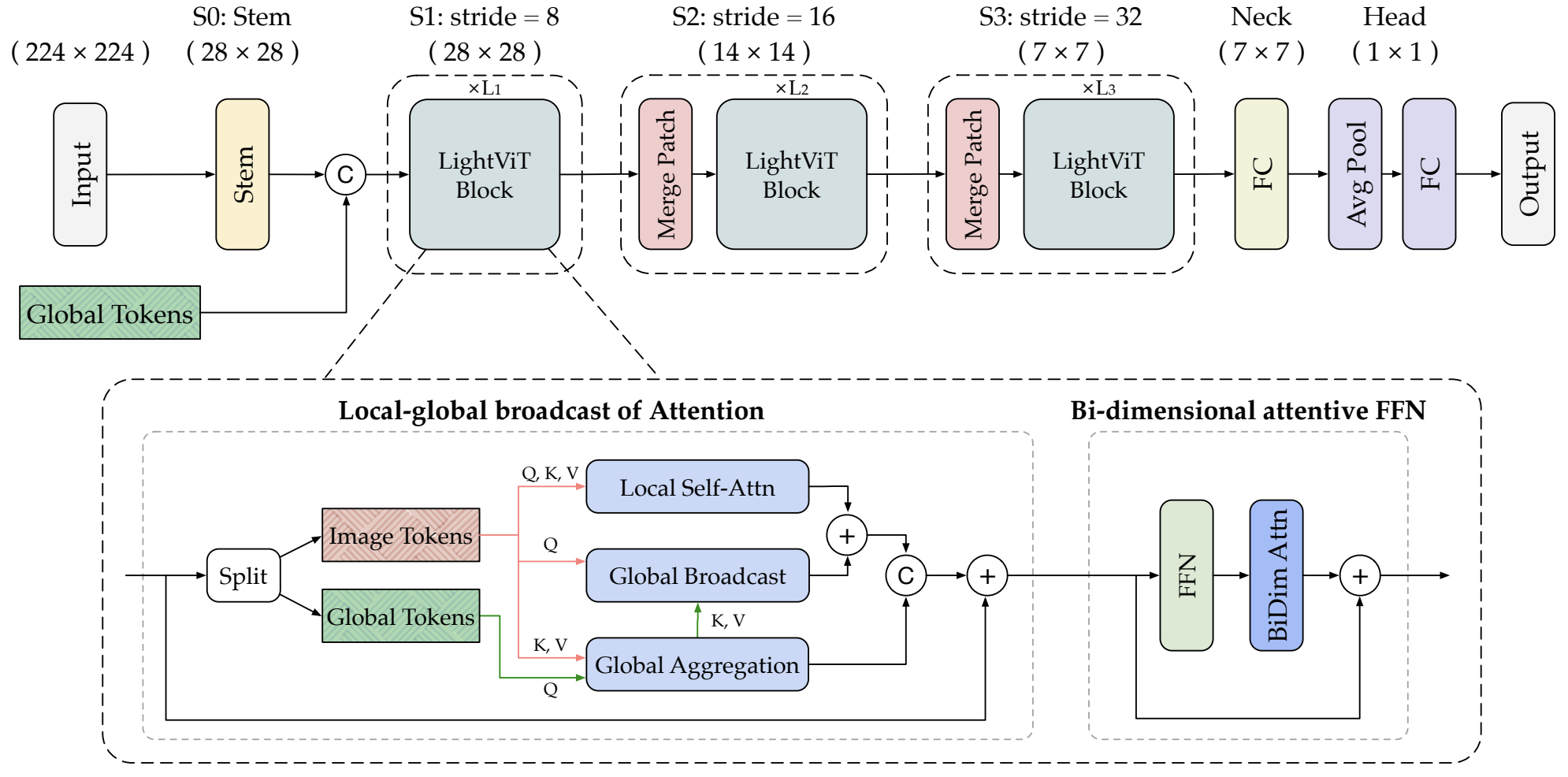

Official implementation of the paper "LightViT: Towards Light-Weight Convolution-Free Vision Transformers".

By Tao Huang, Lang Huang, Shan You, Fei Wang, Chen Qian, Chang Xu.

Code for COCO detection was released.

Code for ImageNet training was released.

| model | resolution | acc@1 (%) | acc@5 (%) | #params | FLOPs | ckpt | log |
|---|---|---|---|---|---|---|---|
| LightViT-T | 224x224 | 78.7 | 94.4 | 9.4M | 0.7G | google drive | log |
| LightViT-S | 224x224 | 80.9 | 95.3 | 19.2M | 1.7G | google drive | log |
| LightViT-B | 224x224 | 82.1 | 95.9 | 35.2M | 3.9G | google drive | log |

- Clone training code

  ```shell
  git clone https://github.com/hunto/LightViT.git --recurse-submodules
  cd LightViT/classification
  ```

  The code of the LightViT model can be found in `lib/models/lightvit.py`.

- Requirements

  ```
  torch>=1.3.0
  # if you want to use torch.cuda.amp for mixed-precision training, the lowest torch version is 1.5.0
  timm==0.5.4
  ```

- Prepare your datasets following this link.
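The version constraints listed under Requirements can be checked programmatically before launching a job. The following is a minimal sketch, not part of the repository: the helper names `parse_version` and `check_environment` are hypothetical, and the thresholds are copied directly from the requirements listed above.

```python
import re

# Thresholds taken from the requirements listed in this README.
MIN_TORCH = (1, 3, 0)
MIN_TORCH_AMP = (1, 5, 0)   # lowest torch version the README gives for torch.cuda.amp
PINNED_TIMM = (0, 5, 4)


def parse_version(text):
    """Convert a version string such as '1.13.1+cu117' into a tuple of ints."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"unrecognized version string: {text!r}")
    # A missing patch component (e.g. '1.10') is treated as 0.
    return tuple(int(part) if part is not None else 0 for part in match.groups())


def check_environment(torch_version, timm_version):
    """Report which of the README's version requirements are satisfied."""
    torch_v = parse_version(torch_version)
    timm_v = parse_version(timm_version)
    return {
        "torch_ok": torch_v >= MIN_TORCH,
        "amp_ok": torch_v >= MIN_TORCH_AMP,
        "timm_ok": timm_v == PINNED_TIMM,
    }


# Example with literal version strings; no packages need to be installed.
print(check_environment("1.4.0", "0.5.4"))
```

In a real environment, the same function could be called as `check_environment(torch.__version__, timm.__version__)` after importing both packages.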

You can evaluate our results using the provided checkpoints. First download the checkpoints to your machine, then run:

```shell
sh tools/dist_run.sh tools/test.py ${NUM_GPUS} configs/strategies/lightvit/config.yaml timm_lightvit_tiny --drop-path-rate 0.1 --experiment lightvit_tiny_test --resume ${ckpt_file_path}
```

To train a model, run:

```shell
sh tools/dist_train.sh 8 configs/strategies/lightvit/config.yaml ${MODEL} --drop-path-rate 0.1 --experiment lightvit_tiny
```

`${MODEL}` can be `timm_lightvit_tiny`, `timm_lightvit_small`, or `timm_lightvit_base`.

For `timm_lightvit_base`, we add the `--amp` option to enable mixed-precision training and set the drop path rate to 0.3 (`--drop-path-rate 0.3`).
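The per-model differences described above can be captured in a small helper that assembles the training command. This is an illustrative sketch only: `build_train_command` is a hypothetical function, not part of the repository. It assumes that the small model uses the same drop path rate of 0.1 shown in the example command, and that the experiment name follows the `lightvit_tiny` pattern; both are assumptions rather than documented settings.

```python
import shlex

CONFIG = "configs/strategies/lightvit/config.yaml"
MODELS = ("timm_lightvit_tiny", "timm_lightvit_small", "timm_lightvit_base")


def build_train_command(model, num_gpus=8):
    """Assemble the dist_train.sh command line for one of the LightViT models."""
    if model not in MODELS:
        raise ValueError(f"unknown model: {model!r}")

    is_base = model == "timm_lightvit_base"
    # The README states 0.3 for the base model; 0.1 for the others is taken
    # from the example command (an assumption for the small model).
    drop_path_rate = 0.3 if is_base else 0.1
    # Assumed naming scheme, mirroring the 'lightvit_tiny' experiment name.
    experiment = model.replace("timm_", "", 1)

    args = [
        "sh", "tools/dist_train.sh", str(num_gpus), CONFIG, model,
        "--drop-path-rate", str(drop_path_rate),
        "--experiment", experiment,
    ]
    if is_base:
        args.append("--amp")  # mixed-precision training for the base model
    return " ".join(shlex.quote(arg) for arg in args)


for name in MODELS:
    print(build_train_command(name))
```

For `timm_lightvit_tiny`, the generated string matches the training command shown above.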

To run the speed test:

```shell
sh tools/dist_run.sh tools/speed_test.py 1 configs/strategies/lightvit/config.yaml ${MODEL} --drop-path-rate 0.1 --batch-size 1024
```

or

```shell
python tools/speed_test.py -c configs/strategies/lightvit/config.yaml --model ${MODEL} --drop-path-rate 0.1 --batch-size 1024
```

We conducted experiments on COCO object detection and instance segmentation tasks; see detection/README.md for details.

This project is released under the Apache 2.0 license.

```
@article{huang2022lightvit,
  title = {LightViT: Towards Light-Weight Convolution-Free Vision Transformers},
  author = {Huang, Tao and Huang, Lang and You, Shan and Wang, Fei and Qian, Chen and Xu, Chang},
  journal = {arXiv preprint arXiv:2207.05557},
  year = {2022}
}
```