This project is the group work of HKU COMP7404 Group A, and the team members are: Hu Shiyu, Lu Yuhan, Long Yafeng, Meng Liuchen.

The live demo: here

The trained NMT model is too large (more than 1.6 GB) to upload to this repository. To train it yourself, download TensorFlow NMT and run the commands in trainModel.ipynb from a terminal. If you would rather download the trained model, please use this link

In this project, we build a music style converter that uses machine learning to convert input MIDI music into another style. We chose jazz as the target style. The overall approach is as follows:

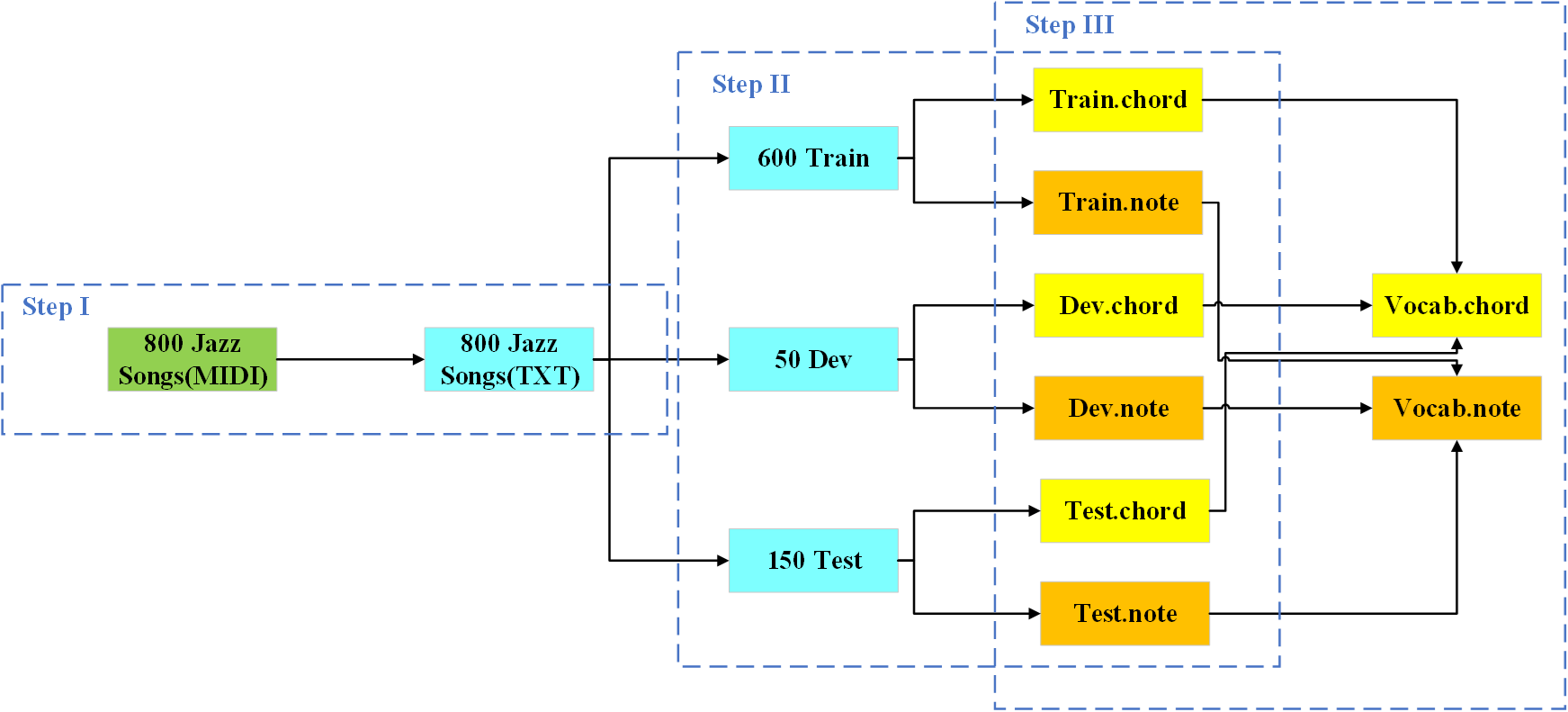

First, we select 800 jazz songs to build the original data set and divide it into three parts:

| Folder | Songs |
|---|---|
| train | 600 |
| dev | 50 |
| test | 150 |
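The split above can be sketched in a few lines of Python. This is only an illustration: the README does not say how songs were assigned to each folder, so the seeded random shuffle, the function name `split_dataset`, and the placeholder file names are all assumptions.

```python
import random


def split_dataset(files, n_train=600, n_dev=50, n_test=150, seed=0):
    """Shuffle file names and cut them into train/dev/test lists."""
    if len(files) < n_train + n_dev + n_test:
        raise ValueError("not enough files for the requested split")
    shuffled = sorted(files)  # sort first so the shuffle is reproducible
    random.Random(seed).shuffle(shuffled)  # assumption: a random split
    return {
        "train": shuffled[:n_train],
        "dev": shuffled[n_train:n_train + n_dev],
        "test": shuffled[n_train + n_dev:n_train + n_dev + n_test],
    }


if __name__ == "__main__":
    songs = [f"song_{i:03d}.mid" for i in range(800)]  # placeholder names
    parts = split_dataset(songs)
    print({name: len(items) for name, items in parts.items()})
```

Fixing the seed keeps the three folders stable between runs, which makes results from different training configurations comparable.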

Then, we use music21 to extract information from each original MIDI song and save its track information to a txt file.

Next, we split the track information in each txt file into chords and notes and save the two kinds of information in separate files. This step is done for all three folders.

Finally we get six files:

train.chord, train.note, dev.chord, dev.note, test.chord, test.note.
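A minimal sketch of how the paired files could be written, assuming the chords and notes of each song are already available as token lists. The helper name `write_parallel` and the demo tokens are hypothetical; the project's real txt format is not shown here. The important property is that line i of the `.chord` file and line i of the `.note` file describe the same sequence.

```python
import os
import tempfile


def write_parallel(pairs, prefix):
    """Write aligned <prefix>.chord and <prefix>.note files, one sequence per line."""
    with open(prefix + ".chord", "w", encoding="utf-8") as chord_f, \
         open(prefix + ".note", "w", encoding="utf-8") as note_f:
        for chords, notes in pairs:
            if not chords or not notes:
                continue  # skip pairs that would leave an empty line on one side
            chord_f.write(" ".join(chords) + "\n")
            note_f.write(" ".join(notes) + "\n")


if __name__ == "__main__":
    # Made-up token lists, only to show the file layout.
    demo = [(["C4", "G3"], ["E4", "G4", "C5"]), (["F#4"], ["A4", "C#5"])]
    out_dir = tempfile.mkdtemp()
    for split in ("train", "dev", "test"):
        write_parallel(demo, os.path.join(out_dir, split))
    print(sorted(os.listdir(out_dir)))
```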

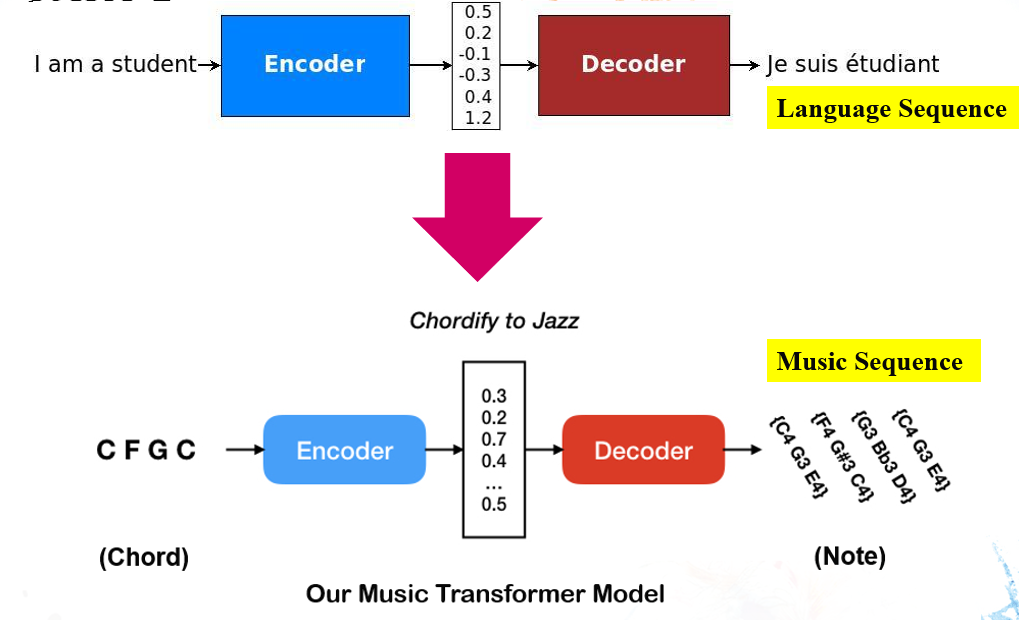

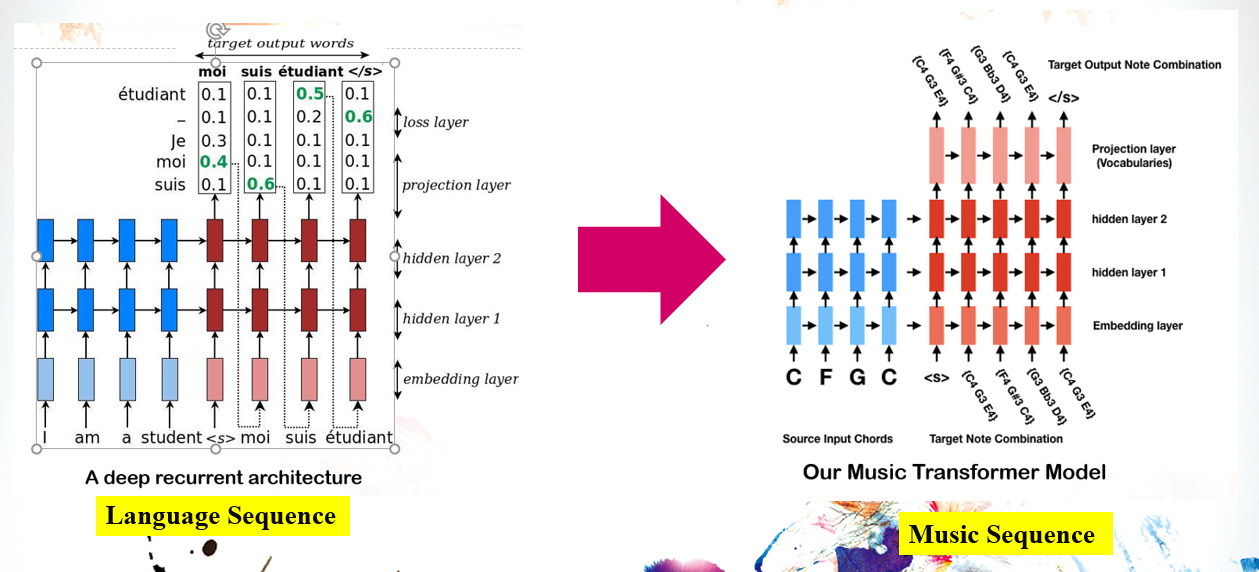

After that, we use TensorFlow NMT for training. We treat the chord file as the source sequence and the note file as the target sequence, so the NMT model is trained to perform a chord-to-note transformation. Since the target notes are collected from jazz songs, when we input a new song's chord file, the model predicts the corresponding jazz notes and assembles them into a jazz-style note file. Finally, using the input song and the jazz-style note file, we can generate a new song in jazz style with music21's functions.
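For the inference step, TensorFlow NMT can be run on a new chord file once a model has been trained. The sketch below only assembles the command line; the flags `--out_dir`, `--inference_input_file` and `--inference_output_file` come from the TensorFlow NMT tutorial, while the function name and all paths are placeholders, not the ones used in this project.

```python
import shlex


def build_infer_command(model_dir, chord_file, output_file):
    """Return the argv list for running a trained TensorFlow NMT model."""
    return [
        "python", "-m", "nmt.nmt",
        f"--out_dir={model_dir}",                    # directory of the trained model
        f"--inference_input_file={chord_file}",      # chords of the new song
        f"--inference_output_file={output_file}",    # predicted jazz-style notes
    ]


if __name__ == "__main__":
    # Placeholder paths for illustration only.
    cmd = build_infer_command("/tmp/nmt_model", "/tmp/new_song.chord", "/tmp/new_song.note")
    print(shlex.join(cmd))
```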

Music21 is a set of tools for helping scholars and other active listeners answer questions about music quickly and simply.

Music21 is very useful in processing the MIDI music. MIDI (Musical Instrument Digital Interface) is a technical standard that describes a communications protocol, digital interface, and electrical connectors that connect a wide variety of electronic musical instruments, computers, and related music and audio devices. A single MIDI link can carry up to sixteen channels of information, each of which can be routed to a separate device.

In this project, we mainly use music21 to extract the information in MIDI files. This package lets us extract the track, chord and note information from the input MIDI, and can also use this information to generate a new song.
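As a rough sketch of this extraction, the code below walks a parsed MIDI file and collects chord and note tokens. It is not the project's own script: the function names are hypothetical, and the token shapes (`F#4` and `F#4-flat-ninth_pentachord`) are modeled on the examples given in this README, assuming the longer form is the chord root followed by music21's `commonName`. The music21 import is placed inside the function so the formatting helper can be used without music21 installed.

```python
def chord_token(root_name, common_name=None):
    """Build a chord token such as 'F#4' or 'F#4-flat-ninth_pentachord'."""
    if common_name is None:
        return root_name
    return root_name + "-" + common_name.replace(" ", "_")


def extract_tokens(midi_path, with_common_name=False):
    """Return (chord_tokens, note_tokens) read from a MIDI file with music21."""
    from music21 import chord, converter, note  # requires music21 to be installed

    score = converter.parse(midi_path)
    chords, notes = [], []
    for element in score.recurse().notes:  # notes and chords in all parts
        if isinstance(element, chord.Chord):
            name = element.commonName if with_common_name else None
            chords.append(chord_token(element.root().nameWithOctave, name))
        elif isinstance(element, note.Note):
            notes.append(element.nameWithOctave)
    return chords, notes


if __name__ == "__main__":
    print(chord_token("F#4"))
    print(chord_token("F#4", "flat-ninth pentachord"))
```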

TensorFlow NMT (Neural Machine Translation) is a sequence-to-sequence (seq2seq) model. Seq2seq models have enjoyed great success in a variety of tasks such as machine translation, speech recognition, and text summarization.

The advantage is that this model is well designed by Google, so we can train it easily by providing the source sequence and the target sequence along with a few model structure parameters.
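Besides the source and target sequences, TensorFlow NMT also reads one vocabulary file per side (located through its `--vocab_prefix` flag), with `<unk>`, `<s>` and `</s>` as the first three entries. This README does not describe how the vocabularies were produced, so the following is only a sketch of one way to build them; `build_vocab` is a hypothetical helper.

```python
from collections import Counter

# TensorFlow NMT expects these three tokens at the top of each vocab file.
SPECIAL_TOKENS = ["<unk>", "<s>", "</s>"]


def build_vocab(lines):
    """Return the vocabulary for whitespace-tokenized lines, most frequent first."""
    counts = Counter(token for line in lines for token in line.split())
    # Ties are broken alphabetically so the order is stable between runs.
    ordered = sorted(counts, key=lambda tok: (-counts[tok], tok))
    return SPECIAL_TOKENS + ordered


if __name__ == "__main__":
    for token in build_vocab(["C4 G3 C4", "F#4 C4"]):
        print(token)
```

Writing the returned list one token per line to `vocab.chord` and `vocab.note` would give files that match a `--vocab_prefix` ending in `vocab`.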

Since training NMT requires considerable computing power, we need to build a deep learning environment for model training. To speed up training, it is better to use a GPU.

The hardware and software used for the deep learning environment:

| Name | Version |
|---|---|
| GPU | Nvidia GTX1070 |
| CUDA | 9.0 |
| cuDNN | 7.0 |
| Tensorflow | 1.7-gpu |

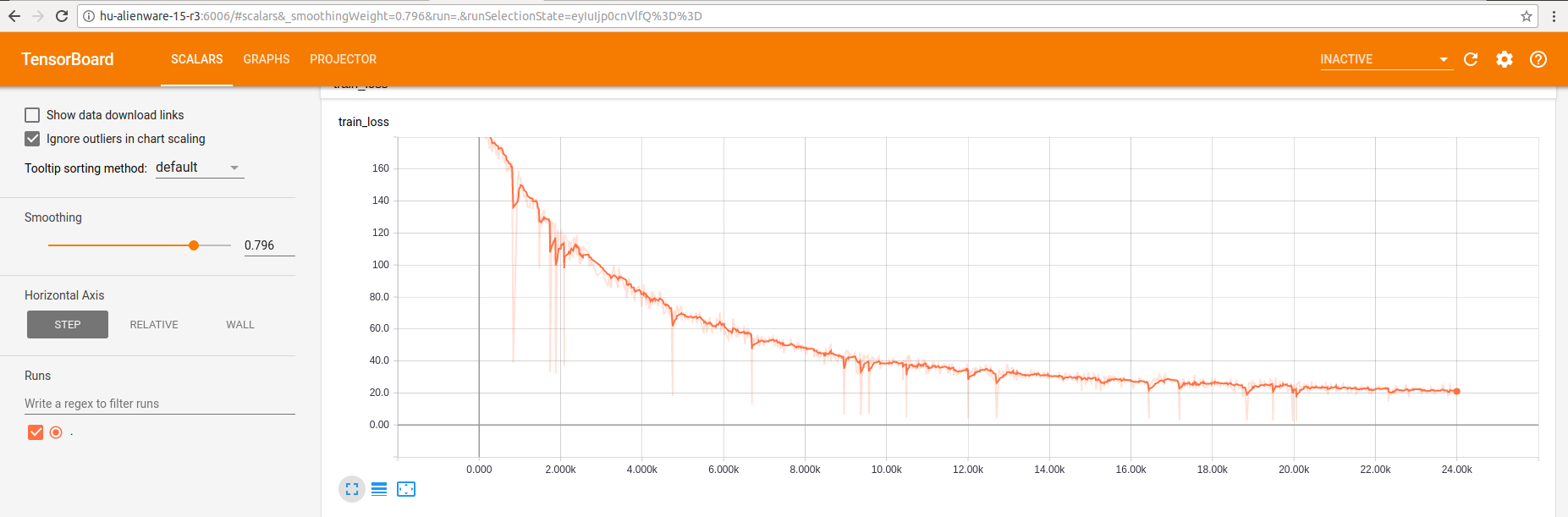

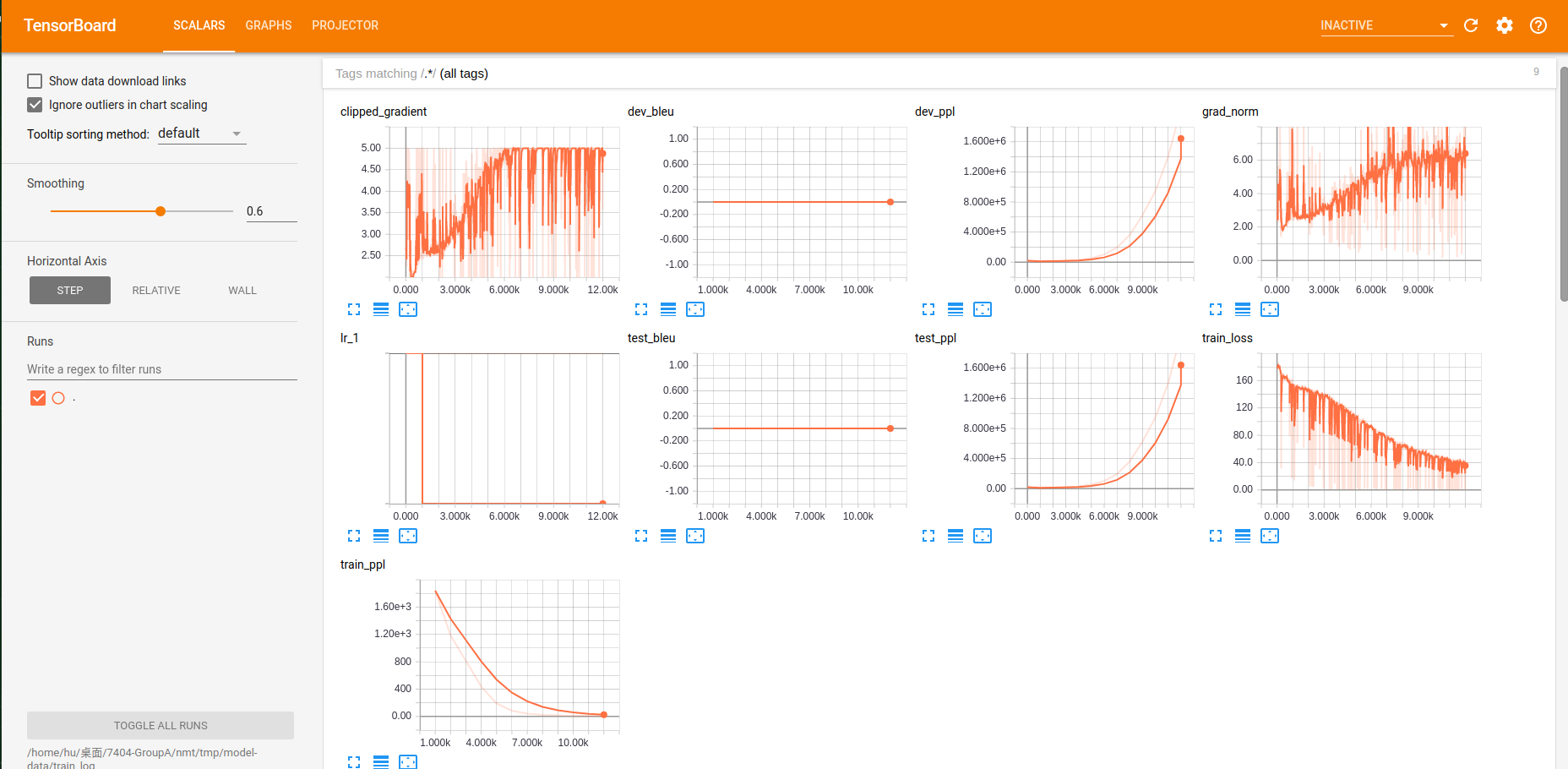

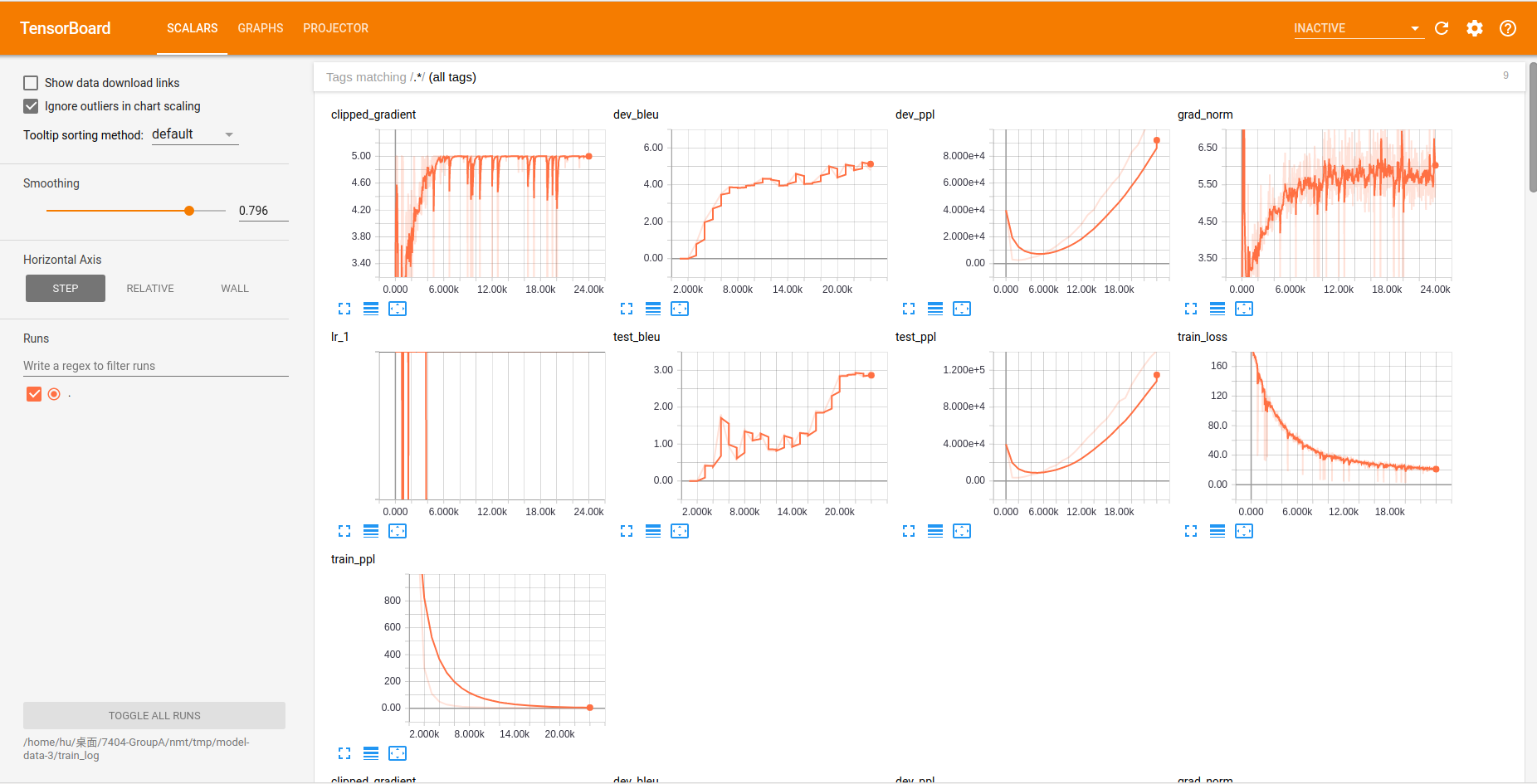

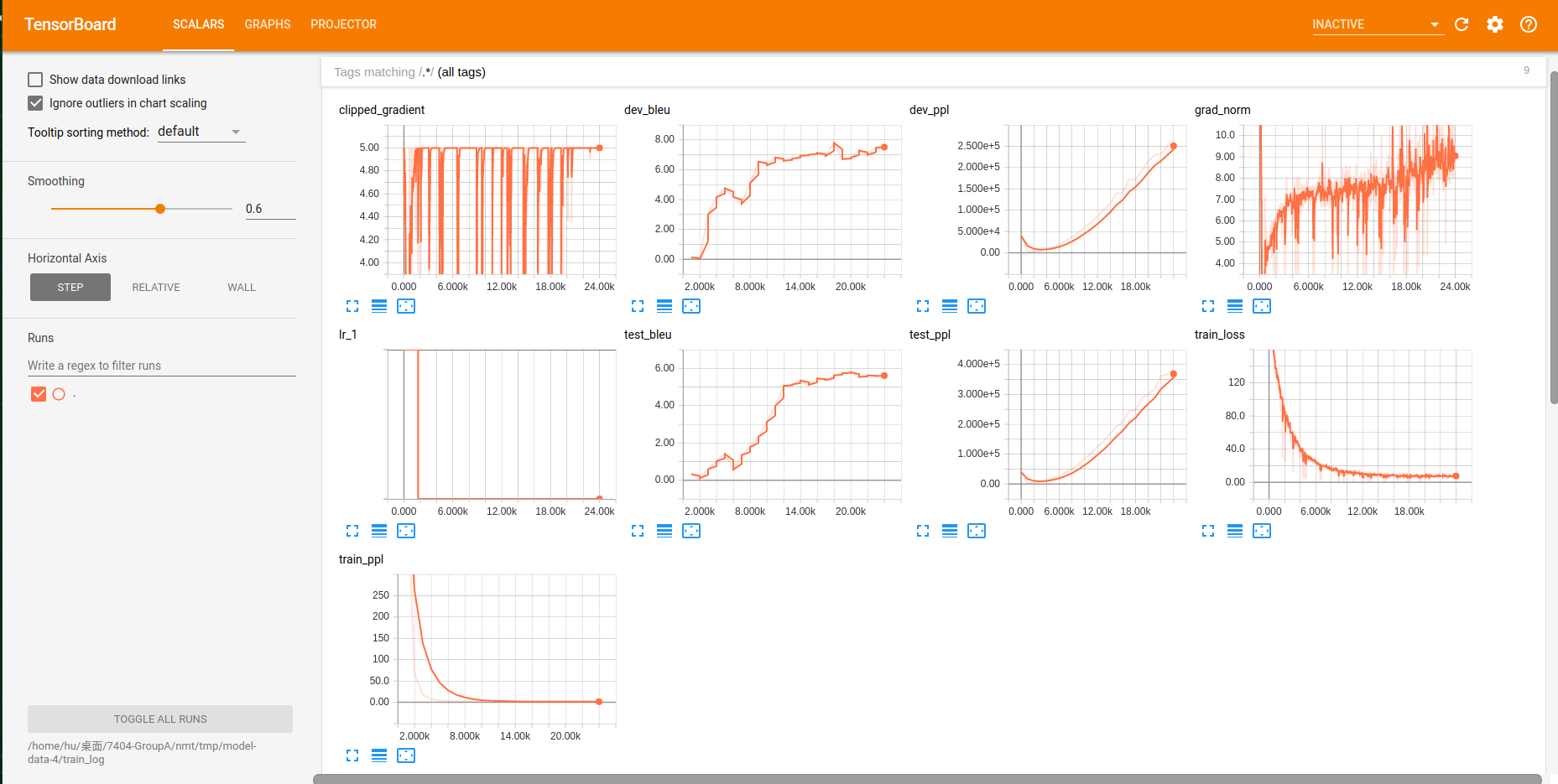

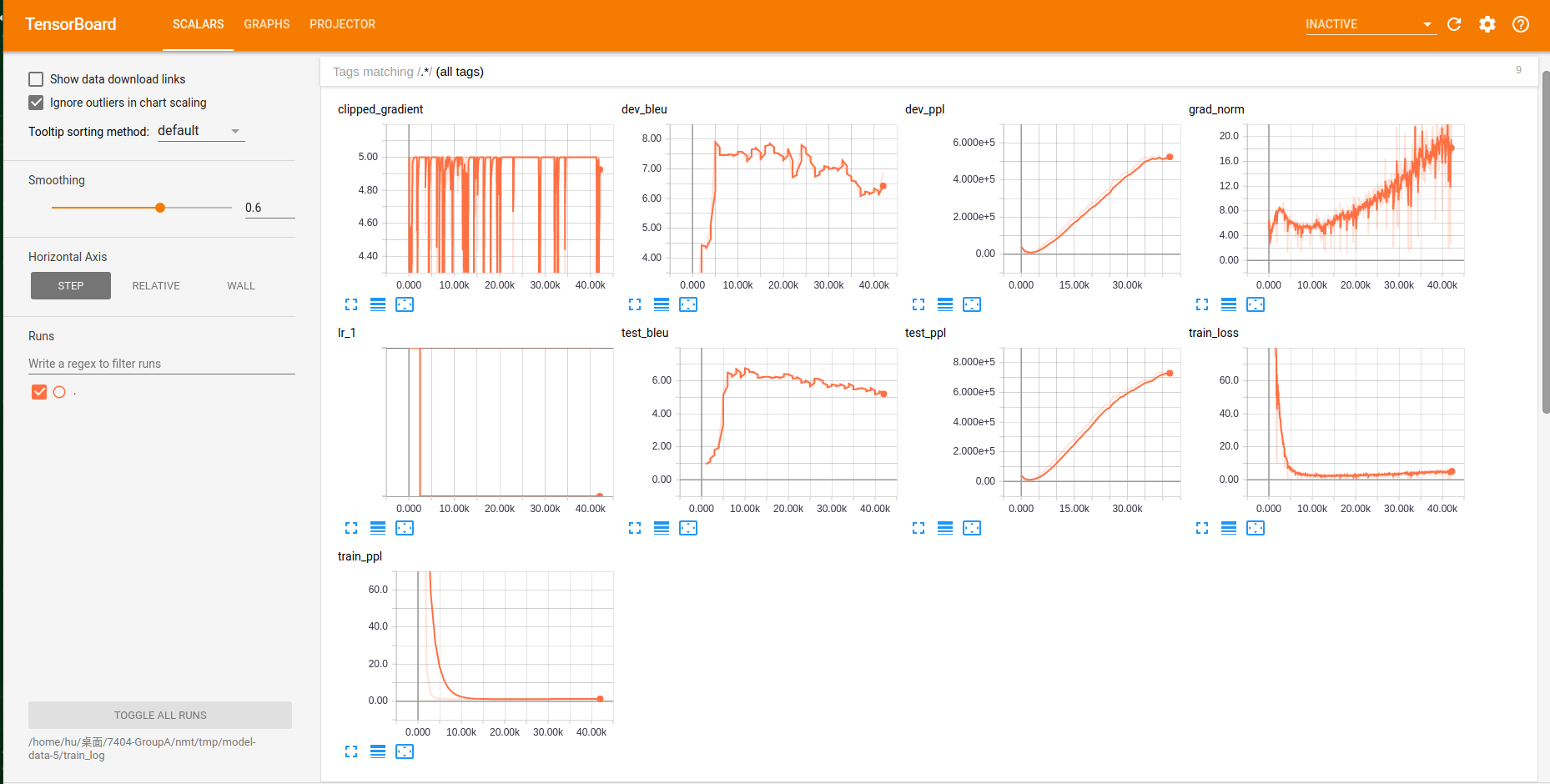

TensorBoard is used to visualize the TensorFlow graph, plot quantitative metrics about the execution of the graph, and show additional data like images that pass through it. The main page of it looks like:

We can use the command below to open TensorBoard:

tensorboard --logdir=/home/hu/桌面/7404-GroupA/nmt/tmp/model-data-4/train_log --port 4004

logdir is the path to the NMT log directory, and port sets the port on which the web UI is served (the default port is 6006).

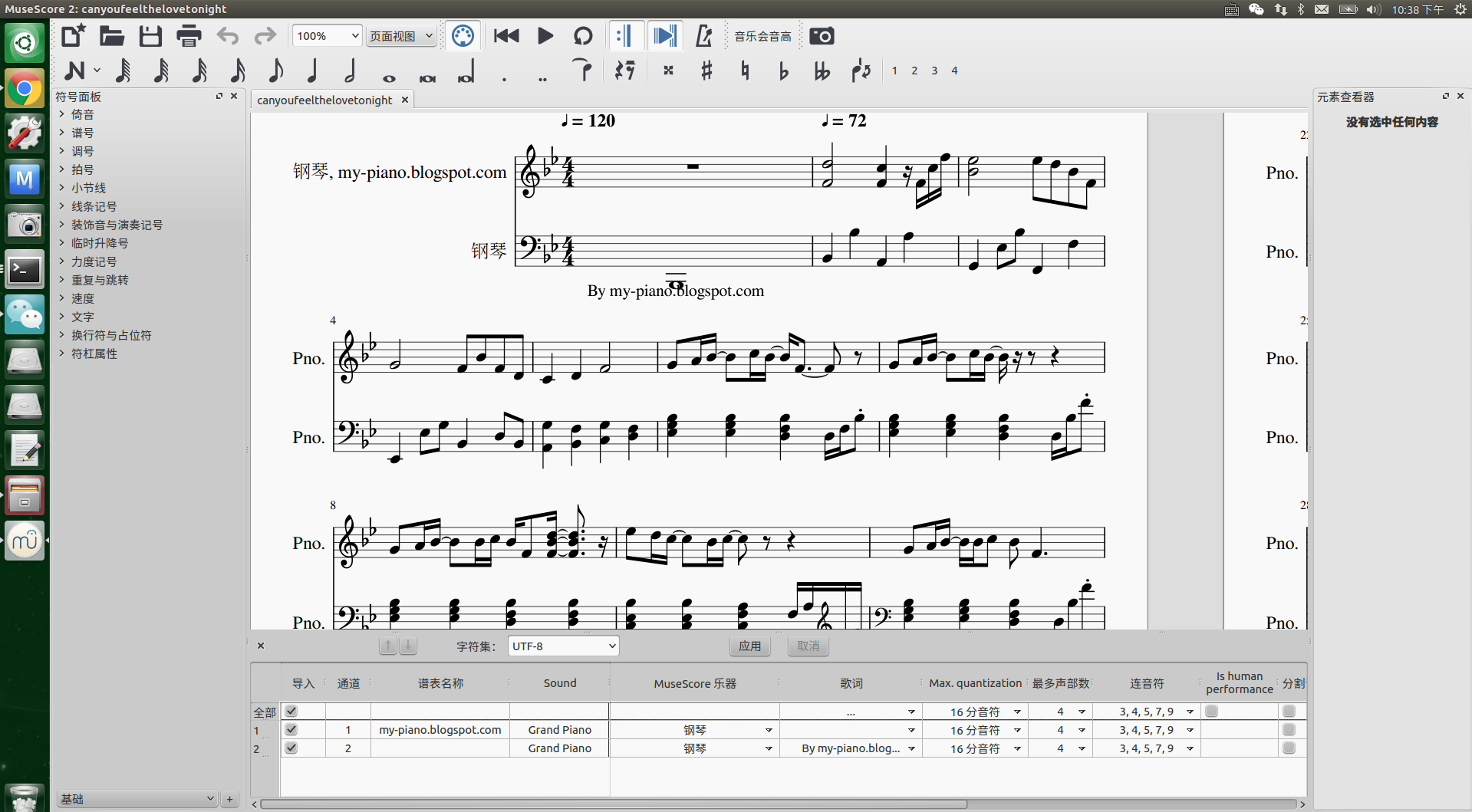

MuseScore is a free scorewriter for Windows, macOS, and Linux, comparable to Finale and Sibelius, supporting a wide variety of file formats and input methods. It is released as free and open-source software under the GNU General Public License.

On Ubuntu 16.04, the UI looks like this:

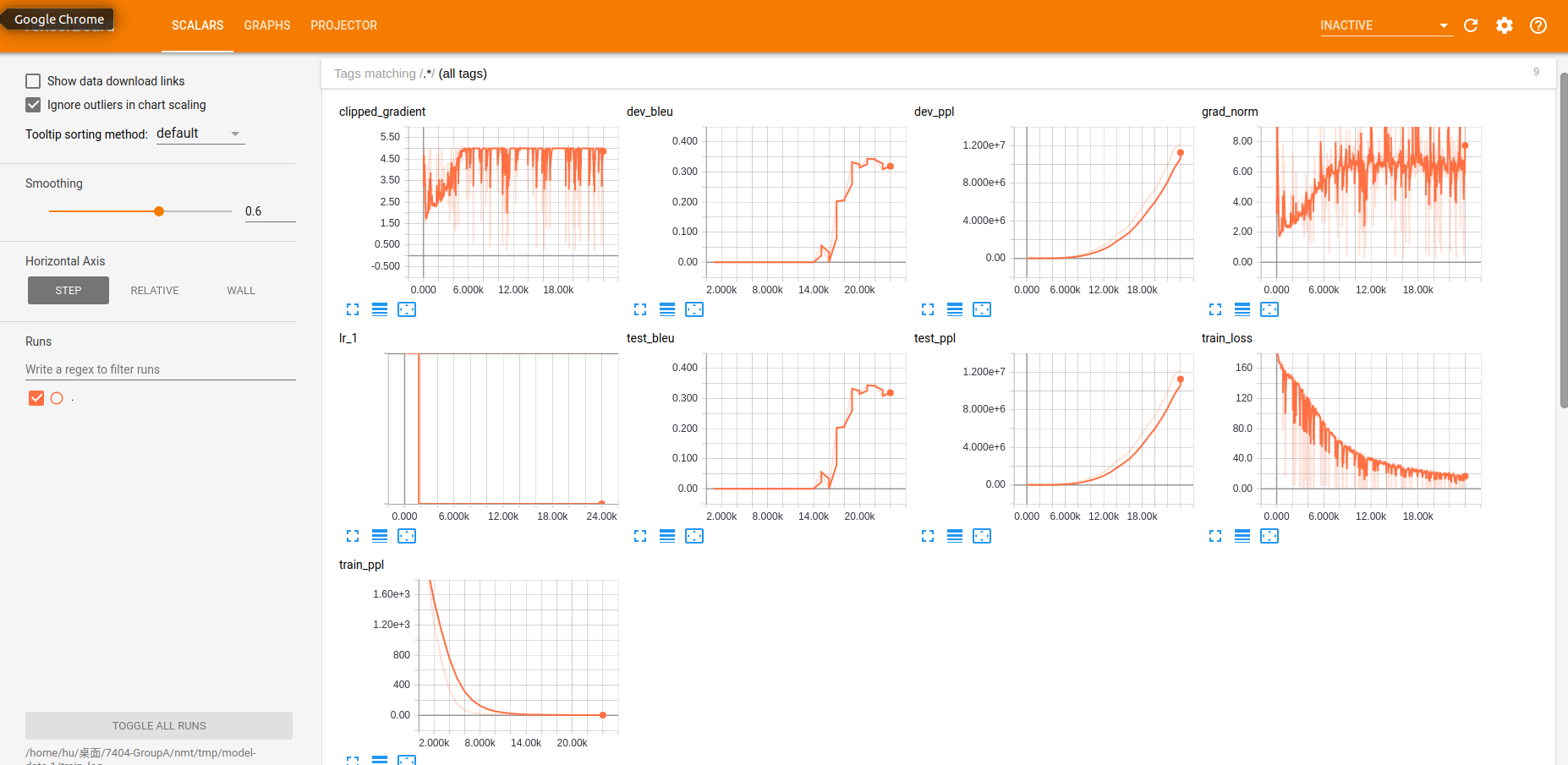

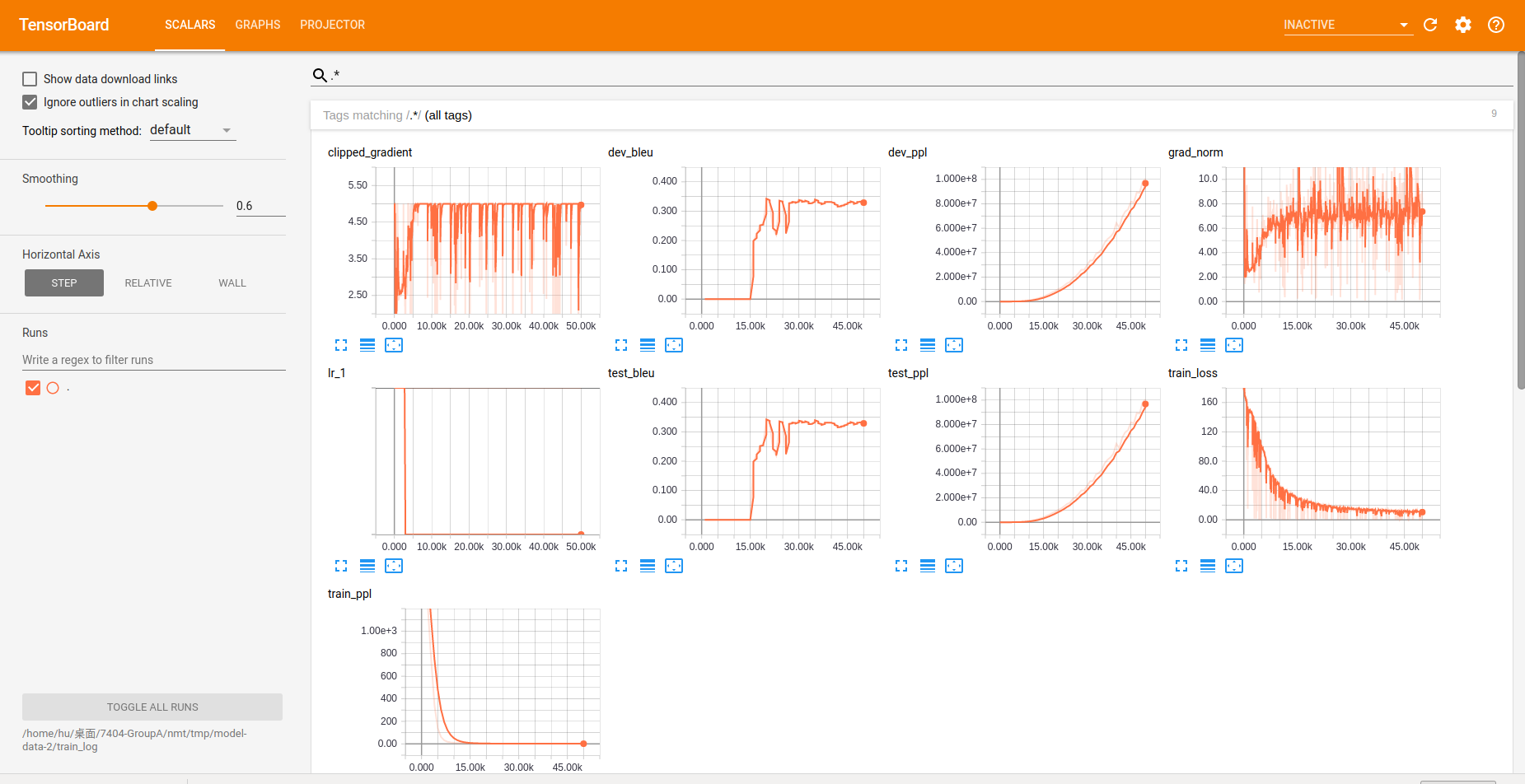

In this part, we've tried different parameter combinations to verify the training results.

Choice

- Chord processing method:

There are two possible methods to extract chord information:

The first one, all-information: extract the chord information together with its duration information (such as F#4-flat-ninth_pentachord).

The second one, single-information: extract only the chord information (such as F#4).

- num_train_steps:

We've tried three different values of num_train_steps: 12000, 24000 and 50000.

- num_units:

We've tried two different values of num_units: 128 and 256.

ATTENTION

If you want to use a larger num_units, please make sure that your device is powerful enough. If you do not have a GPU, or its memory is insufficient, please reduce num_units to avoid out-of-memory errors!
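The parameter combinations above map onto TensorFlow NMT's command-line flags. The sketch below builds one training command per combination of num_train_steps and num_units. The flag names are taken from the TensorFlow NMT tutorial; the directory paths and the function name are placeholders, and the commands actually used in this project are the ones in trainModel.ipynb.

```python
import itertools
import shlex


def build_train_command(data_dir, out_dir, num_train_steps, num_units):
    """Return the argv list for one TensorFlow NMT training run."""
    return [
        "python", "-m", "nmt.nmt",
        "--src=chord", "--tgt=note",          # file suffixes of source and target
        f"--vocab_prefix={data_dir}/vocab",   # expects vocab.chord and vocab.note
        f"--train_prefix={data_dir}/train",
        f"--dev_prefix={data_dir}/dev",
        f"--test_prefix={data_dir}/test",
        f"--out_dir={out_dir}",
        f"--num_train_steps={num_train_steps}",
        f"--num_units={num_units}",
        "--metrics=bleu",
    ]


if __name__ == "__main__":
    # Placeholder directories; one output directory per configuration.
    for steps, units in itertools.product([12000, 24000, 50000], [128, 256]):
        out_dir = f"/tmp/nmt_model_{steps}_{units}"
        print(shlex.join(build_train_command("/tmp/nmt_data", out_dir, steps, units)))
```

Giving each run its own output directory keeps checkpoints and TensorBoard logs from different configurations separate.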

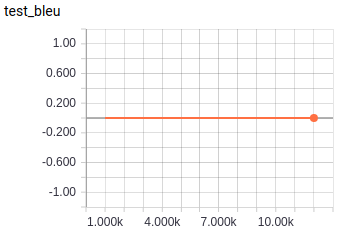

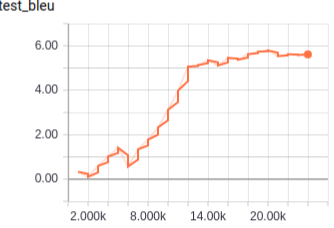

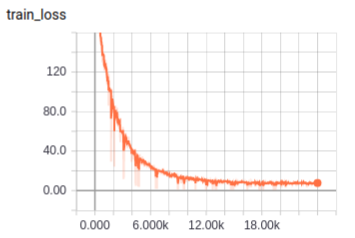

| Chord | num_train_steps | num_units |
|---|---|---|
| All-Information | 12000 | 128 |

| Chord | num_train_steps | num_units |
|---|---|---|
| All-Information | 24000 | 128 |

| Chord | num_train_steps | num_units |
|---|---|---|
| All-Information | 50000 | 128 |

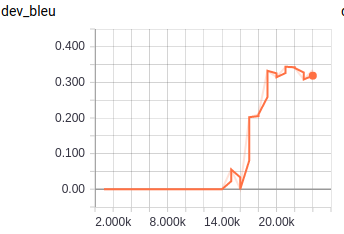

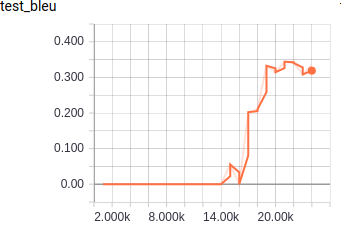

| Chord | num_train_steps | num_units |
|---|---|---|
| Single-Information | 24000 | 128 |

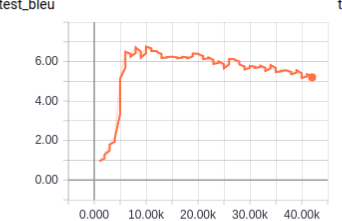

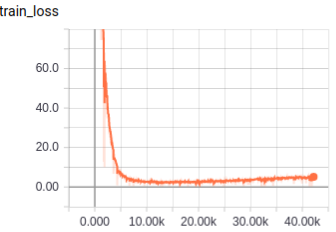

| Chord | num_train_steps | num_units |
|---|---|---|
| Single-Information | 24000 | 256 |

| Chord | num_train_steps | num_units |
|---|---|---|
| Single-Information | 50000 | 512 |

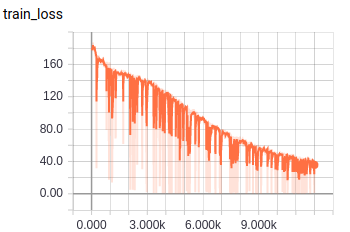

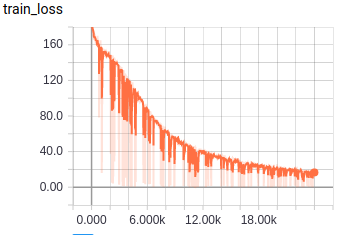

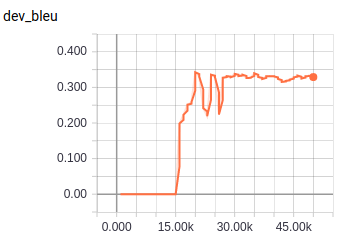

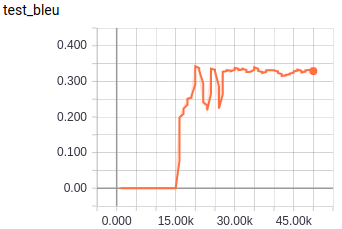

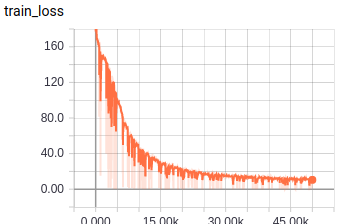

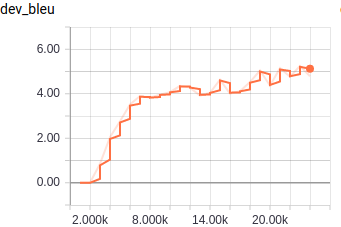

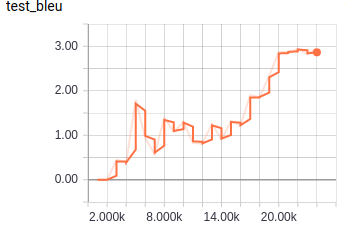

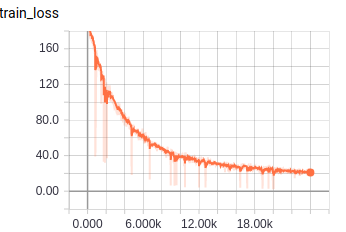

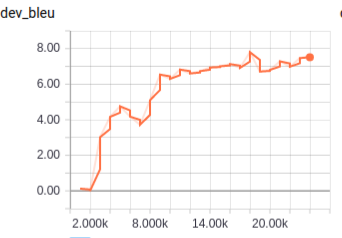

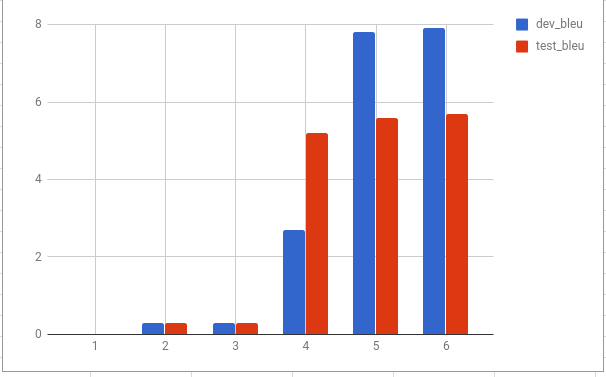

In our experiments, we use the BLEU score and the training loss to evaluate the models.

It is clear that:

- Extracting only the single chord information works better than extracting all the information, including the duration.
- Increasing num_units from 128 to 256 may help the model get a better result. A larger hidden state may help the LSTM capture more information about the relationships between notes and chords.
- The number of training steps should be large enough for the model to train thoroughly. 24000 steps is enough for this model.
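To make the BLEU score used above more concrete, here is a simplified single-reference version applied to note tokens. It is an illustration under stated assumptions, not the scoring code shipped with TensorFlow NMT: it handles one sentence at a time, applies no smoothing, and returns a value between 0 and 1 rather than a percentage-style score.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Simplified single-reference BLEU on token lists, in [0, 1]."""
    if not candidate:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand, ref = ngrams(candidate, n), ngrams(reference, n)
        # Clipped overlap: each candidate n-gram counts at most as often
        # as it appears in the reference.
        overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
        total = sum(cand.values())
        if overlap == 0 or total == 0:
            return 0.0  # no smoothing: an empty n-gram level gives 0
        log_precisions.append(math.log(overlap / total))
    # The brevity penalty punishes candidates shorter than the reference.
    if len(candidate) >= len(reference):
        penalty = 1.0
    else:
        penalty = math.exp(1 - len(reference) / len(candidate))
    return penalty * math.exp(sum(log_precisions) / max_n)


if __name__ == "__main__":
    reference = "C4 E4 G4 C5 E5".split()
    print(bleu(reference, reference))      # identical sequences
    print(bleu(reference[:4], reference))  # correct but too short
```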

Here is the demo of our music transformation.

| River_Flows_In_You | music | Music Score |
|---|---|---|
| River_Flows_In_You_Jazz | music | Music Score |
| Undertale_-_Megalovania_Piano_Added_guitar_fixed_tonality | music | Music Score |
| Undertale_-_Megalovania_Piano_Added_guitar_fixed_tonality_jazz | music | Music Score |