This repo is the implementation of Spatio-angular Dense Network (SADenseNet) for light field reconstruction.

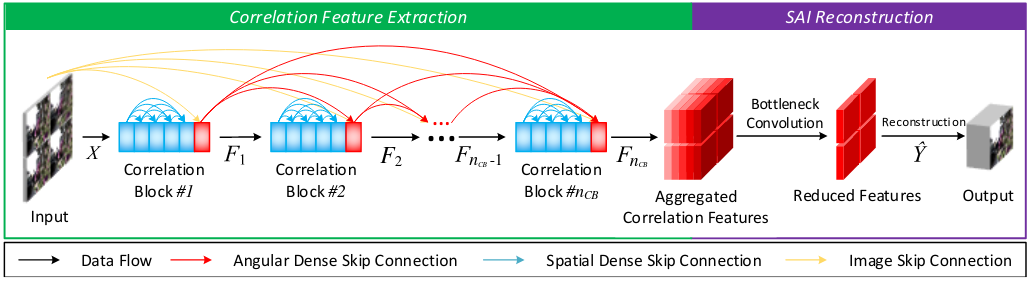

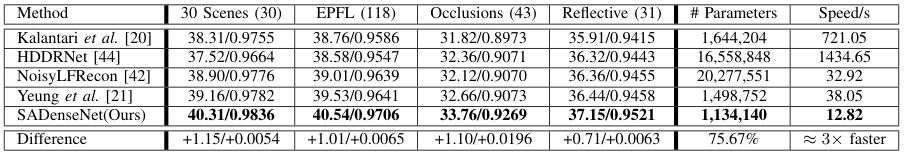

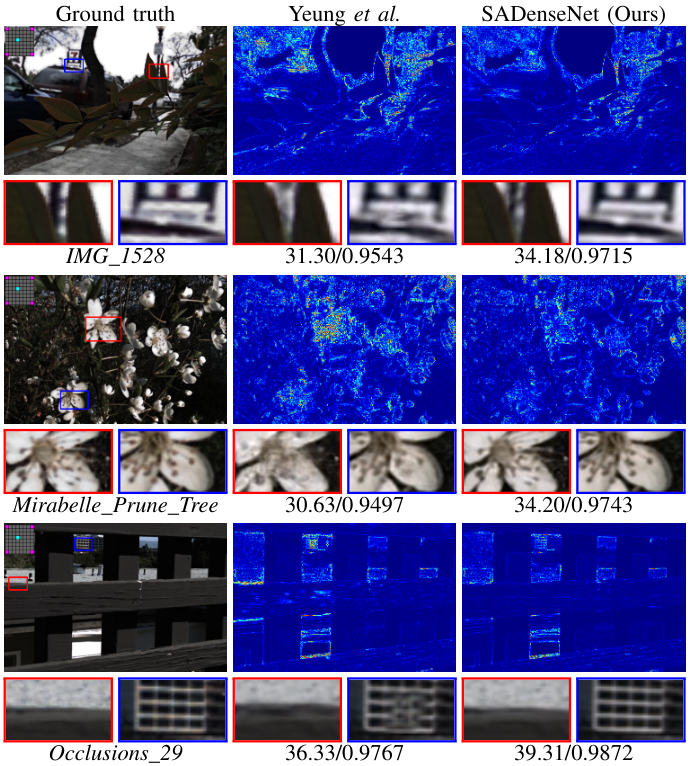

Efficient Light Field Reconstruction via Spatio-Angular Dense Network

Zexi Hu; Henry Wing Fung Yeung; Xiaoming Chen; Yuk Ying Chung; Haisheng Li

IEEE Transactions on Instrumentation and Measurement (2021)

[IEEE Xplore] [arXiv]

Python 3.6

TensorFlow 1.15.5 (TensorFlow 2 is not supported.)

Keras 2.3.1

Run the following command to install the required Python packages:

`pip install -r requirements.txt`

As light field files are substantially larger than regular image files, this repo uses the HDF5 format to store and preload the LF images needed for both training and testing.

To obtain the HDF5 files, two options are provided:

- Download the generated HDF5 files we provide on this page and put them under the `data` folder.
- Do it yourself by following the instructions below.

NOTICE:

- Beware of your disk space as the HDF5 files will be huge.

- As the cloud storage is provided by the University of Sydney, it expires after one year and needs manual renewal. I therefore encourage readers to generate the HDF5 files themselves via the Do It Yourself option presented below. You are still welcome to use the pre-computed HDF5 files, and to remind me to renew the storage if it has expired again.
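To gauge how much space to set aside before generating the files, the uncompressed size of a light field stack can be estimated and compared with the free space on the target drive. The sketch below uses only the standard library. The angular and spatial resolutions, the sample count, and the function names `lf_bytes` and `fits_on_disk` are illustrative assumptions, not values or helpers taken from this repo.

```python
import shutil


def lf_bytes(views_u, views_v, height, width, channels=3, bytes_per_value=1):
    """Uncompressed size in bytes of one light field stored as a dense array."""
    return views_u * views_v * height * width * channels * bytes_per_value


def fits_on_disk(required_bytes, path="."):
    """Return True if `path` has at least `required_bytes` of free space."""
    return shutil.disk_usage(path).free >= required_bytes


# Hypothetical example: 100 light fields, each with 8x8 views of
# 376x541 RGB pixels stored as 8-bit values.
per_sample = lf_bytes(8, 8, 376, 541)
total = 100 * per_sample
print(f"per sample: {per_sample / 2**20:.1f} MiB, total: {total / 2**30:.2f} GiB")
print("enough free space:", fits_on_disk(total))
```

The estimate ignores any compression or extra patches the preparation step may store, so treat it as a rough lower or upper bound depending on how the files are actually written.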

Modify the `datasets` variable in `prepare.py` to specify which datasets to prepare, then follow the instructions below to get the raw data and configuration ready. The HDF5 files will be placed in the directory given by the `dir_data` variable in `config.py`.

30Scenes (Kalantari)

- Visit the page to download the training and test sets and unzip them somewhere. As a result, there should be two folders, namely `TrainingSet` and `TestSet`.
- In `components/datasets/lytro/KalantariDataset.py`, modify `path_raw` to the path containing the two folders mentioned in the last step.
- If you only need the test set, comment out `Dataset.MODE_TRAIN` in the `list` variable in `components/datasets/lytro/KalantariDataset.py` to save time.
- Run `python prepare.py`.

Stanford Lytro Light Field Archive

- Visit the official website to download the `Occlusions` and `Reflective` categories, and unzip them into the same folder.
- In `components/datasets/lytro/StanfordDataset.py`, modify `path_raw` to the unzipped folder.
- Run `python prepare.py`.

EPFL

- The original data was downloaded from the official website or the alternative website and converted from `.mat` files to `.png` files. Unfortunately, the conversion code is lost, but the PNG files are kept here.
- In `components/datasets/lytro/EpflDataset.py`, modify `path_raw` to the unzipped folder.
- Run `python prepare.py`.

- We provide the pre-trained models mentioned in the paper on the release page. Download them and put them somewhere, e.g. the `models` folder.
- In `config.py`, change the corresponding variables in the `Network` section for the model you choose. The default configuration is for the full SADenseNet variant `ISA_651`, which provides the best performance.

Simply run the following command:

`python --model /path/to/model.hdf5 --bgr --diff --mp 1`

Assuming we are benchmarking on 30Scenes:

- `--mp` enables multiprocessing when it is larger than 0; set it to 0 to disable it. Defaults to 1.
- `--bgr` enables image rendering into PNG files in `tmp/test/30Scenes`.
- `--diff` enables error map rendering in `tmp/test/30Scenes.diff` to visualize the reconstruction performance.
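The flag semantics described above can be illustrated with a small `argparse` sketch. This is a hypothetical parser written only to mirror the documented behavior (`--mp` as an integer defaulting to 1, `--bgr` and `--diff` as on/off switches); the repo's actual argument handling may differ, and `build_parser` is a name introduced here for illustration.

```python
import argparse


def build_parser():
    # Hypothetical parser mirroring the flags described in this README.
    parser = argparse.ArgumentParser(description="Benchmark a trained model (sketch)")
    parser.add_argument("--model", required=True, help="path to the .hdf5 model file")
    parser.add_argument("--mp", type=int, default=1,
                        help="multiprocessing is enabled when > 0; 0 disables it")
    parser.add_argument("--bgr", action="store_true", help="render images to PNG files")
    parser.add_argument("--diff", action="store_true", help="render error maps")
    return parser


# Parse an explicit argument list so the sketch runs without a command line.
args = build_parser().parse_args(["--model", "/path/to/model.hdf5", "--bgr", "--mp", "0"])
use_multiprocessing = args.mp > 0
print(args.model, use_multiprocessing, args.bgr, args.diff)
```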

The results are written to `tmp/test/test_model_30Scenes.csv` with PSNR, SSIM and running time for each sample:

sample,psnr,ssim,time

IMG_1085_eslf,44.03,0.9859,1.58

IMG_1086_eslf,46.95,0.9925,1.66

IMG_1184_eslf,44.04,0.9789,1.08

IMG_1187_eslf,43.77,0.9837,1.16
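To compare models, it is often handy to reduce the per-sample CSV to dataset-level averages. The following is a minimal sketch using only the standard library; it assumes the column names shown above (`sample`, `psnr`, `ssim`, `time`), and `summarize` is a helper name introduced here, not part of this repo.

```python
import csv
import io
from statistics import mean


def summarize(csv_file):
    """Return (mean PSNR, mean SSIM, total time) over all rows of a result CSV."""
    rows = list(csv.DictReader(csv_file))
    psnr = mean(float(r["psnr"]) for r in rows)
    ssim = mean(float(r["ssim"]) for r in rows)
    total_time = sum(float(r["time"]) for r in rows)
    return psnr, ssim, total_time


# The four sample rows shown above. For a real run, pass
# open("tmp/test/test_model_30Scenes.csv", newline="") instead.
sample = io.StringIO(
    "sample,psnr,ssim,time\n"
    "IMG_1085_eslf,44.03,0.9859,1.58\n"
    "IMG_1086_eslf,46.95,0.9925,1.66\n"
    "IMG_1184_eslf,44.04,0.9789,1.08\n"
    "IMG_1187_eslf,43.77,0.9837,1.16\n"
)
psnr, ssim, total_time = summarize(sample)
print(f"mean PSNR {psnr:.2f}, mean SSIM {ssim:.4f}, total time {total_time:.2f}")
```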

If you find our research helpful, please cite the paper.

@article{hu_TIM2021_SADenseNet,

title={Efficient Light Field Reconstruction via Spatio-Angular Dense Network},

author={Hu, Zexi and Yeung, Henry Wing Fung and Chen, Xiaoming and Chung, Yuk Ying and Li, Haisheng},

journal={IEEE Transactions on Instrumentation and Measurement},

volume={70},

pages={1--14},

year={2021},

publisher={IEEE}

}