This project uses Retrieval-Augmented Generation (RAG) to build a medical assistant that answers questions by retrieving relevant entries from a medical Q&A dataset on Hugging Face. Because the generative model answers from the retrieved entries rather than from its own parameters alone, its responses stay grounded in the dataset's content.

Important Note: This medical assistant is not a substitute for professional medical advice, diagnosis, or treatment. Always consult a qualified healthcare provider for any medical concerns.
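The retrieve-then-generate loop can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's code: the prompt template, the `question`/`answer` keys on each retrieved hit, and the helper names `build_prompt` and `generate` are hypothetical. It assumes Ollama serves the Gemma2 2B model under the tag `gemma2:2b` on its default port 11434, and it calls Ollama's `/api/generate` endpoint with streaming turned off.

```python
import json
import urllib.request

# Hypothetical prompt template; the project's real prompt may be worded differently.
PROMPT_TEMPLATE = """You are a medical assistant. Answer the QUESTION using only the CONTEXT.
If the CONTEXT does not contain the answer, say that you do not know.

QUESTION: {question}

CONTEXT:
{context}"""


def build_prompt(question: str, hits: list[dict]) -> str:
    """Join the retrieved Q&A pairs into one context block and fill the template."""
    context = "\n\n".join(f"Q: {hit['question']}\nA: {hit['answer']}" for hit in hits)
    return PROMPT_TEMPLATE.format(question=question, context=context)


def generate(prompt: str, model: str = "gemma2:2b",
             url: str = "http://localhost:11434/api/generate") -> str:
    """Send one non-streaming generation request to a local Ollama server."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request) as response:
        # With streaming off, Ollama returns a single JSON object whose
        # "response" field holds the generated text.
        return json.loads(response.read())["response"]
```

For example, `generate(build_prompt(question, hits))` would answer `question` from the `hits` returned by a search. Keeping prompt construction separate from the HTTP call makes the prompt easy to inspect and test without a running model.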

- Dataset: keivalya/MedQuad-MedicalQnADataset

- LLM: Gemma2 2B

- Embedding model: multi-qa-distilbert-cos-v1

- Database: Elasticsearch

- Python 3.10 or higher

- Docker


- Create a Python virtual environment and run `pip install -r requirements.txt` to install the required dependencies.

- Run `./start.sh` to start the Docker containers in detached mode, wait for them to initialize, pull the necessary model into the `ollama` container, and then execute the `index_document.py` script to index your documents.

- Run `streamlit run app.py`, and you can start asking questions to the medical assistant!

- Optionally, you can run `python retrieval_evaluation.py` to evaluate the retrieval results.
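The contents of `index_document.py` are not shown here, but an Elasticsearch mapping suited to `multi-qa-distilbert-cos-v1`, which produces 768-dimensional embeddings intended for cosine similarity, might look like the sketch below. The index settings, the field names `question`, `answer`, and `question_answer_vector`, and the `to_document` helper are assumptions for illustration; `encode` stands for any function that turns text into a vector.

```python
from collections.abc import Callable, Sequence

# Output size of multi-qa-distilbert-cos-v1.
EMBEDDING_DIMS = 768

# Hypothetical index layout; the project's real field names may differ.
INDEX_SETTINGS = {
    "settings": {"number_of_shards": 1, "number_of_replicas": 0},
    "mappings": {
        "properties": {
            "id": {"type": "keyword"},
            "question": {"type": "text"},
            "answer": {"type": "text"},
            "question_answer_vector": {
                "type": "dense_vector",
                "dims": EMBEDDING_DIMS,
                "index": True,
                "similarity": "cosine",
            },
        }
    },
}


def to_document(doc_id: str, question: str, answer: str,
                encode: Callable[[str], Sequence[float]]) -> dict:
    """Build one document, embedding the question and answer together."""
    vector = [float(x) for x in encode(f"{question} {answer}")]
    if len(vector) != EMBEDDING_DIMS:
        raise ValueError(f"expected {EMBEDDING_DIMS} dimensions, got {len(vector)}")
    return {"id": doc_id, "question": question, "answer": answer,
            "question_answer_vector": vector}
```

With `sentence-transformers`, `encode` could be `SentenceTransformer("multi-qa-distilbert-cos-v1").encode`. With the 8.x `elasticsearch` Python client, the index could be created with `es.indices.create(index=..., settings=INDEX_SETTINGS["settings"], mappings=INDEX_SETTINGS["mappings"])` and each document added with `es.index(index=..., document=doc)`.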

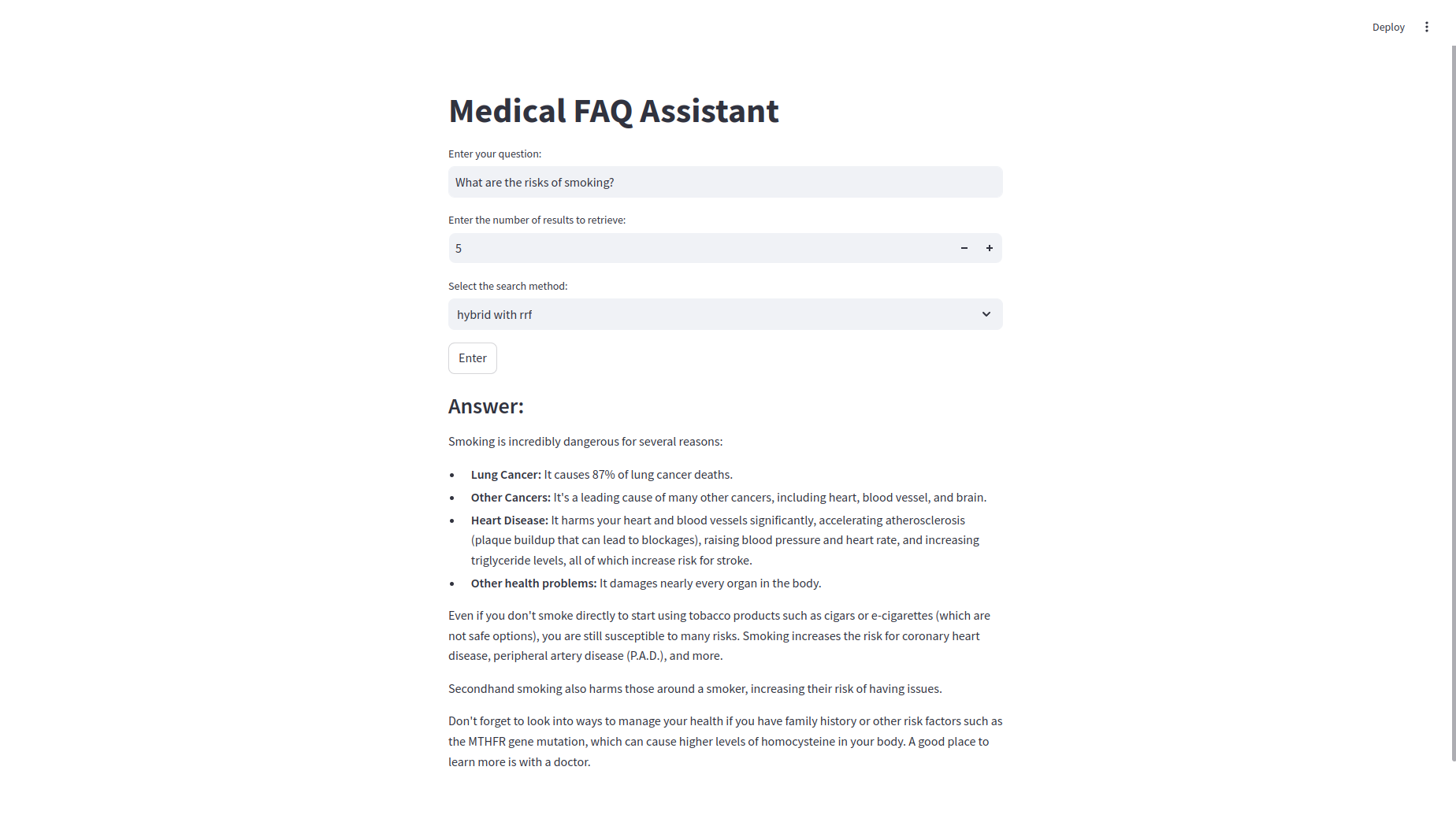

- I implemented four search methods: keyword search, KNN (vector) search, hybrid search, and hybrid search with reciprocal rank fusion (document reranking). In the app, you can choose the search method and the number of results to return.

- I used only a small subset of the data to generate the ground truth for the retrieval evaluation.
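The four search methods map naturally onto Elasticsearch request bodies. The sketch below assumes Elasticsearch 8.x and hypothetical field names (`question`, `answer`, and a `question_answer_vector` dense-vector field); in 8.x, a request containing both `query` and `knn` matches documents from either and scores each hit by the sum of the two scores. Reciprocal rank fusion is done client-side here with the commonly used constant k = 60; the project's actual fields, boosts, and constant may differ.

```python
from collections import defaultdict

# Hypothetical field names; "^3" boosts matches in the question field.
TEXT_FIELDS = ["question^3", "answer"]
VECTOR_FIELD = "question_answer_vector"


def keyword_query(text: str, size: int) -> dict:
    """BM25 full-text search over the text fields."""
    return {"size": size,
            "query": {"multi_match": {"query": text, "fields": TEXT_FIELDS,
                                      "type": "best_fields"}}}


def knn_query(vector: list[float], size: int, num_candidates: int = 100) -> dict:
    """Approximate nearest-neighbour search on the dense_vector field."""
    return {"size": size,
            "knn": {"field": VECTOR_FIELD, "query_vector": vector, "k": size,
                    # num_candidates must be at least k
                    "num_candidates": max(num_candidates, size)}}


def hybrid_query(text: str, vector: list[float], size: int) -> dict:
    """Keyword and kNN clauses in one request; Elasticsearch sums their scores."""
    body = keyword_query(text, size)
    body["knn"] = knn_query(vector, size)["knn"]
    return body


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked id lists: score(d) = sum over lists of 1 / (k + rank of d)."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)
```

To fuse, one could run the keyword and kNN requests separately (for example `es.search(index=..., **body)`), collect each response's `hit["_id"]` values in rank order, and pass both lists to `reciprocal_rank_fusion`. Because RRF uses only ranks, it sidesteps comparing BM25 scores with cosine similarities, which live on different scales.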

Warning: This project has only been tested on Ubuntu 22.04. Compatibility with other operating systems is not guaranteed.

Retrieval evaluation results for each search method:

| Search Method | Hit Rate | Mean Reciprocal Rank (MRR) |
|---|---|---|
| Keyword Search | 0.5842 | 0.4132 |
| KNN Search | 0.8406 | 0.6795 |
| Hybrid Search | 0.6190 | 0.4372 |
| Hybrid Search with Reciprocal Rank Fusion | 0.8371 | 0.6218 |
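Hit rate and MRR can be computed from a relevance matrix: one row per ground-truth question, one boolean per returned result, `True` where the result is the document the question was generated from. The sketch assumes exactly one relevant document per question; `relevance_row` and the other names are illustrative and not taken from `retrieval_evaluation.py`.

```python
def relevance_row(expected_id: str, result_ids: list[str]) -> list[bool]:
    """Mark which returned documents match the ground-truth document."""
    return [doc_id == expected_id for doc_id in result_ids]


def hit_rate(relevance: list[list[bool]]) -> float:
    """Fraction of questions whose relevant document appears anywhere in the results."""
    return sum(any(row) for row in relevance) / len(relevance)


def mean_reciprocal_rank(relevance: list[list[bool]]) -> float:
    """Average of 1 / rank of the first relevant result (0 when it is missing)."""
    total = 0.0
    for row in relevance:
        for rank, is_relevant in enumerate(row, start=1):
            if is_relevant:
                total += 1.0 / rank
                break
    return total / len(relevance)
```

For example, if three questions find their source document at rank 2, at rank 1, and not at all, the hit rate is 2/3 and the MRR is (1/2 + 1 + 0) / 3 = 0.5.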