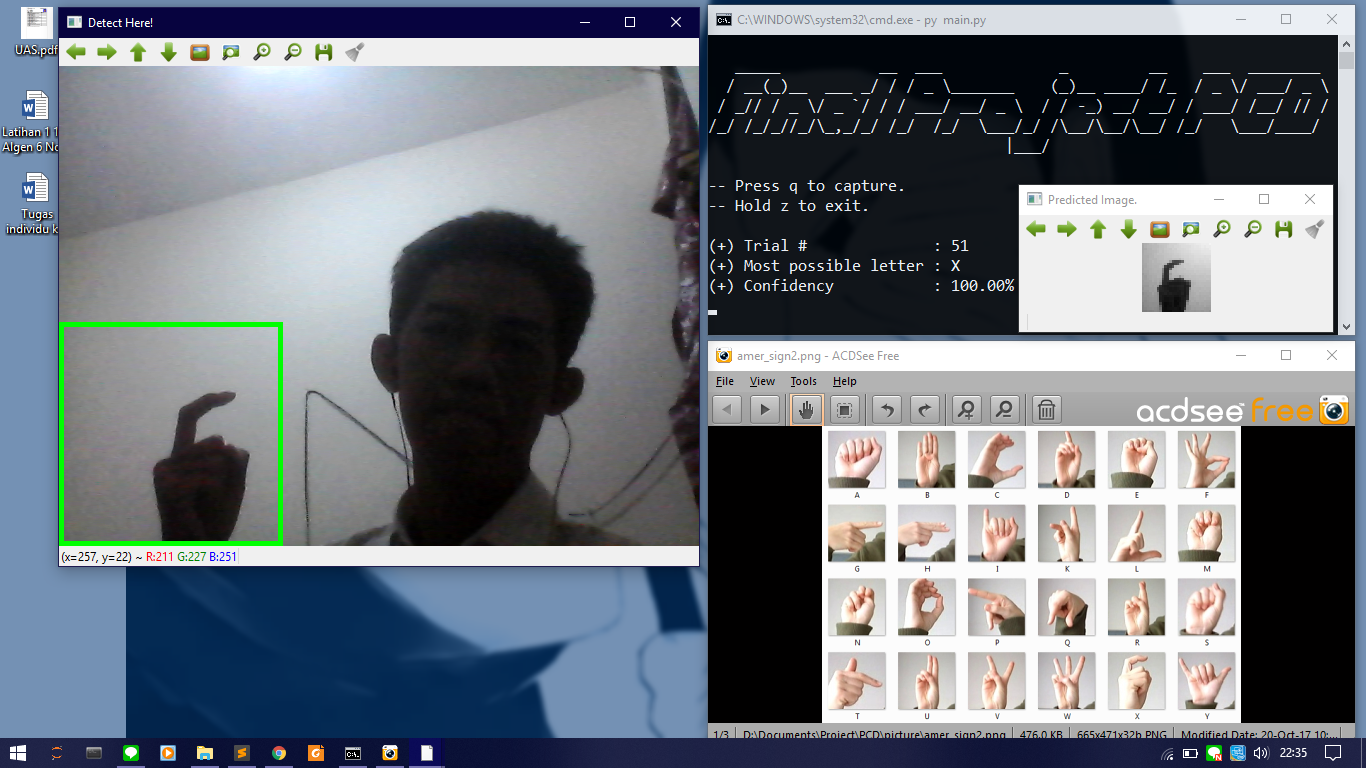

A simple detector that predicts sign language letters from webcam input.

This project was done as the final project for the PCD course in the undergraduate Computer Science programme at Universitas Gadjah Mada. PCD stands for Pengolahan Citra Digital in Bahasa Indonesia, which translates to Digital Image Processing (DIP) in English. The project uses a Convolutional Neural Network (CNN) as the prediction model to determine which letter the input image shows, and uses the OpenCV library to open the webcam and apply digital image processing techniques. The sign language used here is American Sign Language (ASL).

This project runs from the command line. All you need is a webcam, Python 3, and a few libraries. Download this repo first, then:

- Open a command line.

- Change directory to where this repo is located. For example, if this repo is in `C:\Users\Rimba\Downloads`, type `cd C:\Users\Rimba\Downloads\Final-Project-PCD` in the command line.

- Run `main.py` with Python by typing `py main.py` or `python3 main.py` in the command line.

- Wait until the program finishes importing the libraries and loading the model. The webcam window should appear once it has finished.

- Try one of the sign language letters in the `amer_sign2.png` file. Use your right hand and place it inside the green box in the webcam window.

- Press the `q` key to capture. The predicted image and its result will appear in the command line. Hold the `z` key to exit. Make sure the webcam window is the active window while pressing these keys.

The following libraries are required:

- NumPy. Each image is treated as a 2D matrix with 3 layers (red, green, and blue), and NumPy is the most widely used library for handling such arrays.

- OpenCV for Python 3. Used to show the webcam feed and apply the digital image processing (DIP) techniques.

- Keras. Used to load the prediction model and predict the letter from a given image.

Installing with conda is recommended over `pip install`. Type these commands to install the libraries:

- `conda install -c anaconda numpy`
- `conda install -c conda-forge opencv`
- `conda install -c conda-forge keras`

To import NumPy, OpenCV, and Keras, use `import numpy`, `import cv2`, and `import keras`, respectively.
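Before running `main.py`, it can help to confirm that all three libraries are installed. The following is a small sketch, not part of the repo, that uses the standard library's `importlib.util.find_spec` to look up each module without importing it; the `REQUIRED` mapping and the `missing_modules` helper are hypothetical names introduced here.

```python
import importlib.util

# Display name -> module name used in `import` (OpenCV is imported as cv2).
REQUIRED = {"NumPy": "numpy", "OpenCV": "cv2", "Keras": "keras"}

def missing_modules(required=REQUIRED):
    """Return display names of libraries whose top-level module cannot be found."""
    return [name for name, module in required.items()
            if importlib.util.find_spec(module) is None]

if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("NumPy, OpenCV and Keras are all installed.")
```

This only checks that the modules can be located; a library that is present but broken would still fail at `import` time.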

The program works as follows:

- Import the libraries, load the model, and open the webcam with a green box in the bottom-left corner.

- Crop the 224x224 pixel image inside the box and use it as the input image.

- If `q` is pressed, the input image goes through these steps in order:

  - Contrast stretching. This stretches the pixel intensities of the image to the range [a, b]. By default, a is 0 and b is 255 in this project.

  - Grayscaling. Each pixel's intensity is replaced with the mean of its R, G, and B intensity values.

  - Downsampling. The prediction model only accepts 28x28 pixel input images, so the input image is resized (downsampled) using an interpolation method. The default method is linear interpolation.

  - The prediction model predicts which letter the input image shows.

- Otherwise, if `z` is pressed, the program closes the webcam window and exits.
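The preprocessing steps above can be sketched in NumPy as follows. This is a hedged approximation, not the code in `main.py`. The box position (flush with the bottom-left corner), global min-max contrast stretching over all three channels, the half-pixel bilinear resize, and the `(1, 28, 28, 1)` output shape are all assumptions, and the helper names are hypothetical. Contrast stretching uses the common linear mapping `s = (r - r_min) * (b - a) / (r_max - r_min) + a`.

```python
import numpy as np

BOX_SIZE = 224  # from the description above
MARGIN = 0      # hypothetical: distance of the box from the frame edges

def crop_box(frame, size=BOX_SIZE, margin=MARGIN):
    """Crop a size x size patch anchored at the bottom-left corner of the frame."""
    top = frame.shape[0] - margin - size
    return frame[top:top + size, margin:margin + size]

def contrast_stretch(img, a=0.0, b=255.0):
    """Linearly map the image's [min, max] intensity range onto [a, b]."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:  # flat image: nothing to stretch
        return np.full_like(img, a)
    return (img - lo) * (b - a) / (hi - lo) + a

def to_gray(img):
    """Replace each pixel with the mean of its three colour values."""
    return img.mean(axis=2)

def downsample(img, out_h=28, out_w=28):
    """Bilinear resize of a 2D image, mapping output pixel centres into the input."""
    in_h, in_w = img.shape
    ys = np.clip((np.arange(out_h) + 0.5) * in_h / out_h - 0.5, 0, in_h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * in_w / out_w - 0.5, 0, in_w - 1)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, in_h - 1), np.minimum(x0 + 1, in_w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    # Interpolate along x on the two neighbouring rows, then along y.
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy

def preprocess(frame):
    """Crop -> contrast stretch -> grayscale -> 28x28, shaped as one model input."""
    small = downsample(to_gray(contrast_stretch(crop_box(frame))))
    return small.reshape(1, 28, 28, 1)  # assumed input layout of the Keras model
```

In the real program OpenCV can do the resize directly, e.g. `cv2.resize(gray, (28, 28), interpolation=cv2.INTER_LINEAR)` (note that `dsize` is given as width, height). Because grayscaling takes a plain mean, it gives the same result whether the frame is in OpenCV's BGR order or RGB. The returned array would then be passed to the loaded Keras model's `predict` method.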

Additional features besides predicting letters:

- If you type `py main.py mine` in the command line instead of just `py main.py`, the webcam captures the original image on every trial whose number is a multiple of 5 and saves it to the `mined\big` folder. The downsampled input image (the 28x28 px grayscale image) is always saved to `mined\small`, even when the command is just `py main.py`. These mined images can be used for purposes such as generating training data and testing the model on big images containing several hands. Saved images are named in the format `DD-MM-YY HH-MM-SS.JPG`.
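The saving rule above can be sketched as two small helpers. This is an illustration under assumptions, not the project's code: trials are assumed to be counted from 1, `mine` is assumed to be the first command-line argument, and `mined_filename` and `save_targets` are hypothetical names. Writing the files themselves would be done with OpenCV's `cv2.imwrite`, which is left out so the sketch runs without a webcam.

```python
import sys
from datetime import datetime
from pathlib import Path

def mined_filename(now):
    """Return a file name in the DD-MM-YY HH-MM-SS.JPG format."""
    return now.strftime("%d-%m-%y %H-%M-%S") + ".JPG"

def save_targets(trial, mine_mode):
    """Return the folders a capture is written to on this trial.

    Assumes trials are counted from 1, so trials 5, 10, 15, ... are multiples of 5.
    """
    targets = [Path("mined") / "small"]        # 28x28 grayscale input: always saved
    if mine_mode and trial % 5 == 0:
        targets.append(Path("mined") / "big")  # original frame: mine mode only
    return targets

if __name__ == "__main__":
    # Assumed invocation: `py main.py mine` gives sys.argv == ["main.py", "mine"].
    mine_mode = sys.argv[1:2] == ["mine"]
    name = mined_filename(datetime.now())
    for trial in range(1, 11):
        for folder in save_targets(trial, mine_mode):
            print(trial, folder / name)
```

Hyphens stand in for colons in the time part; Windows does not allow `:` in file names, and `pathlib` prints `mined\small` there and `mined/small` elsewhere. Two captures within the same second would get the same name, so the later file would overwrite the earlier one.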

This project still has some weaknesses that could be addressed:

- Poor prediction performance when the background behind the hand is not bright/white. I think this is the biggest weakness of this project. A possible solution is to apply a background subtraction technique.

- Good at predicting only some letters, even when the background is bright. The project mostly succeeds in predicting C, F, L, O, V, W, and X. The letters A, B, E, I, K, M, N, P, Q, S, and U are rarely predicted correctly. J and Z cannot be predicted, since signing them requires moving the hand. The remaining letters are predicted correctly at an average rate, but not as well as the first seven. This weakness could be addressed by using a different model.

- Too few DIP techniques are used, and the ConvNet model is too vanilla. I used the kernel here to create the model. More advanced DIP techniques and neural network configurations should be used.

- Predicts only when triggered by the `q` key. It could predict on the live stream using a sliding window technique, but only if the prediction model is good enough.
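The two remedies suggested above, background subtraction and sliding-window prediction, could look roughly like this. Both are hedged sketches that the project does not implement. `whiten_background` uses the simplest form of background subtraction (differencing against a reference frame of the empty scene, with a hypothetical threshold of 30) and paints everything else white, since the model does better on bright backgrounds. `sliding_windows` scans a frame with 224x224 windows at a hypothetical stride of 112 px.

```python
import numpy as np

def whiten_background(frame, background, threshold=30):
    """Keep pixels that differ from the empty-scene reference; paint the rest white.

    frame, background: uint8 arrays of shape (H, W, 3) from the same fixed camera.
    threshold: hypothetical per-channel difference above which a pixel counts as hand.
    """
    # Widen to int16 so the subtraction cannot wrap around like uint8 would.
    diff = np.abs(frame.astype(np.int16) - background.astype(np.int16))
    # Foreground if any colour channel changed by more than the threshold.
    foreground = (diff > threshold).any(axis=2)
    out = np.full_like(frame, 255)       # start from an all-white image
    out[foreground] = frame[foreground]  # copy back only the foreground pixels
    return out, foreground

def sliding_windows(frame, size=224, stride=112):
    """Yield (top, left, patch) for every size x size window at the given stride."""
    h, w = frame.shape[:2]
    for top in range(0, h - size + 1, stride):
        for left in range(0, w - size + 1, stride):
            yield top, left, frame[top:top + size, left:left + size]

if __name__ == "__main__":
    background = np.full((480, 640, 3), 200, dtype=np.uint8)  # bright empty scene
    frame = background.copy()
    frame[300:400, 50:150] = 0  # a dark square stands in for a hand
    cleaned, mask = whiten_background(frame, background)
    print("foreground pixels:", int(mask.sum()))  # 10000
    print("windows:", sum(1 for _ in sliding_windows(cleaned)))  # 12
```

OpenCV also provides adaptive subtractors such as `cv2.createBackgroundSubtractorMOG2()`, which cope better with lighting changes than a single reference frame. For streaming, each window would go through the same preprocessing and prediction as a `q` capture; a 640x480 frame already yields 12 windows at these settings, which is why this only pays off once the model is reliable.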