Hongyu Zhou, Jiahao Shao, Lu Xu, Dongfeng Bai, Weichao Qiu, Bingbing Liu, Yue Wang, Andreas Geiger, Yiyi Liao

Webpage | Full Paper | Video

This repository contains the official authors' implementation associated with the paper "HUGS: Holistic Urban 3D Scene Understanding via Gaussian Splatting", which can be found here.

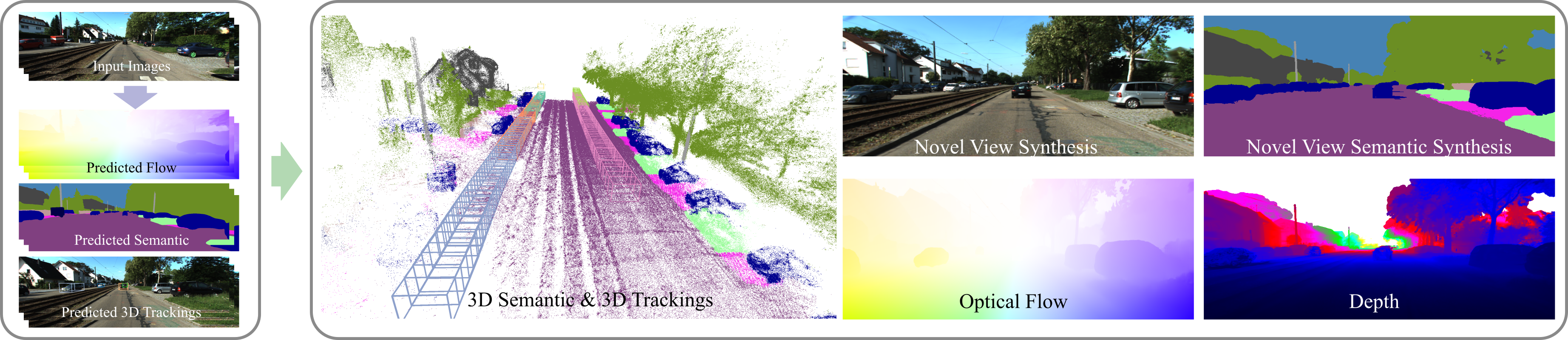

Abstract: Holistic understanding of urban scenes based on RGB images is a challenging yet important problem. It encompasses understanding both the geometry and appearance to enable novel view synthesis, parsing semantic labels, and tracking moving objects. Despite considerable progress, existing approaches often focus on specific aspects of this task and require additional inputs such as LiDAR scans or manually annotated 3D bounding boxes. In this paper, we introduce a novel pipeline that utilizes 3D Gaussian Splatting for holistic urban scene understanding. Our main idea involves the joint optimization of geometry, appearance, semantics, and motion using a combination of static and dynamic 3D Gaussians, where moving object poses are regularized via physical constraints. Our approach offers the ability to render new viewpoints in real time, yield 2D and 3D semantic information with high accuracy, and reconstruct dynamic scenes, even in scenarios where 3D bounding box detections are highly noisy. Experimental results on KITTI, KITTI-360, and Virtual KITTI 2 demonstrate the effectiveness of our approach.

The repository contains submodules, so please clone it with `--recursive`:

```shell
# SSH
git clone git@github.com:hyzhou404/hugs.git --recursive
```

or

```shell
# HTTPS
git clone https://github.com/hyzhou404/hugs --recursive
```

Create a conda environment:

```shell
conda create -n hugs python=3.10 -y
```

Please install PyTorch, tiny-cuda-nn, pytorch3d and flow-vis-torch by following their official installation instructions.
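Before installing the submodules, it can help to confirm that these prerequisites are visible in the active environment. The Python sketch below is not part of the HUGS repository; the import names (`torch`, `tinycudann`, `pytorch3d`, `flow_vis_torch`) are assumed to be the ones these packages commonly expose, so adjust them if your installation differs.

```python
"""Sanity check that the HUGS prerequisites are importable.

Assumption: the import names below are the ones commonly used by these
packages; they are not taken from the HUGS repository.
"""
import importlib.util

# Assumed mapping from package name (as listed in the README) to import name.
REQUIRED_MODULES = {
    "PyTorch": "torch",
    "tiny-cuda-nn": "tinycudann",
    "pytorch3d": "pytorch3d",
    "flow-vis-torch": "flow_vis_torch",
}


def find_missing(modules: dict[str, str]) -> list[str]:
    """Return the package names whose top-level module cannot be located."""
    missing = []
    for package, module in modules.items():
        # find_spec locates the module without importing it.
        if importlib.util.find_spec(module) is None:
            missing.append(package)
    return missing


def cuda_available() -> bool:
    """Return True if PyTorch is installed and reports a usable CUDA device."""
    if importlib.util.find_spec("torch") is None:
        return False
    import torch
    return bool(torch.cuda.is_available())


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All prerequisite packages found.")
    print("CUDA available:", cuda_available())
```

Using `find_spec` instead of a plain `import` keeps the check fast and avoids initializing CUDA extensions just to test for their presence.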

Install the submodules by running:

```shell
pip install submodules/simple-knn
pip install submodules/hugs-rasterization
```

Install the remaining packages by running:

```shell
pip install -r requirements.txt
```

We have made available two sequences from KITTI, as indicated in our paper. In addition, three sequences from KITTI-360 and one sequence from Waymo are provided.

Download checkpoints from here.

```shell
unzip ${sequence}.zip
```

Render test views by running:

```shell
python render.py -m ${checkpoint_path} --data_type ${dataset_type} --iteration 30000 --affine
```

The variable `dataset_type` is a string, and its value can be one of the following: `kitti`, `kitti360`, or `waymo`.

Evaluate the rendered test views by running:

```shell
python metrics.py -m ${checkpoint_path}
```
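To render and evaluate several of the provided sequences in one pass, the render and metrics commands can be wrapped in a small script. This is a hypothetical helper, not part of the repository: it assumes it is run from the repository root, reuses only the flags documented above, and the checkpoint paths in it are placeholders for wherever you unzipped the sequences.

```python
"""Hypothetical batch runner for the documented render.py / metrics.py commands."""
import subprocess
import sys

# Values accepted by --data_type, as listed in this README.
DATASET_TYPES = ("kitti", "kitti360", "waymo")


def build_commands(checkpoint_path: str, dataset_type: str,
                   iteration: int = 30000) -> list[list[str]]:
    """Return the render and metrics command lines for one checkpoint."""
    if dataset_type not in DATASET_TYPES:
        raise ValueError(
            f"dataset_type must be one of {DATASET_TYPES}, got {dataset_type!r}")
    render = [sys.executable, "render.py", "-m", checkpoint_path,
              "--data_type", dataset_type, "--iteration", str(iteration),
              "--affine"]
    metrics = [sys.executable, "metrics.py", "-m", checkpoint_path]
    return [render, metrics]


def run_all(jobs: dict[str, str], dry_run: bool = False) -> None:
    """Render, then evaluate, each checkpoint_path -> dataset_type pair."""
    for checkpoint_path, dataset_type in jobs.items():
        for cmd in build_commands(checkpoint_path, dataset_type):
            print(" ".join(cmd))
            if not dry_run:
                # check=True stops at the first command that fails.
                subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Placeholder checkpoint directories; replace with your own paths.
    run_all({"path/to/kitti_sequence": "kitti",
             "path/to/kitti360_sequence": "kitti360"}, dry_run=True)
```

With `dry_run=True` the script only prints the commands, which is a convenient way to check the paths before launching the GPU jobs. Using `sys.executable` runs both scripts with the same interpreter as the `hugs` environment.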

This repository only includes the inference code of HUGS. The training code will be released in the future.

```bibtex
@InProceedings{Zhou_2024_CVPR,
    author    = {Zhou, Hongyu and Shao, Jiahao and Xu, Lu and Bai, Dongfeng and Qiu, Weichao and Liu, Bingbing and Wang, Yue and Geiger, Andreas and Liao, Yiyi},
    title     = {HUGS: Holistic Urban 3D Scene Understanding via Gaussian Splatting},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2024},
    pages     = {21336-21345}
}
```