This is the official repository of the paper:

Vis2Mesh: Efficient Mesh Reconstruction from Unstructured Point Clouds of Large Scenes with Learned Virtual View Visibility

https://arxiv.org/abs/2108.08378

```bibtex
@misc{song2021vis2mesh,
    title={Vis2Mesh: Efficient Mesh Reconstruction from Unstructured Point Clouds of Large Scenes with Learned Virtual View Visibility},
    author={Shuang Song and Zhaopeng Cui and Rongjun Qin},
    year={2021},
    eprint={2108.08378},
    archivePrefix={arXiv},
    primaryClass={cs.CV}
}
```

- 2021/9/6: Initialized the all-in-one project. This version only supports inference with our pre-trained weights. We will release a Dockerfile to ease deployment.

To be released:

- Ground truth generation and network training.
- Evaluation scripts.

To run with Docker, first install the NVIDIA Container Toolkit so that containers can access the GPU:

```shell
# Add the package repositories
distribution=$(. /etc/os-release;echo $ID$VERSION_ID)
curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -
curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list
sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit
sudo systemctl restart docker
```

Then build the Docker image:

```shell
docker build . -t vis2mesh
```

To build on the host instead, create a conda environment with PyTorch and run our setup script:

```shell
./setup_tools.sh
```

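Download the pre-trained weights and the example data:

```shell
pip install gdown
./checkpoints/get_pretrained.sh
./example/get_example.sh
```

The main command for surface reconstruction is shown below. The result will be copied to `$(CLOUDFILE)_vis2mesh.ply`, where `CLOUDFILE` is the input point cloud.

```shell
python inference.py example/example1.ply --cam cam0
```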
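Following the naming rule above, reconstructing `example/example1.ply` should produce `example/example1.ply_vis2mesh.ply`. The sketch below is a hypothetical convenience (the functions `vis2mesh_output` and `vis2mesh_done` are ours, not part of the repository). It assumes the `_vis2mesh.ply` suffix is simply appended to whatever input path you pass.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of the repository).
# Assumption: inference.py writes its mesh to "<cloud>_vis2mesh.ply".
vis2mesh_output() {
  printf '%s_vis2mesh.ply\n' "$1"
}

# Succeeds (exit status 0) if the reconstructed mesh for cloud $1 already exists.
vis2mesh_done() {
  [ -f "$(vis2mesh_output "$1")" ]
}
```

For example, `vis2mesh_done example/example1.ply || python inference.py example/example1.ply --cam cam0` would rerun the reconstruction only when the mesh is missing.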

We suggest using Docker, in either interactive mode or single-shot mode.
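The interactive and single-shot `docker run` calls pass the same long list of flags. One way to keep them in one place is a small wrapper function, sketched here as a hypothetical convenience rather than part of the repository. Several parts are assumptions. The names `vis2mesh_docker`, `VIS2MESH_IMAGE`, and `DRY_RUN` are ours. The X authority file is assumed to be `$XAUTHORITY` or `~/.Xauthority`. The `-w /workspace` working directory is assumed so that relative `example/` paths resolve inside the container.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper (not part of the repository) around the single-shot
# docker call. Usage: vis2mesh_docker <inference.py arguments...>
# Set DRY_RUN=1 to print the assembled command instead of running it.
vis2mesh_docker() {
  # Image tag; defaults to the tag used with `docker build`.
  local image="${VIS2MESH_IMAGE:-vis2mesh}"
  # Assumption: the X authority file is $XAUTHORITY, else ~/.Xauthority.
  local xauth="${XAUTHORITY:-$HOME/.Xauthority}"
  # Assumption: -w /workspace lets relative example/ paths resolve.
  local -a cmd=(
    docker run
    --mount "type=bind,source=$PWD/checkpoints,target=/workspace/checkpoints"
    --mount "type=bind,source=$PWD/example,target=/workspace/example"
    --privileged
    --net=host
    -e NVIDIA_DRIVER_CAPABILITIES=all
    -e "DISPLAY=unix${DISPLAY:-}"
    -v "$xauth:/root/.Xauthority"
    -v /tmp/.X11-unix:/tmp/.X11-unix:rw
    --device=/dev/dri
    --gpus all
    -w /workspace
    "$image" python inference.py "$@"
  )
  if [ -n "${DRY_RUN:-}" ]; then
    # Print the command as one space-separated line.
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

Storing the arguments in a bash array keeps each flag a separate word, including paths that contain spaces. `DRY_RUN=1 vis2mesh_docker example/example1.ply --cam cam0` shows the command that would run.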

```shell
# Disable X server access control so the container can open GUI windows
xhost +
name=vis2mesh
# X authority file shared with the container (assumed default location)
XAUTH=${XAUTHORITY:-$HOME/.Xauthority}

# Run in interactive mode
docker run -it \
    --mount type=bind,source="$PWD/checkpoints",target=/workspace/checkpoints \
    --mount type=bind,source="$PWD/example",target=/workspace/example \
    --privileged \
    --net=host \
    -e NVIDIA_DRIVER_CAPABILITIES=all \
    -e DISPLAY=unix$DISPLAY \
    -v $XAUTH:/root/.Xauthority \
    -v /tmp/.X11-unix:/tmp/.X11-unix:rw \
    --device=/dev/dri \
    --gpus all $name

# Then, inside the container:
cd /workspace
python inference.py example/example1.ply --cam cam0
```

```shell
# Run with single shot call
docker run \
    --mount type=bind,source="$PWD/checkpoints",target=/workspace/checkpoints \
    --mount type=bind,source="$PWD/example",target=/workspace/example \
    --privileged \
    --net=host \
    -e NVIDIA_DRIVER_CAPABILITIES=all \
    -e DISPLAY=unix$DISPLAY \
    -v $XAUTH:/root/.Xauthority \
    -v /tmp/.X11-unix:/tmp/.X11-unix:rw \
    --device=/dev/dri \
    --gpus all \
    -w /workspace \
    $name \
    python inference.py example/example1.ply --cam cam0
```

To choose the virtual views yourself, run:

```shell
python inference.py example/example1.ply
```

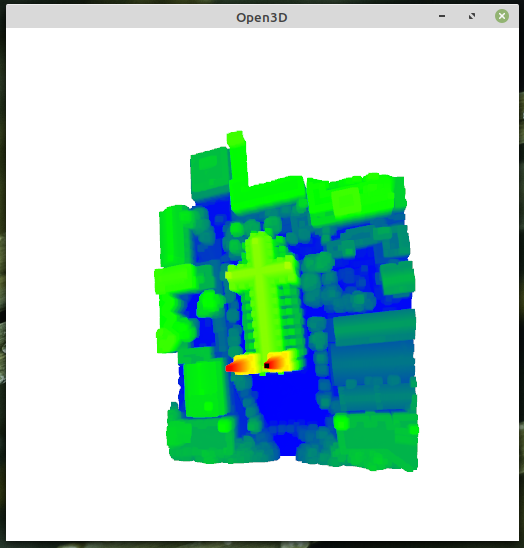

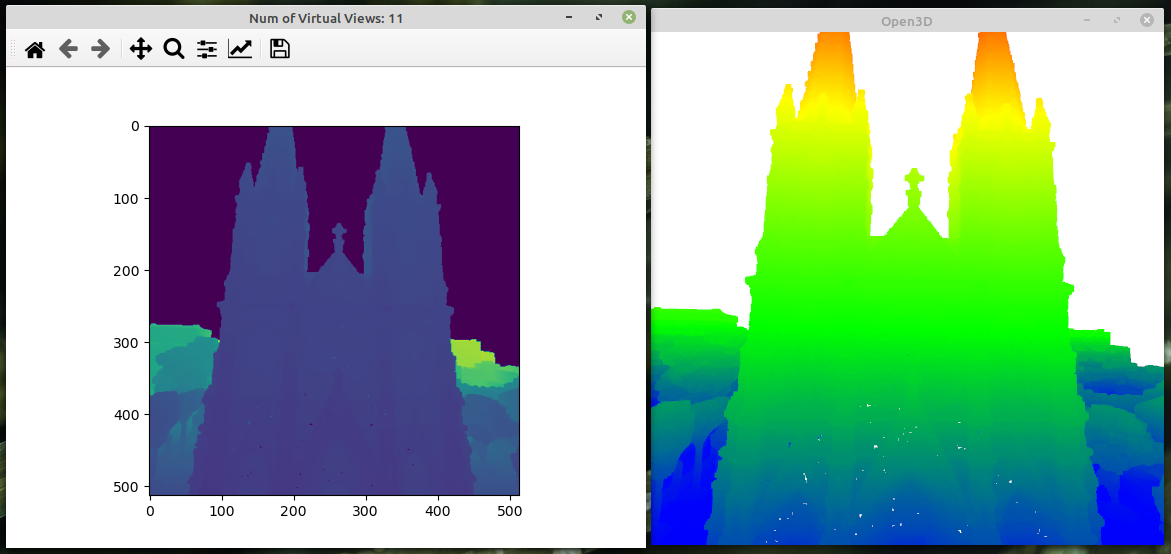

Without the `--cam` flag, the GUI shown above opens and you can add virtual views interactively. Your views will be recorded in `example/example1.ply_WORK/cam*.json`.

Navigate in the 3D viewer and press [Space] to record the current view. Press [Q] to close the window and continue the meshing process.
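To see which views a GUI session recorded, you can list the `cam*.json` files in the work directory. The function below is a hypothetical sketch (`list_views` is our name, not part of the repository). It assumes views are saved as `<cloud>_WORK/cam*.json`, as described above, and prints each view's file name without the `.json` extension.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the repository): print the names of views
# recorded for point cloud $1, assuming they live in "<cloud>_WORK/cam*.json".
list_views() {
  local workdir="${1}_WORK"
  local f
  for f in "$workdir"/cam*.json; do
    # Without matches the glob stays literal, so skip non-existent paths.
    [ -e "$f" ] || continue
    # Strip the directory and extension, e.g. .../cam0.json -> cam0.
    basename "$f" .json
  done
}
```

Because the example passes `--cam cam0`, these names may be usable as `--cam` values. That is an assumption; check `inference.py` before relying on it.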