This GitHub repository is the official implementation of the paper:

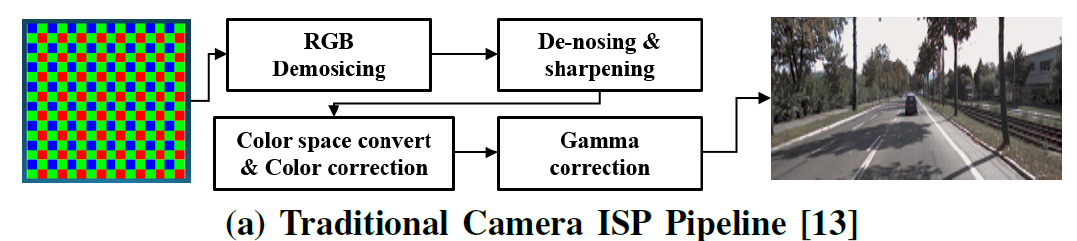

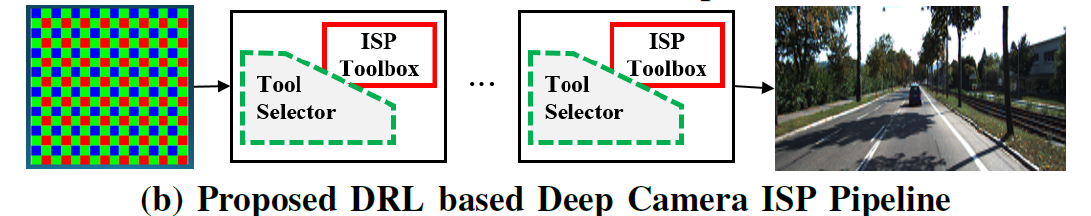

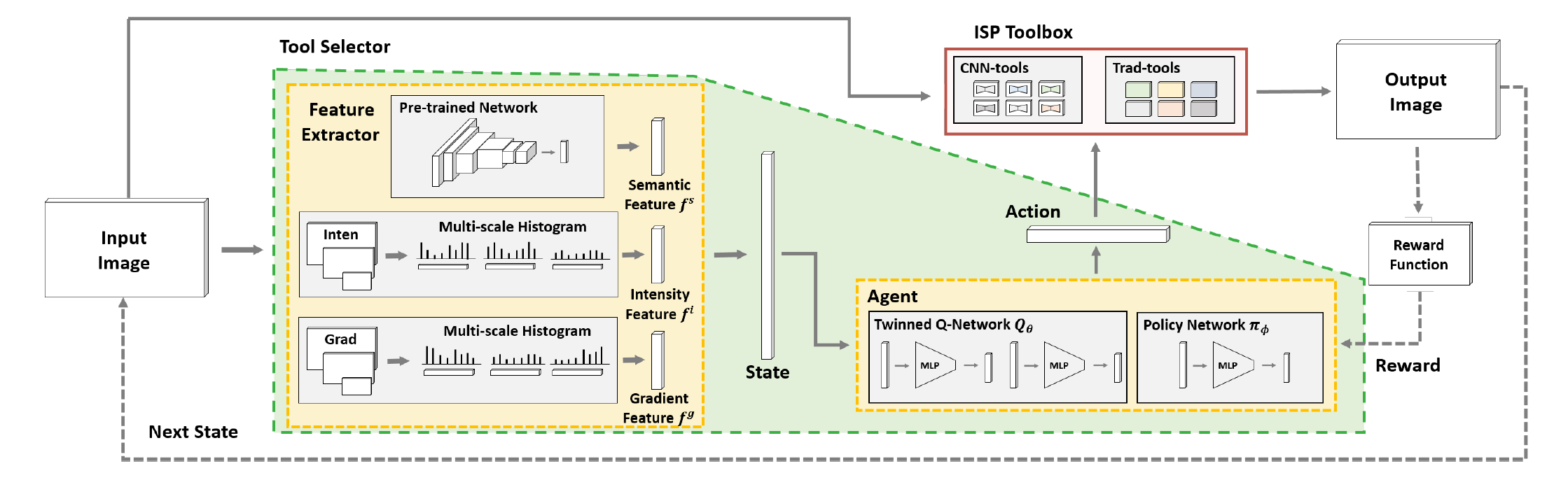

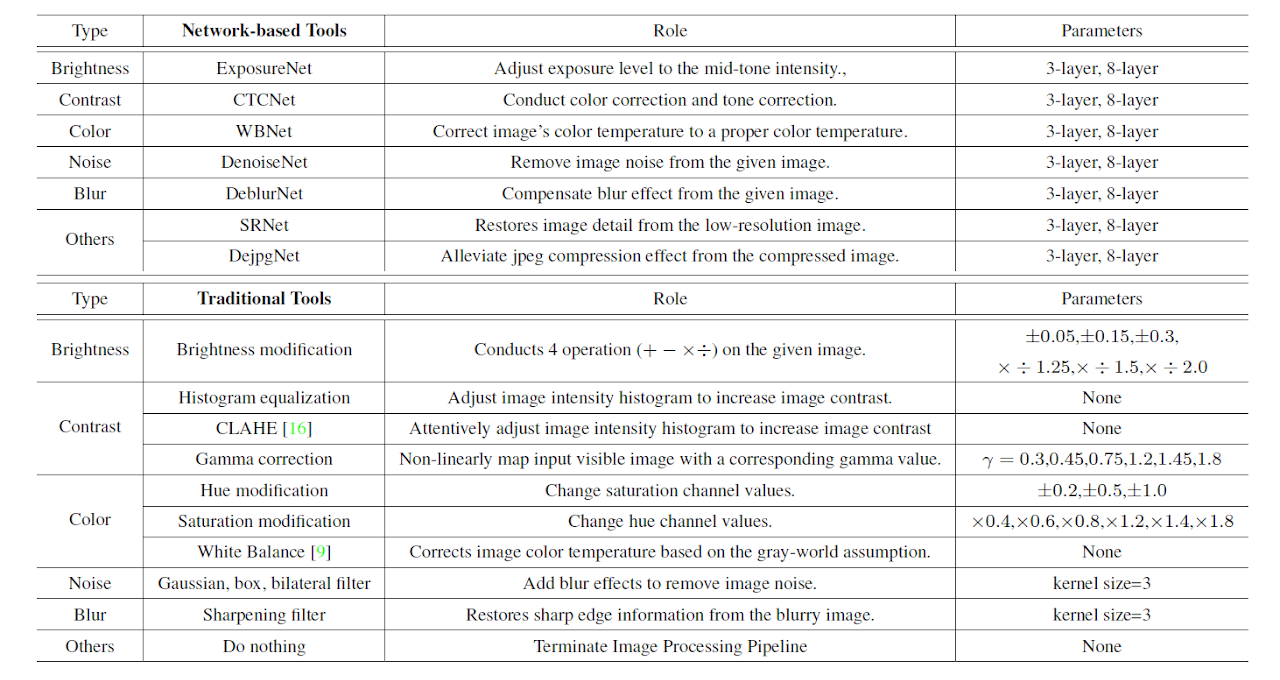

DRL-ISP: Multi-Objective Camera ISP with Deep Reinforcement Learning

Ukcheol Shin, Kyunghyun Lee, In So Kweon

The first two authors contributed equally.

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2022

[Paper] [Project webpage]

Recently, self-supervised learning of depth and ego-motion from thermal images has shown strong robustness and reliability under challenging lighting and weather conditions. However, inherent properties of thermal images, such as weak contrast, blurry edges, and noise, hinder the generation of effective self-supervision from thermal images. Therefore, most previous studies rely on additional self-supervisory sources such as RGB video, generative models, and LiDAR information. In this paper, we conduct an in-depth analysis of the thermal image characteristics that degrade self-supervision from thermal images. Based on this analysis, we propose an effective thermal image mapping method that significantly increases image information, such as overall structure, contrast, and details, while preserving temporal consistency. By resolving this fundamental problem of thermal images, our depth and pose networks trained only with thermal images achieve state-of-the-art results without utilizing any extra self-supervisory source. To the best of our knowledge, this work is the first self-supervised learning approach to train monocular depth and relative pose networks with only thermal images.

This codebase was developed and tested with Python 3.7, PyTorch 1.5.1, and CUDA 10.2 on Ubuntu 16.04. Modify the "prefix" field of the environment.yml file according to your environment.

conda env create --file environment.yml

conda activate pytorch1.5_rl

For the RAW2RGB-for-detection task, you additionally need to install detectron2.
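Before training, it can help to confirm that the activated environment matches the versions listed above. The script below is a minimal sketch, not part of this repository: it reports the installed Python, PyTorch, CUDA (as seen by PyTorch), and detectron2 versions next to the tested ones. The file name `check_env.py` and the function `installed_versions` are hypothetical.

```python
import importlib
import importlib.util
import sys

# Versions this README states the code was developed and tested with.
TESTED = {"python": "3.7", "torch": "1.5.1", "cuda": "10.2"}


def installed_versions():
    """Collect installed versions; a value is None when the package is absent."""
    found = {
        "python": "%d.%d" % sys.version_info[:2],
        "torch": None,
        "cuda": None,
        "detectron2": None,
    }
    if importlib.util.find_spec("torch") is not None:
        torch = importlib.import_module("torch")
        found["torch"] = str(torch.__version__)
        found["cuda"] = torch.version.cuda  # None for CPU-only PyTorch builds
    if importlib.util.find_spec("detectron2") is not None:
        detectron2 = importlib.import_module("detectron2")
        found["detectron2"] = getattr(detectron2, "__version__", "unknown")
    return found


if __name__ == "__main__":
    found = installed_versions()
    for name, tested in TESTED.items():
        # Prefix match, so "1.5.1" matches "1.5.1" and "10.2" matches "10.2".
        status = "ok" if str(found[name]).startswith(tested) else "differs"
        print("%-10s tested=%-6s installed=%-10s %s" % (name, tested, found[name], status))
    print("detectron2 (detection task only): %s" % found["detectron2"])
```

Run it inside the activated `pytorch1.5_rl` environment, e.g. `python check_env.py`. A "differs" line only flags a deviation from the tested setup.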

In order to train and evaluate our code, you need to download the DIV2K, MSCOCO, and KITTI detection datasets.

Also, the Rendered WB dataset (Set2) is required to train the white-balance tools.

For the depth task, we used the pre-processed KITTI RAW dataset of SC-SfM-Learner.

We convert the RGB images of each dataset into Bayer-pattern images using this GitHub repository.
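The actual conversion relies on the external repository mentioned above, which may also apply inverse-ISP steps before mosaicing. As a simplified illustration of the mosaicing step only, the sketch below samples an RGB image onto a single-channel Bayer mosaic. The RGGB layout and the function name `mosaic_rggb` are assumptions; the sketch does not invert tone mapping, gamma, color correction, or white balance.

```python
import numpy as np


def mosaic_rggb(rgb):
    """Sample an H x W x 3 RGB array onto a single-channel RGGB Bayer mosaic.

    Simplified sketch (assumed RGGB layout): keeps one color sample per pixel
    and performs no other inverse-ISP processing.
    """
    rgb = np.asarray(rgb)
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("expected an H x W x 3 array")
    h, w, _ = rgb.shape
    bayer = np.empty((h, w), dtype=rgb.dtype)
    bayer[0::2, 0::2] = rgb[0::2, 0::2, 0]  # R: even rows, even columns
    bayer[0::2, 1::2] = rgb[0::2, 1::2, 1]  # G: even rows, odd columns
    bayer[1::2, 0::2] = rgb[1::2, 0::2, 1]  # G: odd rows, even columns
    bayer[1::2, 1::2] = rgb[1::2, 1::2, 2]  # B: odd rows, odd columns
    return bayer
```

Each 2x2 block of the output holds one red, two green, and one blue sample, which is why green appears twice as often as red or blue in a Bayer image.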

However, since this conversion takes a lot of time, we provide pre-processed RAW datasets.

After downloading our pre-processed dataset, unzip the files to form the structure below.

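    Dataset_DRLISP/
    ├── RGB/
    │   ├── DIV2K/
    │   │   ├── DIV2K_train_HR/
    │   │   └── DIV2K_valid_HR/
    │   ├── KITTI_detection/
    │   │   └── data_object_images_2/
    │   ├── KITTI_depth/
    │   │   └── KITTI_SC/
    │   │       ├── 2011_09_26_drive_0001_sync_02/
    │   │       └── ...
    │   └── MS_COCO/
    │       ├── train2017/
    │       ├── val2017/
    │       ├── test2017/
    │       └── annotation/
    ├── RAW/
    │   ├── DIV2K/
    │   │   ├── DIV2K_train_HR/
    │   │   └── DIV2K_valid_HR/
    │   ├── KITTI_detection/
    │   │   └── data_object_images_2/
    │   ├── KITTI_depth/
    │   │   └── KITTI_SC/
    │   │       ├── 2011_09_26_drive_0001_sync_02/
    │   │       └── ...
    │   └── MS_COCO/
    │       ├── train2017/
    │       ├── val2017/
    │       ├── test2017/
    │       └── annotation/
    └── Set2/
        ├── train/
        └── test/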
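To catch unzip mistakes early, a small check like the following can list folders that are missing from the dataset layout above. This is a hypothetical helper (`expected_dirs`, `missing_dirs`), not a script shipped with the code. It assumes `Set2/` sits directly under `Dataset_DRLISP/`, and it does not check the individual KITTI sequence folders, since the tree elides them.

```python
import sys
from pathlib import Path

# Sub-folders of each dataset, transcribed from the tree in this README.
SUBSETS = {
    "DIV2K": ["DIV2K_train_HR", "DIV2K_valid_HR"],
    "KITTI_detection": ["data_object_images_2"],
    "KITTI_depth": ["KITTI_SC"],
    "MS_COCO": ["train2017", "val2017", "test2017", "annotation"],
}


def expected_dirs():
    """Relative folders expected under Dataset_DRLISP/ (Set2 placement assumed)."""
    dirs = []
    for domain in ("RGB", "RAW"):
        for dataset, children in SUBSETS.items():
            for child in children:
                dirs.append(Path(domain) / dataset / child)
    dirs += [Path("Set2") / "train", Path("Set2") / "test"]
    return dirs


def missing_dirs(root):
    """Return the expected folders that do not exist under root, as POSIX paths."""
    root = Path(root)
    return [d.as_posix() for d in expected_dirs() if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs(sys.argv[1] if len(sys.argv) > 1 else "Dataset_DRLISP")
    for path in missing:
        print("missing:", path)
    print("layout OK" if not missing else "%d folder(s) missing" % len(missing))
```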

With the dataset structure above in place, you can generate the training/testing datasets by running the following script:

cd tool_trainner

sh scripts/1.prepare_dataset.sh

Alternatively, you can quickly start from our pre-processed synthetic RAW/RGB dataset without downloading all of the above RGB and RAW datasets ((1), (2)) or preparing the training/testing dataset (4).

You can directly train each tool and the tool selector for the RAW2RGB task from our pre-processed RAW/RGB dataset.

You can train each individual network-based tool by running the following script:

sh scripts/2.train_individual.sh

After the individual tool training stage, you can train the tools jointly:

sh scripts/3.train_jointly.sh

After the joint tool training stage, copy the trained tools for the tool-selector training:

sh scripts/4.copy_weights.sh

You can start a TensorBoard session in the folder to monitor the progress of each training stage by opening http://localhost:6006 in your browser.

tensorboard --logdir=logs/

To train and evaluate the tool selector, move to the tool_rl_selector folder:

cd tool_rl_selector

python main.py --app restoration --train_dir $TRAINDIR --test_dir $TESTDIR --use_tool_factor

For TRAINDIR and TESTDIR, specify the location of the folder that contains syn_dataset.

python main.py --app detection --train_dir $TRAINDIR --test_dir $TESTDIR --use_tool_factor --reward_scaler 2.0 --use_small_detection

For TRAINDIR and TESTDIR, specify the location of the folder MS_COCO.

python main.py --app depth --train_dir $TRAINDIR --test_dir $TESTDIR --use_tool_factor

For TRAINDIR, specify the location of the folder KITTI_sc.

For TESTDIR, specify the location of the folder kitti_depth_test.

python main.py --app restoration --train_dir $TRAINDIR --test_dir $TESTDIR --use_tool_factor --continue_file $CONTINUEFILE --is_test

For TRAINDIR and TESTDIR, specify the location of the folder that contains syn_dataset.

For CONTINUEFILE, specify the location of the checkpoint.

python main.py --app detection --train_dir $TRAINDIR --test_dir $TESTDIR --use_tool_factor --reward_scaler 2.0 --use_small_detection --continue_file $CONTINUEFILE --is_test

For TRAINDIR and TESTDIR, specify the location of the folder MS_COCO.

For CONTINUEFILE, specify the location of the checkpoint.

python main.py --app depth --train_dir $TRAINDIR --test_dir $TESTDIR --use_tool_factor --continue_file $CONTINUEFILE --is_test

For TRAINDIR, specify the location of the folder KITTI_sc.

For TESTDIR, specify the location of the folder kitti_depth_test.

For CONTINUEFILE, specify the location of the checkpoint.
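If you run many experiments, the six `main.py` commands above can be generated from one table of per-task flags. The helper below is a hypothetical sketch, not part of `tool_rl_selector`: `build_command` and `APP_FLAGS` are illustrative names, it only reproduces the flags shown in this README, and it returns the argument list, appending `--continue_file` and `--is_test` when a checkpoint is given.

```python
import shlex

# Extra flags per --app value, copied from the commands in this README.
APP_FLAGS = {
    "restoration": ["--use_tool_factor"],
    "detection": ["--use_tool_factor", "--reward_scaler", "2.0", "--use_small_detection"],
    "depth": ["--use_tool_factor"],
}


def build_command(app, train_dir, test_dir, checkpoint=None):
    """Return the argv for main.py.

    With checkpoint=None the command trains; otherwise it evaluates the given
    checkpoint through --continue_file and --is_test.
    """
    if app not in APP_FLAGS:
        raise ValueError("unknown app: %r" % app)
    argv = ["python", "main.py", "--app", app,
            "--train_dir", str(train_dir), "--test_dir", str(test_dir)]
    argv += APP_FLAGS[app]
    if checkpoint is not None:
        argv += ["--continue_file", str(checkpoint), "--is_test"]
    return argv


if __name__ == "__main__":
    # Placeholder paths; replace them with your own dataset locations.
    cmd = build_command("detection", "/path/to/MS_COCO", "/path/to/MS_COCO")
    # shlex.quote keeps paths with spaces safe when pasting into a shell.
    print(" ".join(shlex.quote(arg) for arg in cmd))
```

Returning a list rather than a string lets you pass it straight to `subprocess.run(cmd, cwd="tool_rl_selector", check=True)` without shell quoting issues; the directory arguments are placeholders.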

Please cite the following paper if you use our work, parts of this code, or our pre-processed dataset in your research.

    @article{shin2022drl,
      title={DRL-ISP: Multi-Objective Camera ISP with Deep Reinforcement Learning},
      author={Shin, Ukcheol and Lee, Kyunghyun and Kweon, In So},
      journal={arXiv preprint arXiv:2207.03081},
      year={2022}
    }