Zhe Kong* · Feng Gao* · Yong Zhang✉ · Zhuoliang Kang · Xiaoming Wei · Xunliang Cai

*Equal Contribution ✉Corresponding Authors

TL;DR: MultiTalk is an audio-driven framework for multi-person conversational video generation. It can create videos featuring multi-person conversations 💬, singing 🎤, prompt-controlled interactions 👬, and cartoon characters 🙊.


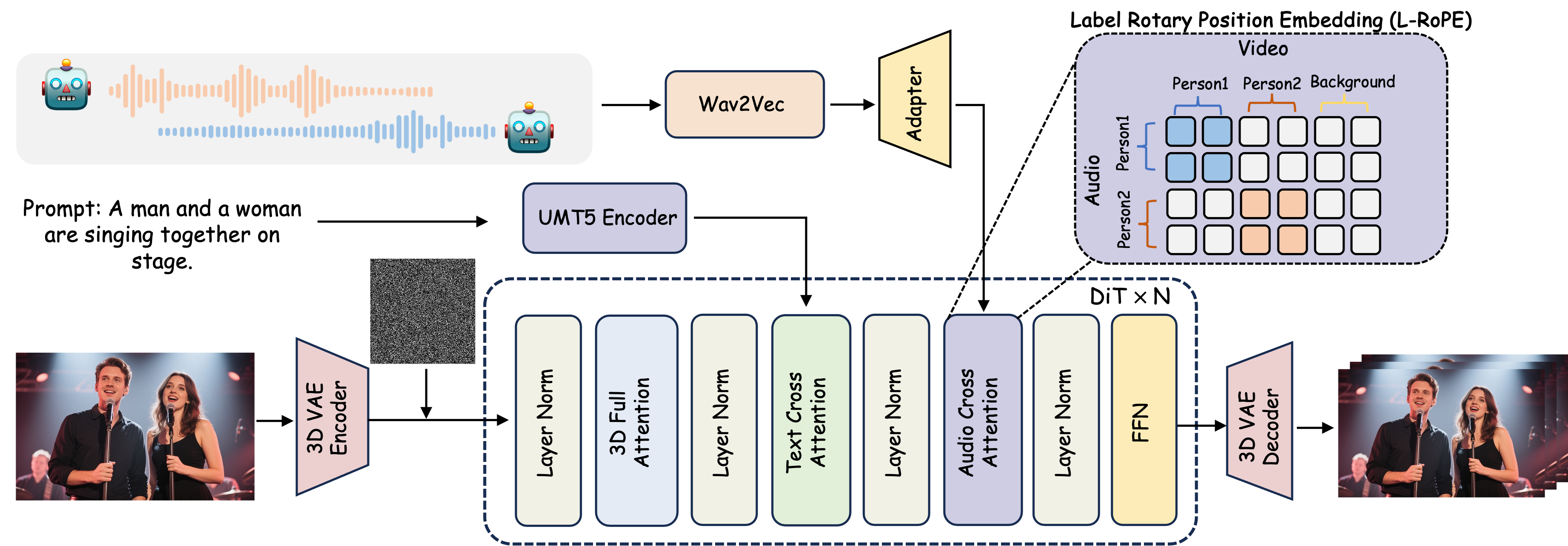

We propose MultiTalk, a novel framework for audio-driven multi-person conversational video generation. Given multi-stream audio input, a reference image, and a prompt, MultiTalk generates a video whose interactions follow the prompt and whose lip motions stay consistent with the audio.

- 💬 Realistic Conversations: Supports single-person and multi-person generation

- 👥 Interactive Character Control: Direct virtual humans via prompts

- 🎤 Generalization: Supports generating cartoon characters and singing

- 📺 Resolution Flexibility: 480p and 720p output at arbitrary aspect ratios

- ⏱️ Long Video Generation: Supports videos up to 15 seconds long

Latest news:

- June 9, 2025: 🔥🔥 We release the weights and inference code of MultiTalk

- May 29, 2025: We release the technical report of MultiTalk

- May 29, 2025: We release the project page of MultiTalk

Todo list:

- Release the technical report

- Inference

- Checkpoints

- TTS integration

- Multi-GPU Inference

- Gradio demo

- Inference acceleration

- ComfyUI

Create a conda environment and install PyTorch and xformers:

```bash
conda create -n multitalk python=3.10
conda activate multitalk
pip install torch==2.2.2 torchvision==0.17.2 torchaudio==2.2.2 --index-url https://download.pytorch.org/whl/cu121
pip install -U xformers==0.0.25.post1 --index-url https://download.pytorch.org/whl/cu121
```

Install flash-attn, either by building it with pip:

```bash
pip install packaging
pip install ninja
pip install psutil==7.0.0
pip install flash-attn==2.5.6 --no-build-isolation
```

or from the prebuilt wheel:

```bash
wget https://github.com/Dao-AILab/flash-attention/releases/download/v2.5.6/flash_attn-2.5.6+cu122torch2.2cxx11abiFALSE-cp310-cp310-linux_x86_64.whl
python -m pip install flash_attn-2.5.6+cu122torch2.2cxx11abiFALSE-cp310-cp310-linux_x86_64.whl
```

Install the other dependencies:

```bash
conda install -c conda-forge librosa
pip install -r requirements.txt
```

Install FFmpeg, either with conda:

```bash
conda install -c conda-forge ffmpeg
```

or, on yum-based systems such as CentOS:

```bash
sudo yum install ffmpeg ffmpeg-devel
```
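After installation, a quick sanity check can catch version mismatches before downloading the large model weights. The sketch below is not part of the repository; `check_environment` and `PINNED` are hypothetical names. It compares installed distribution versions (read with the standard-library `importlib.metadata`) against the versions pinned in the commands above, ignores local suffixes such as `+cu121`, and looks for `ffmpeg` on `PATH`.

```python
import shutil
import sys
from importlib import metadata

# Versions pinned by the installation commands above (None = any version).
# CUDA wheels carry a local suffix such as "2.2.2+cu121", which is ignored.
PINNED = {
    "torch": "2.2.2",
    "torchvision": "0.17.2",
    "torchaudio": "2.2.2",
    "xformers": "0.0.25.post1",
    "flash_attn": "2.5.6",
    "librosa": None,
}


def check_environment():
    """Return a {component: status} dict; a status of "ok" means the check passed."""
    report = {}
    python = f"{sys.version_info.major}.{sys.version_info.minor}"
    report["python"] = "ok" if python == "3.10" else f"found {python}, expected 3.10"
    for name, wanted in PINNED.items():
        try:
            found = metadata.version(name).split("+")[0]
        except metadata.PackageNotFoundError:
            report[name] = "missing"
            continue
        report[name] = "ok" if wanted in (None, found) else f"found {found}, expected {wanted}"
    # ffmpeg is installed as a system binary above, so look for it on PATH.
    report["ffmpeg"] = "ok" if shutil.which("ffmpeg") else "not found on PATH"
    return report


if __name__ == "__main__":
    for component, status in check_environment().items():
        print(f"{component:12} {status}")
```

Any line that does not say `ok` points at the installation step to revisit.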

| Models | Download Link | Notes |
|---|---|---|
| Wan2.1-I2V-14B-480P | 🤗 Huggingface | Base model |
| chinese-wav2vec2-base | 🤗 Huggingface | Audio encoder |
| MeiGen-MultiTalk | 🤗 Huggingface | Our audio condition weights |
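If you prefer to script the download, here is a minimal Python sketch using `snapshot_download` from the `huggingface_hub` package (the library that provides `huggingface-cli`; it is assumed to be installed). The repository IDs and target directories are copied from the `huggingface-cli` commands below; `WEIGHTS` and `download_weights` are hypothetical names, not part of the MultiTalk repository.

```python
from pathlib import Path

# Hugging Face repositories from the table, mapped to the local directories
# used by the huggingface-cli commands in this README.
WEIGHTS = {
    "Wan-AI/Wan2.1-I2V-14B-480P": "weights/Wan2.1-I2V-14B-480P",
    "TencentGameMate/chinese-wav2vec2-base": "weights/chinese-wav2vec2-base",
    "MeiGen-AI/MeiGen-MultiTalk": "weights/MeiGen-MultiTalk",
}


def download_weights(root="."):
    """Download every repository into <root>/weights/... and return the target paths."""
    # Imported lazily so the mapping above can be inspected without huggingface_hub.
    from huggingface_hub import snapshot_download

    targets = []
    for repo_id, local_dir in WEIGHTS.items():
        target = Path(root) / local_dir
        snapshot_download(repo_id=repo_id, local_dir=str(target))
        targets.append(target)
    return targets


if __name__ == "__main__":
    for path in download_weights():
        print("downloaded", path)
```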

Download the models using huggingface-cli:

```bash
huggingface-cli download Wan-AI/Wan2.1-I2V-14B-480P --local-dir ./weights/Wan2.1-I2V-14B-480P
huggingface-cli download TencentGameMate/chinese-wav2vec2-base --local-dir ./weights/chinese-wav2vec2-base
huggingface-cli download MeiGen-AI/MeiGen-MultiTalk --local-dir ./weights/MeiGen-MultiTalk
```

Then link the MeiGen-MultiTalk files into the Wan2.1-I2V-14B-480P directory:

```bash
mv weights/Wan2.1-I2V-14B-480P/diffusion_pytorch_model.safetensors.index.json weights/Wan2.1-I2V-14B-480P/diffusion_pytorch_model.safetensors.index.json_old
sudo ln -s {Absolute path}/weights/MeiGen-MultiTalk/diffusion_pytorch_model.safetensors.index.json weights/Wan2.1-I2V-14B-480P/
sudo ln -s {Absolute path}/weights/MeiGen-MultiTalk/multitalk.safetensors weights/Wan2.1-I2V-14B-480P/
```

Or copy them instead:

```bash
mv weights/Wan2.1-I2V-14B-480P/diffusion_pytorch_model.safetensors.index.json weights/Wan2.1-I2V-14B-480P/diffusion_pytorch_model.safetensors.index.json_old
cp weights/MeiGen-MultiTalk/diffusion_pytorch_model.safetensors.index.json weights/Wan2.1-I2V-14B-480P/
cp weights/MeiGen-MultiTalk/multitalk.safetensors weights/Wan2.1-I2V-14B-480P/
```

Either way, the `mv` command keeps the original Wan2.1 index file as a `.json_old` backup so that the MeiGen-MultiTalk index file can take its place.
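The backup-and-link (or copy) step can also be expressed with Python's `pathlib` and `shutil`, which avoids filling in `{Absolute path}` by hand. This is a minimal sketch that assumes the directory layout from the commands above; `install_multitalk_weights` is a hypothetical helper, not part of the repository. Unlike the shell commands, it refuses to run twice, so the `_old` backup of the original index is never overwritten.

```python
import shutil
from pathlib import Path

INDEX = "diffusion_pytorch_model.safetensors.index.json"
MULTITALK_WEIGHTS = "multitalk.safetensors"


def install_multitalk_weights(base_dir, multitalk_dir, mode="link"):
    """Back up the Wan2.1 index file, then link or copy the MeiGen-MultiTalk files into base_dir."""
    if mode not in ("link", "copy"):
        raise ValueError(f"mode must be 'link' or 'copy', got {mode!r}")
    base = Path(base_dir)
    # Absolute source path so the symlinks resolve from anywhere,
    # which is what {Absolute path} achieves in the ln -s commands.
    src = Path(multitalk_dir).resolve()

    index = base / INDEX
    backup = base / (INDEX + "_old")
    # Same effect as the mv command, but never clobber an existing backup.
    if backup.exists():
        raise FileExistsError(f"{backup} already exists; weights look installed already")
    index.rename(backup)

    for name in (INDEX, MULTITALK_WEIGHTS):
        target = base / name
        if mode == "link":
            target.symlink_to(src / name)  # like: ln -s <src>/<name> <base>/
        else:
            shutil.copy2(src / name, target)  # like: cp <src>/<name> <base>/


if __name__ == "__main__":
    install_multitalk_weights("weights/Wan2.1-I2V-14B-480P", "weights/MeiGen-MultiTalk")
```

Symlinks save disk space, while copies keep working if the MeiGen-MultiTalk directory is later moved or deleted.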

Our model is compatible with both 480P and 720P resolutions. The current code only supports 480P inference. 720P inference requires multiple GPUs, and we will provide an update soon.

Some tips:

- Lip synchronization accuracy: Audio CFG works best between 3 and 5. Increase the audio CFG value for better synchronization.

- Video clip length: The model was trained on 81-frame videos at 25 FPS. For optimal prompt-following performance, generate clips of 81 frames. Generating up to 201 frames is possible, though longer clips may reduce prompt-following performance.

- Long video generation: Audio CFG influences color-tone consistency across segments. Set it to 3 to reduce tonal variations.

- Sampling steps: To generate videos faster, you can reduce the number of sampling steps to as few as 10. This does not hurt lip synchronization accuracy but degrades motion and visual quality; more sampling steps generally give better video quality.
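The numbers in these tips can be checked before launching a run. The sketch below is illustrative only: `clip_seconds` and `review_settings` are hypothetical helpers that encode the recommendations above (audio CFG between 3 and 5, CFG 3 for long videos, 81 training frames at 25 FPS, at most 201 frames per clip, no fewer than 10 sampling steps). They do not read or validate the actual arguments of `generate_multitalk.py`.

```python
FPS = 25               # training frame rate
TRAIN_FRAMES = 81      # training clip length, best for prompt following
MAX_CLIP_FRAMES = 201  # longest clip mentioned in the tips
MIN_STEPS = 10         # lowest sampling-step count mentioned in the tips


def clip_seconds(frame_num, fps=FPS):
    """Duration of a clip in seconds."""
    return frame_num / fps


def review_settings(audio_cfg, sample_steps, frame_num=TRAIN_FRAMES, streaming=False):
    """Return human-readable notes wherever the settings depart from the tips."""
    notes = []
    if not 3 <= audio_cfg <= 5:
        notes.append(f"audio CFG {audio_cfg} is outside the recommended 3-5 range")
    if streaming and audio_cfg != 3:
        notes.append("long videos: audio CFG 3 reduces color-tone variation across segments")
    if not streaming:
        if frame_num > MAX_CLIP_FRAMES:
            notes.append(f"{frame_num} frames exceeds {MAX_CLIP_FRAMES}; use streaming mode for longer videos")
        elif frame_num != TRAIN_FRAMES:
            notes.append(f"{frame_num} frames differs from the {TRAIN_FRAMES} training frames; prompt following may suffer")
    if sample_steps < MIN_STEPS:
        notes.append(f"{sample_steps} sampling steps is below the lowest value ({MIN_STEPS}) mentioned in the tips")
    return notes


if __name__ == "__main__":
    print(clip_seconds(81), clip_seconds(201))
    print(review_settings(audio_cfg=4, sample_steps=40, frame_num=121))
```

For example, an 81-frame clip lasts 3.24 s and a 201-frame clip 8.04 s, while the 15-second maximum mentioned at the top corresponds to 15 × 25 = 375 frames, more than a single clip allows; this is presumably why long videos use streaming mode.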

Single-person, short video (clip mode, 81 frames):

```bash
python generate_multitalk.py --ckpt_dir weights/Wan2.1-I2V-14B-480P \
    --wav2vec_dir 'weights/chinese-wav2vec2-base' --input_json examples/single_example_1.json --sample_steps 40 --frame_num 81 --mode clip --save_file single_exp
```

Single-person, long video (streaming mode):

```bash
python generate_multitalk.py --ckpt_dir weights/Wan2.1-I2V-14B-480P \
    --wav2vec_dir 'weights/chinese-wav2vec2-base' --input_json examples/single_example_1.json --sample_steps 40 --mode streaming --save_file single_long_exp
```

Multi-person, short video (clip mode, 81 frames):

```bash
python generate_multitalk.py --ckpt_dir weights/Wan2.1-I2V-14B-480P \
    --wav2vec_dir 'weights/chinese-wav2vec2-base' --input_json examples/multitalk_example_1.json --sample_steps 40 --frame_num 81 --mode clip --save_file multi_exp
```

Multi-person, long video (streaming mode):

```bash
python generate_multitalk.py --ckpt_dir weights/Wan2.1-I2V-14B-480P \
    --wav2vec_dir 'weights/chinese-wav2vec2-base' --input_json examples/multitalk_example_2.json --sample_steps 40 --mode streaming --save_file multi_long_exp
```
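To run several of these examples from a script, the commands can be assembled in Python and passed to `subprocess.run`. This is a hedged sketch: `build_command` and `run` are hypothetical helpers that only reproduce the flags shown in the four commands above (omitting `--frame_num` in streaming mode, as those examples do) and know nothing about any other options `generate_multitalk.py` may accept. It assumes it is run from the repository root.

```python
import shlex
import subprocess
import sys

CKPT_DIR = "weights/Wan2.1-I2V-14B-480P"
WAV2VEC_DIR = "weights/chinese-wav2vec2-base"


def build_command(input_json, save_file, mode="clip", sample_steps=40, frame_num=81):
    """Assemble the generate_multitalk.py argument list used in the examples above."""
    if mode not in ("clip", "streaming"):
        raise ValueError(f"mode must be 'clip' or 'streaming', got {mode!r}")
    cmd = [
        sys.executable, "generate_multitalk.py",  # the active (conda) interpreter
        "--ckpt_dir", CKPT_DIR,
        "--wav2vec_dir", WAV2VEC_DIR,
        "--input_json", input_json,
        "--sample_steps", str(sample_steps),
    ]
    if mode == "clip":
        # Only the clip-mode examples pass --frame_num; the streaming ones omit it.
        cmd += ["--frame_num", str(frame_num)]
    cmd += ["--mode", mode, "--save_file", save_file]
    return cmd


def run(cmd):
    """Echo the command in copy-pasteable form, then run it and fail loudly on errors."""
    print(shlex.join(cmd))
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(build_command("examples/multitalk_example_1.json", "multi_exp"))
    run(build_command("examples/multitalk_example_2.json", "multi_long_exp", mode="streaming"))
```

Passing a list to `subprocess.run` (rather than a single shell string) sidesteps quoting issues such as the quoted `--wav2vec_dir` path.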

If you find our work useful in your research, please consider citing:

```bibtex
@article{kong2025let,
  title={Let Them Talk: Audio-Driven Multi-Person Conversational Video Generation},
  author={Kong, Zhe and Gao, Feng and Zhang, Yong and Kang, Zhuoliang and Wei, Xiaoming and Cai, Xunliang and Chen, Guanying and Luo, Wenhan},
  journal={arXiv preprint arXiv:2505.22647},
  year={2025}
}
```

The models in this repository are licensed under the Apache 2.0 License. We claim no rights over your generated content and grant you the freedom to use it, provided that your usage complies with the provisions of this license. You are fully accountable for your use of the models, which must not involve sharing any content that violates applicable laws, causes harm to individuals or groups, disseminates personal information intended for harm, spreads misinformation, or targets vulnerable populations.