This repository contains the official PyTorch implementation of the following paper:

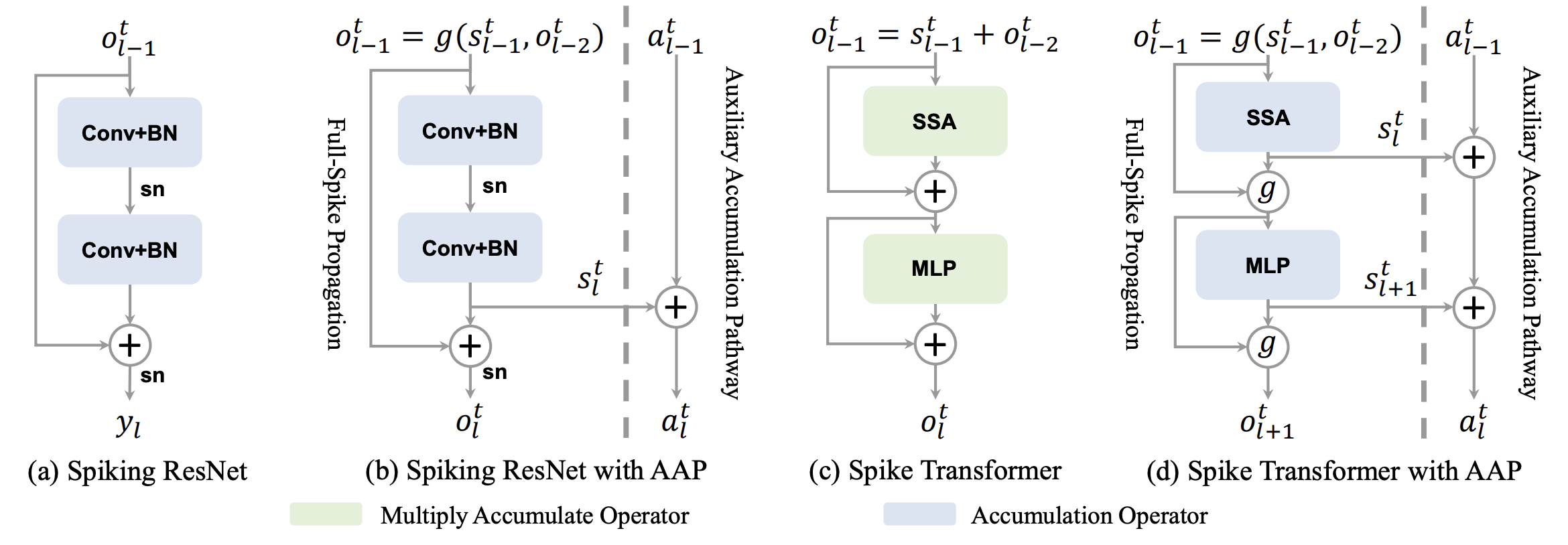

Training Full Spike Neural Networks via Auxiliary Accumulation Pathway,

Guangyao Chen, Peixi Peng, Guoqi Li, Yonghong Tian [arXiv][Bibtex]

- [05/2023] Code is released.

We suggest using Anaconda to install all packages.

Install torch>=1.5.0 by referring to:

https://pytorch.org/get-started/previous-versions/
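After installing, it can be useful to confirm that the environment satisfies the `torch>=1.5.0` requirement stated above. The following is a minimal sketch using only the standard library; the helper names (`parse_version`, `meets_minimum`, `installed_torch_version`) are hypothetical and not part of this repository.

```python
import re
from importlib import metadata

MIN_TORCH = (1, 5, 0)  # minimum version stated in this README


def parse_version(text):
    """Return the leading numeric release of a version string as a 3-tuple.

    Local suffixes such as "+cu117" are ignored; a missing patch number
    is treated as 0.
    """
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError(f"unrecognised version string: {text!r}")
    return tuple(int(part or 0) for part in match.groups())


def meets_minimum(text, minimum=MIN_TORCH):
    """True if the version string is at least the required minimum."""
    return parse_version(text) >= minimum


def installed_torch_version():
    """Return the installed torch version string, or None if torch is absent."""
    try:
        return metadata.version("torch")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_torch_version()
    if found is None:
        print("torch is not installed")
    else:
        print(found, "OK" if meets_minimum(found) else "older than 1.5.0")
```

The check reads package metadata rather than importing `torch`, so it also runs in an environment where PyTorch has not been installed yet.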

Install tensorboard:

pip install tensorboard

The original code uses a specific version of SpikingJelly. To maximize reproducibility, download the latest SpikingJelly and roll back to the version that we used for training:

git clone https://github.com/fangwei123456/spikingjelly.git

cd spikingjelly

git reset --hard 2958519df84ad77c316c6e6fbfac96fb2e5f59a3

python setup.py install

cd imagenet

Train the FSNN-18 (AAP) with 8 GPUs:

python -m torch.distributed.launch --nproc_per_node=8 --use_env train.py --cos_lr_T 320 --model dsnn18 -b 64 --output-dir ./logs --tb --print-freq 500 --amp --cache-dataset --connect_f IAND --T 4 --lr 0.1 --epoch 320 --data-path /datasets/imagenet

If you find our work useful for your research, please consider giving a star ⭐ and a citation 🍺:

@article{chen2023training,
  title={Training Full Spike Neural Networks via Auxiliary Accumulation Pathway},
  author={Chen, Guangyao and Peng, Peixi and Li, Guoqi and Tian, Yonghong},
  journal={arXiv preprint arXiv:2301.11929},
  year={2023}
}

This code is built using the spikingjelly framework, the syops-counter tool and the Spike-Element-Wise-ResNet repository.
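As a reading aid for the ImageNet training command given earlier, the sketch below works out two quantities implied by its flags. It rests on assumptions that should be checked against `train.py`: that `-b 64` is the per-GPU batch size, that `--cos_lr_T 320` is the period (`T_max`) of a cosine annealing schedule, and that the schedule decays to a minimum learning rate of 0. The function names are hypothetical.

```python
import math


def cosine_lr(epoch, base_lr=0.1, t_max=320, eta_min=0.0):
    """Closed-form cosine annealing: base_lr at epoch 0, eta_min at epoch t_max.

    base_lr mirrors --lr 0.1 and t_max mirrors --cos_lr_T 320;
    eta_min=0.0 is an assumption, not taken from the command.
    """
    return eta_min + (base_lr - eta_min) * (1 + math.cos(math.pi * epoch / t_max)) / 2


def global_batch_size(per_gpu_batch=64, num_gpus=8):
    """Samples per optimizer step, assuming -b is a per-GPU batch size."""
    return per_gpu_batch * num_gpus


if __name__ == "__main__":
    print("global batch size:", global_batch_size())
    for epoch in (0, 80, 160, 240, 320):
        print(f"epoch {epoch:3d}: lr = {cosine_lr(epoch):.5f}")
```

Under these assumptions, changing the number of GPUs changes the global batch size, so the learning rate may need adjusting to match the original setting.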