DiffusionMagic is a simple-to-use set of Stable Diffusion workflows built on diffusers. DiffusionMagic focuses on the following areas:

- Easy to use

- Cross-platform (Windows/Linux/Mac)

- Modular design with the latest optimizations for speed and memory

The Segmind Stable Diffusion Model (SSD-1B) is a compact version of Stable Diffusion XL (SDXL): it is 50% smaller yet maintains high-quality text-to-image generation, and it offers a 60% speedup compared to SDXL.

You can run SSD-1B on Google Colab

You can run Stable Diffusion XL 1.0 on Google Colab

You can run Würstchen 2.0 on Google Colab

You can run Illusion Diffusion on Google Colab

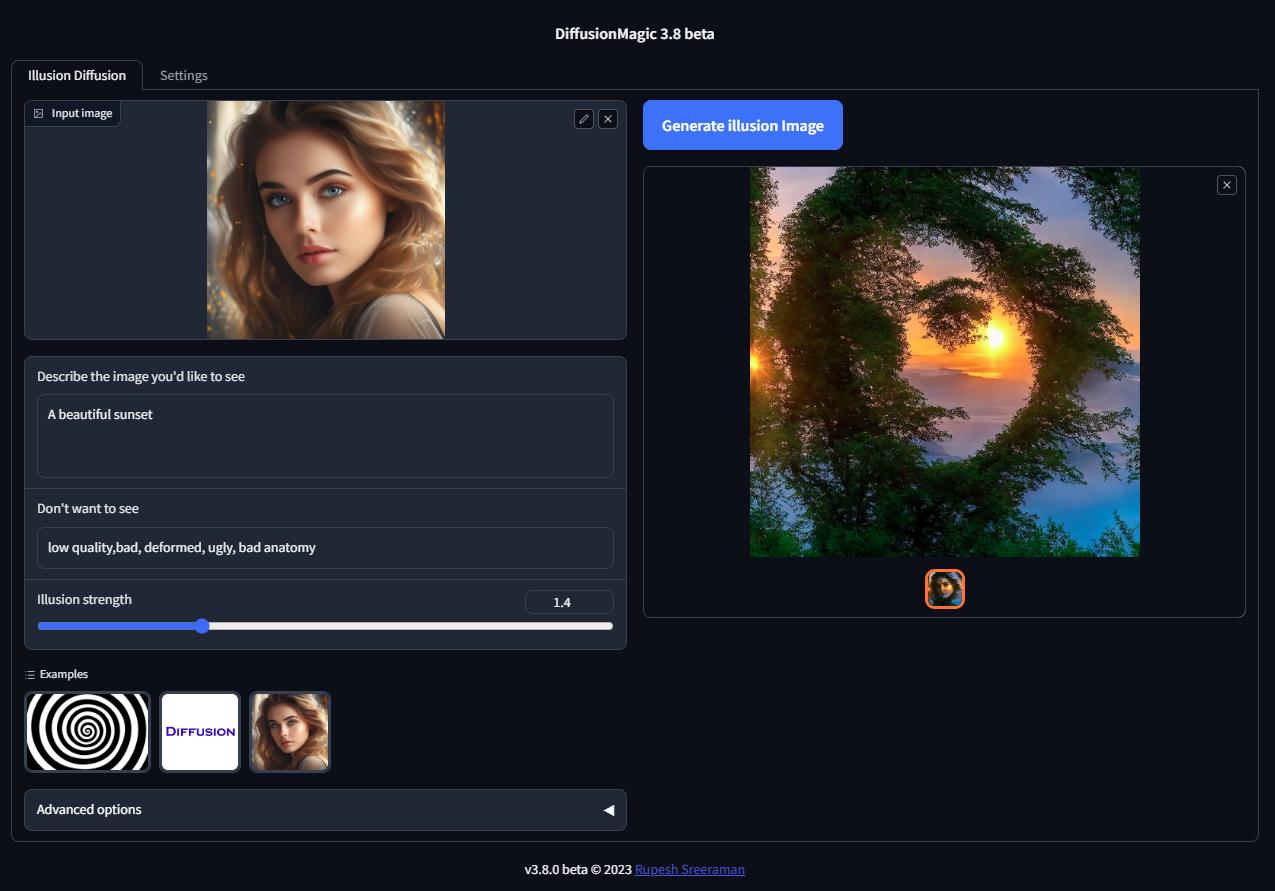

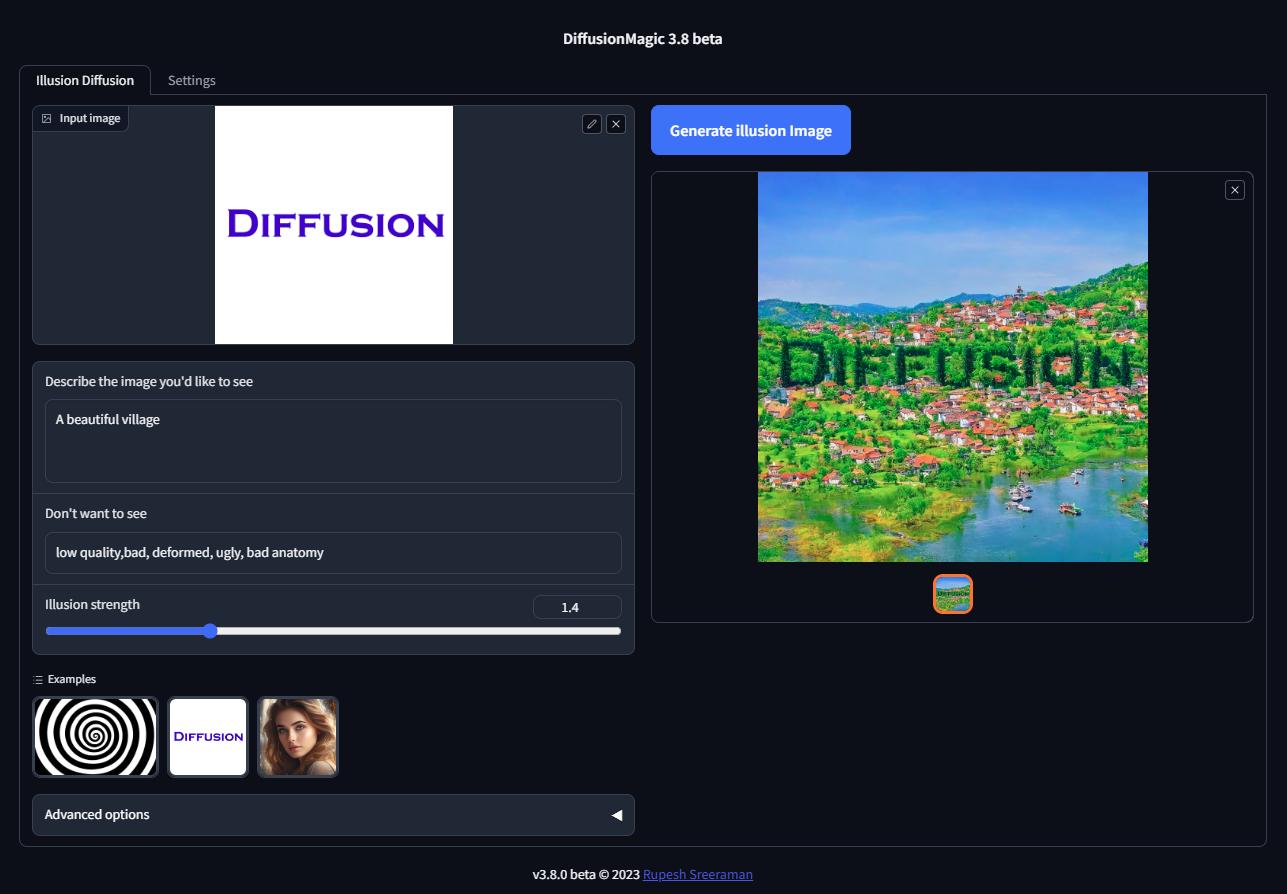

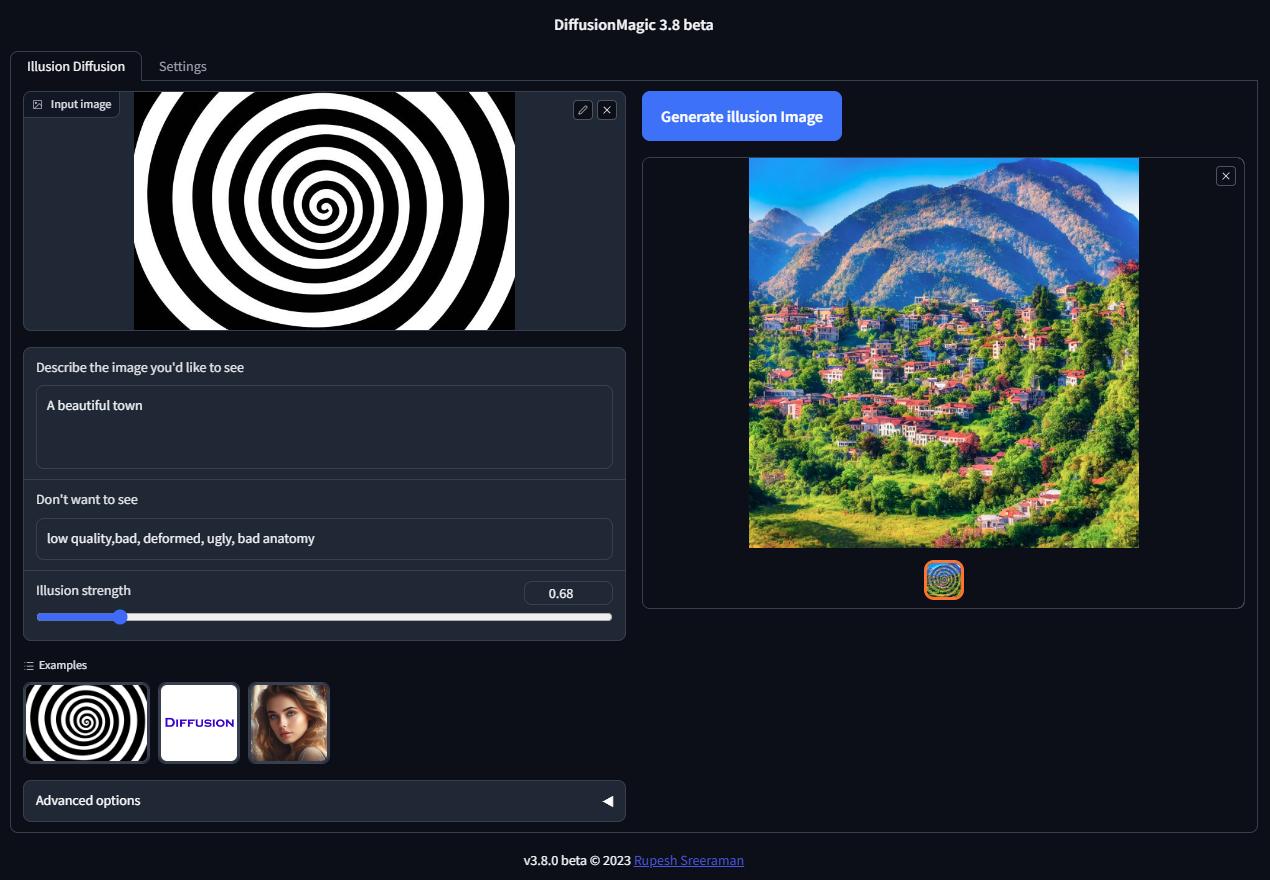

Illusion Diffusion supports the following types of input images as the illusion control:

- Color images

- Text images

- Patterns

Adjust the illusion strength to get the desired result.

- Supports Würstchen

- Supports Stable Diffusion XL

- Supports various Stable Diffusion workflows

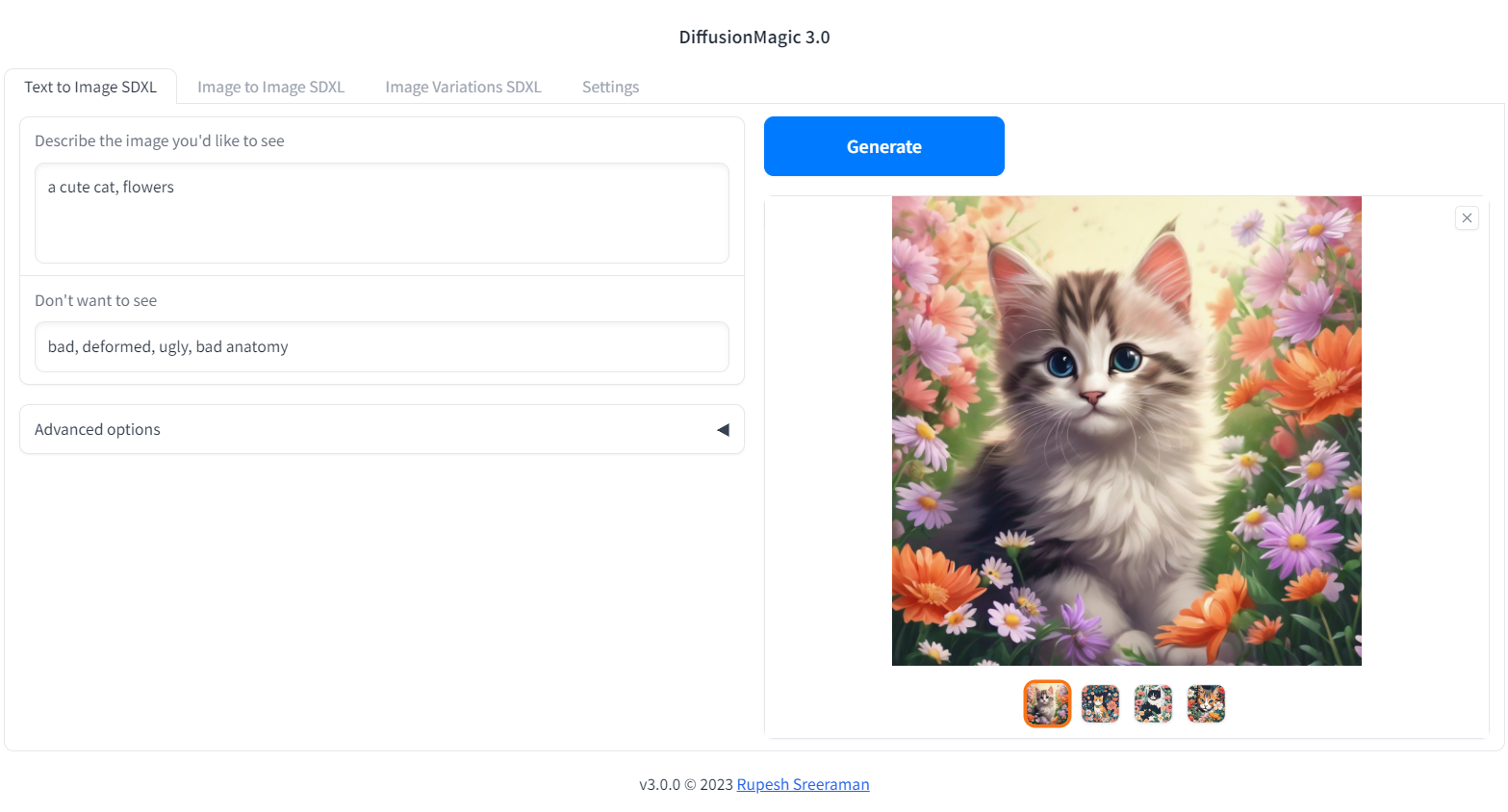

- Text to Image

- Image to Image

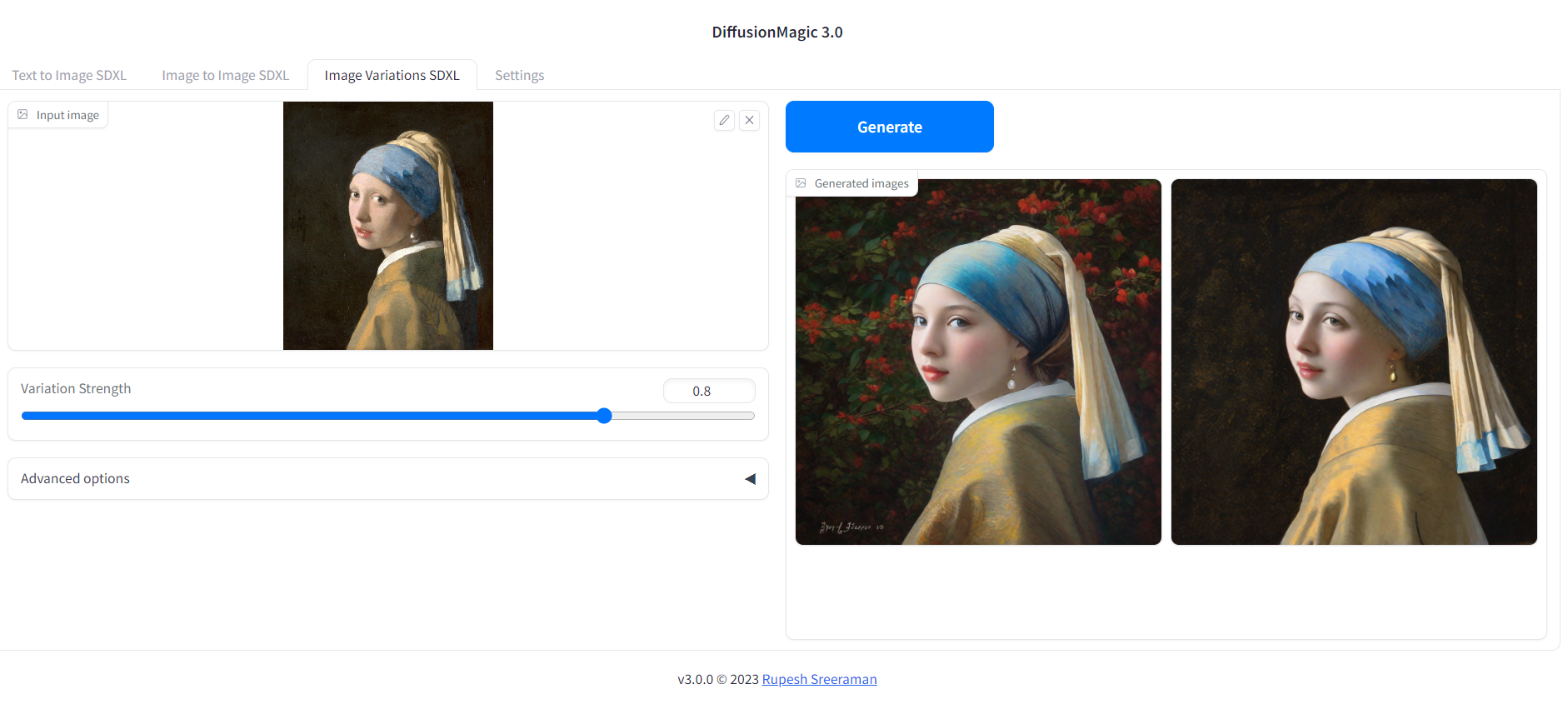

- Image variations

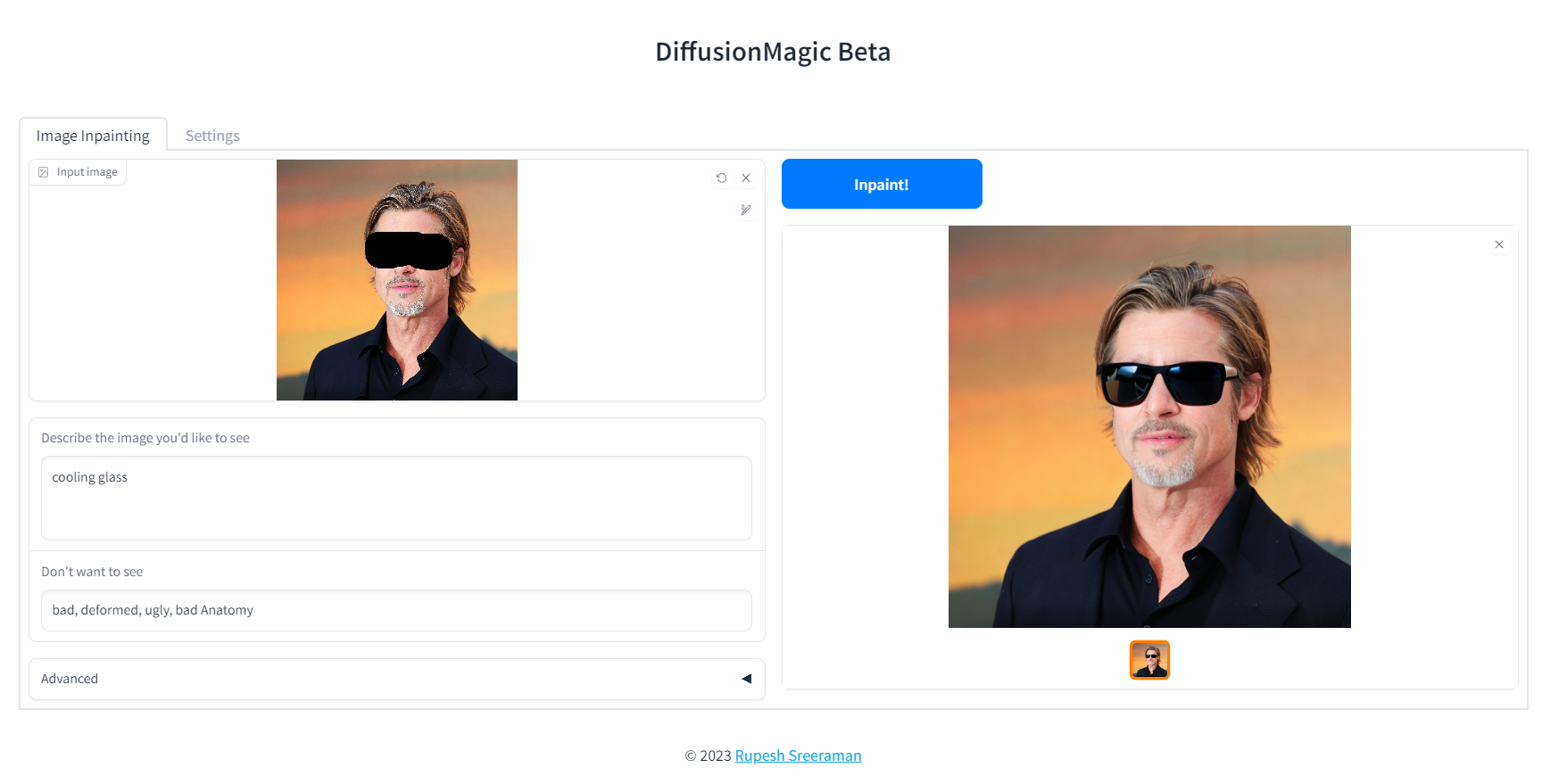

- Image Inpainting

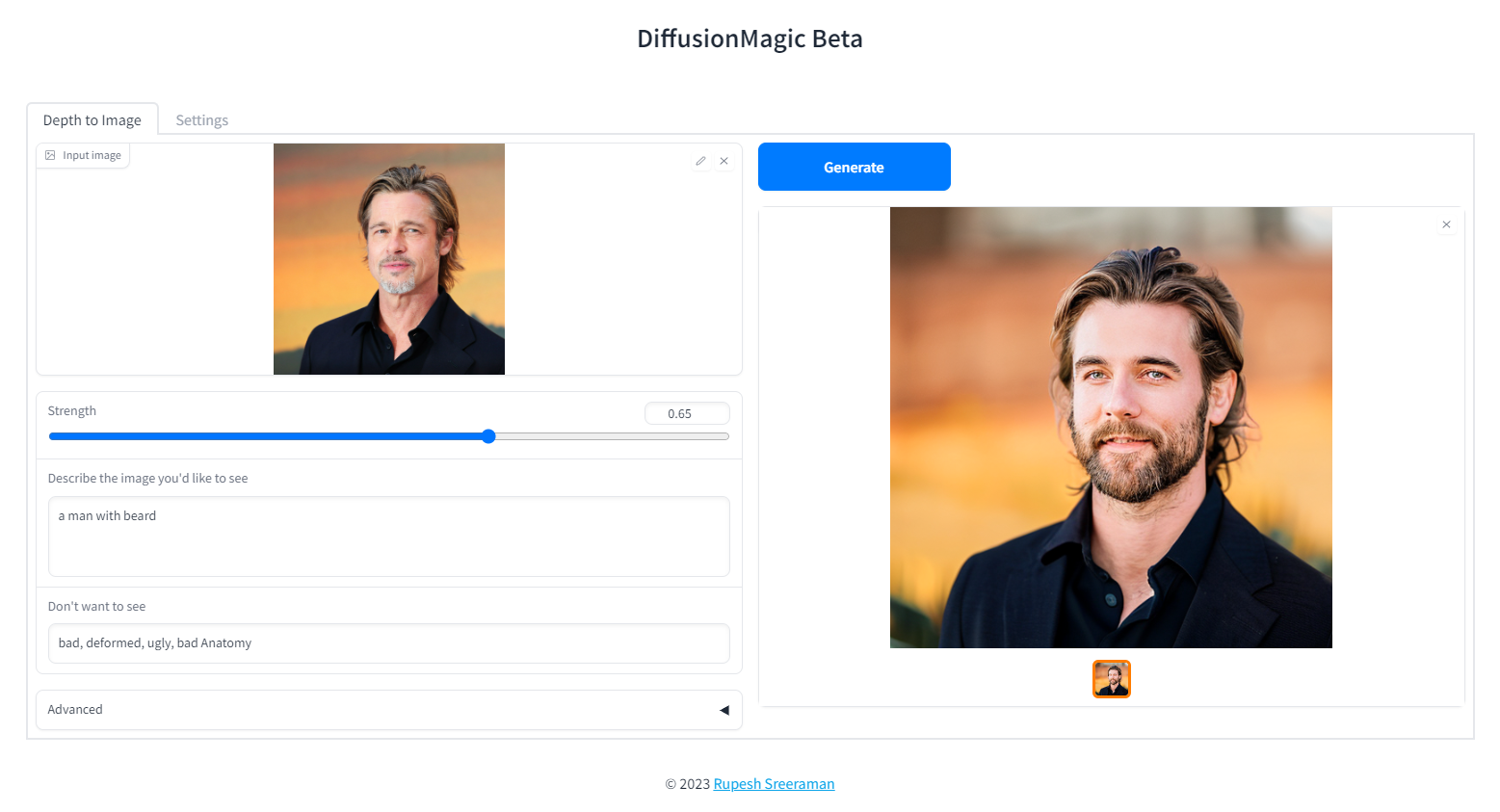

- Depth to Image

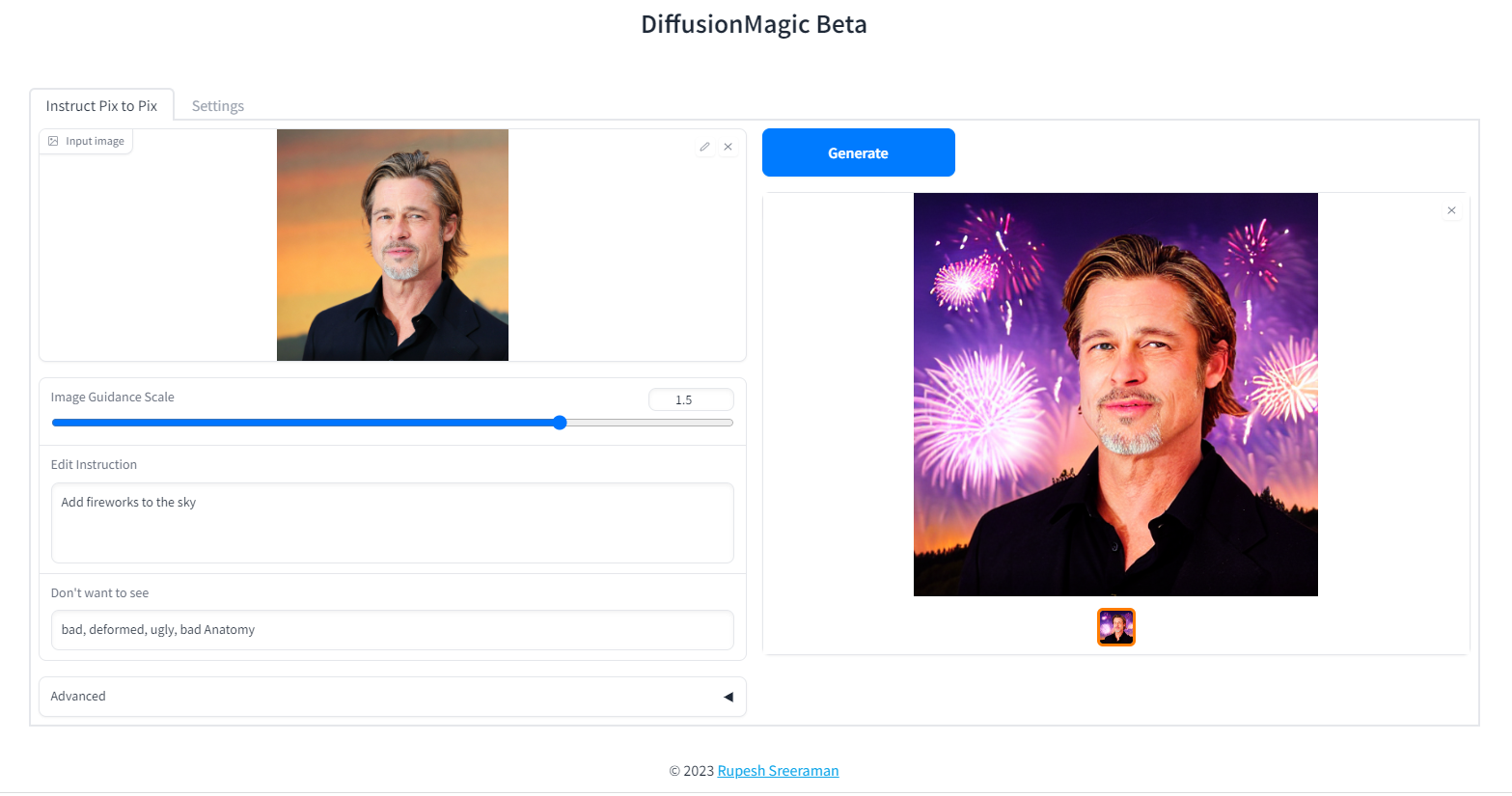

- Instruction-based image editing

- Supports ControlNet workflows
  - Canny
  - MLSD (line control)
  - Normal
  - HED
  - Pose
  - Depth
  - Scribble
  - Segmentation

- PyTorch 2.0 support

- Supports all Stable Diffusion models on Hugging Face

- Supports Stable Diffusion v1 and v2 models and their derived models

- Works on Windows/Linux/Mac 64-bit

- Works on CPU, GPU (recent NVIDIA GPUs), and Apple Silicon M1/M2 hardware

- Supports the DEIS scheduler for faster image generation (10 steps)

- Supports 7 different samplers, including the latest DEIS sampler

- LoRA (Low-Rank Adaptation of Large Language Models) model support (~3 MB in size)

- Easy to add new diffusers models by updating stable_diffusion_models.txt

- Low VRAM mode supports GPUs with less than 4 GB of VRAM

- Fast model loading

- Supports attention slicing and VAE slicing

- Simple installation using install.bat/install.sh

Please note that AMD GPUs are not supported.
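The README does not show how DiffusionMagic chooses between the CPU, an NVIDIA GPU, and Apple Silicon. Below is a minimal sketch that assumes a preference order of CUDA GPU first, then Apple Silicon (MPS), then CPU. `pick_device` and `detect_device` are hypothetical names. `torch.cuda.is_available()` and `torch.backends.mps.is_available()` are standard PyTorch calls. If PyTorch is not installed, the sketch falls back to the CPU.

```python
def pick_device(cuda_available: bool, mps_available: bool) -> str:
    """Hypothetical helper: prefer an NVIDIA GPU, then Apple Silicon, then CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    # No supported accelerator was found, so run on the CPU.
    return "cpu"


def detect_device() -> str:
    """Query PyTorch (if installed) for available accelerators."""
    try:
        import torch
    except ImportError:
        return "cpu"
    # torch.backends.mps is missing on older PyTorch builds, so check for it first.
    mps = getattr(torch.backends, "mps", None)
    mps_ok = bool(mps is not None and mps.is_available())
    return pick_device(torch.cuda.is_available(), mps_ok)


print(detect_device())
```

Keeping the decision in a pure function (`pick_device`) makes it testable on machines without any GPU.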

System requirements:

- Works on Windows/Linux/Mac 64-bit

- Works on CPU, GPU, and Apple Silicon M1/M2 hardware

- 12 GB system RAM

- ~11 GB disk space after installation (on an SSD for best performance)
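DiffusionMagic itself is not described as shipping a requirements checker. The sketch below checks a machine against the figures listed above before installing, and its names are hypothetical:

- The ~11 GB figure is the post-installation footprint. Here it is treated as a rough minimum of free disk space.
- The RAM amount must be supplied by the caller, because the standard library has no portable way to read it. The third-party psutil package's `psutil.virtual_memory().total` is one option.

```python
import shutil
from typing import List

# Figures from the requirements list above.
MIN_RAM_GB = 12
MIN_DISK_GB = 11


def check_requirements(ram_gb: float, free_disk_gb: float) -> List[str]:
    """Return human-readable problems; an empty list means the checks passed."""
    problems = []
    if ram_gb < MIN_RAM_GB:
        problems.append(f"{ram_gb:.1f} GB RAM found, {MIN_RAM_GB} GB required")
    if free_disk_gb < MIN_DISK_GB:
        problems.append(
            f"{free_disk_gb:.1f} GB free disk space, ~{MIN_DISK_GB} GB required"
        )
    return problems


def free_disk_gb(path: str = ".") -> float:
    """Free space on the drive holding `path`, in GB (1 GB = 1024**3 bytes)."""
    return shutil.disk_usage(path).free / 1024**3
```

For example, `check_requirements(16, free_disk_gb("."))` reports only a disk-space problem, if there is one, for a machine with 16 GB of RAM.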

DiffusionMagic runs on low VRAM GPUs. Here is our guide to running Stable Diffusion XL on low VRAM GPUs.
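The README does not show how low VRAM mode is implemented. The sketch below assumes it relies on the attention slicing and VAE slicing features listed above and configures a diffusers pipeline that way when the GPU has less than 4 GB of VRAM.

- `enable_attention_slicing()` and `enable_vae_slicing()` are methods on diffusers Stable Diffusion pipelines.
- The helper `configure_low_vram` and the `FakePipeline` stand-in are hypothetical.
- The stand-in lets the logic run without downloading a model.

```python
from typing import Any, List

LOW_VRAM_THRESHOLD_GB = 4.0  # "Low VRAM mode supports GPUs with less than 4 GB of VRAM"


def configure_low_vram(pipe: Any, vram_gb: float) -> bool:
    """Enable memory-saving options on a diffusers pipeline for small GPUs.

    Returns True if low VRAM mode was applied.
    """
    if vram_gb >= LOW_VRAM_THRESHOLD_GB:
        return False
    # Compute attention in slices instead of all at once (lower peak memory).
    pipe.enable_attention_slicing()
    # Decode latents through the VAE one image at a time for batched generation.
    pipe.enable_vae_slicing()
    return True


class FakePipeline:
    """Stand-in that records which options were enabled (no model download)."""

    def __init__(self) -> None:
        self.enabled: List[str] = []

    def enable_attention_slicing(self) -> None:
        self.enabled.append("attention_slicing")

    def enable_vae_slicing(self) -> None:
        self.enabled.append("vae_slicing")
```

With a real pipeline, pass the object returned by `StableDiffusionPipeline.from_pretrained(...)` along with the GPU's memory. For example, `torch.cuda.get_device_properties(0).total_memory / 1024**3` gives that memory in GB.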

Download a release from the DiffusionMagic GitHub releases page.

Follow these steps to install and run DiffusionMagic on Windows.

- First, run (double-click) the `install.bat` batch file. It installs the necessary dependencies for DiffusionMagic; this takes some time, depending on your internet speed.

- To start DiffusionMagic, double-click `start.bat`.

Follow these steps to install and run DiffusionMagic on Linux.

- Make the install script executable: `chmod +x install.sh`

- Run the install script: `./install.sh`

- To start DiffusionMagic, run: `./start.sh`

Testers needed: if you have a Mac, feel free to test and contribute.

- Mac computer with Apple silicon (M1/M2) hardware.

- macOS 12.6 or later (13.0 or later recommended).

Follow these steps to install and run DiffusionMagic on a Mac (Apple Silicon M1/M2).

- Make the install script executable: `chmod +x install-mac.sh`

- Run the install script: `./install-mac.sh`

- To start DiffusionMagic, run: `./start.sh`
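The Windows, Linux, and macOS steps above differ only in which scripts are used. The helper below maps the value of Python's `platform.system()` to the install and start script names from those steps. It is hypothetical and not part of DiffusionMagic.

```python
import platform
from typing import Dict, Tuple

# Script names from the Windows, Linux, and macOS steps, keyed by platform.system().
SCRIPTS: Dict[str, Tuple[str, str]] = {
    "Windows": ("install.bat", "start.bat"),
    "Linux": ("install.sh", "start.sh"),
    "Darwin": ("install-mac.sh", "start.sh"),  # macOS (Apple Silicon M1/M2)
}


def scripts_for(system: str) -> Tuple[str, str]:
    """Return (install_script, start_script) for a platform.system() value."""
    if system not in SCRIPTS:
        raise ValueError(f"Unsupported platform: {system}")
    return SCRIPTS[system]


def current_scripts() -> Tuple[str, str]:
    """Scripts for the machine this code is running on."""
    return scripts_for(platform.system())
```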

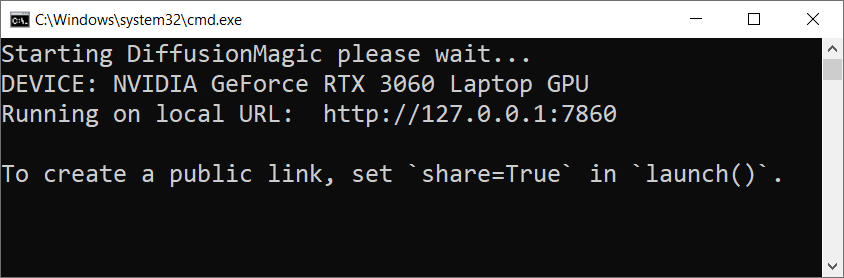

Open http://localhost:7860/ in your browser.

To use the dark theme, open:

http://localhost:7860/?__theme=dark
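To open the UI from a script, the sketch below builds the local address shown above, with or without the `__theme=dark` query parameter, and opens it with the standard-library `webbrowser` module. The helper names are hypothetical, and DiffusionMagic must already be running.

```python
import webbrowser
from urllib.parse import urlencode

BASE_URL = "http://localhost:7860/"


def ui_url(dark: bool = False) -> str:
    """Build the DiffusionMagic web UI URL, optionally with the dark theme."""
    if dark:
        return BASE_URL + "?" + urlencode({"__theme": "dark"})
    return BASE_URL


def open_ui(dark: bool = False) -> None:
    """Open the UI in the default browser (the app must already be running)."""
    webbrowser.open(ui_url(dark))
```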

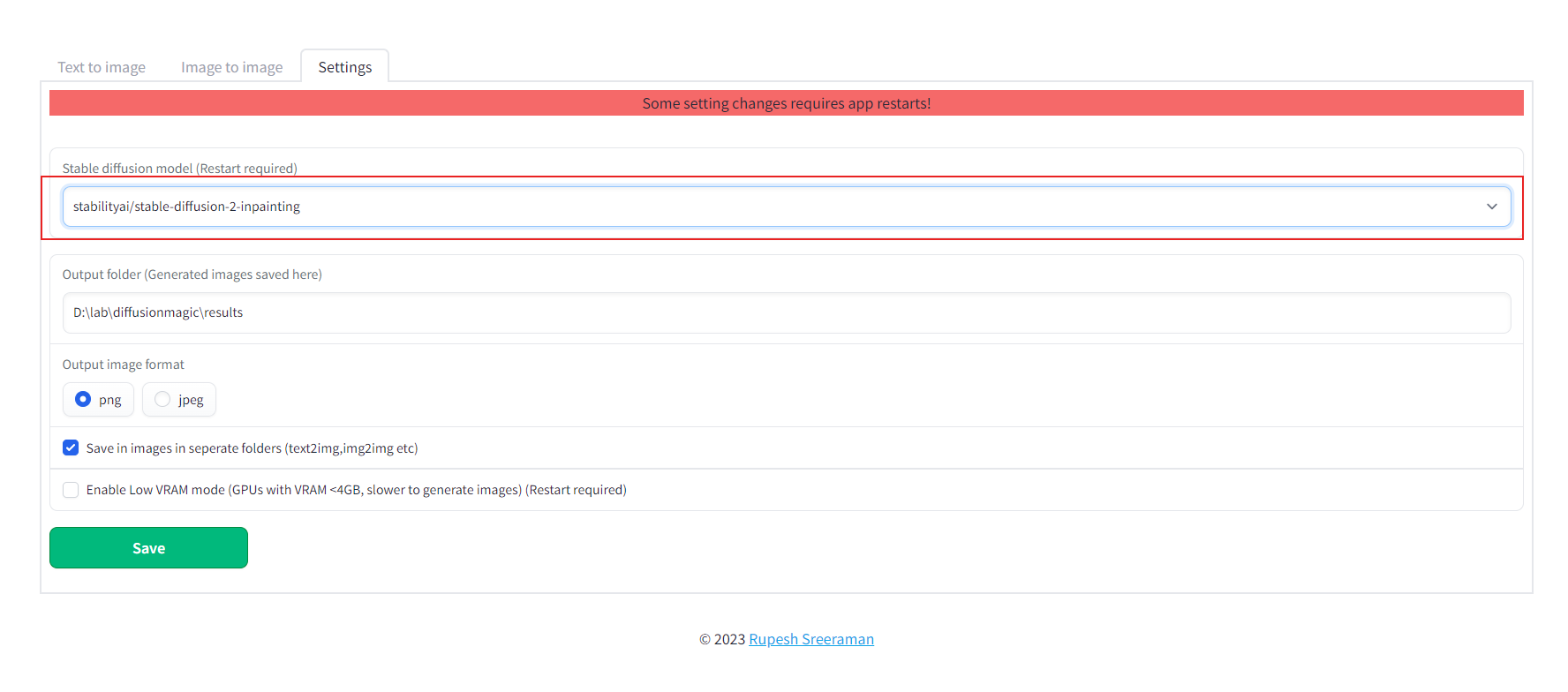

DiffusionMagic changes its UI based on the selected model. Follow these steps to switch models (inpainting, depth to image, InstructPix2Pix, or any other Hugging Face Stable Diffusion model):

- Start the DiffusionMagic app, open the Settings tab, and change the model

- Save the settings

- Close the app and restart it using start.bat/start.sh

We can add any Hugging Face Stable Diffusion model to DiffusionMagic by:

- Adding the Hugging Face model ID or a local folder path to the configs/stable_diffusion_models.txt file.

  For example, for https://huggingface.co/dreamlike-art/dreamlike-diffusion-1.0 the model ID is `dreamlike-art/dreamlike-diffusion-1.0`. Alternatively, we can clone the model and use the local folder path as the model ID.

- Adding a locally copied model path to the configs/stable_diffusion_models.txt file.
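The sketch below shows how entries in configs/stable_diffusion_models.txt could be normalized. It assumes one entry per line and that blank lines are skipped; the file's exact parsing rules are not documented here. The helper turns a full Hugging Face URL into its model ID, as in the example above, and leaves model IDs and local folder paths unchanged. The function names are hypothetical, not DiffusionMagic's own.

```python
from typing import List

HF_PREFIX = "https://huggingface.co/"


def to_model_id(entry: str) -> str:
    """Convert a Hugging Face model URL to its model ID; pass other entries through."""
    entry = entry.strip()
    if entry.startswith(HF_PREFIX):
        # Keep only "<owner>/<name>", dropping extra parts such as "/tree/main".
        parts = entry[len(HF_PREFIX):].split("/")
        return "/".join(parts[:2])
    return entry  # already a model ID or a local folder path


def read_models(text: str) -> List[str]:
    """Parse the models file contents: one model ID or local path per line."""
    models = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        models.append(to_model_id(line))
    return models
```

To read the real file, call `read_models(pathlib.Path("configs/stable_diffusion_models.txt").read_text())`.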

Run the following commands from the src folder:

mypy --ignore-missing-imports --explicit-package-bases .

flake8 --max-line-length=100 .

Contributions are welcome.